Today NVIDIA announced that with the help of some of its global partners, it is launching new NVIDIA HGX A100 systems. The new systems are looking to accelerate AI and HPC by adding NVIDIA elements such as NVIDIA A100 80GB PCIe GPU, NVIDIA NDR 400G InfiniBand networking, and NVIDIA Magnum IO GPUDirect Storage software. These new HGX systems will be brought to market by partners including Atos, Dell Technologies, Hewlett Packard Enterprise (HPE), Lenovo, Microsoft Azure, and NetApp.

NVIDIA has made high-powered GPUs for years. The emerging AI market, particularly in HPC, has driven more and more supercomputers to leverage the company’s technology. NVIDIA itself has been making HPC servers and workstations for the last few years with its DGX and HGX models. The latter brings several NVIDIA technologies together under one roof for better performance. The new systems do this once more with the latest and greatest NVIDIA has to offer.

NVIDIA A100 80GB PCIe GPU

The NVIDIA A100 was announced last year at GTC. This new 7nm GPU leverages the company’s Ampere architecture and contains 54 billion transistors. NVIDIA quickly improved the product with the introduction of the NVIDIA A100 80GB PCIe GPU, doubling its memory. The A100 80GB PCIe GPU is the first part of the new HGX A100 systems. Its large memory capacity and high bandwidth allow more data and larger neural networks to be held in memory. This means less internode communication as well as lower energy consumption. The larger memory also allows for higher throughput, which can lead to faster results.
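To give a rough sense of what "larger neural networks held in memory" means, here is a back-of-the-envelope sketch. It assumes 80GB means 80 x 10^9 bytes and counts model weights only, ignoring activations, optimizer state, and framework overhead, so real-world capacity will be lower. The helper name `max_params` and the precision table are illustrative, not anything from NVIDIA.

```python
# Rough upper bound on how many model weights fit in 80 GB of GPU memory.
# Assumption: 80 GB = 80 * 10**9 bytes; weights only, no activations or overhead.
GPU_MEMORY_BYTES = 80 * 10**9

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def max_params(memory_bytes: int, bytes_per_param: int) -> int:
    """Return the number of parameters that fit in memory_bytes (weights only)."""
    return memory_bytes // bytes_per_param


for precision, size in BYTES_PER_PARAM.items():
    billions = max_params(GPU_MEMORY_BYTES, size) / 1e9
    print(f"{precision}: ~{billions:.0f} billion parameters (weights only)")
```

Under these assumptions, halving the bytes per parameter doubles the model size that fits on one card, which is part of why a memory increase matters as much as raw compute.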

As stated, the NVIDIA A100 80GB PCIe GPU is powered by the company’s Ampere architecture. This architecture features Multi-Instance GPU, also referred to as MIG. MIG can deliver acceleration for smaller workloads, such as AI inference. This feature allows users to scale both compute and memory down with a guaranteed QoS.

Partners offering the NVIDIA A100 80GB PCIe GPU include Atos, Cisco, Dell Technologies, Fujitsu, H3C, HPE, Inspur, Lenovo, Penguin Computing, QCT, and Supermicro. A few cloud services provide the technology as well, including AWS, Azure, and Oracle.

NVIDIA NDR 400G InfiniBand networking

The second piece of the NVIDIA HGX A100 system puzzle is the new NVIDIA NDR 400G InfiniBand switch systems. This will sound a bit obvious, but HPC systems need very high data throughput. NVIDIA acquired Mellanox a few years back for nearly $7 billion. Since then, it has been steadily releasing new products while slowly phasing out the Mellanox name in favor of just NVIDIA. Last year it released its NVIDIA NDR 400G InfiniBand with 3x the port density and 32x the AI acceleration. This is being integrated into the new HGX systems through the NVIDIA Quantum-2 fixed-configuration switch system. This system is said to deliver 64 ports of NDR 400Gb/s InfiniBand or 128 ports of NDR200.

According to the company, the new NVIDIA Quantum-2 modular switches provide scalable port configurations up to 2,048 ports of NDR 400Gb/s InfiniBand (or 4,096 ports of NDR200) with a total bi-directional throughput of 1.64 petabits per second. This represents more than a 5x improvement over the previous generation, with 6.5x greater scalability. Using a DragonFly+ network topology, users can connect over a million nodes. Finally, the company has added its 3rd gen NVIDIA SHARP In-Network Computing data reduction technology, which it claims delivers 32x higher AI acceleration than previous generations.
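The 1.64 petabits per second figure can be sanity-checked from the port counts. The sketch below assumes the total is simply ports times per-port rate times two directions, and that 1 Pb equals 1,000,000 Gb. The function name `aggregate_petabits` is illustrative only.

```python
# Back-of-the-envelope check of the modular switch throughput figures.
# Assumption: total = ports * per-port rate * 2 directions; 1 Pb = 1,000,000 Gb.
GBPS_PER_NDR_PORT = 400      # NDR: 400 Gb/s per port
GBPS_PER_NDR200_PORT = 200   # NDR200: 200 Gb/s per port


def aggregate_petabits(ports: int, gbps_per_port: int, bidirectional: bool = True) -> float:
    """Aggregate switch throughput in petabits per second."""
    directions = 2 if bidirectional else 1
    return ports * gbps_per_port * directions / 1_000_000


ndr = aggregate_petabits(2048, GBPS_PER_NDR_PORT)
ndr200 = aggregate_petabits(4096, GBPS_PER_NDR200_PORT)

print(f"2,048 x NDR:    {ndr:.4f} Pb/s")
print(f"4,096 x NDR200: {ndr200:.4f} Pb/s")
```

Both configurations come to the same aggregate, roughly 1.64 Pb/s, since doubling the port count at half the per-port rate leaves the total unchanged.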

NVIDIA Quantum-2 switches are both backward and forward compatible. Manufacturing partners include Atos, DDN, Dell Technologies, Excelero, GIGABYTE, HPE, Lenovo, Penguin, QCT, Supermicro, VAST, and WekaIO.

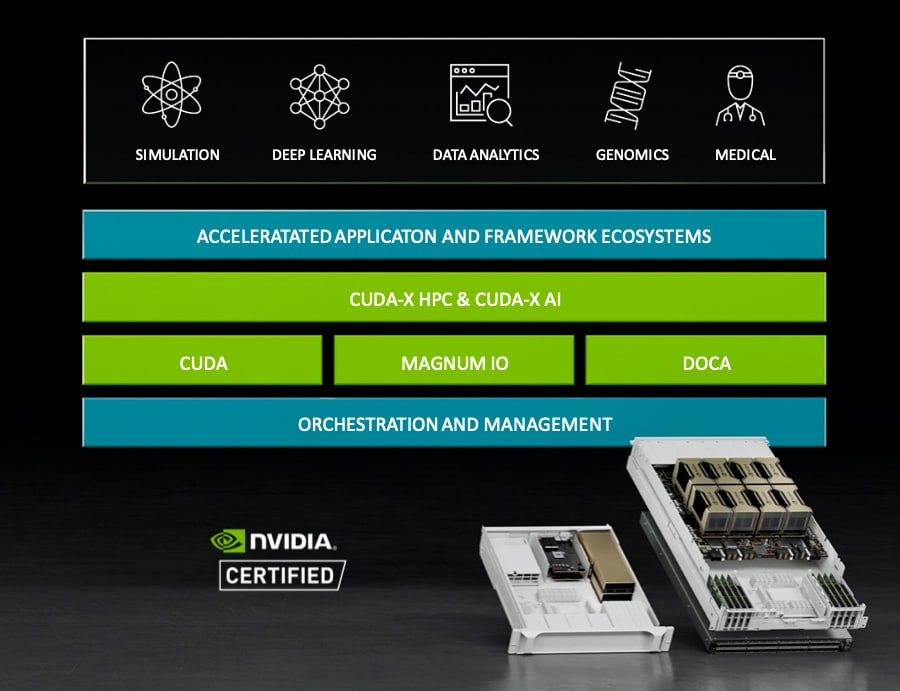

Magnum IO GPUDirect Storage

The final piece of the new NVIDIA HGX A100 puzzle is the new Magnum IO GPUDirect Storage. This allows direct memory access between GPU memory and storage. This has several benefits, including lower I/O latency, full use of the network adapters' bandwidth, and less of an impact on the CPU. Several partners have Magnum IO GPUDirect Storage available now, including DDN, Dell Technologies, Excelero, HPE, IBM Storage, Micron, NetApp, Pavilion, ScaleFlux, VAST, and WekaIO.
