Meta has been designing, building, and deploying its own hardware in some of the world's largest data centers for over 14 years. In 2009, following rapid growth, Facebook engineers were called upon to rethink the company's infrastructure to support massive growth in data, people, and resources. That work began the journey toward an energy-efficient data center designed as a whole: software, servers, cooling, and power. Meta has now contributed the Grand Canyon Storage System to OCP as the next standard for bulk storage.

The new facility was 38 percent more energy efficient to build and 24 percent less expensive to run than the company’s previous facilities. Facebook decided to share that success with the global engineering community, so in 2011 the Open Compute Project Foundation (OCP) was founded to increase the pace of innovation in data center hardware. Networking equipment, general-purpose servers, GPU servers, storage appliances, and rack designs have all come out of the OCP collaboration model, which is now being applied beyond the data center to help advance the telecom industry and edge infrastructure.

So it was only fitting that Meta used the OCP Global Summit to take attendees through its latest storage server and JBOD, called Grand Canyon, and a new single-socket server referred to as Barton Springs. The design for Grand Canyon grew out of the demand for a better, faster storage server.

The motivation behind the new server was based on the following:

- Higher CPU Performance per slot

- Improved chassis to support dense drives (through roughly the 2025-2026 timeframe)

- Power efficiency

- Maintain Flexibility (ORv3, Modular CPU Card)

- Design with longer-life components

The Grand Canyon design increases modularity to extend the upgradeability and lifespan of the new chassis.

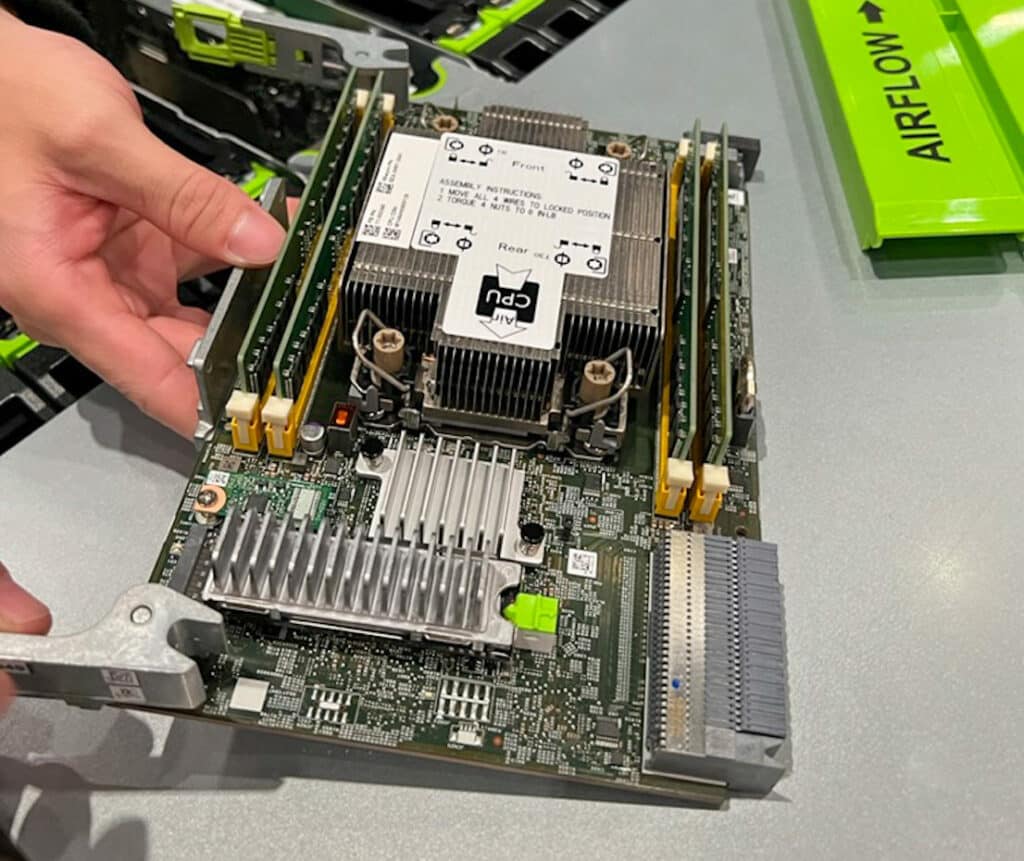

| Component | Description |
| --- | --- |
| CPU | Intel Xeon “Cooper Lake” SOC |
| Boot Drive | M.2 solid state (2280 form factor, Hyperscale NVMe Boot SSD Specification) |
| DIMM | 1 DDR4 DIMM per channel, 4 channels, up to 3200 MT/s |
| PCH | Intel PCH chipset |
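
The DIMM row implies an upper bound on memory bandwidth that is easy to work out. The following is a minimal sketch, assuming the standard 64-bit (8-byte) DDR4 data bus per channel; the function and constant names are illustrative and do not come from the Grand Canyon specification.

```python
# Theoretical peak DDR4 bandwidth for the memory configuration in the table.
CHANNELS = 4                # from the table: 4 channels, 1 DIMM per channel
TRANSFER_RATE_MT_S = 3200   # from the table: up to 3200 MT/s
BYTES_PER_TRANSFER = 8      # assumption: standard 64-bit DDR4 data bus, ECC bits excluded


def peak_bandwidth_gb_s(channels, mt_per_s, bytes_per_transfer=BYTES_PER_TRANSFER):
    """Return theoretical peak bandwidth in GB/s (decimal, 1 GB = 1e9 bytes)."""
    return channels * mt_per_s * 1e6 * bytes_per_transfer / 1e9


per_channel = peak_bandwidth_gb_s(1, TRANSFER_RATE_MT_S)
total = peak_bandwidth_gb_s(CHANNELS, TRANSFER_RATE_MT_S)
print(f"{per_channel:.1f} GB/s per channel, {total:.1f} GB/s across {CHANNELS} channels")
```

This is a ceiling rather than a measurement; sustained bandwidth on real workloads will be lower.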

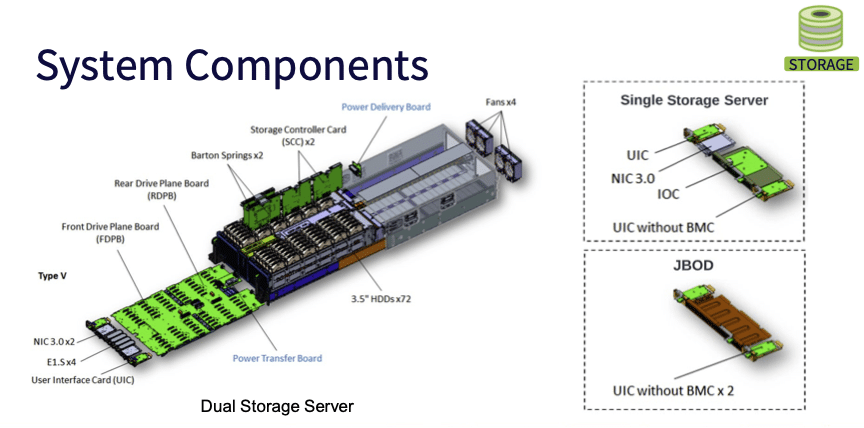

The new storage server consists of two storage nodes, each supporting 36 x 3.5″ HDDs, in a single drawer. Grand Canyon uses the Barton Springs 1-socket server. Additional flexibility has been incorporated in the new chassis, allowing the system to be built in three configurations.

The first configuration is a stand-alone dual storage server with two compute nodes and 72 HDDs, where all the drives are powered on all the time for faster data access.

The second configuration is a single storage server with one compute node that can also be used as a head node. It connects to two JBOD chassis and supports up to 216 HDDs in total. The JBOD chassis itself is the third configuration and has no compute node.
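
The drive counts for these configurations can be checked with a little arithmetic. The sketch below assumes every chassis is fully populated with 72 HDDs, including the head node, which is what makes the 216-drive figure work out (3 x 72). The `Chassis` class and `summarize` helper are hypothetical names used only for illustration.

```python
from dataclasses import dataclass

DRIVES_PER_CHASSIS = 72  # from the article: 72 HDDs per chassis


@dataclass(frozen=True)
class Chassis:
    name: str
    compute_nodes: int
    drives: int = DRIVES_PER_CHASSIS  # assumption: chassis is fully populated


def summarize(chassis_list):
    """Total drives and compute nodes for a group of connected chassis."""
    drives = sum(c.drives for c in chassis_list)
    compute = sum(c.compute_nodes for c in chassis_list)
    if compute == 0:
        raise ValueError("a JBOD needs a head node to serve its drives")
    return {
        "drives": drives,
        "compute_nodes": compute,
        "drives_per_compute": drives / compute,
    }


dual_server = Chassis("dual storage server", compute_nodes=2)
head_node = Chassis("single storage server (head node)", compute_nodes=1)
jbod = Chassis("JBOD", compute_nodes=0)

standalone = summarize([dual_server])
head_with_jbods = summarize([head_node, jbod, jbod])
print(standalone)
print(head_with_jbods)
```

Under these assumptions the stand-alone server has 36 drives per compute node, while a head node with two JBODs puts all 216 drives behind one compute node.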

Each configuration has different populations for the modules in the system, as illustrated in the exploded view above. A key element of this design is how easy it is to access all components from the front or the top of the chassis.

Grand Canyon vs. Bryce Canyon (Prior Gen)

| | Bryce Canyon | Grand Canyon |
| --- | --- | --- |
| Compute | 16 Core | 26 Core |
| SpecIntRate | 1 | 1.87 (turbo enabled) |
| DRAM Capacity, BW per CPU | 2x 32GB DDR4, 33GB/s | 4x 16GB DDR4, 93GB/s |
| Boot SSD per Compute | 256 GB | 256 GB |
| Data SSD per Compute | 2x2TB M.2 | 2x2TB E1.S 9.5mm (upgradeable capacity) |
| Drives per chassis, Drives per Compute | 72, 36 | 72, 36 |
| NIC | 50Gbps, Single-host, OCP 2.0 | 50Gbps, Single-host, OCP 3.0 (Upgradeable to 100Gbps) |
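
To put the generational change in perspective, the ratios can be computed directly from the table. This is a rough sketch using only the figures above; it assumes the SpecIntRate values are normalized so that Bryce Canyon equals 1, and the dictionary keys are illustrative names.

```python
# Figures copied from the comparison table (per compute node).
bryce_canyon = {"cores": 16, "spec_int_rate": 1.0, "mem_bw_gb_s": 33}
grand_canyon = {"cores": 26, "spec_int_rate": 1.87, "mem_bw_gb_s": 93}


def ratio(key):
    """Grand Canyon value divided by the Bryce Canyon value for one metric."""
    return grand_canyon[key] / bryce_canyon[key]


core_ratio = ratio("cores")
perf_ratio = ratio("spec_int_rate")
bw_ratio = ratio("mem_bw_gb_s")

# Throughput gain that is not explained by the higher core count alone.
perf_per_core_ratio = perf_ratio / core_ratio

print(f"cores:           {core_ratio:.3f}x")
print(f"SpecIntRate:     {perf_ratio:.2f}x")
print(f"memory BW:       {bw_ratio:.2f}x")
print(f"perf per core:   {perf_per_core_ratio:.2f}x")
```

With the drive count per compute node unchanged at 36, each node has about 2.8 times the memory bandwidth and 1.87 times the integer throughput for the same number of drives. The per-core gain of roughly 1.15 times suggests the uplift comes from both more cores and faster cores.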

Grand Canyon Storage System Structural Design Improvements

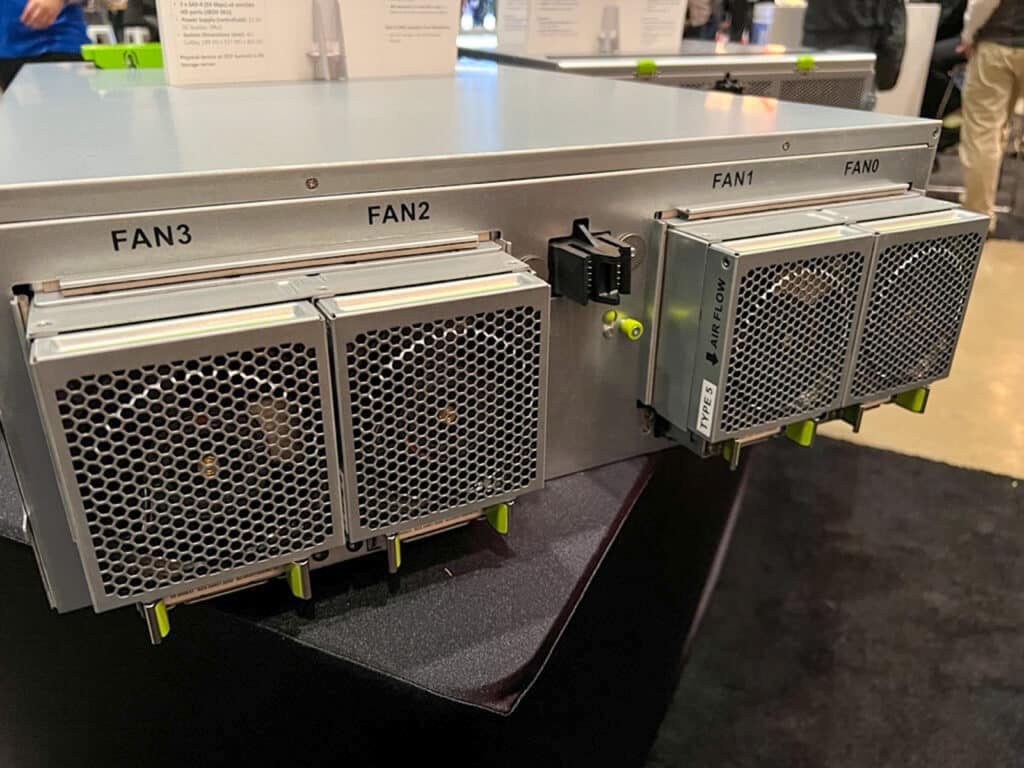

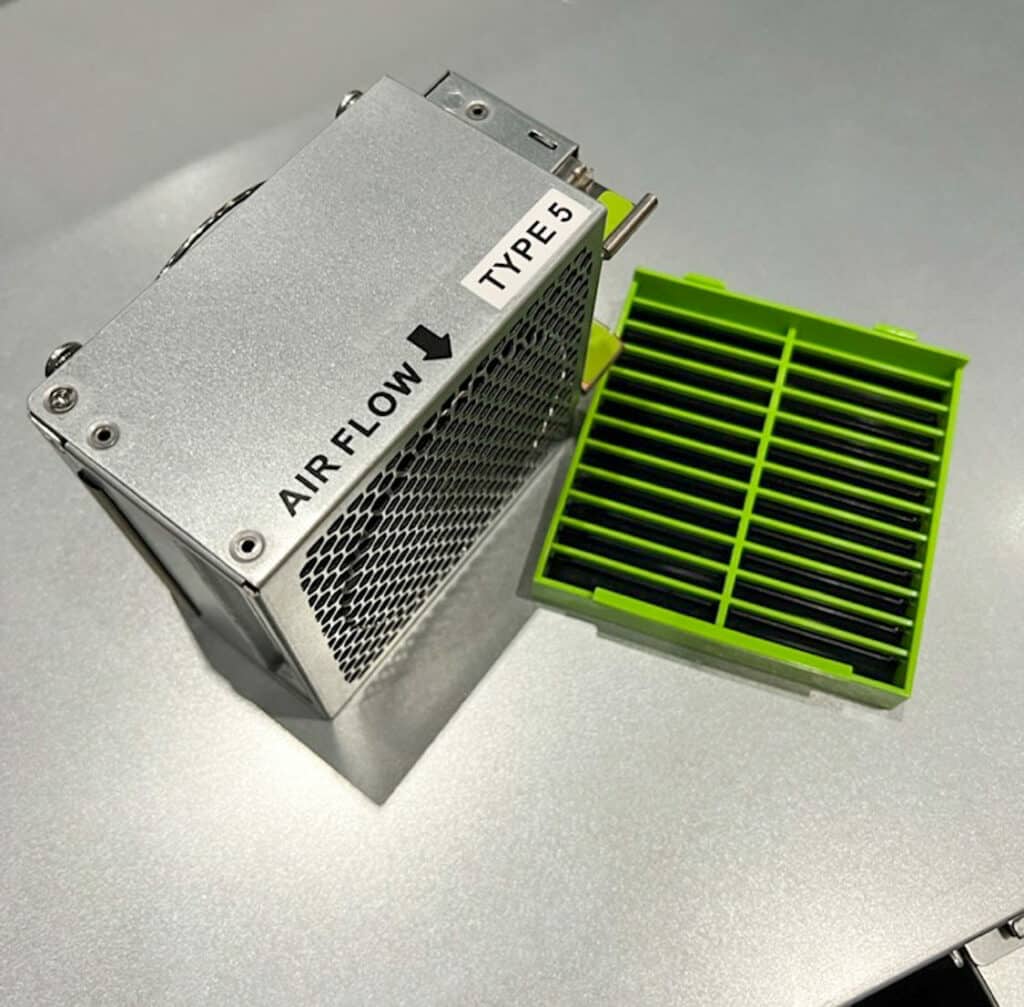

With so many HDDs in the chassis and the potential for vibration from the fans, Meta added structural and acoustic vibration-damping features. These prevent fan vibration from passing through to the enclosure and allow denser, higher-capacity HDDs to be adopted.

Meta engineers used a surrogate HDD to measure vibration and reached out to hard-drive vendors to compare the benefits of the vibration mitigations used in the Grand Canyon chassis design.

The fan system was modified to include a blind that will close if a fan shuts down, preventing loss of airflow across the system. This ensures proper cooling throughout the chassis, even in the event of a fan loss.

The Grand Canyon system uses the latest IOC and expanders from Broadcom, which include SAS Gen4 capabilities, are interoperable with SAS and SATA HDDs, and provide hardware and firmware compatibility for command duration limits on SAS/SATA HDDs as well as for dual-actuator HDDs.

Supporting Documentation

For information on Surrogate HDDs built for Grand Canyon characterization, refer to this talk.

For information on Surrogate HDDs, improvements & standardization, join the OCP HDD Dynamics workstream.

For more information on features of interest to Meta, check out the ‘HDD features for the future’ session here.

The spec can be viewed on the GitHub Repository (OpenBMC).

The Grand Canyon systems are available from Wiwynn, dubbed SV/ST7000G4 in their product guide.
