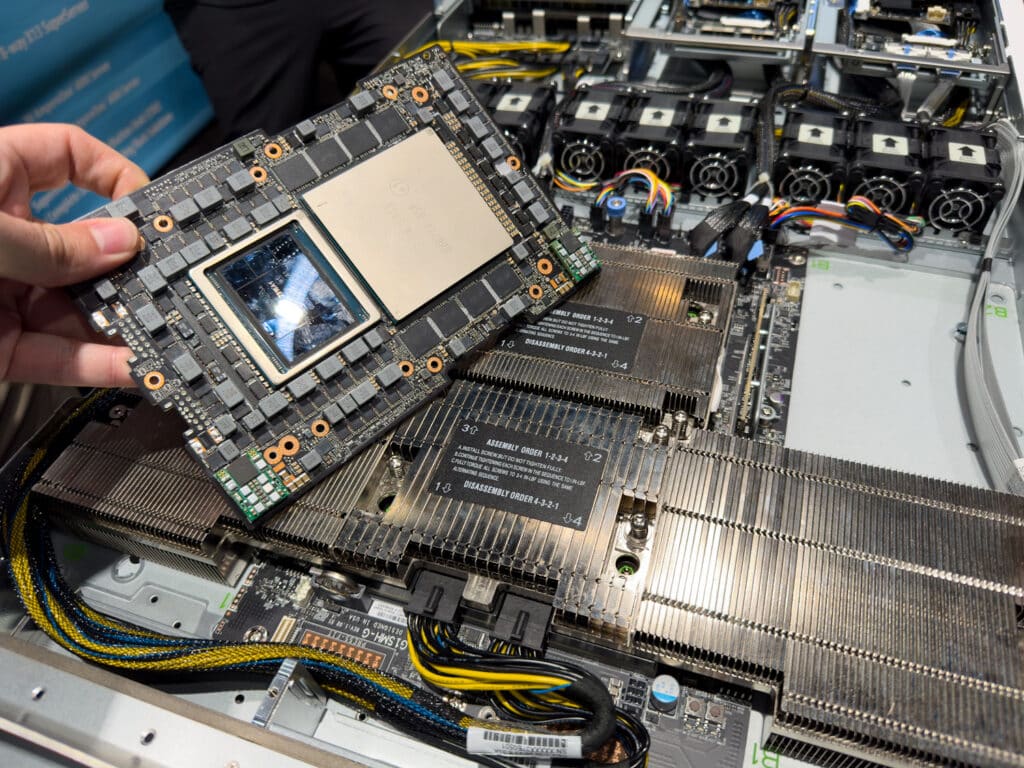

Supermicro has unveiled a comprehensive new portfolio of servers designed to set new standards in the AI and high-performance computing domains. These servers are the industry’s first line of NVIDIA MGX systems and are built around the NVIDIA GH200 Grace Hopper Superchip. Aimed at drastically improving AI workload performance, these new offerings are engineered with cutting-edge technological advancements.

From integrating the latest DPU networking and communication technologies to incorporating Supermicro’s advanced liquid-cooling solutions for high-density deployments, these servers represent a leap forward in scalable and customizable computing solutions.

The new server models include the following:

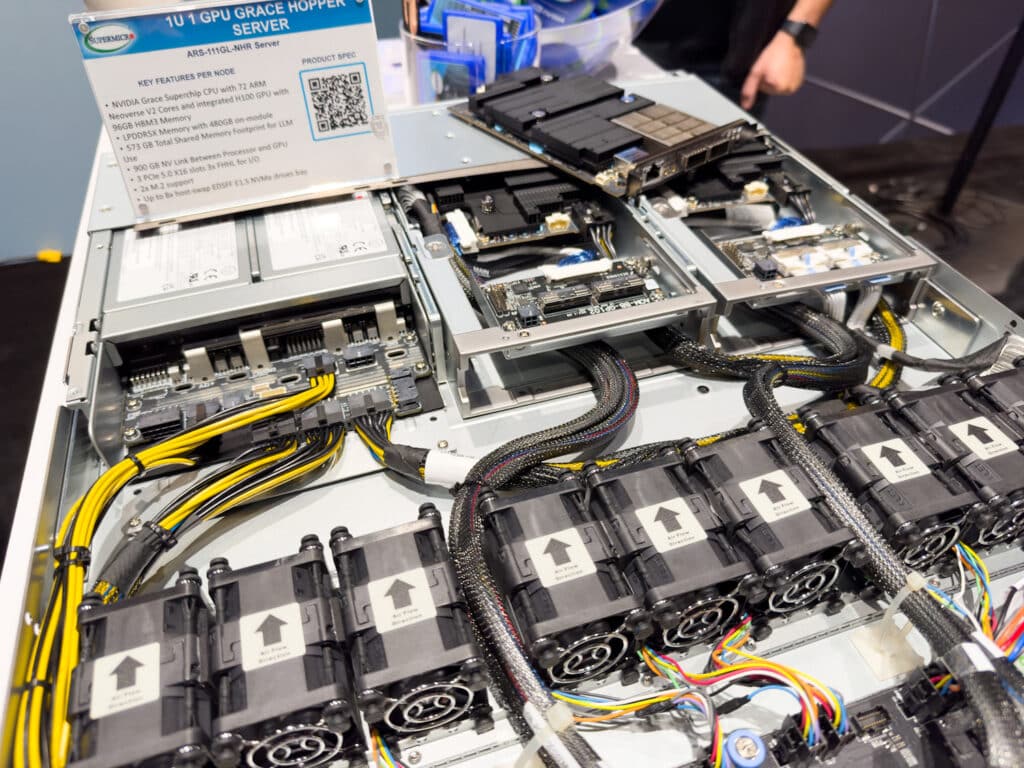

ARS-111GL-NHR: This air-cooled server is a high-density 1U GPU system built around the NVIDIA Grace Hopper Superchip, which integrates an NVIDIA H100 GPU with a Grace CPU. With a 900GB/s NVLink Chip-2-Chip (C2C) interconnect and up to 576GB of coherent memory, this server is designed for demanding applications such as High-Performance Computing, AI/Deep Learning, and Large Language Models. The system also includes 3x PCIe 5.0 x16 slots and nine hot-swap heavy-duty fans for thermal management.

ARS-111GL-NHR-LCC: Like the ARS-111GL-NHR, this is a high-density 1U GPU system, but it adds liquid cooling. It shares the same core features, including the NVIDIA Grace Hopper Superchip and the 900GB/s NVLink C2C interconnect. Liquid cooling improves energy efficiency and lets the system run with seven hot-swap heavy-duty fans instead of nine, which can lower operating costs for the same high-compute applications.

ARS-111GL-DNHR-LCC: This server packs two nodes into a 1U form factor, each supporting an integrated NVIDIA H100 GPU and Grace Hopper Superchip. With up to 576GB of coherent memory per node, it balances high computing power with space efficiency. It is well suited to Large Language Model applications, High-Performance Computing, and AI/Deep Learning.

ARS-121L-DNR: This high-density 1U server includes two nodes, each supporting an NVIDIA H100 GPU and a 144-core NVIDIA Grace CPU Superchip. The server has 2x PCIe 5.0 x16 slots per node and can accommodate up to four hot-swap E1.S drives and two M.2 NVMe drives per node. With a 900GB/s NVLink interconnect between CPUs, this server is optimized for High-Performance Computing, AI/Deep Learning, and Large Language Models.

ARS-221GL-NR: This 2U high-density GPU system supports up to four NVIDIA H100 PCIe GPUs. With 480GB or 240GB of onboard LPDDR5X memory, it is designed for low-latency, power-efficient workloads, making it a good fit for High-Performance Computing, Hyperscale Cloud Applications, and Data Analytics.

SYS-221GE-NR: This 2U high-density GPU server supports up to four NVIDIA H100 PCIe GPUs and offers a maximum of 8TB of ECC DDR5 memory across 32 DIMM slots. The server also features seven PCIe 5.0 x16 FHFL slots and supports the NVIDIA BlueField-3 Data Processing Unit for demanding accelerated computing workloads. With E1.S NVMe storage support, this server is well suited to High-Performance Computing, AI/Deep Learning, and Large Language Model and Natural Language Processing workloads.

Each MGX platform is upgradable with either NVIDIA BlueField-3 DPU or NVIDIA ConnectX-7 interconnects, offering superior performance in InfiniBand or Ethernet networking.

Technical Specifications

Supermicro’s new 1U NVIDIA MGX systems feature up to two NVIDIA GH200 Grace Hopper Superchips, for a total of two NVIDIA H100 GPUs and two NVIDIA Grace CPUs. Each superchip comes with 480GB of LPDDR5X memory for the CPU and either 96GB of HBM3 or 144GB of HBM3e memory for the GPU.
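As a quick sanity check on the memory figures above, the sketch below adds the CPU and GPU memory for each HBM option. It assumes that the coherent memory quoted for the 1U systems is simply the sum of the two pools. The names used here are illustrative, not taken from any Supermicro or NVIDIA tool. Under that assumption, the HBM3 total matches the 576GB figure cited for the ARS-111GL-NHR.

```python
# Memory figures quoted in the article, in GB.
CPU_LPDDR5X_GB = 480
GPU_HBM_OPTIONS_GB = {"HBM3": 96, "HBM3e": 144}


def coherent_memory_gb(cpu_gb: int, gpu_gb: int) -> int:
    """Assumption: the coherent pool is CPU memory plus GPU memory."""
    return cpu_gb + gpu_gb


# Total per superchip for each GPU memory option.
totals = {
    name: coherent_memory_gb(CPU_LPDDR5X_GB, gpu_gb)
    for name, gpu_gb in GPU_HBM_OPTIONS_GB.items()
}

for name, total in totals.items():
    print(f"{name}: {total} GB per superchip")
```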

The systems use the NVLink-C2C interconnect, which links the CPU, GPU, and memory at 900GB/s, about seven times the bandwidth of a PCIe Gen5 x16 link. Additionally, the modular design includes multiple PCIe 5.0 x16 slots, leaving room for additional GPUs, DPUs for cloud and data management, and storage.
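The "seven times" comparison can be reproduced with a rough calculation. This sketch assumes the baseline is a PCIe 5.0 x16 link counted in both directions (32 GT/s per lane with 128b/130b encoding); the article does not state which PCIe configuration it compares against, so treat the baseline as an assumption.

```python
# Figure quoted in the article: NVLink-C2C bandwidth in GB/s.
NVLINK_C2C_GB_S = 900

# Assumed baseline: PCIe 5.0 x16, both directions combined.
LANES = 16
GT_PER_S_PER_LANE = 32          # PCIe 5.0 raw signalling rate per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def pcie5_x16_bidirectional_gb_s() -> float:
    """Approximate usable PCIe 5.0 x16 bandwidth in GB/s, both directions."""
    per_direction = LANES * GT_PER_S_PER_LANE * ENCODING_EFFICIENCY / 8
    return 2 * per_direction


pcie_gb_s = pcie5_x16_bidirectional_gb_s()
ratio = NVLINK_C2C_GB_S / pcie_gb_s

print(f"PCIe 5.0 x16: about {pcie_gb_s:.0f} GB/s bidirectional")
print(f"NVLink-C2C is about {ratio:.1f}x faster")
```

With these assumptions the ratio comes out slightly above seven, which is consistent with the rounded claim.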

In terms of operational efficiency, Supermicro’s 1U 2-node design, equipped with liquid cooling technology, promises to reduce operational expenditures (OPEX) by over 40 percent while enhancing computing density. This makes it ideal for deploying large language model clusters and high-performance computing applications.

The 2U platforms are compatible with both NVIDIA Grace and x86 CPUs and can host up to four full-size data center GPUs. They also feature additional PCIe 5.0 x16 slots for I/O and eight hot-swappable EDSFF storage bays.

Ultimately, Supermicro’s MGX systems are designed to be versatile and efficient with NVIDIA Grace Superchip CPUs that feature 144 cores and offer twice the performance per watt compared to current x86 CPUs. These systems are positioned to redefine compute densities and energy efficiency in both hyperscale and edge data centers.
