Veeam Software has released the results of its latest annual Availability Report, now in its fifth year. The study illustrates customers' demand for an always-on, 24/7 enterprise, and although companies recognize this demand, both the frequency and the length of downtime are actually going up. The report showed that 84% of IT decision-makers (ITDMs) from numerous countries admitted that their organizations were suffering from an ‘Availability Gap’ (the gulf between what IT can deliver and what users demand). Not only is this figure up 2% from the last report, but Veeam's report also found that the Availability Gap is costing businesses $16 million a year in lost revenue and could damage the organization's image.

Veeam commissioned the survey from Vanson Bourne, an independent technology market research specialist, which interviewed 1,140 senior ITDMs from countries around the world. And while almost all of those interviewed stated that they had implemented tighter measures to reduce availability incidents, the current cost of $16 million is up $6 million from last year. More alarming still, respondents classify 48% of their workloads as mission-critical.

With the connected population growing (3.4 billion people, or 42% of the world's population) alongside billions of connected devices, the demand for always-on services is only going to increase. And while this study found that two-thirds of the respondents were investing in their data centers, the Availability Gap seems to be widening. On a more uplifting note, organizations both see the problem and are investing in ways to counteract it.

Key findings include:

- Users want support for real-time operations (63%) and 24/7 global access to IT services to support international business (59%)

- When modernizing their data centers, high-speed recovery (59%) and data loss avoidance (57%) are the two most sought-after capabilities; however, cost and a lack of skills are inhibiting deployment

- Over the past two years, organizations have increased, to some extent, their service level requirements to minimize application downtime (96% of organizations) or to guarantee access to data (94%), but the Availability Gap still remains

- To address this, respondents stated that their organizations are currently modernizing, or intend to modernize in the near future, their data centers in some way; virtualization (85%) and backups (80%) are among the most common areas to update for this purpose

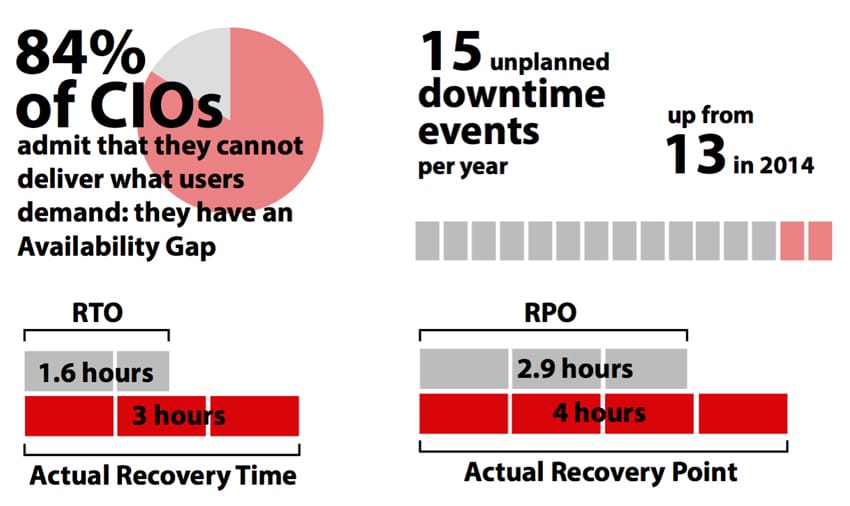

- SLAs for recovery time objectives (RTOs) have been set at 1.6 hours, but respondents admit that in reality recoveries take 3 hours. Similarly, SLAs for recovery point objectives (RPOs) are 2.9 hours, whereas 4.2 hours is actually being delivered. Respondents report that their organization experiences, on average, 15 unplanned downtime events per year, compared with the average of 13 reported in 2014. Over the same period, the length of unplanned mission-critical application downtime has increased from 1.4 hours to 1.9 hours, and non-mission-critical application downtime has increased from 4.0 hours to 5.8 hours

- Just under half of organizations test their backups only monthly or even less frequently. Long gaps between tests increase the chance that issues are discovered only when data needs to be recovered, at which point it may be too late for these organizations. And of those that do test their backups, just 26% test more than 5% of them

- As a result, the estimated average annual cost of downtime to enterprises can be up to $16 million, an increase of $6 million over the equivalent 2014 average

- The average per-hour cost of downtime for a mission-critical application is just under $80,000, and the average per-hour cost of data loss resulting from downtime for a mission-critical application is just under $90,000. For non-mission-critical applications, the average cost per hour is over $50,000 in both cases

- Loss of customer confidence (68%), damage to their organization's brand (62%), and loss of employee confidence (51%) were the top three ‘non-financial’ consequences of poor availability cited
