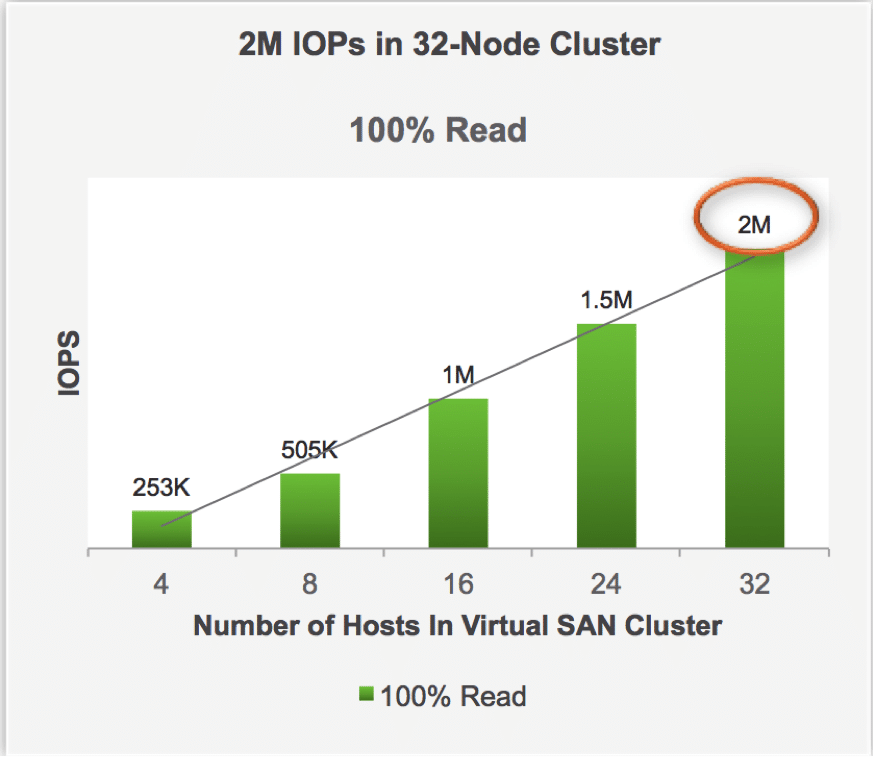

Virtual SAN (VSAN) 5.5, VMware’s first Software Defined Data Center product, was released last month and has been very well received by enterprises looking to get more out of traditional compute servers. VSAN essentially gives users access to redundant nodes that combine compute and storage, all layered into the virtualization environment and managed through VMware's vSphere client. According to VMware’s internal benchmarks, VSAN is capable of reaching 2 million IOPS in a 32-node cluster. How did VMware accomplish this, and what configuration did they use to get there?

VMware recently released the test configuration that hit the remarkable 2 million IOPS number. Of course, IOPS are only part of the performance picture for any storage product. Application testing will reveal more about VSAN's capabilities in terms of latency and potential throughput, but the industry as it is today largely understands IOPS as a measure of storage performance.

VMware posted VSAN performance results in two scenarios: 1) a 100% read workload and 2) a 70% read, 30% write workload. Each host was a Dell PowerEdge R720 with dual-socket Intel Xeon CPU E5-2650 v2 @ 2.6GHz (Ivy Bridge), 128GB RAM, 10GbE, an LSI 9207-8i controller, 1x 400GB Intel S3700 SSD and a hard drive configuration of 4x 1.1TB 10K RPM Hitachi SAS drives and 3x 1.1TB 10K RPM Seagate SAS drives.

VMware used vSphere 5.5 U1 with Virtual SAN 5.5 on the cluster, with the following changes to the default settings in vSphere:

- Increase the heap size for the vSphere network stack to 512MB: “esxcli system settings advanced set -o /Net/TcpipHeapMax -i 512”. You can validate this setting using “esxcli system settings advanced list -o /Net/TcpipHeapMax”.

- Allow VSAN to form 32-host clusters. “esxcli system settings advanced set -o /adv/CMMDS/goto11 1”.

- Install the Phase 18 LSI driver (mpt2sas version 18.00.00.00.1vmw) for the LSI storage controller.

- Set BIOS Power Management (System Profile Settings) to ‘Performance’ (that is, disable all power saving features).

For a more detailed look at the ESXi configuration, see VMware's Knowledgebase page.

100% Read Benchmark Settings

Each host ran a single 4-vcpu 32-bit Ubuntu 12.04 VM with 8 virtual disks (vmdk files) on the VSAN datastore, with the disks distributed across two PVSCSI controllers. VMware used the default pvscsi driver (version 1.0.2.0-k).

To better support large-scale workloads with high outstanding IO, VMware modified the boot time parameters for pvscsi to “vmw_pvscsi.cmd_per_lun=254 vmw_pvscsi.ring_pages=32”. Visit the VMware Knowledgebase for more details on this configuration. VMware applied a storage policy-based management setting of HostFailuresToTolerate=0 to the vmdks for this benchmark test.

IOMeter with 8 worker threads was run in each VM, with every thread configured to work on 8 GB of a single vmdk. Additionally, each thread ran a 100% read, 80% random workload with 4096 byte IOs aligned at the 4096 byte boundary with 16 OIO per worker. Essentially, each VM on each host issued the following:

- 4096 byte IO requests across a 64GB working set

- 100% read, 80% random

- Aggregate of 128 OIO/host
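The per-host figures above follow directly from the per-worker IOMeter settings. A minimal sketch of that arithmetic is below; the `IometerProfile` class and its field names are made up for illustration and are not part of IOMeter or any VMware tool. The cluster-wide totals assume one VM on each of the 32 hosts, as described in the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IometerProfile:
    """Hypothetical container for the per-VM IOMeter settings in the article."""
    workers_per_vm: int   # IOMeter worker threads in each VM
    gb_per_worker: int    # region of a single vmdk each worker touches
    oio_per_worker: int   # outstanding IOs each worker keeps in flight
    hosts: int = 32       # one VM per host in the 32-node cluster

    @property
    def working_set_gb_per_host(self) -> int:
        return self.workers_per_vm * self.gb_per_worker

    @property
    def oio_per_host(self) -> int:
        return self.workers_per_vm * self.oio_per_worker

    @property
    def cluster_oio(self) -> int:
        return self.oio_per_host * self.hosts

    @property
    def cluster_working_set_gb(self) -> int:
        return self.working_set_gb_per_host * self.hosts


# 100% read test: 8 workers, 8 GB each, 16 OIO per worker
read_profile = IometerProfile(workers_per_vm=8, gb_per_worker=8, oio_per_worker=16)

print(read_profile.working_set_gb_per_host)  # 64 GB working set per host
print(read_profile.oio_per_host)             # 128 OIO per host
print(read_profile.cluster_oio)              # 4096 OIO across 32 hosts
print(read_profile.cluster_working_set_gb)   # 2048 GB across 32 hosts
```

Plugging in the 70/30 settings (4 GB and 8 OIO per worker) gives that test's per-host numbers the same way.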

VMware ran the configuration for one hour, measuring aggregate guest IOPS at 60-second intervals. This resulted in a median of 2,024,000 IOPS.

70% Read 30% Write Benchmark

In the 70/30 IO profile, each host ran a single 4-vcpu 32-bit Ubuntu 12.04 VM with 8 virtual disks (vmdk files) on the VSAN datastore. Additionally, the disks were distributed across two PVSCSI controllers. The default driver for pvscsi was used (version 1.0.2.0-k), while the pvscsi boot time parameters were modified to better support high outstanding IO: “vmw_pvscsi.cmd_per_lun=254 vmw_pvscsi.ring_pages=32”.

As in the 100% read configuration, VMware ran IOMeter with 8 worker threads in each VM, though each thread was configured to work on 4GB of a single vmdk. Each thread ran a 70% read, 80% random workload with 4096 byte IOs aligned at the 4096 byte boundary with 8 OIO per worker.

Essentially, each VM on each host issued the following:

- 4096 byte IO requests across a 32GB working set

- 70% read, 80% random

- Aggregate of 64 OIO/host

In the 70/30 test, VMware recorded 652,900 IOPS with an average latency of 2.98ms and bandwidth of 3.2GB/s.
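As a rough sanity check on the reported numbers, Little's Law relates IOs in flight, IOPS and latency: latency is approximately outstanding IOs divided by IOPS. The sketch below applies it to both tests using the OIO and IOPS figures from the article. The function names are illustrative, and the read/write split assumes the achieved mix exactly matched the configured 70/30 ratio, which the source does not confirm.

```python
HOSTS = 32  # cluster size used in both tests


def implied_latency_ms(oio_per_host: int, cluster_iops: int, hosts: int = HOSTS) -> float:
    """Little's Law estimate: latency = IOs in flight / completion rate."""
    in_flight = oio_per_host * hosts
    return in_flight / cluster_iops * 1000.0


def per_host_iops(cluster_iops: int, hosts: int = HOSTS) -> float:
    """Average IOPS each host contributes to the cluster total."""
    return cluster_iops / hosts


def split_mixed_iops(cluster_iops: int, read_pct: int) -> tuple[int, int]:
    """Split total IOPS into (reads, writes), assuming the configured mix held exactly."""
    reads = cluster_iops * read_pct // 100
    return reads, cluster_iops - reads


# Figures taken from the article: (OIO per host, reported cluster IOPS)
tests = {
    "100% read": (128, 2_024_000),
    "70/30": (64, 652_900),
}

for label, (oio, iops) in tests.items():
    print(f"{label}: {per_host_iops(iops):,.0f} IOPS/host, "
          f"~{implied_latency_ms(oio, iops):.2f} ms implied latency")

reads, writes = split_mixed_iops(652_900, 70)
print(f"70/30 split: {reads:,} read IOPS, {writes:,} write IOPS")
```

For the 70/30 test this estimate lands at roughly 3.1ms, in the same range as the reported 2.98ms average. An exact match is not expected, since the estimate comes from a single aggregate IOPS figure rather than per-IO measurements.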

What does this mean?

Much more benchmarking remains to be done, including our own suite of benchmarks, which includes things like SQL Server and VMmark. Even so, these performance numbers make it obvious that VSAN has a lot of potential from a performance perspective, albeit with a maximum cluster size of 32 nodes. It's also worth noting that VMware used only 8 bays in the backplanes. With this configuration they could have effectively doubled the storage by adding another SSD and 7 more HDDs to each node.
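To put the doubling in concrete terms, here is a back-of-the-envelope sketch of raw HDD capacity for the tested layout and for the fully populated one. It counts only the 1.1TB hard drives, since VSAN 5.5 uses the SSD as a cache tier, and it ignores replication and filesystem overhead. The constant and function names are illustrative.

```python
HDD_GB = 1100   # each SAS drive is 1.1TB; integer GB avoids float rounding
NODES = 32      # hosts in the benchmark cluster


def raw_hdd_capacity_tb(hdds_per_node: int, nodes: int = NODES) -> float:
    """Raw (pre-replication) HDD capacity across the cluster, in TB."""
    return hdds_per_node * HDD_GB * nodes / 1000


tested = raw_hdd_capacity_tb(7)     # 1 SSD + 7 HDDs per node, as benchmarked
doubled = raw_hdd_capacity_tb(14)   # 2 SSDs + 14 HDDs per node

print(f"As tested: {tested} TB raw across {NODES} nodes")
print(f"Fully populated: {doubled} TB raw across {NODES} nodes")
```

Usable capacity would be lower in practice, depending on the HostFailuresToTolerate policy applied to each vmdk.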

VMware Virtual SAN is currently available at $2,495 per processor with VMware Virtual SAN for Desktop priced at $50 per user.
