WEKA has announced its integration with the NVIDIA AI Data Platform reference design and has achieved NVIDIA storage certifications for infrastructure tailored to agentic AI and complex reasoning models. Alongside this announcement, WEKA introduced its Augmented Memory Grid capability and additional certifications: NVIDIA Cloud Partner (NCP) Reference Architecture certifications, including NVIDIA GB200 NVL72, and the NVIDIA-Certified Systems Storage designation for enterprise-scale AI factory deployments.

Accelerating AI Agents

The NVIDIA AI Data Platform is a reference design for enterprise AI infrastructure that integrates the NVIDIA Blackwell architecture, NVIDIA BlueField DPUs, NVIDIA Spectrum-X networking, and NVIDIA AI Enterprise software. By pairing this NVIDIA infrastructure with the WEKA Data Platform, enterprises can deploy a highly scalable storage foundation designed for high-performance AI inference workloads.

The integrated solution addresses the growing demands of enterprise AI by giving AI query agents fast, accelerated access to critical business intelligence, improving both inference performance and reasoning accuracy. WEKA's Data Platform is designed to turn data into actionable intelligence quickly, supporting the sophisticated reasoning that next-generation AI models require.

WEKA Augmented Memory Grid

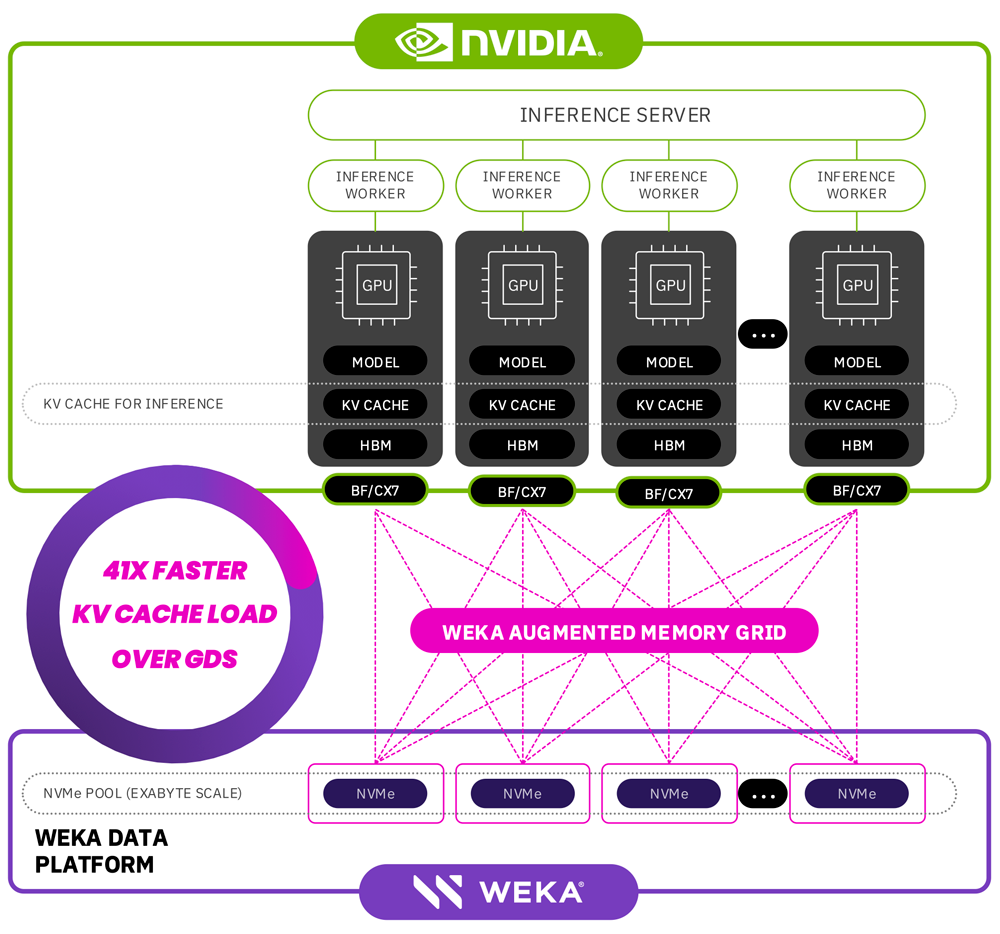

As agentic AI models evolve, they handle larger context windows and larger parameter counts, and their memory requirements grow accordingly. These demands often exceed the capacity of GPU memory, creating bottlenecks in AI inference. WEKA addresses this with its Augmented Memory Grid, which extends the memory available to AI workloads in petabyte-scale increments, far beyond today's single-terabyte limits.

The Augmented Memory Grid combines WEKA's data platform software with NVIDIA accelerated computing and networking to deliver near-memory-speed access with microsecond latency, improving token processing performance and overall AI inference efficiency.
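The general idea of extending memory instead of recalculating can be illustrated with a toy model. The Python sketch below is a hypothetical illustration, not WEKA's implementation or API: it assumes a small, fast tier standing in for GPU memory that spills least-recently-used entries to a much larger tier standing in for an extended memory grid. An entry is recomputed only when it is missing from both tiers, which is the expensive path the benchmark below compares against. The class name `TieredCache`, the LRU policy, and the capacities are all assumptions chosen for illustration.

```python
from collections import OrderedDict
from typing import Callable, Dict, Hashable


class TieredCache:
    """Hypothetical two-tier cache: a small LRU 'fast' tier (think GPU memory)
    that spills evicted entries to a large 'extended' tier instead of dropping them."""

    def __init__(self, fast_capacity: int, compute: Callable[[Hashable], object]):
        self.fast_capacity = fast_capacity
        self.fast: "OrderedDict[Hashable, object]" = OrderedDict()
        self.extended: Dict[Hashable, object] = {}  # treated as unbounded in this toy
        self.compute = compute  # the expensive recalculation path
        self.stats = {"fast_hits": 0, "extended_hits": 0, "recomputes": 0}

    def _put_fast(self, key: Hashable, value: object) -> None:
        self.fast[key] = value
        self.fast.move_to_end(key)
        if len(self.fast) > self.fast_capacity:
            # Evict the least recently used entry and spill it to the extended tier.
            old_key, old_value = self.fast.popitem(last=False)
            self.extended[old_key] = old_value

    def get(self, key: Hashable) -> object:
        if key in self.fast:
            self.stats["fast_hits"] += 1
            self.fast.move_to_end(key)  # mark as most recently used
            return self.fast[key]
        if key in self.extended:
            self.stats["extended_hits"] += 1
            value = self.extended.pop(key)  # fetch instead of rebuilding
        else:
            self.stats["recomputes"] += 1
            value = self.compute(key)  # miss in both tiers: recompute
        self._put_fast(key, value)  # promote into the fast tier
        return value


# Usage: a fast tier that holds only two entries.
cache = TieredCache(fast_capacity=2, compute=lambda prompt: f"state({prompt})")
for prompt in ["a", "b", "c", "a", "a", "b"]:
    cache.get(prompt)
print(cache.stats)
```

In this access pattern, a single LRU tier of the same size that discarded evicted entries would recompute five times; the spill tier cuts that to three, because the two revisited entries are fetched from the larger tier rather than rebuilt.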

Key advantages of WEKA’s Augmented Memory Grid include:

- Dramatically Reduced Latency: In tests with 105,000 tokens, WEKA's Augmented Memory Grid improved time to first token (TTFT) by 41x compared with traditional recalculation methods.

- Optimized Token Throughput: The solution handles inference workloads efficiently across clusters, delivering higher token throughput at lower overall cost and reducing the system-wide cost per processed token by up to 24%.

These gains translate into better inference economics, enabling enterprises to accelerate AI-driven innovation without compromising model capabilities or infrastructure performance.
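To make the two headline figures concrete, the Python sketch below applies them to baseline values. The baselines (a 20.5-second TTFT and a cost of 1.00 per million tokens) are hypothetical numbers chosen for easy arithmetic, not results from WEKA's tests. A 41x TTFT improvement divides the baseline by 41, leaving about 2.4% of the original wait, while the quoted upper bound of a 24% reduction multiplies cost per token by 0.76. Because cost per token is total system cost divided by tokens processed, the sketch also shows, under the added assumption of fixed system cost, how much throughput would have to rise to produce that reduction.

```python
def improved_ttft(baseline_ttft_s: float, speedup: float) -> float:
    """TTFT after an N-fold improvement: the baseline divided by N."""
    return baseline_ttft_s / speedup


def reduced_cost_per_token(baseline_cost: float, reduction: float) -> float:
    """Cost per token after a fractional reduction (0.24 means 24% lower)."""
    return baseline_cost * (1.0 - reduction)


def throughput_multiplier_at_fixed_cost(reduction: float) -> float:
    """Cost per token = system cost / tokens processed. Holding system cost
    fixed (an assumption), a fractional drop r in cost per token requires
    throughput to rise by a factor of 1 / (1 - r)."""
    return 1.0 / (1.0 - reduction)


# Hypothetical baselines, chosen only to make the arithmetic easy to follow.
baseline_ttft_s = 20.5         # assumed TTFT when the context is recalculated
baseline_cost_per_mtok = 1.00  # assumed cost per million tokens, arbitrary units

ttft = improved_ttft(baseline_ttft_s, speedup=41)
cost = reduced_cost_per_token(baseline_cost_per_mtok, reduction=0.24)
mult = throughput_multiplier_at_fixed_cost(0.24)

print(f"TTFT: {baseline_ttft_s:.1f} s -> {ttft:.2f} s ({ttft / baseline_ttft_s:.1%} of baseline)")
print(f"Cost per million tokens: {baseline_cost_per_mtok:.2f} -> {cost:.2f}")
print(f"Throughput needed at fixed system cost: {mult:.2f}x")
```

At fixed system cost, the 24% figure corresponds to roughly 1.32 times the throughput. In practice throughput and cost can both change, so this is only one way to read the number.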

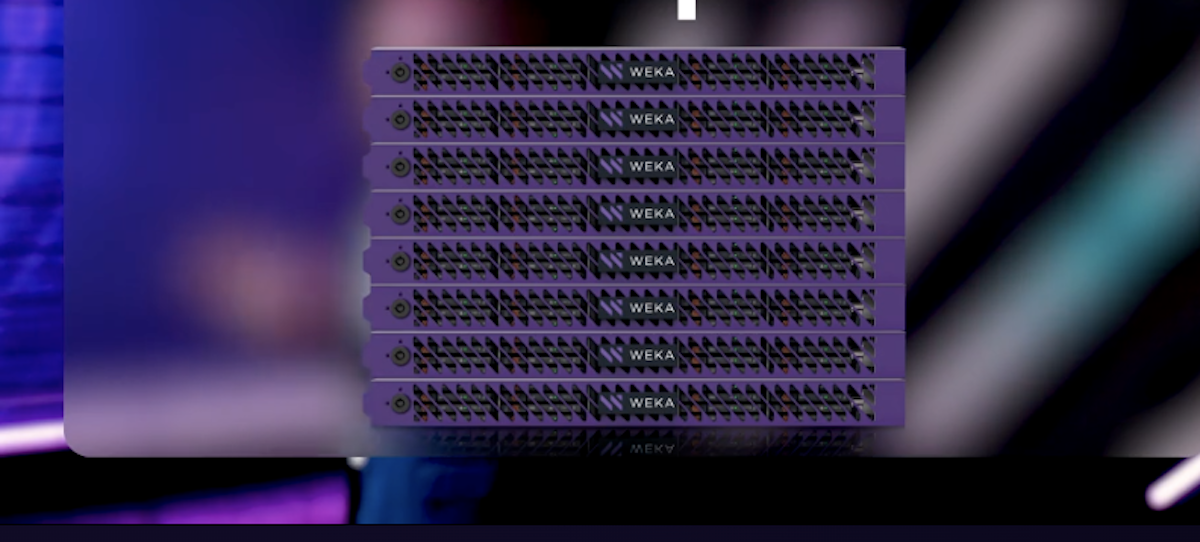

WEKApod Nitro

WEKApod Nitro Data Platform appliances have earned multiple NVIDIA certifications, positioning WEKA as a high-performance storage provider for enterprise AI:

- NVIDIA Cloud Partner (NCP) Certification: WEKApod Nitro is among the first storage solutions certified for NVIDIA's NCP Reference Architectures, including HGX H200, B200, and GB200 NVL72. These appliances serve service providers and developers running large GPU clusters, supporting up to 1,152 GPUs in an 8U configuration while maintaining high performance density and power efficiency.

- NVIDIA-Certified Systems Storage Designation: WEKApod Nitro appliances have received NVIDIA's new enterprise storage certification, which confirms compatibility with NVIDIA Enterprise Reference Architectures and best practices. The designation validates the storage performance, efficiency, and scalability of WEKA's Data Platform for demanding enterprise AI and HPC deployments.

Nilesh Patel, WEKA's Chief Product Officer, emphasized the transformative potential of the partnership with NVIDIA, drawing an analogy to a milestone in aerospace: “Just as breaking the sound barrier unlocked new frontiers, WEKA’s Augmented Memory Grid shatters the AI memory barrier, dramatically expanding GPU memory and optimizing token efficiency. This innovation fundamentally transforms AI token economics, enabling faster innovation and lower costs without sacrificing performance.”

Rob Davis, Vice President of Storage Networking Technology at NVIDIA, further highlighted the importance of this integration: “Enterprises deploying agentic AI and reasoning models require unprecedented efficiency and scalability. Combining NVIDIA and WEKA technologies ensures AI agents can access and process data with unmatched speed and accuracy during inference.”

Availability

- WEKA’s NCP reference architecture for NVIDIA Blackwell systems will be available in March.

- The WEKA Augmented Memory Grid capability will be generally available to WEKA Data Platform customers in Spring 2025.
