Chilldyne Podcast – liquid cooling solutions for data centers focusing on leak-proof design and efficiency for high-performance servers.

Brian rarely gets to sit down to a podcast with a doctor. However, today, he is joined by Dr. Steve Harrington, CEO of Chilldyne. We have been keenly interested in liquid cooling technologies, and there are more popping up all the time. But we have a soft spot for Chilldyne.

Some background on Dr. Steve Harrington: he is the CTO of Chilldyne and the founder of Flometrics. He is an expert and inventor in fluid dynamics and thermodynamics who has designed pumps, valves, nozzles, flowmeters, aircraft cooling systems, rocket fuel pumps, rocket test stands, turbine flow measurement systems, medical ventilators, air/oxygen mixers, respiratory humidifiers, CPAP machines, spirometers, heat exchangers, vacuum cleaners, oxygen concentrators, motorcycle fairings, infusion pumps, electronics cooling systems, wave machines, data acquisition systems, etc. But wait, there’s more. His expertise extends to electronics, programming, optics, nuclear physics, biology, and physiology.

Steve has more than 29 years of experience in fluid dynamics and thermodynamics. He has consulted for aerospace, semiconductor, medical device, racing, electronic cooling, and other industries. He has over 25 patents and has completed projects for NASA, DARPA, SOCOM, and USACE.

When he is not busy, he is a part-time faculty member at the University of California, San Diego, where he teaches an aerospace engineering senior design class in which students build, instrument, and fly liquid rockets. He is also a surfer, a pilot, a scuba diver, a boat and car mechanic, an electrician, and a plumber.

There is much to learn about liquid cooling and we think this podcast will help answer some of the questions on the minds of IT and data center professionals everywhere.

That should be enough to make you interested in listening to this entire podcast. However, if you are strapped for time, we have broken the pod down into five-minute segments so you can hop around as needed.

00:00 – 05:30 Introduction

“Hot” New Technology: Liquid Cooling

Brian opens with a pun about liquid cooling being a “hot” technology in data centers. Liquid cooling has come full circle—once abandoned with the advent of CMOS, it’s now making a resurgence due to the intense heat generated by modern processors.

From Supercomputers to Jet Engines

Steve provides some personal history on his journey to liquid cooling, starting with cooling supercomputers in the 1980s. Fun fact: His expertise in cooling rocket engines and laser systems translated well to modern data center liquid cooling.

Why Did Liquid Cooling Leave?

Liquid cooling took a break because CMOS technology was thought to have solved the power problem. Surprise! Power demands are back with a vengeance.

From Aerospace to Data Centers

In aerospace, liquid cooling isn’t just about cooling; it’s about reliability over time—think planes, rockets, and lasers. Data centers, on the other hand, need uptime and longevity, adding unique challenges to Steve’s transition from aerospace.

ARPA-E Grant and the 2 Kilowatt Chip

Steve partnered with ARPA-E to develop a cold plate for a two-kilowatt chip. That foresight is paying off, as more data centers are bidding for projects with these high-power chips.

05:30 – 10:24 Reassuring the CFO

Liquid Cooling: A CFO’s Nightmare?

Testing liquid cooling systems can cost millions. Convincing CFOs to sign off on such “experiments” is no easy task, especially since they don’t fit neatly into the financial spreadsheet. It’s like buying a $2 million test drive—it sounds fun but is risky.

Sharing Secrets: The Meta Paradox

Meta and other commercial giants tend to lock up their liquid cooling secrets tighter than their algorithms. Sharing is caring, but not when there’s competition involved.

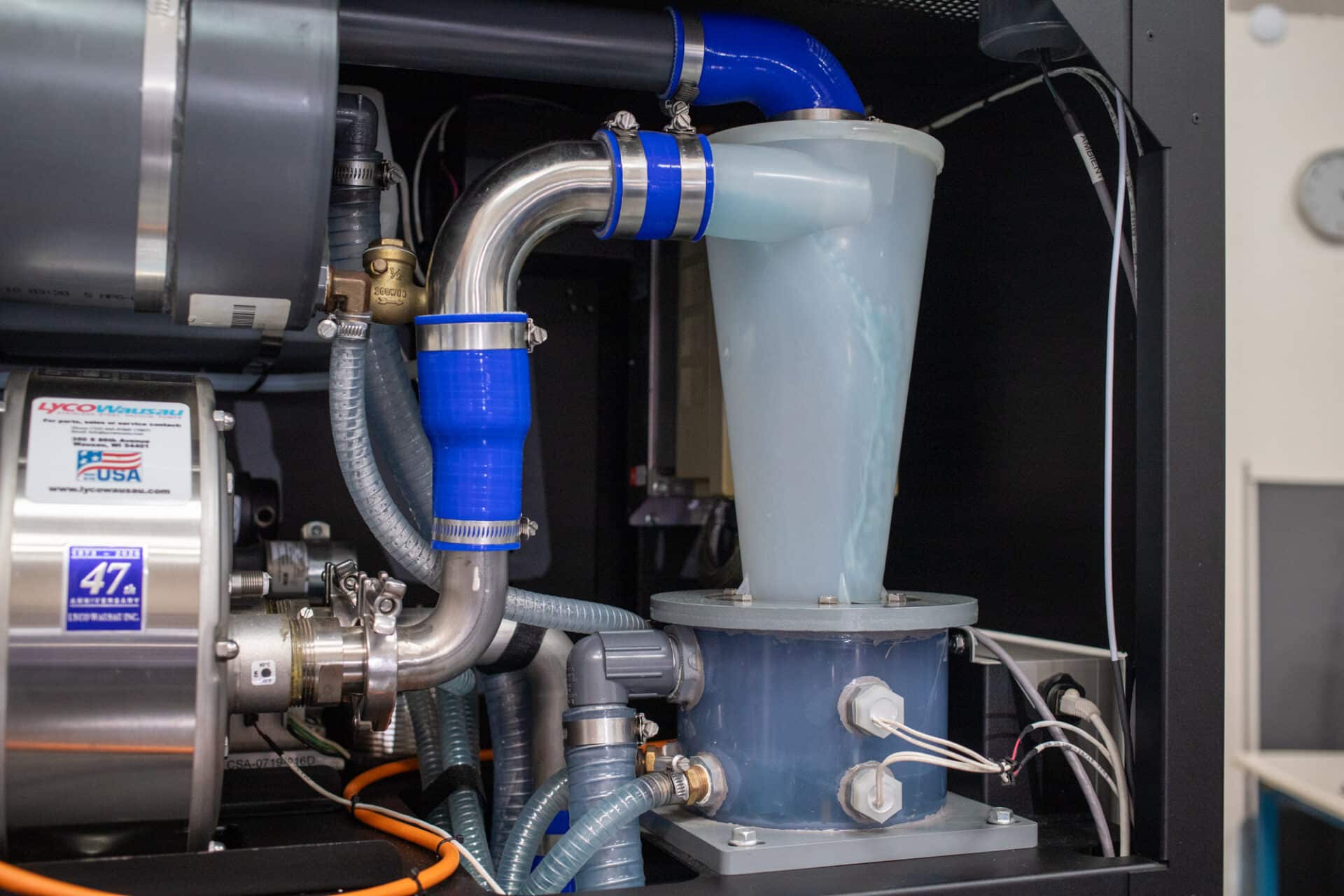

Chilldyne’s Leak-Proof, Negative Pressure System

Chilldyne’s secret sauce: a negative pressure system that’s essentially leak-free. If a leak happens, air enters instead of coolant spilling, keeping the chips safe from a soggy fate.

Handling Leaks: When Not to Panic

Even with leaks, Chilldyne’s system keeps running.

Liquid Cooling Data: The Missing Chapter

Industry-wide data on the impact of liquid cooling on wear and tear is sparse. Liquid cooling can feel like more of a mysterious “beer conference” topic than an open discussion.

10:24 – 14:55 Keep the bacteria out of the water

The Biodiversity of Data Center Coolant

Did you know that the local bacteria in your water supply can mess with your liquid cooling system? Different regions have different microbes, which can wreak havoc on data center coolant systems, leading to clogged plates and overheating GPUs.

Coolant Chemistry 101

Chilldyne has a built-in chemistry lab to monitor coolant quality. Forget your sterile water dreams; this is a battle between biology and technology.

Coolant Additives: A Budget Dilemma

Don’t cheap out on your coolant chemistry unless you enjoy emergency maintenance.

PG 25: Gamers’ Friend, Data Centers’ Enemy

PG 25 is great for gamers—it doesn’t freeze and prevents bacteria growth. However, it attacks seals, leading to leaks over time, making it less ideal for long-term data center use.

Chemistry’s Role in Data Center Maintenance

Data centers often forget that liquid cooling isn’t a “set it and forget it” solution. It requires regular monitoring and maintenance.

14:55 – 20:16 The need for low-toxicity additives

The Additives That Keep the Cool

Chilldyne uses low-toxicity additives: a splash of antibacterial and anti-corrosion chemicals.

Say No to PG 25 (Sometimes)

PG 25 is a “don’t freeze” lifesaver for gamers shipping their liquid-cooled rigs but less so for data centers.

Facility Water is Just the Start

When installing a CDU (cooling distribution unit), Chilldyne starts with distilled or reverse osmosis-filtered water.

The Cold Plate Clean-Up Crew

One customer refused to use recommended additives, leading to a clogged, hot mess in their GPUs. Chilldyne stepped in with a chemical cleanse, but if a cold plate gets too gummed up, sometimes it’s game over—time for replacement.

Liquid Cooling ≠ Set It and Forget It

Electronics may run smoothly for years, but liquid cooling? That’s a different beast.

20:16 – 26:03 Water is still the best for cooling

Water: The MVP of Cooling Fluids

Why stick with good ol’ water? It’s cheap, non-toxic, and performs well—especially in single-phase systems.
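To put rough numbers on that, here is a minimal sketch of the single-phase heat balance Q = m_dot * cp * dT, solved for the coolant flow needed to carry away the heat of a two-kilowatt chip. The fluid properties are approximate room-temperature textbook values, and the 10 K coolant temperature rise and the `flow_lpm` helper are assumptions for illustration, not figures from the podcast.

```python
# Approximate room-temperature properties (assumed textbook values, not vendor data).
FLUIDS = {
    "water": {"density_kg_m3": 998.0, "cp_j_kg_k": 4180.0},
    "PG 25": {"density_kg_m3": 1020.0, "cp_j_kg_k": 3900.0},
    "mineral oil": {"density_kg_m3": 850.0, "cp_j_kg_k": 1900.0},
}


def flow_lpm(heat_w, delta_t_k, density_kg_m3, cp_j_kg_k):
    """Volumetric flow in liters per minute from Q = m_dot * cp * dT."""
    mass_flow_kg_s = heat_w / (cp_j_kg_k * delta_t_k)
    return mass_flow_kg_s / density_kg_m3 * 1000.0 * 60.0


# Hypothetical case: a 2 kW chip with a 10 K coolant temperature rise.
for name, props in FLUIDS.items():
    lpm = flow_lpm(2000.0, 10.0, props["density_kg_m3"], props["cp_j_kg_k"])
    print(f"{name}: {lpm:.2f} L/min")
```

With these assumed values, water needs a bit under 3 L/min, while the oil needs more than twice that flow to move the same heat at the same temperature rise.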

Immersion Cooling: The Slow Burner

Immersion cooling with engineered oils has some potential, especially in edge zones or moderate power servers, but it’s just not efficient enough for today’s two-kilowatt monsters.

When Pipes Get Silly Big

Watch for logistical nightmares as cooling systems scale up.

Electrical Limits: The Real Bottleneck

While liquid cooling systems can scale, there’s a limit to how much electrical current a chip can handle.

Don’t Forget the Chiller

Cooling towers are ideal in many places, but some regions require chillers due to water scarcity. Where a cooling tower is an option, go with it.

26:03 – 29:44 Is that a leak?

The Leaks Won’t Tell You

Unlike server components, liquid cooling systems aren’t yet smart enough to give you advanced warnings about leaks.

The Problem with Positive Pressure

Positive pressure systems that detect leaks with special tape are reactive—they shut down servers when something goes wrong. Negative pressure systems like Chilldyne’s, however, keep the servers running even with minor leaks, avoiding costly downtime.

Compatibility Chaos

The issue with buying parts from multiple vendors is compatibility problems.

Plumbing Isn’t an IT Skill

Data center operators are skilled in networking, cybersecurity, and power management—but they’re not plumbers or chemists. That’s where specialized vendors come in.

Switch-Over Valves: The Fail-Safe

Chilldyne uses switch-over valves to provide redundancy in cooling systems. These valves work like airplane safety mechanisms—if one system fails, the other kicks in without the servers even noticing.

29:44 – 35:22 Make sure liquid cooling is what you need

Scale Matters: The 100kW Threshold

Steve suggests that liquid cooling isn’t worth the hassle unless you’re dealing with over 100kW of compute power.

Fortune 500 and Beyond

Big companies are already there, but even mid-tier enterprises are starting to feel the heat. The power consumption of GPU-packed servers means that liquid cooling will soon be a necessity for many organizations.

The Rack Scale CDU That Wasn’t

Chilldyne has a design for a 50-100kW rack scale CDU, but no takers yet.

The Four-Inch Pipe Problem

Liquid cooling systems max out at around 1-2MW before the plumbing gets unwieldy. Keeping the system manageable is key.
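A back-of-the-envelope check shows why plumbing becomes the constraint at this scale. The sketch below assumes water as the coolant, a 10 K temperature rise, and a four-inch pipe with roughly 102 mm inside diameter. All three are illustrative assumptions, not Chilldyne specifications, and `pipe_velocity_m_s` is a hypothetical helper.

```python
import math

WATER_DENSITY = 998.0  # kg/m^3, approximate
WATER_CP = 4180.0      # J/(kg*K), approximate


def pipe_velocity_m_s(heat_w, delta_t_k, inner_diameter_m):
    """Mean water velocity needed to carry heat_w at a delta_t_k rise."""
    mass_flow = heat_w / (WATER_CP * delta_t_k)       # kg/s
    volume_flow = mass_flow / WATER_DENSITY           # m^3/s
    area = math.pi * (inner_diameter_m / 2.0) ** 2    # m^2
    return volume_flow / area


# Assumed 4-inch pipe, about 0.102 m inside diameter.
for megawatts in (0.5, 1.0, 2.0):
    v = pipe_velocity_m_s(megawatts * 1e6, 10.0, 0.102)
    print(f"{megawatts} MW -> {v:.1f} m/s in a 4-inch pipe")
```

Under these assumptions the velocity climbs from about 3 m/s at 1 MW to nearly 6 m/s at 2 MW. Piping designers generally try to hold water velocity to a few meters per second to limit pressure drop, noise, and erosion, so beyond this range the loop needs larger pipe or a bigger temperature rise.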

Electrical vs. Cooling Limits

We’re approaching the point where electrical limits are more problematic than cooling capacity.

35:22 – 40:16 Is it hot in here?

The Heat Disposal Conundrum

It’s not enough to cool the gear—you’ve got to deal with all that heat.

The Chip Temperature Tango

There’s a balance between cooling efficiency and chip performance. Design systems that can go colder if needed because the next-gen chips might perform 20% faster with lower temperatures.

Data Center Math is Getting Hard

Factor in cooling tower efficiency, GPU performance, fan speeds, and a whole lot more. The math behind today’s cooling solutions is critical for optimization.

HVAC and IT: Strange Bedfellows

HVAC engineers and IT teams used to operate separately, but liquid cooling is bringing them together.

Keeping the IT Guys in the Loop

Encourage collaboration between hardware providers and cooling experts to ensure the solution won’t melt under pressure.

40:16 – 43:35 Wrap-up

Get Started with Liquid Cooling Now

Steve recommends starting small but starting now. Get a system, run it, and learn from it before you’re in too deep.

The Danger of Overconfidence

Some companies are planning massive liquid-cooled data centers without ever testing smaller systems.

Lead Time: It’s Real

Lead times for liquid cooling systems can range from 16 to 52 weeks. So, better get those orders in early!

Spec Confusion with New Racks

NVIDIA’s new NVL racks are on the horizon, but specific water temperature and chemistry guidelines are still unclear.

Home Lab Mentality: Get Your Reps In

Steve encourages enterprises to take the “home lab” approach: start small, mess around with it, and learn.
