Procyon’s AI Text and Image Generation benchmarks are designed to make working with large language and image models easier. These tests are standardized, repeatable, and reflect real-world scenarios, so you don’t have to worry about the complexity of measuring performance.

AI text and image generation workloads can push hardware to its limits, so having consistent and practical benchmarks is essential. Whether you’re running tests on a high-performance GPU or a smaller neural processing unit, Procyon provides clear and actionable insights to help you understand exactly how your hardware performs.

By bridging the gap between advanced AI capabilities and practical performance measurement, Procyon gives users an intuitive way to see how well their systems handle today’s most demanding AI tasks.

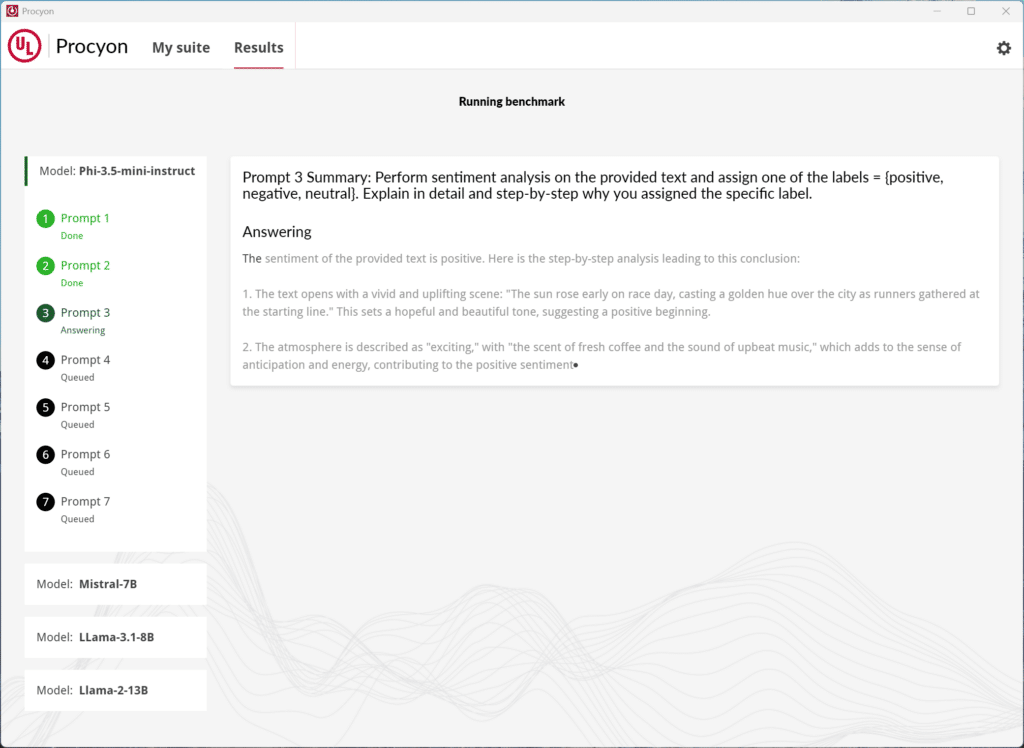

The Procyon AI Text Generation Benchmark

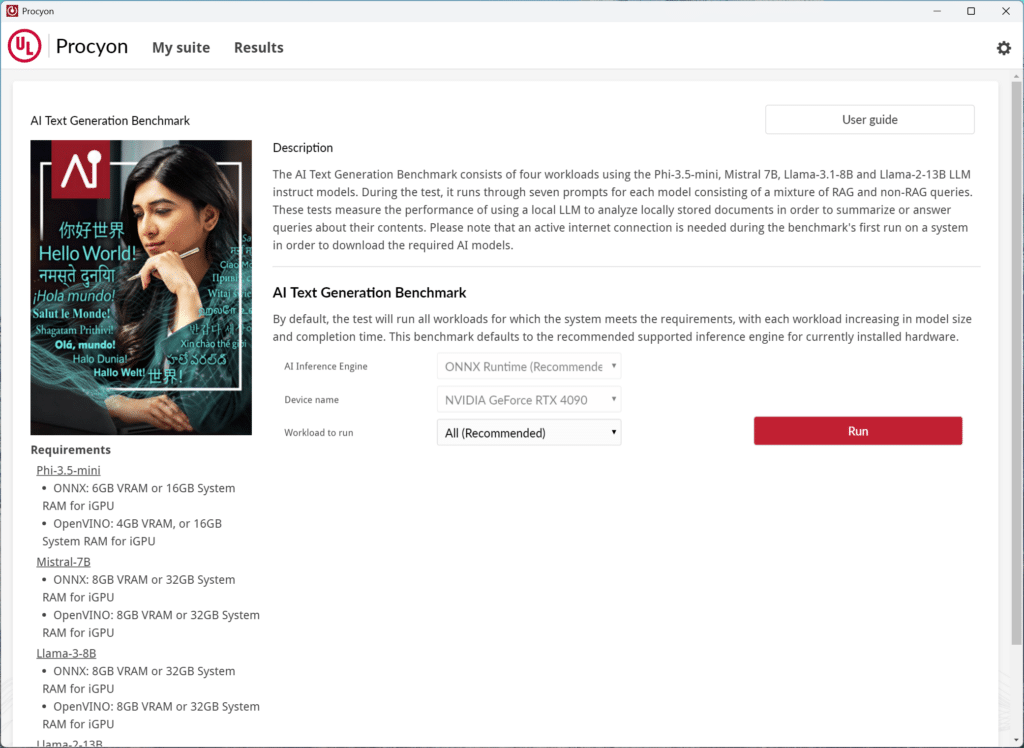

The Procyon AI Text Generation Benchmark evaluates how effectively a computer or device can run AI models, such as those behind tools like ChatGPT, to generate text. It checks how fast and smoothly the system can produce responses, write content, or summarize information when given prompts while also monitoring how much of the computer’s resources—like its processor, graphics card, and memory—are used during the process.

What is unique about Procyon’s benchmark is that it simplifies the complex task of evaluating local large language model (LLM) performance, making it accessible to users like enterprise professionals, hardware reviewers, and engineering teams. Traditional benchmarking requires significant storage, extensive downloads, and careful configuration to manage variables like quantization and token handling. Procyon streamlines this process with a structured testing framework and pre-packaged, optimized AI models that deliver consistent, repeatable results without the need for technical expertise or manual setup.

How Procyon AI Text Generation Works and Why It Matters

Procyon automates LLM testing by preloading four widely recognized models, enabling quick and reliable performance evaluation of inference tasks—the real-time generation of text based on input prompts. It monitors critical metrics such as tokens per second, latency, and hardware resource usage (CPU, GPU, and memory) during tests. The platform provides real-time insights and generates detailed post-test reports highlighting inference speed, potential resource bottlenecks, and overall efficiency.

These results help users optimize performance and allow enterprises to assess how well their hardware manages demanding AI workloads.

Real-World Testing Scenarios

Procyon’s benchmarking suite simulates realistic use cases with seven diverse test prompts, covering two key workloads:

| Test Type | Workload Focus | Input Format | Prominent Use Case Examples | Unique Features |

| Retrieval-Augmented Generation (RAG) | High-complexity retrieval | Tokenized data | Generating knowledge-based summaries | Tests retrieval integration accuracy |

| Creative Non-RAG Text | Free-form generation | Natural language text | Writing creative drafts, stories | Evaluates generative fluency |

- Retrieval-Augmented Generation (RAG): RAG tasks measure how effectively a model integrates external knowledge into its responses. These could include generating summaries or answering questions requiring data access outside the LLM’s training set.

- Creative Non-RAG: In free-form generation tasks, the focus is on evaluating text fluency, coherence, and creative output when the model relies solely on its internal training.

Procyon reflects real-world applications by covering both tasks, including enterprise AI workflows (knowledge retrieval) and creative content generation (free-form tasks).

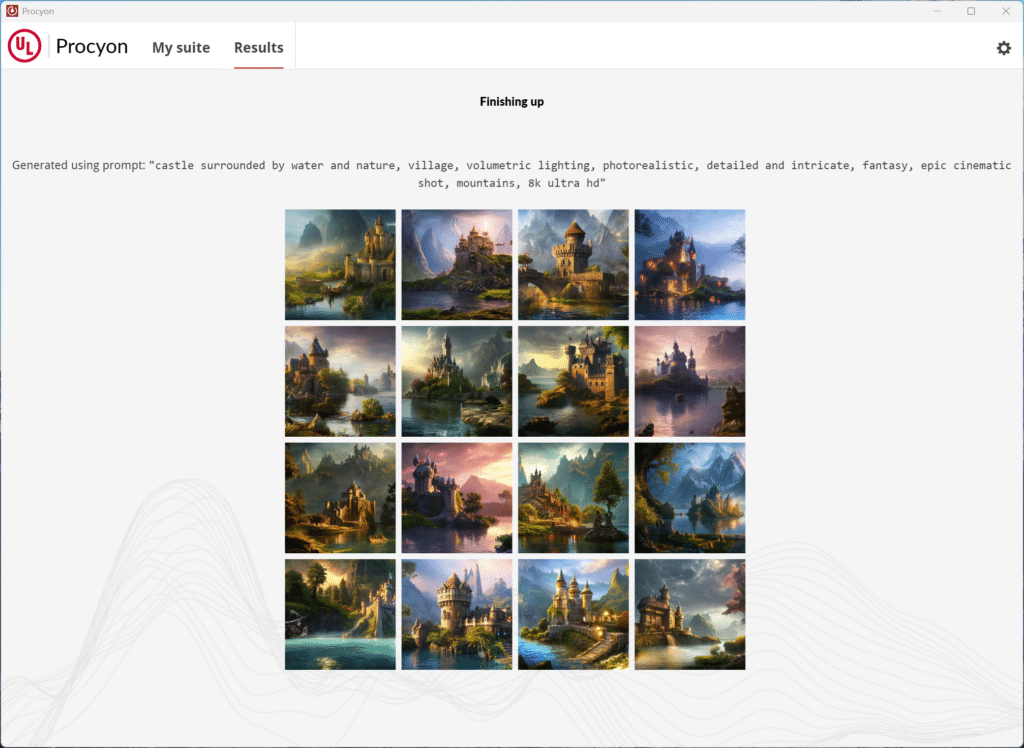

Procyon AI Image Generation Benchmark

Like the text version, the Procyon AI Image Generation Benchmark measures how efficiently a computer or device handles AI-driven image generation tasks, such as transforming text prompts into high-quality images. It was developed with input from industry leaders to evaluate a range of hardware—from low-power neural processing units (NPUs) to high-performance GPUs—using Stable Diffusion models, widely used for text-to-image generation by professionals and everyday users.

What Makes Procyon AI Image Generation Benchmark Unique?

Procyon’s image benchmark offers three distinct tests, each tailored to different hardware capabilities, ensuring a comprehensive evaluation for a variety of devices:

- Stable Diffusion XL (FP16): Designed for high-end GPUs, this is the most demanding test. It generates 1024×1024 resolution images with 100 steps.

- Stable Diffusion 1.5 (FP16): A balanced workload for mid-range GPUs, producing 512×512 resolution images with a batch size of 4 and 100 steps.

- Stable Diffusion 1.5 (INT8): An optimized test for low-power devices like NPUs, focusing on 512×512 images with lighter settings of 50 steps and a single image batch.

How Procyon AI Image Generation Works and Why It Matters

Procyon evaluates your system’s performance by measuring critical factors like image generation speed, GPU utilization, and overall resource efficiency. It tracks real-time metrics such as GPU temperature, clock speeds, and memory usage while also analyzing the quality of the generated images. Procyon also supports multiple inference engines, including NVIDIA TensorRT, Intel OpenVINO, and ONNX with DirectML, allowing it to run seamlessly across different platforms and hardware configurations.

At the end of testing, Procyon generates detailed reports highlighting performance scores, resource bottlenecks, and the quality of the outputs, giving users a clear understanding of how well their hardware handles the computational demands of text-to-image tasks. This is great for a range of use cases, whether you’re a developer fine-tuning AI engines, a hardware reviewer comparing systems, or an enterprise optimizing workflows.

The benchmark ensures reliable comparisons across hardware by standardizing the use of text prompts and Stable Diffusion models. The accompanying reports allow users to review overall performance scores and the quality of generated images, providing a complete picture of how their systems handle the computational demands of text-to-image tasks.

Benchmarking Tests

When evaluating systems for AI workloads, the hardware can vary widely, from portable, consumer-grade laptops to high-end workstations designed for professional environments. Each configuration has strengths and limitations, making it essential to test across diverse platforms to understand how different hardware profiles handle demanding AI tasks.

For this analysis, we used the Procyon benchmarks on various systems, including a gaming laptop, an enterprise-focused workstation, and two versatile professional devices. The variety allowed us to observe performance differences influenced by GPU capability, memory architecture, storage solutions, and processor types.

- Alienware Laptop: Running Windows 11 Home, the Alienware laptop is a consumer-grade laptop designed primarily for gaming but well-suited for AI workloads due to its equipped NVIDIA RTX 4090 GPU. Its Intel Core i9-14900KF processor and 32GB of DDR4 memory ensure solid computing power, while a Samsung PM9A1 NVMe SSD handles the storage.

- Precision 5860 Tower: Built for enterprise-level performance, the Precision 5860 Tower features NVIDIA’s RTX 6000 GPU, a professional-grade powerhouse tailored for intensive workloads like AI and 3D rendering. Its Intel Xeon w7-2595X CPU offers workstation-class processing capabilities, complemented by 128GB of DDR5 RAM.

- Lenovo ThinkPad: The Lenovo ThinkPad balances portability and professional-grade performance, making it ideal for users who need mobility without compromising capability. It has an NVIDIA RTX A4000 GPU, a workstation-class card designed for AI and graphical workloads. The system is powered by an Intel Xeon W-11955M processor, supported by 32GB of DDR4 memory. The storage solution is a Samsung 980 Pro SSD, a popular NVMe drive.

- Lenovo ThinkStation: The Lenovo ThinkStation is a professional-grade workstation designed to handle the heaviest computational loads. It’s engineered for peak AI inference performance with an NVIDIA RTX A5500 GPU and an Intel Xeon Gold 5420+ CPU. Backed by 256GB of DDR5 memory, it offers immense multitasking and data-handling capabilities. The system uses a Kioxia Exceria Pro SSD, a high-endurance, high-speed drive that meets the demands of large-scale data processing. Like the others, it runs on Windows 11 Pro.

Testing these systems with the Procyon AI benchmarks allows us to see how these tools work in action while demonstrating how different types of hardware handle AI tasks. Whether it’s a gaming laptop with a top-tier consumer GPU or a professional workstation built for heavy-duty workloads, each setup offers something unique.

AI Text Generation

| System | Model | Overall Score | Output Tokens/s |

|---|---|---|---|

| Alienware Procyon (NVIDIA RTX 4090, ONNXRuntime-DirectML 1.20.0) |

PHI3.5 | 3031 | 226.56 tokens/s |

| Mistral 7B | 3507 | 171.9 tokens/s | |

| LLAMA3.1 | 3487 | 142.26 tokens/s | |

| LLAMA2 | 3527 | 90.59 tokens/s | |

| Precision 5860 Tower (NVIDIA RTX 6000, ONNXRuntime-DirectML 1.20.0) |

PHI3.5 | 2245 | 180.472 tokens/s |

| Mistral 7B | 2725 | 146.639 tokens/s | |

| LLAMA3.1 | 2692 | 118.806 tokens/s | |

| LLAMA2 | 2733 | 77.326 tokens/s | |

| Lenovo Thinkpad (Intel UHD Graphics(iGPU), Intel OpenVINO 2024.5.0) |

PHI3.5 | 133 | 8.98 tokens/s |

| Mistral 7B | 108 | 5.54 tokens/s | |

| LLAMA3.1 | 107 | 2.93 tokens/s | |

| LLAMA2 | 100 | 8.98 tokens/s | |

| Lenovo Thinkstation (NVIDIA RTX A5500, ONNXRuntime-DirectML 1.20.0) |

PHI3.5 | 1551 | 99.43 tokens/s |

| Mistral 7B | 1556 | 64.18 tokens/s | |

| LLAMA3.1 | 1580 | 59.55 tokens/s | |

| LLAMA2 | 1644 | 37.38 tokens/s |

AI Image Generation

| System | Benchmark | Overall Score | Image Generation Speed (/s) |

|---|---|---|---|

| Alienware Procyon (NVIDIA RTX 4090, NVIDIA TensorRT) |

Stable Diffusion 1.5 (FP16) | 5995 | 1.043 s/image |

| Stable Diffusion 1.5 (INT8) | 49692 | 0.629 s/image | |

| Stable Diffusion XL (FP16) | 4944 | 7.584 s/image | |

| Precision 5860 Tower (NVIDIA RTX 6000, NVIDIA TensorRT) |

Stable Diffusion 1.5 (FP16) | 44169 | 0.708 s/image |

| Stable Diffusion 1.5 (INT8) | 3094 | 12.120 s/image | |

| Stable Diffusion XL (FP16) | 4182 | 1.494 s/image | |

| Lenovo Thinkpad (NVIDIA RTX A4000, TensorRT) |

Stable Diffusion 1.5 (FP16) | 1308 | 4.778 s/image |

| Stable Diffusion 1.5 (INT8) | 15133 | 2.065 s/image | |

| Stable Diffusion XL (FP16) | 858 | 43.702 s/image | |

| Lenovo Thinkstation (NVIDIA RTX A5500, NVIDIA TensorRT) |

Stable Diffusion 1.5 (FP16) | 2401 | 2.603 s/image |

| Stable Diffusion 1.5 (INT8) | 25489 | 1.226 s/image | |

| Stable Diffusion XL (FP16) | 2000 | 18.747 s/image |

Stay tuned to this page as we continue to run these new tests across a wide range of systems that come through the StorageReview lab.

Amazon

Amazon