There’s little doubt that Amazon is the leader in cloud services, a wide variety of which are offered through its EC2 (Elastic Compute Cloud) web service. With a relatively simple provisioning process and the ability to easily scale instances and storage up or down, EC2 aims to deliver all the promise of the cloud at cost-effective price points. For some, though, the cloud isn’t just about flexibility and ease of deployment; it’s about performance. The business benefits of being able to spin powerful environments up or down for critical applications like analytics can often vastly outweigh the expense of doing so through OPEX instead of the long-term investment of CAPEX. To that end, in May Amazon made the i3 bare metal instance family generally available, providing direct access to the CPU and memory resources of the underlying server.

i3.metal instances are built on the Nitro system, which is a collection of AWS-built hardware offload and server protection components that “securely provide high performance networking and storage resources to EC2 instances.” i3.metal instances also take advantage of all of the other services AWS Cloud offers like Elastic Block Store (EBS), which we leveraged as part of this review. The instances also feature up to 15.2TB of NVMe SSD-backed instance storage, as well as 2.3 GHz Intel Xeon processors offering 36 hyper-threaded cores (72 logical processors) and 512 GiB of memory. On the fabric side, the i3.metal instances deliver up to 25 Gbps of aggregate network bandwidth, driving high networking throughput and lower latency via Elastic Network Adapter (ENA)-based Enhanced Networking.

Within EC2, there are a number of instance types. The i3 instances fall within the “Storage Optimized” category, with the i3.metal instances taking the mantle as the highest performing of that group. The table below highlights the family and the configuration of the instance types.

| Model | vCPU | Memory (GiB) | Networking Performance | Storage (TB) |
| --- | --- | --- | --- | --- |
| i3.large | 2 | 15.25 | Up to 10 Gigabit | 1 x 0.475 NVMe SSD |
| i3.xlarge | 4 | 30.5 | Up to 10 Gigabit | 1 x 0.95 NVMe SSD |
| i3.2xlarge | 8 | 61 | Up to 10 Gigabit | 1 x 1.9 NVMe SSD |
| i3.4xlarge | 16 | 122 | Up to 10 Gigabit | 2 x 1.9 NVMe SSD |
| i3.8xlarge | 32 | 244 | 10 Gigabit | 4 x 1.9 NVMe SSD |
| i3.16xlarge | 64 | 488 | 25 Gigabit | 8 x 1.9 NVMe SSD |
| i3.metal | 72 | 512 | 25 Gigabit | 8 x 1.9 NVMe SSD |

i3.metal instances are available in the AWS US East (N. Virginia), US East (Ohio), US West (Oregon), Europe (Frankfurt) and Europe (Ireland) Regions, and can be purchased as On-Demand instances, Reserved instances (3-Yr, 1-Yr, and Convertible), or as Spot instances. For the purposes of this review, we tested in the US East (N. Virginia) Region, using both local NVMe storage and EBS block volumes.

Performance

VDBench Workload Analysis

To evaluate the performance of the EC2 i3.metal instance, we leveraged VDBench installed locally to test both EBS (30x1TB) and NVMe (8×1.7TB) storage. With both storage types, we allocated 12% of each device and grouped them together in aggregate to look at peak system performance with a moderate amount of data locality.

These workloads offer a range of testing profiles, including “four corners” tests, common database transfer size tests, and trace captures from different VDI environments. All of these tests leverage the common VDBench workload generator, with a scripting engine to automate and capture results over a large compute testing cluster. This allows us to repeat the same workloads across a wide range of storage devices, including flash arrays and individual storage devices.

Profiles:

- 4K Random Read: 100% Read, 128 threads, 0-120% iorate

- 4K Random Write: 100% Write, 64 threads, 0-120% iorate

- 64K Sequential Read: 100% Read, 16 threads, 0-120% iorate

- 64K Sequential Write: 100% Write, 8 threads, 0-120% iorate

- Synthetic Database: SQL and Oracle

- VDI Linked Clone Traces
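As a rough illustration, the four corners profiles above can be captured as plain data, with the “0-120% iorate” sweep expressed as a list of target rates. This is not VDBench syntax and not our actual scripting engine. The `Profile` class, the `iorate_targets` helper, the 10% step size, and the 64,000 IOPS maximum are all hypothetical, and the sweep is assumed to be a percentage of a previously measured maximum.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    name: str
    block_size_kib: int
    read_pct: int      # 100 = all reads, 0 = all writes
    random: bool       # True = random access, False = sequential
    threads: int


# Values copied from the profile list above.
FOUR_CORNERS = [
    Profile("4K Random Read", 4, 100, True, 128),
    Profile("4K Random Write", 4, 0, True, 64),
    Profile("64K Sequential Read", 64, 100, False, 16),
    Profile("64K Sequential Write", 64, 0, False, 8),
]


def iorate_targets(max_iops: float, step_pct: int = 10,
                   top_pct: int = 120) -> list[int]:
    """Target IOPS at each sweep point, from step_pct up to top_pct of max_iops.

    The step size is an assumption; the text only states the 0-120% range.
    """
    return [round(max_iops * pct / 100)
            for pct in range(step_pct, top_pct + 1, step_pct)]


# 64,000 is an illustrative maximum, not a measured value.
targets = iorate_targets(64_000)
for profile in FOUR_CORNERS:
    print(f"{profile.name}: {profile.threads} threads, {len(targets)} sweep points")
```

Pushing the targets past 100% is what exposes the point where latency climbs without any further gain in IOPS.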

We look at both EBS and NVMe in this review. Since there is a dramatic performance difference, we have separated the results into two charts (the latency would be so far apart that the charts would be very difficult to read).

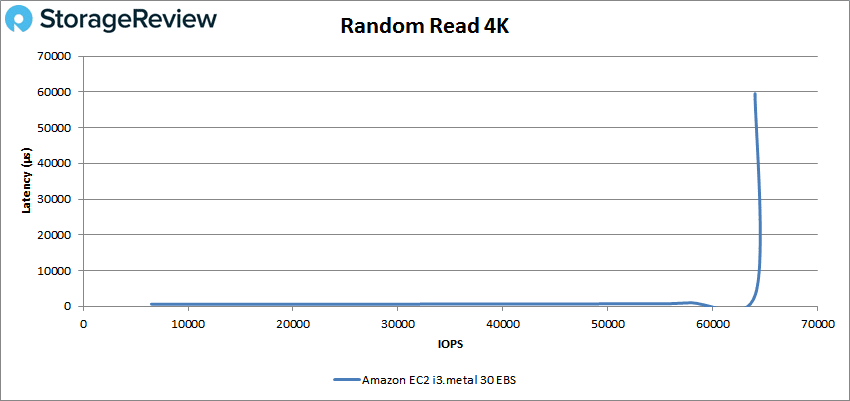

Our first test looks at 4K random read. Here the EBS instance had sub-millisecond latency until the very end of the run. At about 64K IOPS, latency spiked to 59.46ms, with peak performance of 64,047 IOPS.

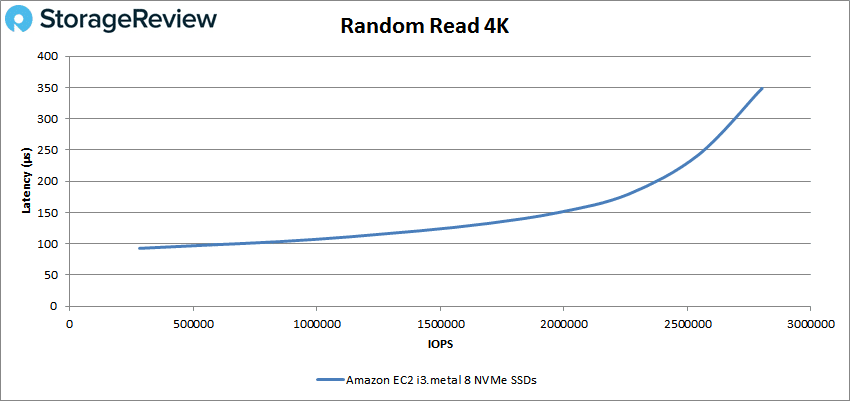

Looking at the NVMe 4K peak random read, we see the instance perform much better. The instance peaked at 2,802,904 IOPS with a latency of 348μs.

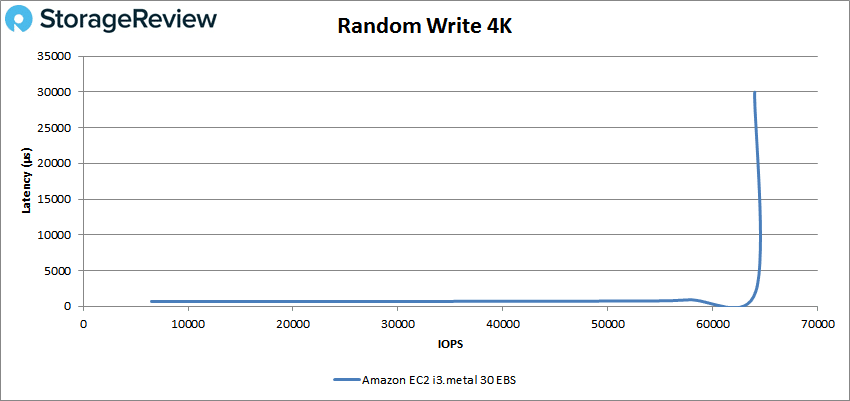

4K random write with EBS looked much like 4K read with EBS. The instance broke 1ms a bit earlier, at about 60K IOPS, and peaked at 64,003 IOPS with a latency of 29.97ms.

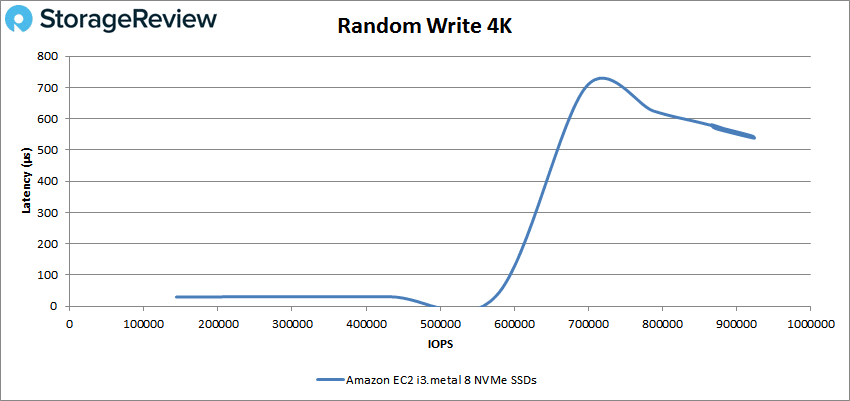

For the NVMe version of the instance, there was a bit of a spike in latency but still under 1ms. The instance peaked at 920,975 IOPS with a latency of 545μs.

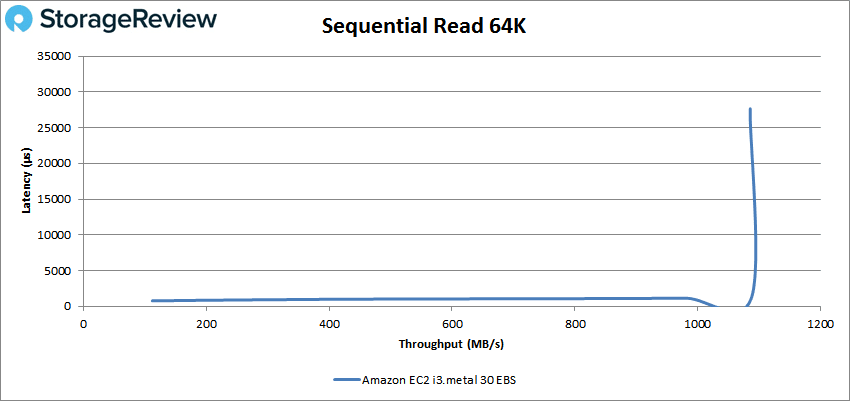

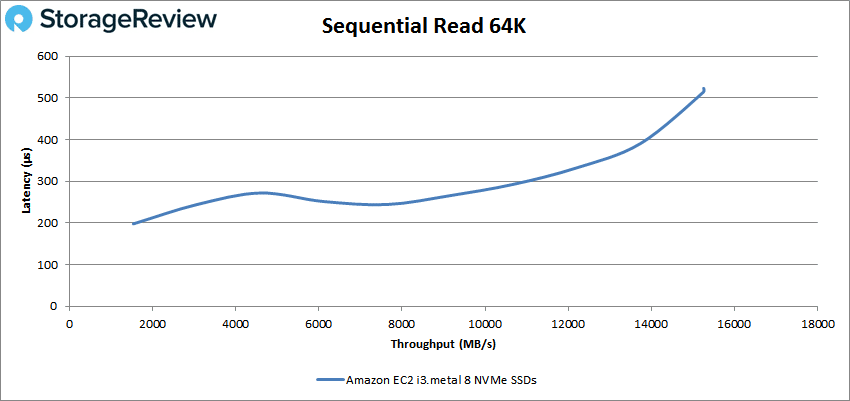

Switching over to sequential tests, for the EBS 64K peak read performance, the instance had sub-millisecond latency until about 7K IOPS or about 450MB/s and peaked at 17,360 IOPS or 1.08GB/s with a latency of 27.65ms.

64K sequential read with the NVMe gave us peak performance of 244,037 IOPS or 15.25GB/s with a latency of 514μs.
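The throughput figures quoted alongside IOPS in the 64K tests follow directly from the transfer size. A minimal sketch, assuming “64K” means 64KiB and that the GB/s values are MiB/s divided by 1,000 (an assumption on our part that happens to reproduce the quoted numbers); the function name is ours:

```python
def throughput_gbps(iops: int, xfer_kib: int = 64) -> float:
    """Convert IOPS at a fixed transfer size to throughput.

    Assumes xfer_kib is in KiB and reports MiB/s divided by 1,000.
    """
    mib_per_s = iops * xfer_kib / 1024
    return mib_per_s / 1000


# Peak 64K sequential read IOPS quoted above.
ebs_read = throughput_gbps(17_360)
nvme_read = throughput_gbps(244_037)

print(f"EBS 64K read:  {ebs_read:.3f} GB/s")
print(f"NVMe 64K read: {nvme_read:.3f} GB/s")
```

These work out to roughly 1.085 and 15.25, in line with the 1.08GB/s and 15.25GB/s quoted for the two peaks.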

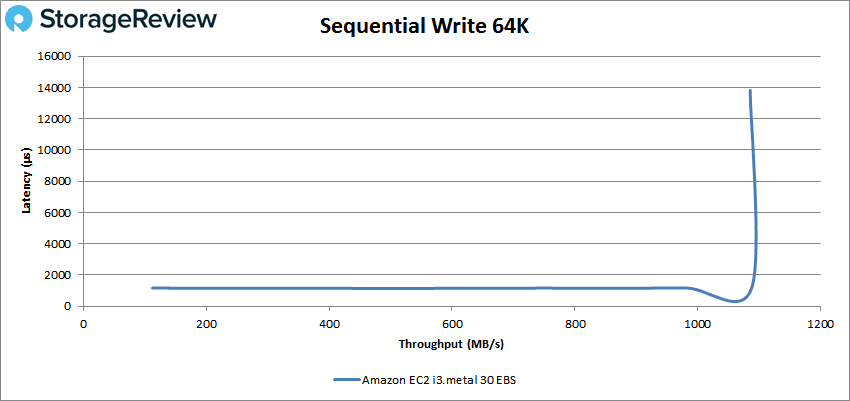

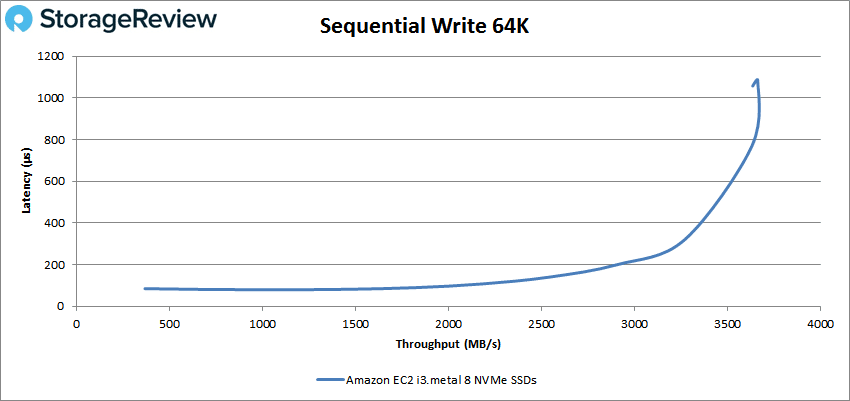

With 64K write with EBS, the instance started above 1ms and peaked at 17,359 IOPS or 1.08GB/s with a latency of 13.8ms.

The 64K sequential write is the first time we see the NVMe instance go over 1ms. The instance peaked at 58,572 IOPS or 3.66GB/s with a latency of 1.08ms.

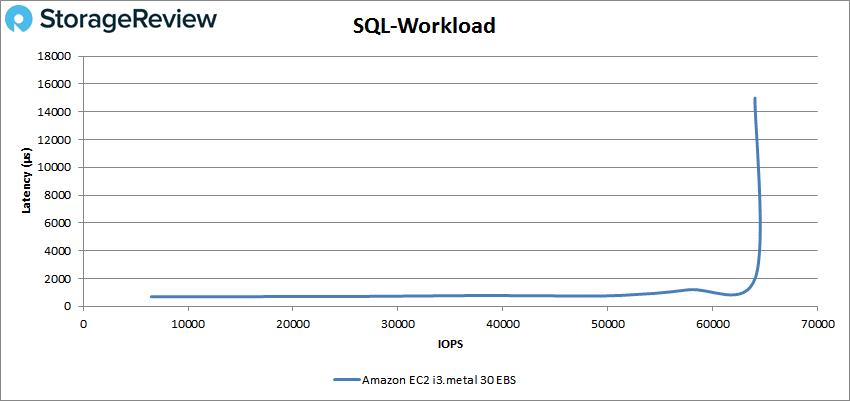

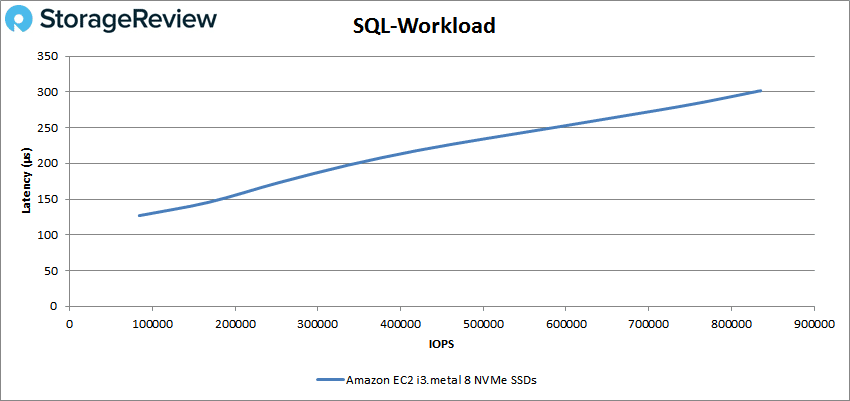

Switching over to our SQL workloads, the EBS instance had sub-millisecond latency performance until around 55K IOPS and peaked at 64,036 IOPS with a latency of 14.93ms.

For the NVMe version of the instance, we saw peak performance of 834,231 IOPS with a latency of 302μs for the SQL test.

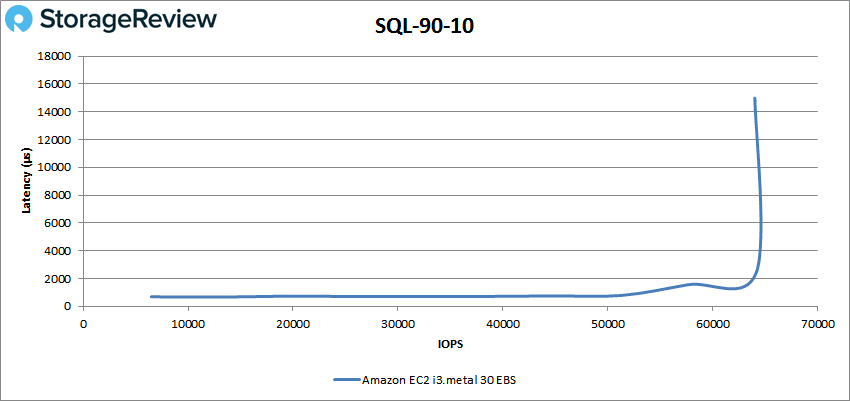

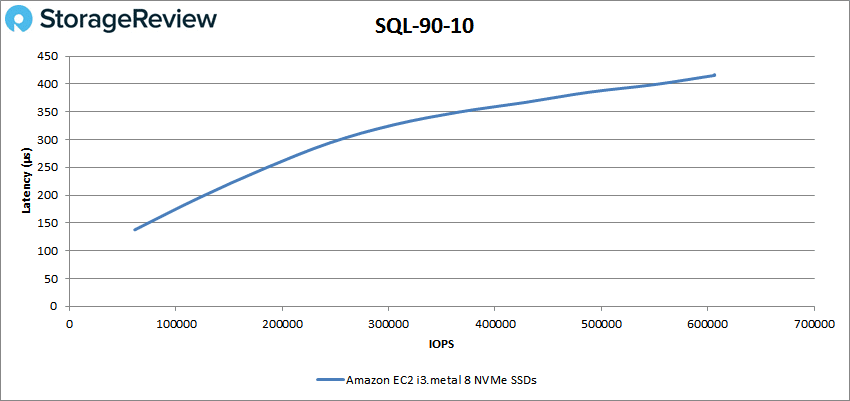

For the SQL 90-10 with EBS, the instance again broke 1ms latency around 55K IOPS and went on to peak at 64,036 IOPS with 14.99ms latency.

The NVMe version of the instance in the SQL 90-10 had a peak performance of 605,150 IOPS and a latency of 415μs.

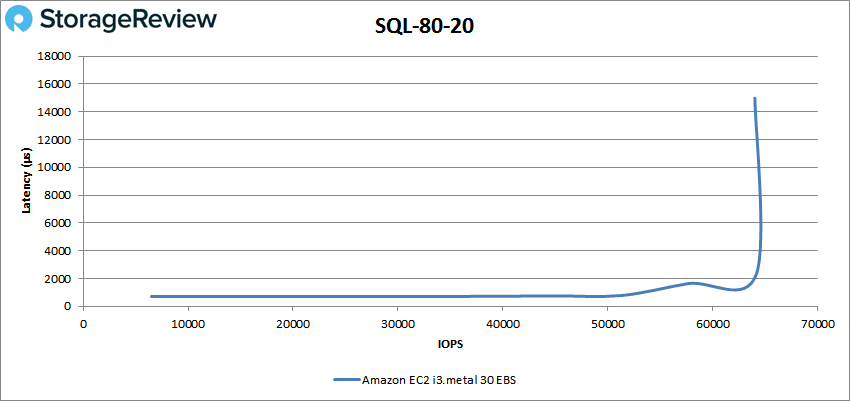

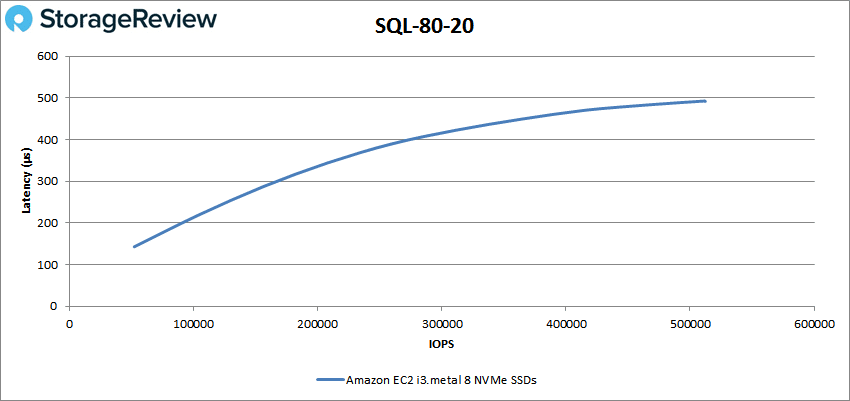

The SQL 80-20 with EBS again gave nearly the same numbers with the sub-millisecond latency ending around 55K IOPS and a peak of 64,036 IOPS with 14.93ms latency.

The SQL 80-20 with NVMe had the instance hit numbers of 511,840 IOPS and a latency of 493μs.

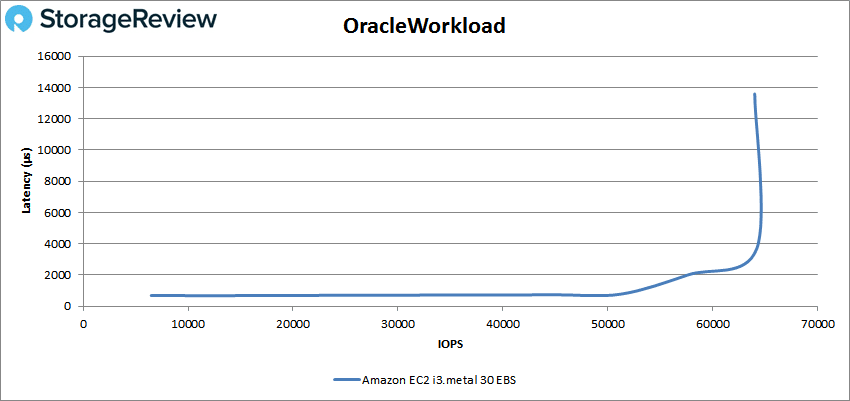

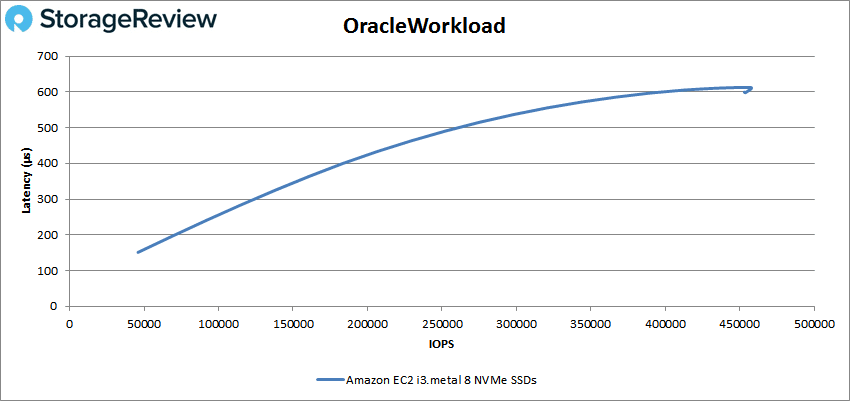

The Oracle tests for EBS showed the same oddly uniform peak, with nearly identical numbers. For Oracle, the instance hit 64,036 IOPS with a latency of 13.6ms. The instance had sub-millisecond latency until around 55K IOPS.

With NVMe, the instance hit 457,372 IOPS with a latency of 613μs in the Oracle test.

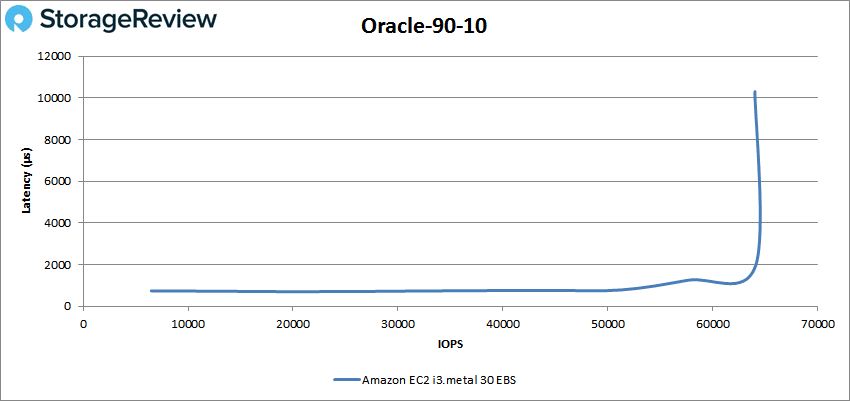

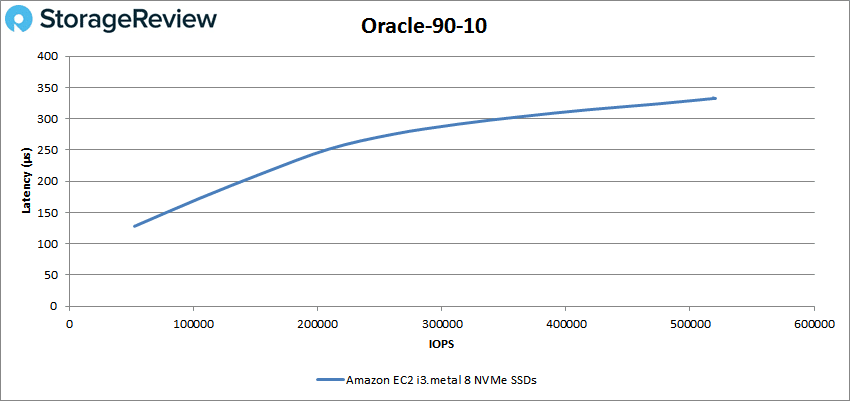

Oracle 90-10 had the EBS instance peak at 64,035 IOPS with a latency of 10.3ms. The instance broke 1ms at around 55K IOPS.

The NVMe version of the instance in the Oracle 90-10 was able to hit 520,448 IOPS with a latency of 333μs.

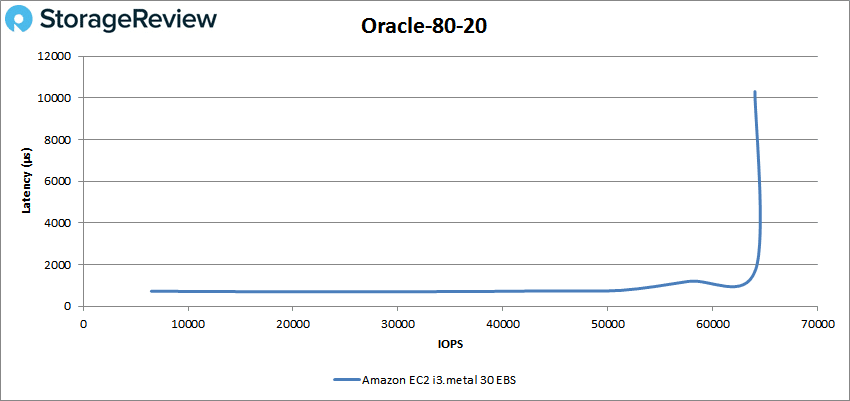

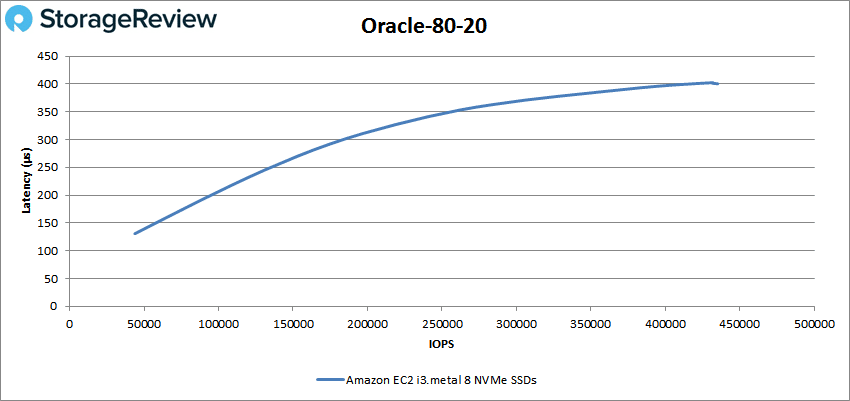

Oracle 80-20 had the EBS break 1ms around 55K IOPS and peak at 64,036 IOPS with a latency of 10.3ms.

The instance with NVMe had peak performance of 435,265 IOPS with a latency of 400μs.

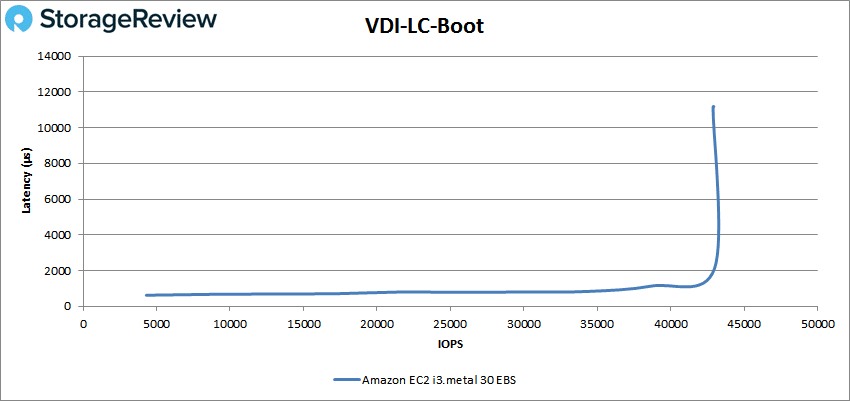

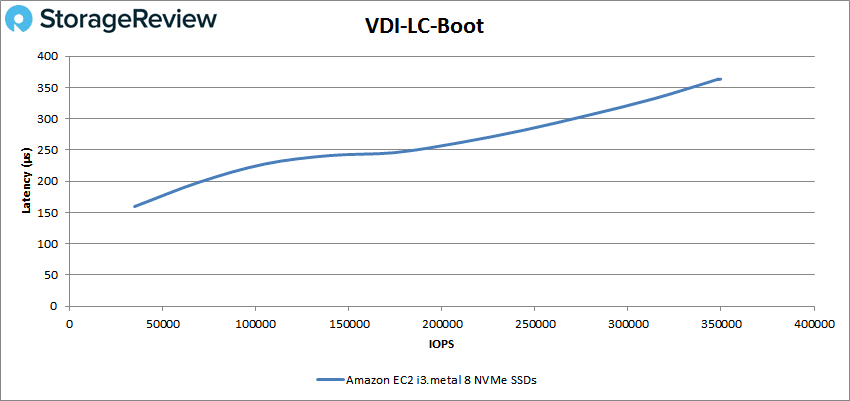

Next we look at our VDI Linked Clone (LC) tests. Starting with Boot, the EBS version of the instance had sub-millisecond latency until about 35K IOPS and peaked at 42,893 IOPS with a latency of 11.2ms.

The NVMe version of the instance was able to peak at 349,799 IOPS and a latency of 363μs in our VDI LC Boot test.

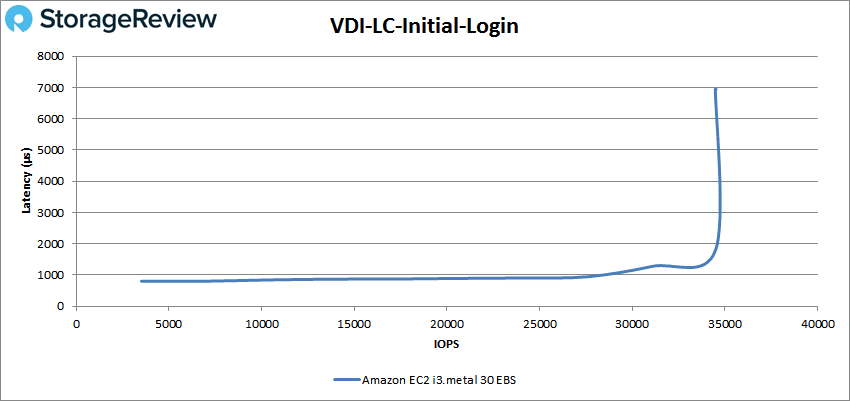

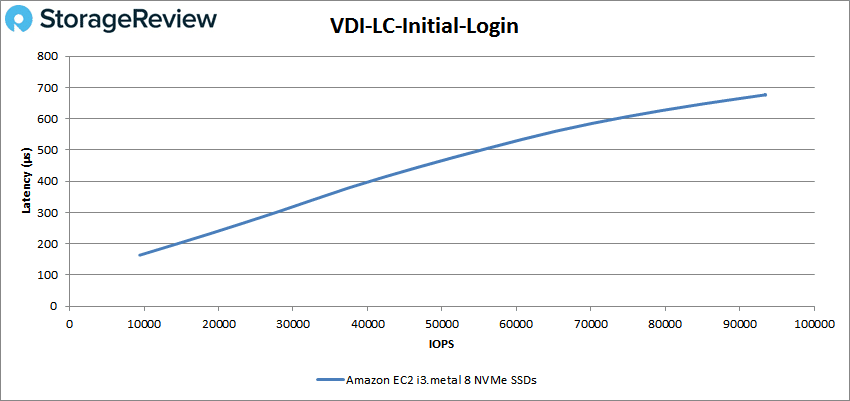

For the VDI LC Initial Login, the EBS instance straddled the line of 1ms for some time before falling over around 31K IOPS. The instance peaked at 34,486 IOPS and 6.95ms latency.

With VDI LC Initial Login, the NVMe instance peaked at 93,405 IOPS with a latency of 677μs.

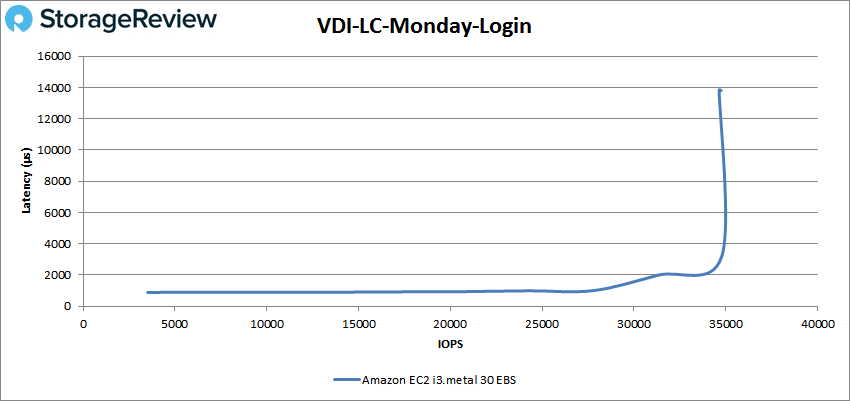

With the VDI LC Monday Login, once again the EBS instance flirted with 1ms for some time before taking the plunge around 25K IOPS and going on to peak at 34,643 IOPS with a latency of 13.85ms.

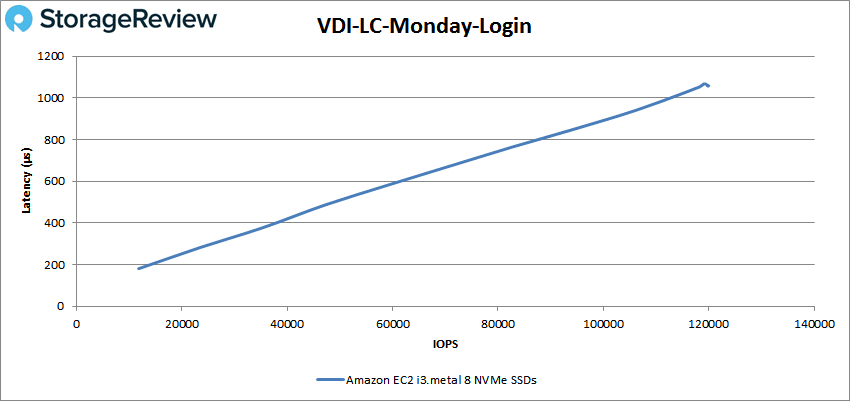

And finally, our VDI LC Monday Login with NVMe had the instance peak at 119,615 IOPS with a latency of 1.1ms.

Conclusion

Amazon’s cloud services are typically thought of as the most versatile around. While the compute and storage options are certainly varied, Amazon also offers a plethora of performance options on its EC2 web service, including its i3 bare metal instance. These instances leverage the AWS Nitro system of purpose-built hardware and software. The i3.metal instances provide better performance through direct access to the CPUs and memory while still offering users the ability to take advantage of other features such as AWS EBS attached storage and local NVMe storage.

For performance, we tested both block storage (EBS) and NVMe. This is intended to give readers an idea of what to expect in terms of options, rather than to show one being better than the other (NVMe storage typically performs better and has much lower latency, which is why there are two sets of charts). Looking at EBS, we saw a pattern where the instance went above 1ms and then, shortly after, ramped up in latency and finished. Throughout our testing, EBS peaked at around 64K IOPS, which is the maximum allowed for EBS. This included the 4K tests and all three SQL and Oracle tests. The instance slowed a bit for our VDI tests. Amazon does indicate that it’s possible to tweak settings to attain more than the 64K prescribed IOPS, but the processes for getting there aren’t what most customers would experience, so we did not pursue this path.
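The way the EBS results converge on the same ceiling regardless of workload is easy to see by comparing each reported peak against the cap. The peaks below are copied from the results above; treating 64,000 as the exact cap and using a 1% tolerance are assumptions made purely for illustration.

```python
EBS_CAP_IOPS = 64_000  # assumed exact value of the "64K" limit

# Peak EBS IOPS per test, as reported in the results above.
ebs_peaks = {
    "4K random read": 64_047,
    "4K random write": 64_003,
    "SQL": 64_036,
    "SQL 90-10": 64_036,
    "SQL 80-20": 64_036,
    "Oracle": 64_036,
    "Oracle 90-10": 64_035,
    "Oracle 80-20": 64_036,
    "VDI LC Boot": 42_893,
    "VDI LC Initial Login": 34_486,
    "VDI LC Monday Login": 34_643,
}


def at_cap(peak: int, cap: int = EBS_CAP_IOPS, tolerance: float = 0.01) -> bool:
    """True when a peak lands within `tolerance` (as a fraction) of the cap."""
    return abs(peak - cap) / cap <= tolerance


capped = [name for name, peak in ebs_peaks.items() if at_cap(peak)]
below = [name for name in ebs_peaks if name not in capped]

print("At the cap:", ", ".join(capped))
print("Below the cap:", ", ".join(below))
```

Under these assumptions, the two 4K tests and all six database tests sit at the cap, while the three VDI tests finish below it.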

For our NVMe testing, we saw results that were more in line with our normal benchmarks. Highlights here included random 4K read performance of 2.8 million IOPS, 4K write of 920K IOPS, 64K read of 15.25GB/s, and SQL performance of over 500K IOPS in all three tests (with the SQL test at around 834K IOPS). Oracle tests also had a strong showing with the NVMe, with results between 435K IOPS and 520K IOPS. Our VDI Linked Clone showed strong Boot performance with roughly 350K IOPS. The only disappointment here is that customers can’t opt for more local NVMe in the i3.metal instances.

Overall, the i3.metal instance gave us exactly what Amazon promised it would. That’s reassuring for customers who want to know they’re going to receive the service level promised. This is, of course, not without cost. As with any cloud deployment, the concern is going to be ease of use and results in this cloud vs. other cloud providers vs. on prem. That said, customers with workloads that can make do with a lower IOPS level can save quite a bit of money. It really comes down to the ultimate requirements for the particular instance. To that end, the i3.metal instances are great for mainstream applications that won’t or can’t exceed the performance limits i3.metal offers and don’t need the IOPS an on-prem all-flash array would deliver. Also, if you’re already in the Amazon family, the move to bare metal is familiar, and there’s probably a cost benefit to consuming several AWS programs in bulk.
