The Dell EMC PowerEdge R6525 is a 1U, dual-socket server designed for intensive, dense computing. The 5 at the end of the product number indicates that this server is an AMD EPYC model. In this case, the R6525 leverages two AMD EPYC CPUs for up to 128 cores.

Like most PowerEdge servers, the R6525 is highly customizable. The server aims to strike a balance of features while bringing innovations to dense computing environments. To drive performance, the R6525 offers 32 DIMM slots that can be populated with up to 4TB of 3,200MT/s LRDIMM (or 2TB of RDIMM) to complement the two CPUs. The system also offers up to three PCIe Gen4 expansion slots and support for an OCP mezzanine card.
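The memory maximums above follow from simple arithmetic. The minimal sketch below derives the per-DIMM size each maximum implies and a theoretical peak bandwidth. It assumes eight DDR4 channels per 2nd Gen EPYC socket and an 8-byte data path per channel, and it ignores any speed reduction when running two DIMMs per channel, so treat the bandwidth figure as a ceiling rather than a measurement.

```python
# Sketch: per-DIMM capacity and theoretical peak memory bandwidth for a
# 2-socket, 32-slot system. CHANNELS_PER_SOCKET is an assumption based on
# 2nd Gen AMD EPYC; it is not taken from the spec sheet.
DIMM_SLOTS = 32
SOCKETS = 2
CHANNELS_PER_SOCKET = 8      # assumption: 2nd Gen EPYC
TRANSFER_RATE_MTS = 3200     # DDR4-3200
BYTES_PER_TRANSFER = 8       # 64-bit data path per channel (ECC bits excluded)


def per_dimm_gb(max_capacity_tb: int) -> int:
    """DIMM size needed in every slot to reach the stated maximum (1TB = 1024GB)."""
    return max_capacity_tb * 1024 // DIMM_SLOTS


def peak_bandwidth_gbs() -> float:
    """Theoretical peak across all channels and sockets, in decimal GB/s."""
    per_channel = TRANSFER_RATE_MTS * 1e6 * BYTES_PER_TRANSFER / 1e9  # 25.6 GB/s
    return per_channel * CHANNELS_PER_SOCKET * SOCKETS


if __name__ == "__main__":
    print("LRDIMM size for 4TB:", per_dimm_gb(4), "GB")  # 128 GB
    print("RDIMM size for 2TB:", per_dimm_gb(2), "GB")   # 64 GB
    print("DIMMs per channel:", DIMM_SLOTS // (SOCKETS * CHANNELS_PER_SOCKET))  # 2
    print(f"Peak bandwidth: {peak_bandwidth_gbs():.1f} GB/s")  # 409.6 GB/s
```

In other words, the 4TB and 2TB figures correspond to filling all 32 slots with 128GB LRDIMMs or 64GB RDIMMs, respectively.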

Looking at storage, there are a number of backplane configuration options. For more compute-heavy needs, the R6525 may be configured with a 4 x 3.5” SAS/SATA backplane or the small form factor 8 x 2.5” SAS/SATA option. For those who can benefit from more storage performance, the system supports a 12 x 2.5” SATA/SAS/NVMe configuration that uses 10 front bays and two in the rear (at the cost of PCIe expansion). Dell also supports the BOSS card with twin M.2 SSDs for boot or, alternatively, the dual-SD card adapter. Note that the R6525 supports PCIe Gen4 in all the NVMe bays; however, early versions of the server shipped with only Gen3 support in the front bays. These can be upgraded to Gen4 via a cabling kit.

The Dell EMC PowerEdge R6525 uses OpenManage for system management. We have covered OpenManage at length in the past, but as a recap, this management system is built for PowerEdge servers to deliver what the company calls an efficient and comprehensive solution through tailored, automated, and repeatable processes. OpenManage can automate server lifecycle management with scripting via the iDRAC RESTful API with Redfish conformance. With the OpenManage Enterprise console, users can simplify and centralize one-to-many management, and they can use OpenManage Mobile and PowerEdge Quick Sync 2 on their mobile devices.
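As an illustration of the scripting the iDRAC Redfish interface enables, here is a minimal sketch that reads a system summary using only the Python standard library. The host address, credentials, and the `System.Embedded.1` resource ID are assumptions for illustration; the property names (`PowerState`, `ProcessorSummary`, `MemorySummary`) come from the DMTF Redfish ComputerSystem schema, but verify them against your own iDRAC firmware.

```python
# Sketch: read a PowerEdge system summary over the iDRAC Redfish API.
# Host, credentials, and the "System.Embedded.1" ID are assumptions.
import base64
import json
import ssl
import urllib.request


def system_url(host: str, system_id: str = "System.Embedded.1") -> str:
    """Build the Redfish ComputerSystem URL for an iDRAC host."""
    return f"https://{host}/redfish/v1/Systems/{system_id}"


def fetch_json(url: str, user: str, password: str, verify_tls: bool = True) -> dict:
    """GET a Redfish resource with HTTP Basic auth and decode the JSON body."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(
        url, headers={"Authorization": f"Basic {token}", "Accept": "application/json"}
    )
    ctx = ssl.create_default_context()
    if not verify_tls:  # lab iDRACs often present self-signed certificates
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(req, context=ctx, timeout=30) as resp:
        return json.load(resp)


def summarize_system(payload: dict) -> dict:
    """Pick a few standard ComputerSystem properties out of a Redfish payload."""
    return {
        "model": payload.get("Model"),
        "power_state": payload.get("PowerState"),
        "health": payload.get("Status", {}).get("Health"),
        "cpu_count": payload.get("ProcessorSummary", {}).get("Count"),
        "memory_gib": payload.get("MemorySummary", {}).get("TotalSystemMemoryGiB"),
    }
```

Usage against a hypothetical iDRAC at `192.0.2.10` would look like `summarize_system(fetch_json(system_url("192.0.2.10"), "root", "<password>", verify_tls=False))`. Keeping the parsing separate from the HTTP call makes the summary logic easy to test offline.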

Dell EMC PowerEdge R6525 Server Specifications

| Specification | Details |
|---|---|
| Processor | Two 2nd Generation AMD EPYC processors with up to 64 cores per processor |
| Memory | Up to 32 x DDR4 DIMMs; maximum RAM: 2TB RDIMM, 4TB LRDIMM; up to 3,200MT/s |
| Availability | Hot-plug redundant hard drives, fans, and PSUs |
| Controllers | PERC 10.5: HBA345, H345, H745, H840, 12G SAS HBA; chipset SATA/SW RAID (S150): Yes |
| Drive Bays | Up to 4 x 3.5” hot-plug SAS/SATA (HDD); up to 8 x 2.5” hot-plug SAS/SATA (HDD); up to 12 x 2.5” (10 front + 2 rear) hot-plug SAS/SATA/NVMe; optional BOSS (2 x M.2) |
| Power Supplies | 800W Platinum; 1400W Platinum; 1100W Titanium |
| Fans | Hot-plug fans |
| Dimensions | Height: 44.45mm (1.75”); Width: 434.0mm (17.1”); Depth: 736.54mm (29”); Weight: 21.5kg (47.39lbs) |
| Rack Units | 1U rack server |
| Embedded Management | iDRAC9; iDRAC RESTful API with Redfish; iDRAC Direct; Quick Sync 2 BLE/wireless module |
| OpenManage Software | OpenManage Enterprise; OpenManage Enterprise Power Manager; OpenManage Mobile |
| Embedded NIC | 2 x 1GbE LOM ports |
| GPU Options | Up to 2 single-wide GPUs |
| Front Ports | 1 x dedicated iDRAC Direct micro-USB; 1 x USB 2.0; 1 x VGA |
| Rear Ports | 1 x dedicated iDRAC network port; 1 x serial (optional); 1 x USB 3.0; 1 x VGA |
| PCIe | 3 x Gen4 x16 slots at 16GT/s |
| Operating Systems & Hypervisors | Canonical Ubuntu Server LTS; Citrix Hypervisor; Microsoft Windows Server with Hyper-V; Red Hat Enterprise Linux; SUSE Linux Enterprise Server; VMware ESXi |
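The "16GT/s" figure for the x16 slots, and the Gen3 versus Gen4 distinction that matters for the NVMe bays, can be turned into bandwidth with a short calculation. This is a minimal sketch assuming 128b/130b line encoding (used by both Gen3 and Gen4) and x4 links per NVMe drive, as is standard for U.2 SSDs. It ignores packet and flow-control overhead, so real throughput lands somewhat lower.

```python
# Sketch: approximate per-direction PCIe bandwidth after 128b/130b encoding.
# Protocol overhead (TLP headers, flow control) is deliberately ignored.
GT_PER_LANE = {3: 8.0, 4: 16.0}  # gigatransfers per second per lane


def pcie_gbs(gen: int, lanes: int) -> float:
    """Per-direction bandwidth in decimal GB/s for a PCIe generation and lane count."""
    bits_per_s = GT_PER_LANE[gen] * 1e9 * lanes * 128 / 130
    return bits_per_s / 8 / 1e9


if __name__ == "__main__":
    print(f"Gen4 x16 slot: {pcie_gbs(4, 16):.1f} GB/s")  # ~31.5 GB/s
    print(f"Gen4 x4 NVMe:  {pcie_gbs(4, 4):.2f} GB/s")   # ~7.88 GB/s
    print(f"Gen3 x4 NVMe:  {pcie_gbs(3, 4):.2f} GB/s")   # ~3.94 GB/s
```

The per-drive ceiling roughly doubles from Gen3 to Gen4, which is why the cabling that determines the link speed of the front bays matters for NVMe performance.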

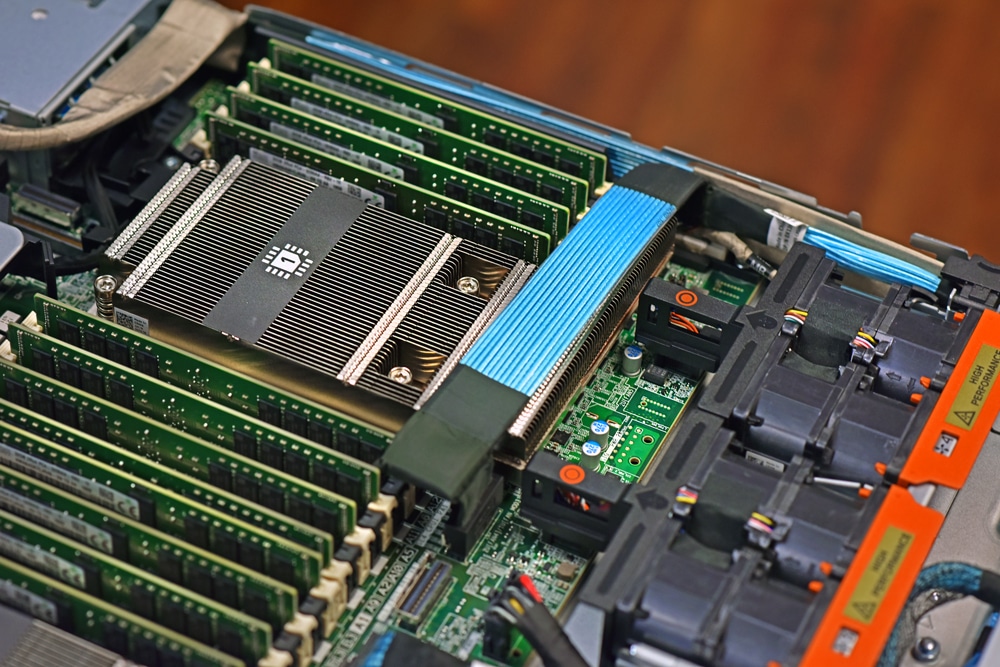

Dell EMC PowerEdge R6525 Design and Build

Depending on the storage configuration, users can set up the front of the server to support four 3.5” HDDs, eight 2.5” HDDs, or ten 2.5” SSDs, which take up most of the front panel under the bezel. Those SSD bays can support traditional SATA/SAS connectivity or, with a separate backplane, NVMe.

On the right side are the power button, a dedicated iDRAC Direct micro-USB port, a VGA port, and one USB 2.0 port. The left side has indicator LEDs. As with other servers in the Dell EMC portfolio, additional information and some configuration options are available through the bezel. Our system included it, which is handy for viewing the iDRAC IP information, switching it to DHCP on the fly, or pulling up system information without having to log into the server itself.

Flipping the server around to the rear, we see hot-swappable PSUs on either side. This differs from most other Dell servers, which place both PSUs on one side. Users can configure the rear of the chassis in many ways depending on their goals.

For PCIe add-in cards, you can select up to three slots, or two if you need full-height cards. You can also configure the server with up to two NVMe bays in the rear, for up to 12 NVMe SSDs in total. An OCP 3.0 slot provides network connectivity ranging from quad-1GbE NICs up to quad 10/25GbE offerings, depending on your deployment needs. Standard rear items include a system ID button, a dedicated iDRAC port, USB 2.0 and 3.0 ports, and a VGA port.

Inside, the server can be equipped with a BOSS controller card, Dell’s solution for RAID-protected onboard boot storage through an add-in card supporting two M.2 SSDs. Currently, most configuration options center on 240GB and 480GB SSDs, using one or two SSDs, with or without RAID. Dual microSDHC card options are still offered through the Internal SD Module, giving buyers cost-effective choices depending on the specific situation.

Dell EMC PowerEdge R6525 Management

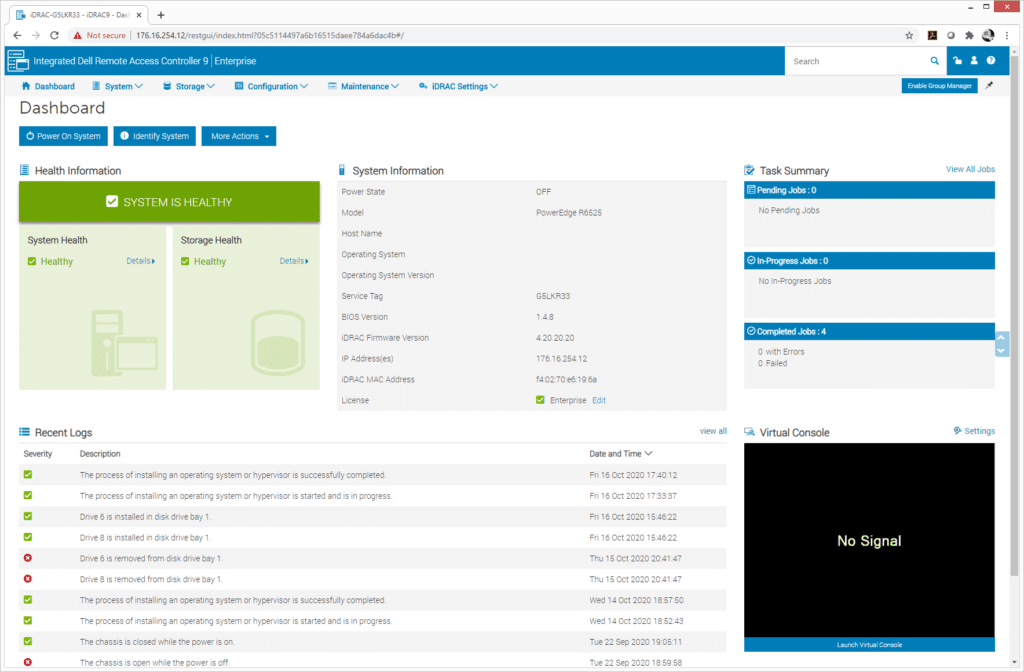

The Dell EMC PowerEdge R6525 is managed by the Integrated Dell Remote Access Controller 9, or iDRAC9 for short. Embedded in all PowerEdge servers, iDRAC9 is designed to make server administrators more productive, providing advanced, agent-free local and remote server administration, as well as the ability to securely automate a range of management tasks. It is a comprehensive set of management tools with a clean, modern interface. We will briefly cover some of its features here; for a more detailed review, please see our Dell EMC iDRAC9 V4.0 Overview.

iDRAC’s dashboard displays the server’s overall health along with recent hardware logs and notes. It also shows general system information such as firmware, location, license, and BIOS details, plus a virtual console. Users can also power on the system from the dashboard or use the “Graceful Shutdown” option, which turns off the server through software so it can safely shut down processes and close connections. In addition to the Dashboard link, the main tabs along the top include System, Storage, Configuration, Maintenance, and iDRAC Settings.
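The dashboard's power controls map onto the standard Redfish `ComputerSystem.Reset` action, so they can also be scripted. The minimal sketch below only builds the request (method, URL, JSON body) without sending it; `GracefulShutdown` and `On` are standard Redfish `ResetType` values, while the host and the `System.Embedded.1` ID are assumptions to check against your iDRAC.

```python
# Sketch: build (but do not send) Redfish requests matching the dashboard's
# "Graceful Shutdown" and power-on controls. The system ID is an assumption.
import json


def reset_request(host: str, reset_type: str, system_id: str = "System.Embedded.1"):
    """Return (method, url, body) for a Redfish ComputerSystem.Reset action."""
    url = (f"https://{host}/redfish/v1/Systems/{system_id}"
           "/Actions/ComputerSystem.Reset")
    return "POST", url, json.dumps({"ResetType": reset_type})


def graceful_shutdown_request(host: str):
    """Ask the OS to shut down cleanly, like the dashboard's Graceful Shutdown."""
    return reset_request(host, "GracefulShutdown")


def power_on_request(host: str):
    """Power the system on, like the dashboard's power-on control."""
    return reset_request(host, "On")
```

The tuple can be handed to any HTTP client along with the same Basic-auth header used for read requests; separating request construction from transport keeps the sketch testable without a live iDRAC.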

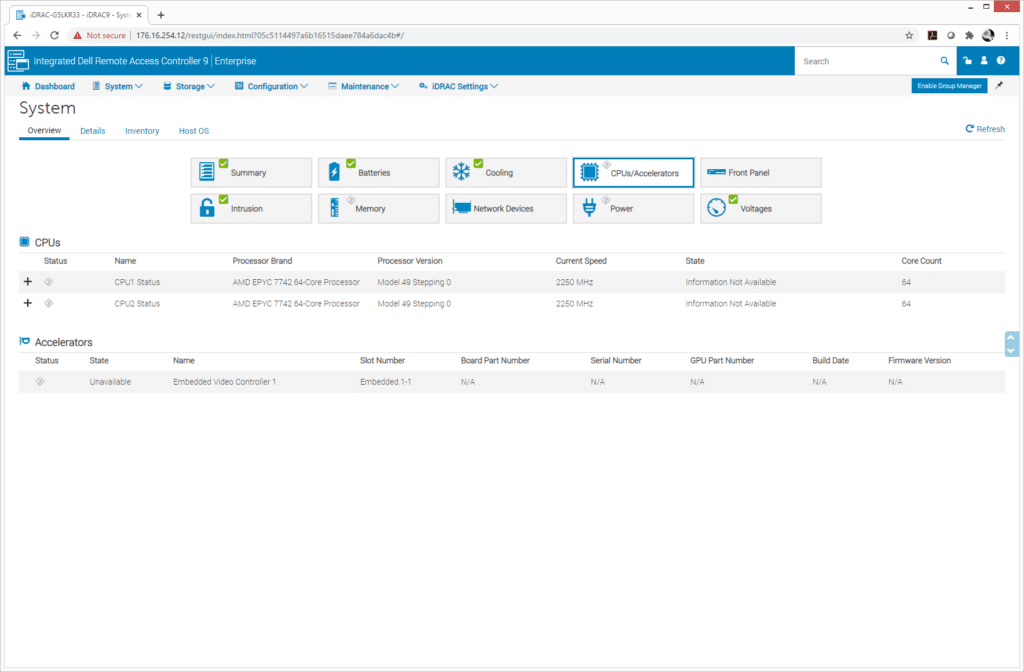

In the System tab, information on server batteries, the cooling system, CPUs, the front panel layout, memory, power, and voltages can be found by clicking the appropriate icon. The CPU section displays the name, brand, core count, version, and current speed of each installed CPU.

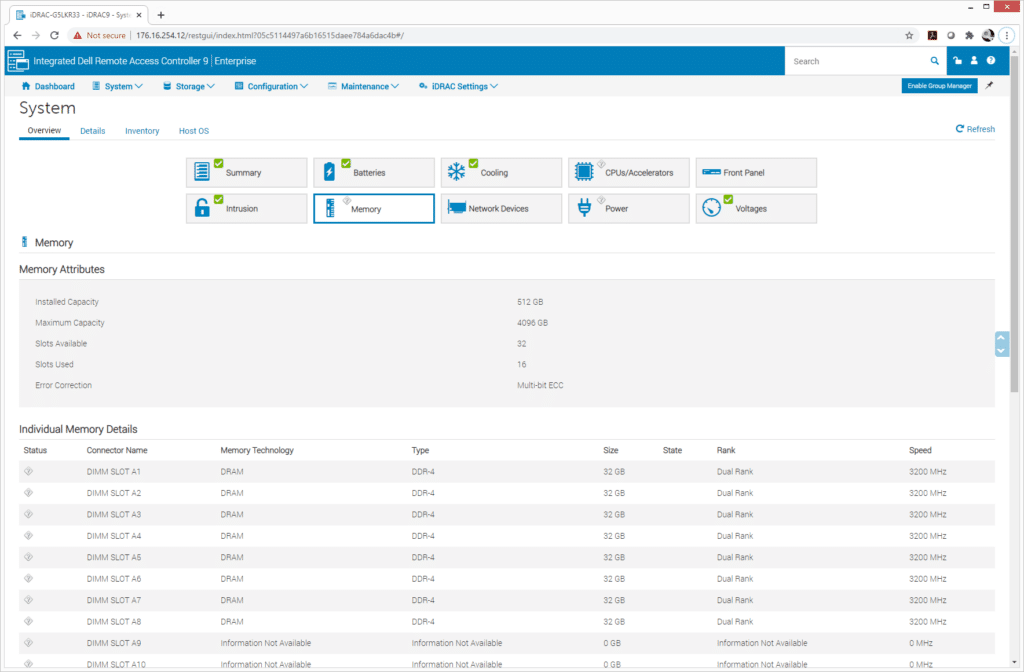

Under the Memory section of the System tab, you will find all of the server’s memory attributes, including maximum capacity, available and used slots, and error correction information. Below that are details on each DIMM slot, such as status, type, size, speed, and rank.
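The CPU and memory views above are also exposed as Redfish `Processor` and `Memory` resources. This minimal sketch tallies cores and installed memory from already-fetched payloads; property names follow the DMTF Redfish schemas (`TotalCores`, `CapacityMiB`, `MemoryDeviceType`, `OperatingSpeedMhz`). Treating a slot as empty when it reports no capacity is an assumption, and the example data below is hypothetical.

```python
# Sketch: tally cores and installed memory from Redfish Processor and Memory
# payloads (e.g. fetched from .../Processors/<id> and .../Memory/<id>).
from collections import Counter


def total_cores(processors: list[dict]) -> int:
    """Sum TotalCores across all processor resources."""
    return sum(p.get("TotalCores", 0) for p in processors)


def memory_summary(dimms: list[dict]) -> dict:
    """Summarize DIMM slots; a slot with no CapacityMiB is treated as empty."""
    populated = [d for d in dimms if d.get("CapacityMiB")]
    return {
        "total_gib": sum(d["CapacityMiB"] for d in populated) // 1024,
        "populated_slots": len(populated),
        "empty_slots": len(dimms) - len(populated),
        "by_type_speed": Counter(
            (d.get("MemoryDeviceType"), d.get("OperatingSpeedMhz")) for d in populated
        ),
    }
```

For example, a hypothetical layout of 16 populated 32GiB DDR4-3200 DIMMs plus 16 empty slots would report 512GiB across 16 populated and 16 empty slots.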

Dell EMC PowerEdge R6525 Performance

Dell EMC PowerEdge R6525 Configuration:

- 2 x AMD EPYC 7742

- 512GB, 256GB per CPU

- Performance Storage: 4 x Micron 9300 NVMe 3.84TB

- CentOS 8 (2004)

- ESXi 6.7u3

While the product page and technical manual don’t currently mention it, the PowerEdge R6525 does support Gen4 SSDs as long as the Gen4-capable cabling is installed between the backplane and motherboard. The system we received for review didn’t link with Gen4 SSDs at Gen4 speeds, so we were not able to include that portion of testing in the review.

SQL Server Performance

StorageReview’s Microsoft SQL Server OLTP testing protocol employs the current draft of the Transaction Processing Performance Council’s Benchmark C (TPC-C), an online transaction processing benchmark that simulates the activities found in complex application environments. The TPC-C benchmark comes closer than synthetic performance benchmarks to gauging the performance strengths and bottlenecks of storage infrastructure in database environments.

Each SQL Server VM is configured with two vDisks: a 100GB volume for boot and a 500GB volume for the database and log files. From a system resource perspective, we configured each VM with 16 vCPUs, 64GB of DRAM, and leveraged the LSI Logic SAS SCSI controller. While our Sysbench workloads tested previously saturated the platform in both storage I/O and capacity, the SQL test looks for latency performance.

This test uses SQL Server 2014 running on Windows Server 2012 R2 guest VMs and is stressed by Dell’s Benchmark Factory for Databases. While our traditional usage of this benchmark has been to test large 3,000-scale databases on local or shared storage, in this iteration we focus on spreading out four 1,500-scale databases evenly across our servers.

SQL Server Testing Configuration (per VM)

- Windows Server 2012 R2

- Storage Footprint: 600GB allocated, 500GB used

- SQL Server 2014

- Database Size: 1,500 scale

- Virtual Client Load: 15,000

- RAM Buffer: 48GB

- Test Length: 3 hours

- 2.5 hours preconditioning

- 30 minutes sample period

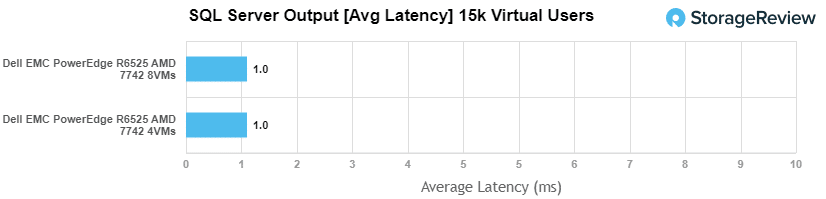

For our average latency SQL Server benchmark, the Dell EMC PowerEdge R6525 measured 1ms across the board in both the 4VM and 8VM configurations.

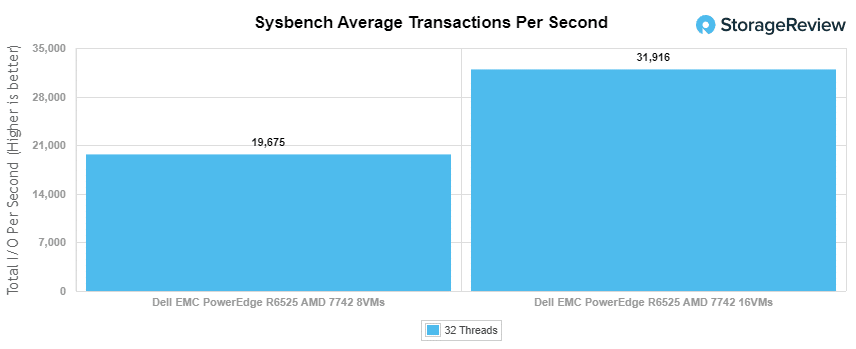

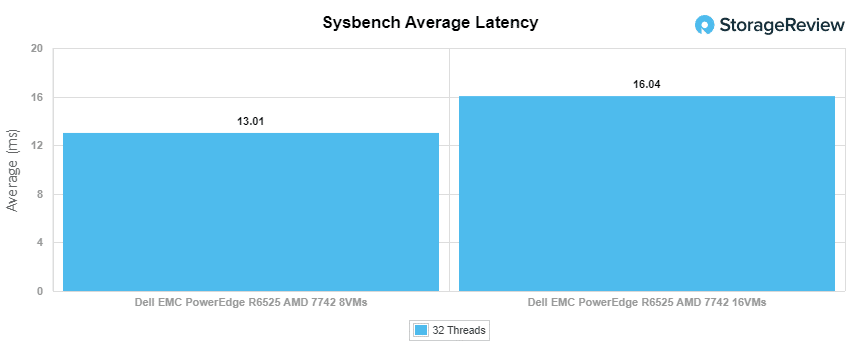

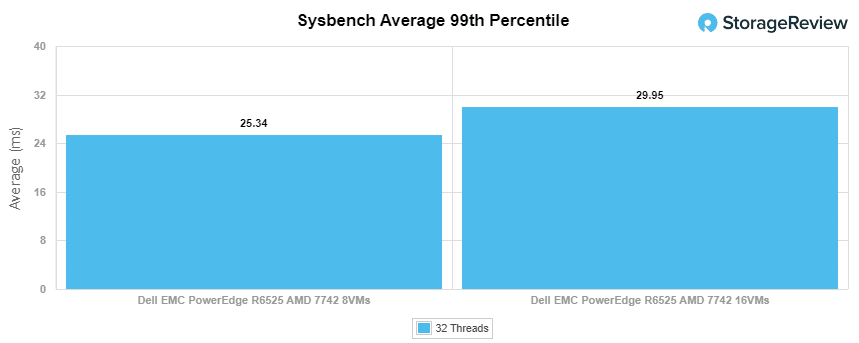

Our first local-storage application benchmark consists of a Percona MySQL OLTP database measured via SysBench. This test measures average TPS (Transactions Per Second), average latency, and average 99th percentile latency as well.

Each Sysbench VM is configured with three vDisks: one for boot (~92GB), one with the pre-built database (~447GB), and the third for the database under test (270GB). From a system resource perspective, we configured each VM with 16 vCPUs, 60GB of DRAM, and leveraged the LSI Logic SAS SCSI controller.

Sysbench Testing Configuration (per VM)

- CentOS 6.3 64-bit

- Percona XtraDB 5.5.30-rel30.1

- Database Tables: 100

- Database Size: 10,000,000

- Database Threads: 32

- RAM Buffer: 24GB

- Test Length: 3 hours

- 2 hours preconditioning 32 threads

- 1 hour 32 threads

With Sysbench, we ran both 8VM and 16VM configurations. In our transactional benchmark, we saw aggregate scores of 19,674.9 TPS for 8VMs and 31,915.9 TPS for 16VMs.

For Sysbench average latency, we saw 13.01ms for 8VMs and 16.04ms for 16VMs.

In our worst-case latency scenario (99th percentile), we saw 25.34ms for 8VMs and 29.95ms for 16VMs.
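The throughput and average latency figures are tied together by Little's Law (work in flight = throughput × latency). This minimal sketch uses it as a consistency check, assuming each of the 32 database threads per VM keeps exactly one transaction in flight; the TPS and latency values are the measured results quoted above.

```python
# Sketch: Little's Law check on the Sysbench results.
# Assumption: every database thread keeps one transaction in flight.
THREADS_PER_VM = 32

# VM count -> (measured aggregate TPS, measured average latency in ms)
results = {
    8: (19_674.9, 13.01),
    16: (31_915.9, 16.04),
}


def predicted_tps(vms: int, latency_ms: float) -> float:
    """Throughput implied by Little's Law for a closed loop of busy threads."""
    return vms * THREADS_PER_VM / (latency_ms / 1000)


for vms, (tps, lat_ms) in results.items():
    print(f"{vms} VMs: measured {tps:,.0f} TPS, "
          f"predicted {predicted_tps(vms, lat_ms):,.0f} TPS, "
          f"{tps / vms:,.0f} TPS per VM")
```

The predictions come out at roughly 19,677 and 31,920 TPS, within a few transactions per second of the measured aggregates. Per-VM throughput drops from about 2,459 to 1,995 TPS as the VM count doubles, which matches the higher average latency at 16VMs.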

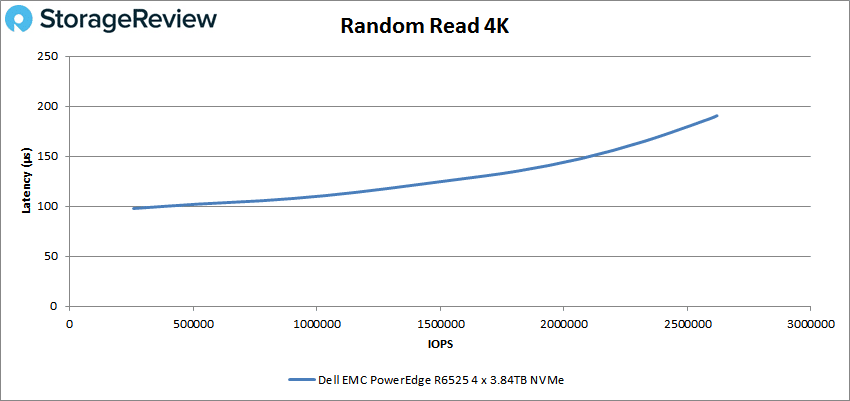

VDBench Workload Analysis

When it comes to benchmarking storage arrays, application testing is best, and synthetic testing comes in second place. While not a perfect representation of actual workloads, synthetic tests do help to baseline storage devices with a repeatability factor that makes it easy to make apples-to-apples comparisons between competing solutions. These workloads cover a range of testing profiles, from “four corners” tests and common database transfer size tests to trace captures from different VDI environments. All of these tests leverage the common vdBench workload generator, with a scripting engine to automate and capture results over a large compute testing cluster. This allows us to repeat the same workloads across a wide range of storage devices, including flash arrays and individual storage devices.

Profiles:

- 4K Random Read: 100% Read, 128 threads, 0-120% iorate

- 4K Random Write: 100% Write, 64 threads, 0-120% iorate

- 64K Sequential Read: 100% Read, 16 threads, 0-120% iorate

- 64K Sequential Write: 100% Write, 8 threads, 0-120% iorate

- Synthetic Database: SQL and Oracle

- VDI Full Clone and Linked Clone Traces

With random 4K read, the Micron 9300 NVMe SSDs in the Dell EMC PowerEdge R6525 Server peaked at 2,619,533 IOPS with a latency of 190.5µs.
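To put the 4K read peak in bandwidth terms, this minimal sketch multiplies IOPS by the transfer size and divides the total across the four Micron 9300 drives in the test configuration. It assumes "4K" means 4,096 bytes and reports decimal GB/s; the even per-drive split is also an assumption, since the published result is an aggregate.

```python
# Sketch: express an IOPS figure as throughput and as an even per-drive share.
# Assumptions: 4K = 4,096 bytes; load spread evenly across the 4 test drives.
DRIVES = 4
BLOCK_4K = 4096  # bytes


def throughput_gbs(iops: float, block_bytes: int) -> float:
    """Throughput in decimal GB/s for a given IOPS rate and transfer size."""
    return iops * block_bytes / 1e9


def per_drive_iops(total_iops: float, drives: int = DRIVES) -> float:
    """Average IOPS per drive, assuming an even split."""
    return total_iops / drives


peak_4k_read = 2_619_533  # 4K random read peak from the result above
print(f"{throughput_gbs(peak_4k_read, BLOCK_4K):.2f} GB/s")  # 10.73 GB/s
print(f"{per_drive_iops(peak_4k_read):,.0f} IOPS per drive")  # 654,883
```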

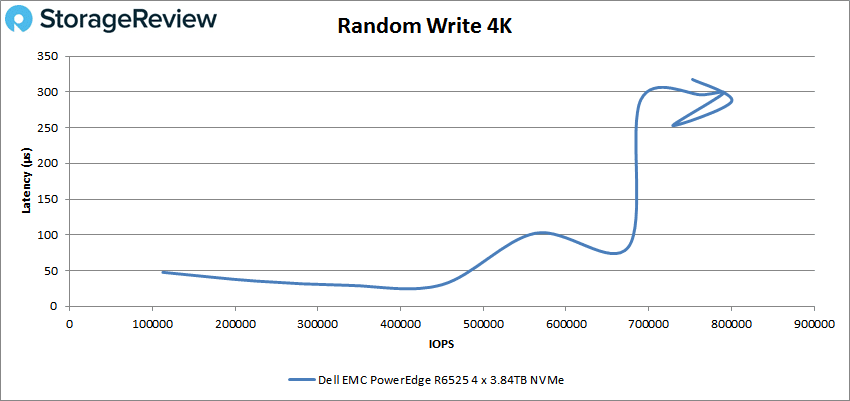

Random 4K write saw the Micron 9300 NVMe SSDs start to show some uneven performance when approaching 700K IOPS, ultimately peaking at around 800,426 IOPS with a latency of 287µs before one last spike.

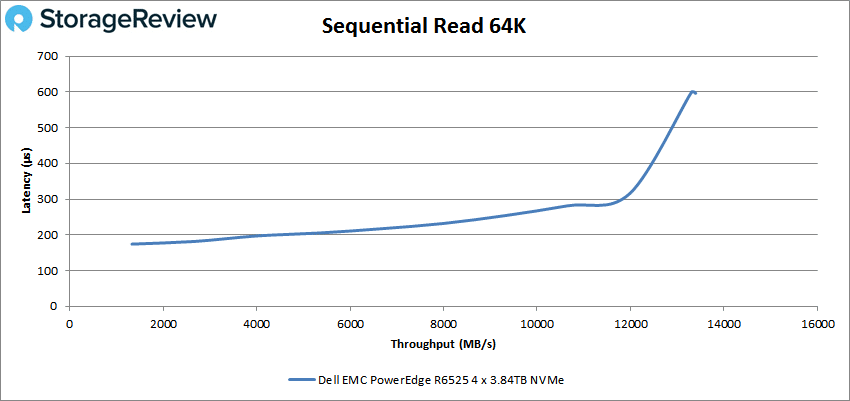

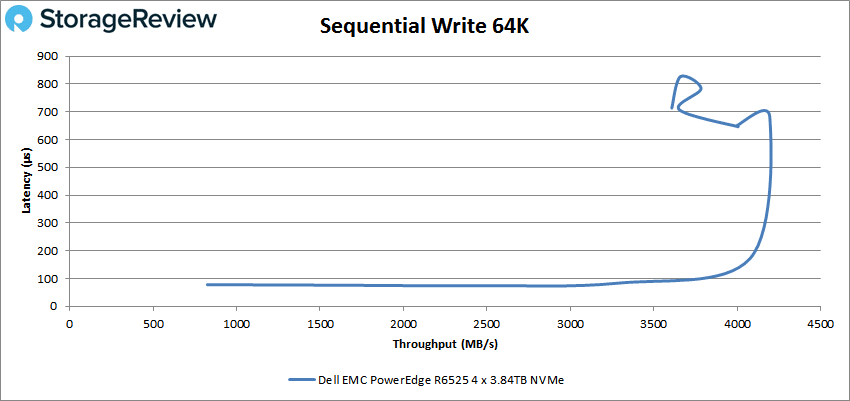

For 64K write, the drives topped out as the workload approached the 4GB/s mark, peaking at 4.193GB/s before latency rose to 683.6µs.

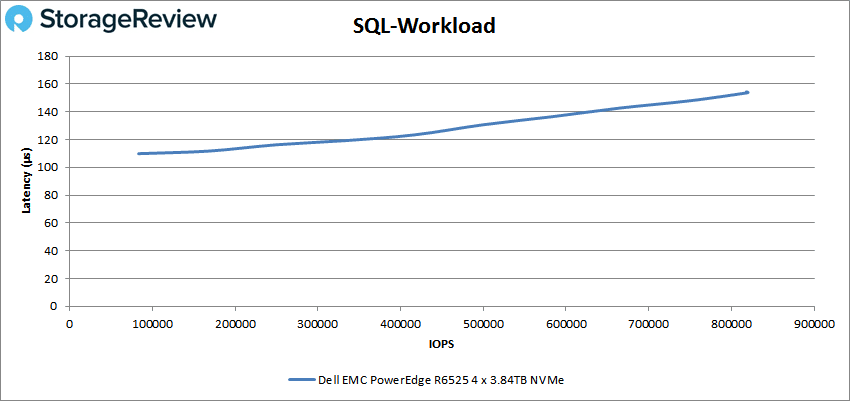

Our next set of tests are our SQL workloads: SQL, SQL 90-10, and SQL 80-20. Starting with SQL, the Dell EMC server saw a peak of 819,928 IOPS at a latency of 153.8µs.

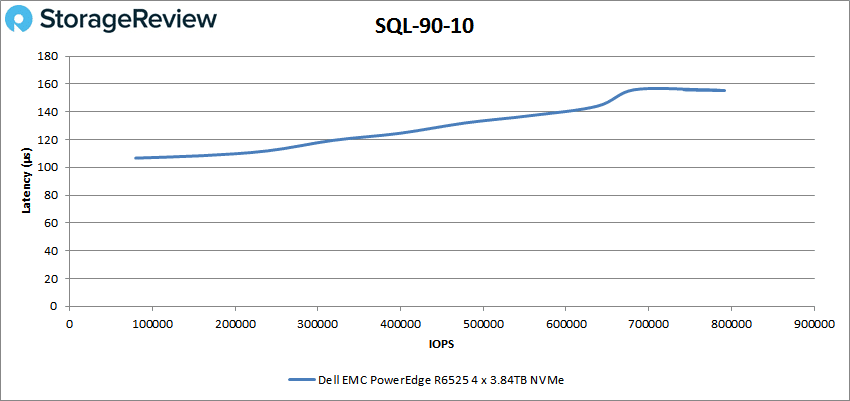

For SQL 90-10 the server peaked at 719,148 IOPS at 155.3µs for latency before taking a hit in IOPS (it’s relatively hard to see in the chart below).

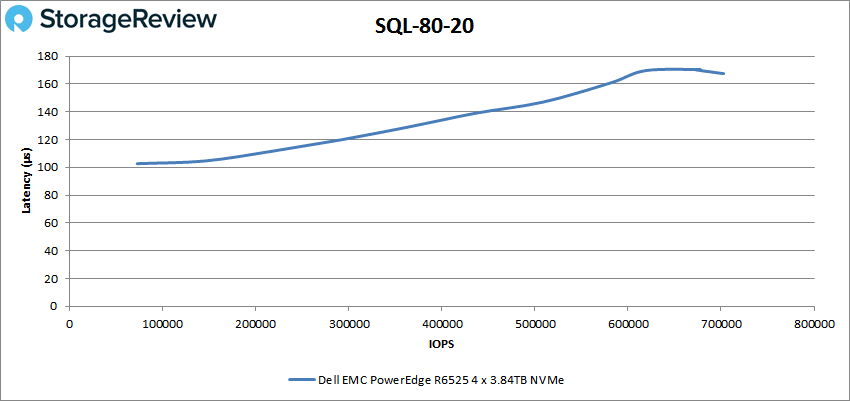

Moving on to SQL 80-20, the Dell EMC R6525 saw a peak performance of 702,708 IOPS with a latency of 167.5µs.

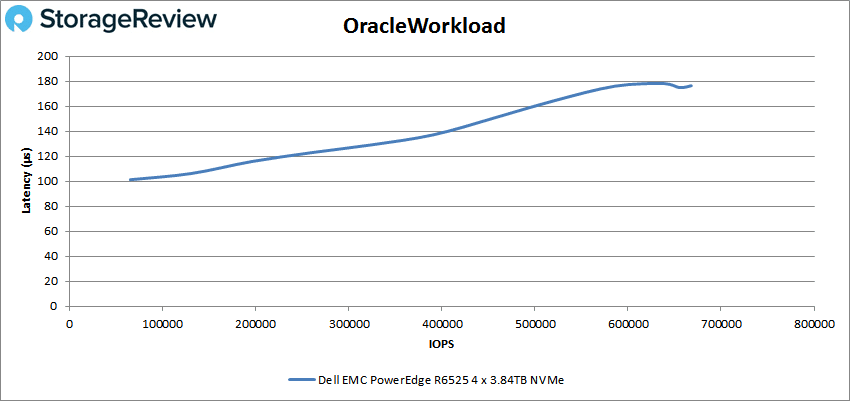

Next up are our Oracle workloads: Oracle, Oracle 90-10, and Oracle 80-20. Starting with Oracle, the Dell EMC server recorded a peak of 667,961 IOPS with a latency of 176.4µs.

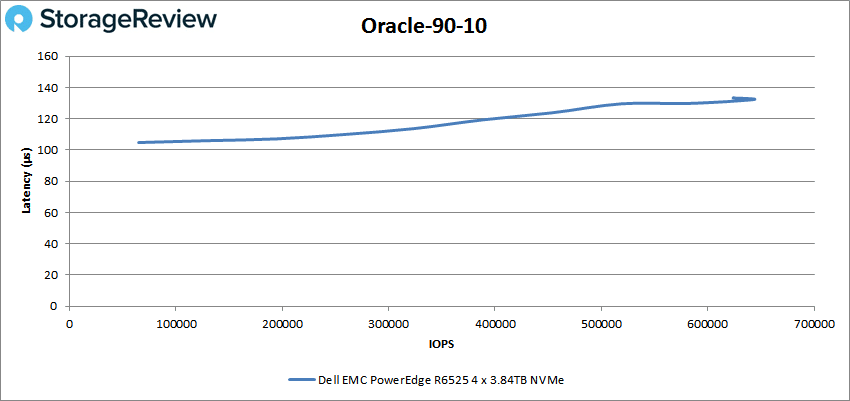

With Oracle 90-10, the Dell EMC server peaked at 642,973 IOPS at a latency of 132.3µs before taking a slight hit in performance at the very end of the workload.

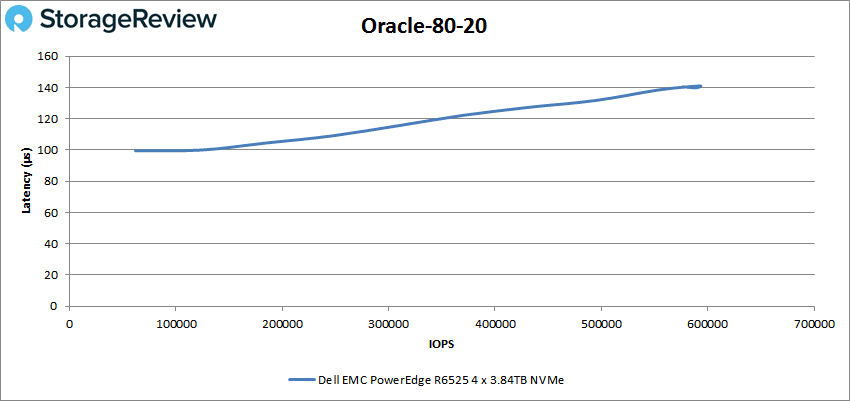

Oracle 80-20 gave the server a peak performance of 592,949 IOPS at a latency of 141µs, again taking a small dip in IOPS at the end of the test.

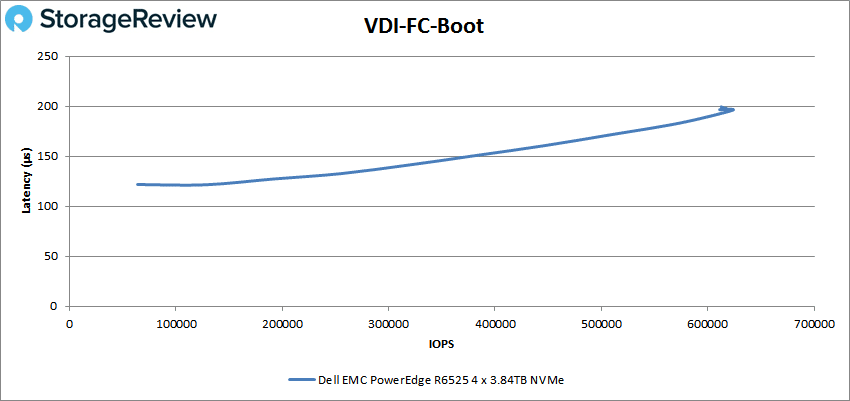

Next, we switched over to our VDI clone tests, Full and Linked. For VDI Full Clone (FC) Boot, the Dell EMC server hit a peak of 623,036 IOPS with a latency of 196µs.

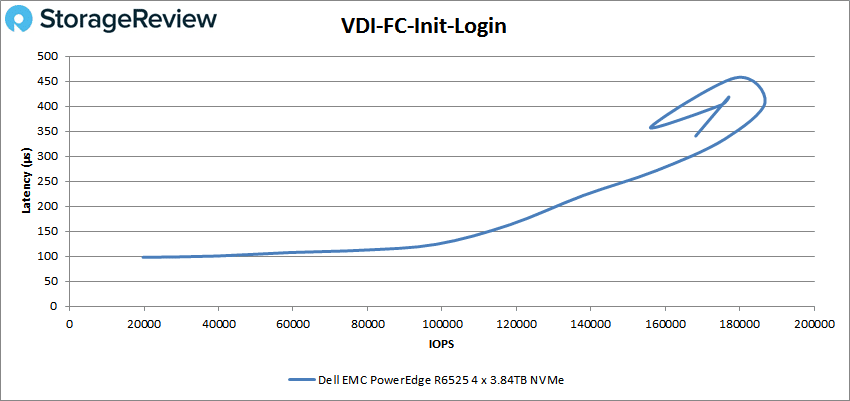

VDI FC Initial Login saw some movement near the end of the workload when it peaked at 186,801 IOPS with a latency of 407.4µs.

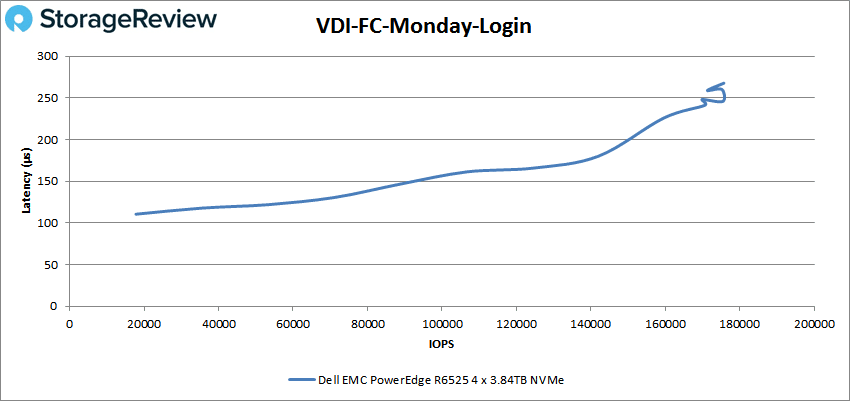

Next up is VDI FC Monday Login, which gave us a peak of 175,193 IOPS and a latency of 260.3µs.

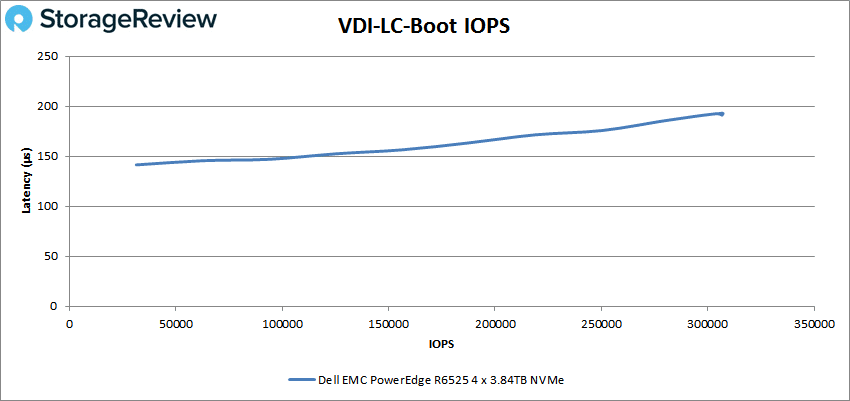

Switching to VDI Linked Clone (LC) Boot, the Dell EMC server showed a peak of 306,695 IOPS and a latency of 191.2µs.

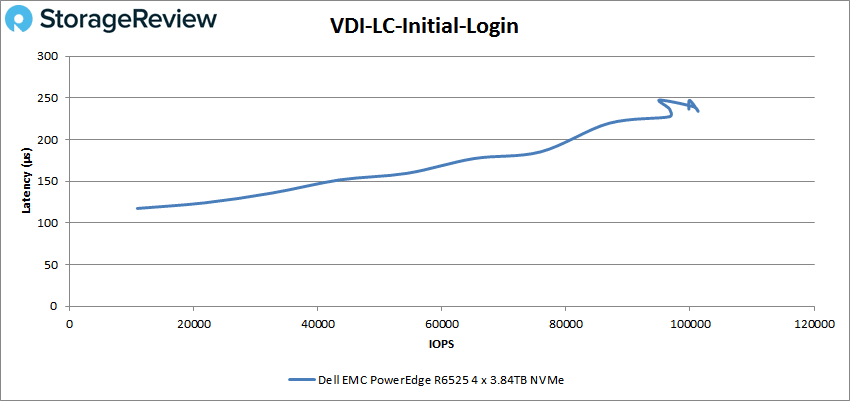

For VDI LC Initial Login the server peaked at 101,301 IOPS with a latency of 234.4µs.

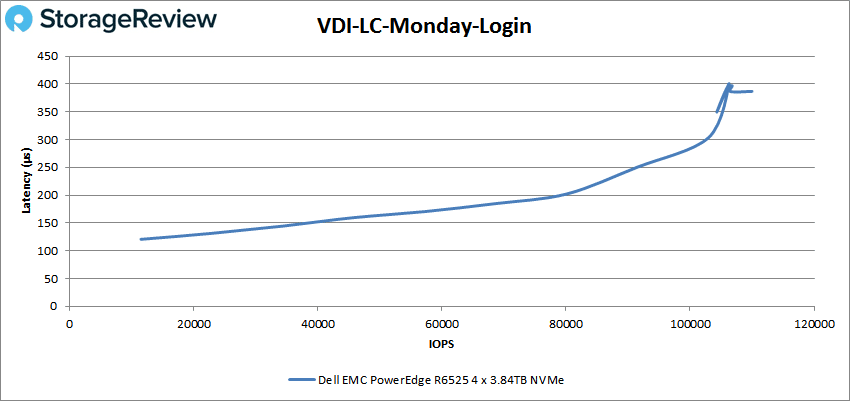

Finally, VDI LC Monday Login had a peak of 109,978 IOPS with a latency of just 286.8µs, with a bit of movement at the end.

Conclusion

The Dell EMC PowerEdge R6525 is a powerful 1U, dual-socket AMD server designed for situations that need dense computing with flexible storage options. The server can be configured with up to 4TB of RAM and up to 12 NVMe Gen4 SSDs via ten bays in front and two optional bays on the back panel.

While Dell doesn’t list much information on Gen4 support on its website or in its technical resources, we’ve been told that late-production units of the PowerEdge R6525 should support Gen4 SSDs. The difference comes down to whether the appropriate cabling between the motherboard and SSD backplane was included. Our review system didn’t connect to Gen4 SSDs at Gen4 speeds, indicating it most likely lacked the updated cabling. Thankfully for customers looking to purchase an EPYC platform, adopting Gen4 SSDs is a matter of updating the cabling, not buying a completely new server.

Our specific build to measure the server’s performance consisted of dual AMD EPYC 7742 64-Core Processors, 512GB of DDR4-3200 RAM, and 4x Micron 9300 3.84TB NVMe SSDs. On our VDBench workloads running inside a bare-metal CentOS 8 environment, the Dell EMC server had sub-millisecond latency throughout, with highlights including 2.6 million IOPS for 4K read and just over 800K IOPS for 4K write, while 64K read and write reached 13.4GB/s and 4.2GB/s, respectively.

With SQL we saw 819,928 IOPS for SQL workload, 719,148 IOPS for SQL 90-10, and 702,708 IOPS for SQL 80-20. In Oracle, the server hit 667,961 IOPS, 642,973 IOPS for Oracle 90-10, and 592,949 IOPS for Oracle 80-20. For our VDI test, the Dell EMC PowerEdge R6525 had Full Clone results of 623,036 IOPS boot, 186,801 IOPS Initial Login, and 175,193 IOPS Monday Login, while Linked Clone showed 306,695 IOPS for boot, 101,301 IOPS for Initial Login, and 109,978 IOPS for Monday Login.

Looking at application workload performance with the platform running ESXi 6.7u3, the Dell EMC PowerEdge R6525 performed fantastically across our SQL Server and MySQL Sysbench workloads. In SQL Server, we maxed out the test in both 4VM and 8VM configurations, measuring an average of 1ms across each 1,500-scale VM, each with 15,000 virtual users hitting its database. In our MySQL workload, the R6525 powered through with the highest aggregate TPS we have measured from a dual-socket server, peaking at 31,915 TPS with 16VMs. The only platform to edge it out is a 4U quad-processor server, which was around 600 TPS higher.

The Dell EMC PowerEdge R6525 is a promising effort for environments that can benefit from the density and raw compute power that AMD enables in this dual-processor, 1U box. Couple that with the well-established management tools Dell Technologies offers, and it’s clear the R6525 is a very good server. Our only issue is the somewhat disjointed go-to-market approach when it comes to PCIe Gen4 SSD support. Once resolved, we’ll revisit the platform with a batch of Gen4 SSDs to see how much more performance can be squeezed from this beast.

***Update 11/2/20***

After this review, we were supplied a Gen4 field upgrade kit for the server. We applied the kit and re-ran our synthetic performance tests using a set of SK hynix PE8010 SSDs. The updated data can be seen in the PowerEdge R6525 Gen4 Review. With the new backplane in place, we’ve opted to give this server an Editor’s Choice award, as the only thing holding back our full recommendation was the Gen4 support.
