The Dell PowerEdge XE9680 stands as a testament to innovation in enterprise computing, giving customers the ultimate in GPU flexibility.

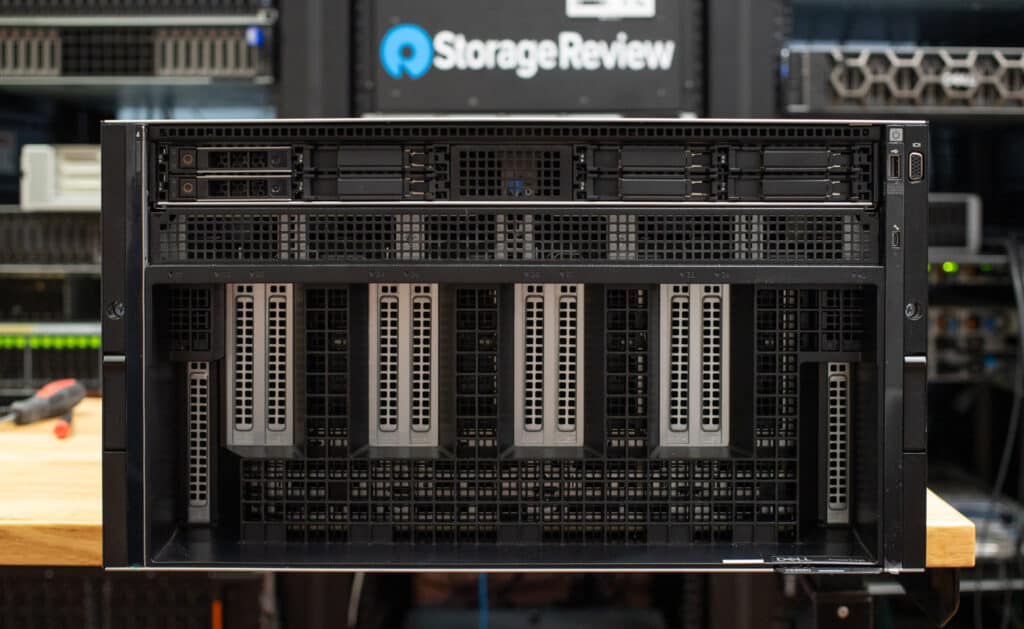

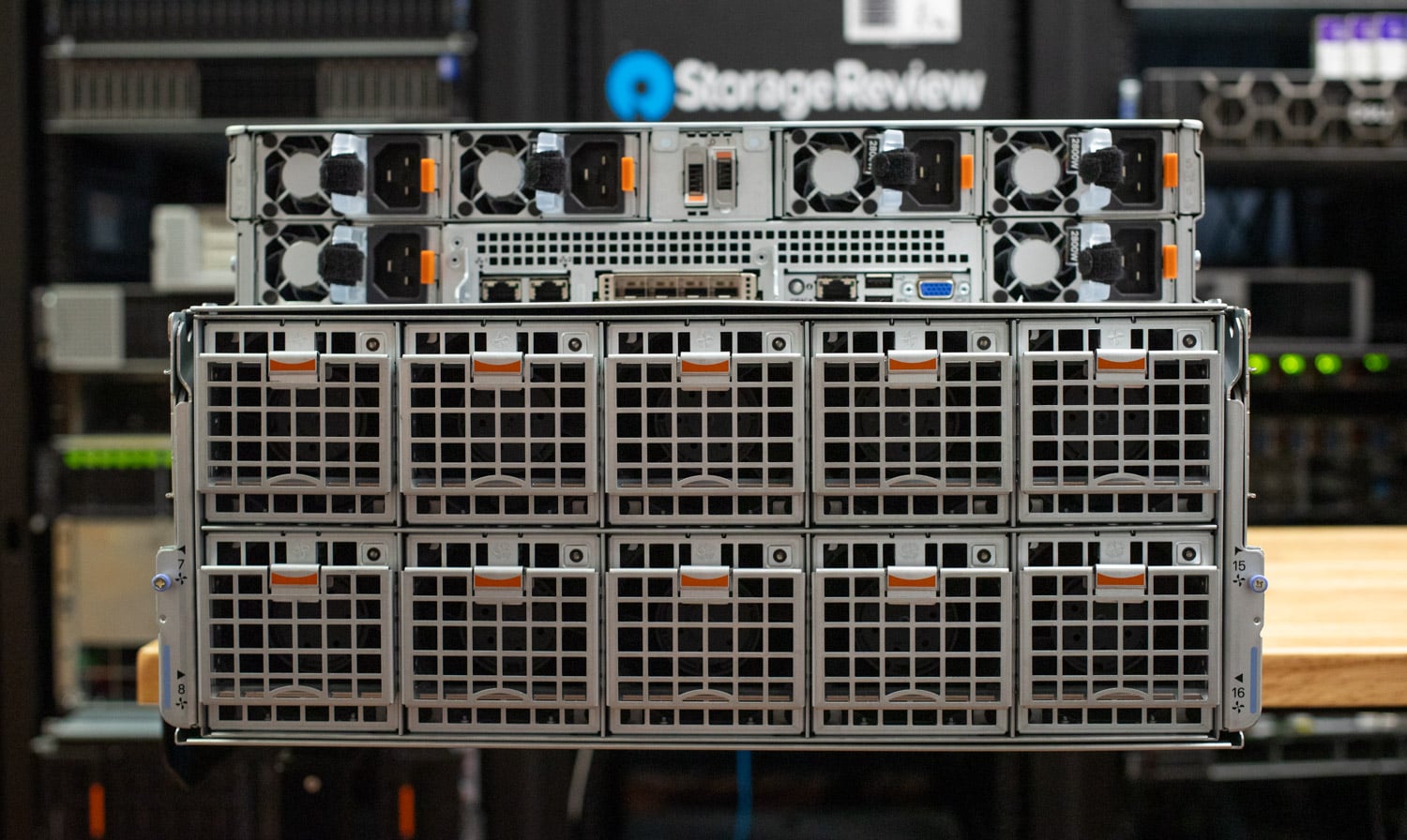

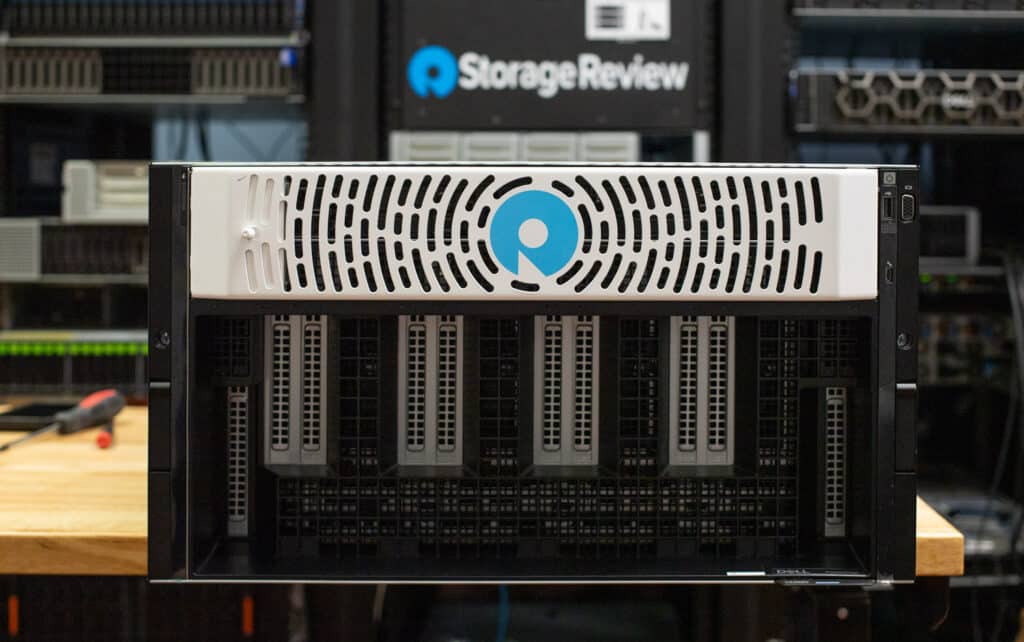

The PowerEdge XE9680 represents Dell’s most versatile AI infrastructure platform to date. It pairs a PowerEdge R760-style 2U compute node with a massive 4U GPU drawer, and the resulting 6U design brings Dell’s enterprise server engineering together with unprecedented GPU density and flexibility.

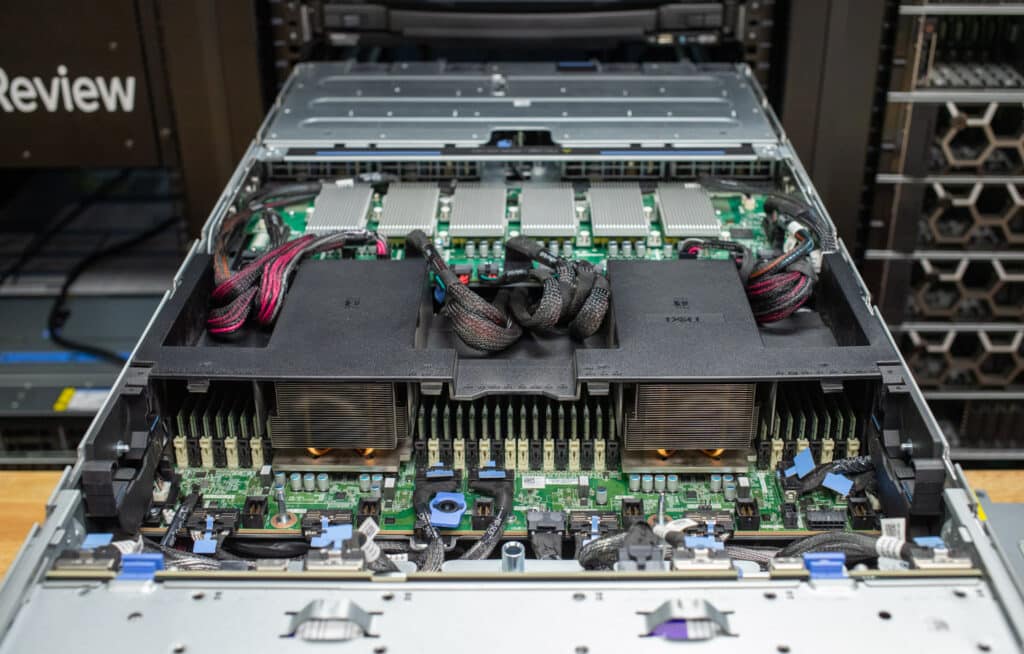

At its core, the XE9680 supports dual Intel Xeon Scalable processors, offering a choice between 5th Generation parts with up to 64 cores per CPU and 4th Generation parts with up to 56 cores. Memory capacity is substantial: up to 4TB of DDR5 across 32 DIMM slots, running at up to 5600 MT/s with the latest processors.

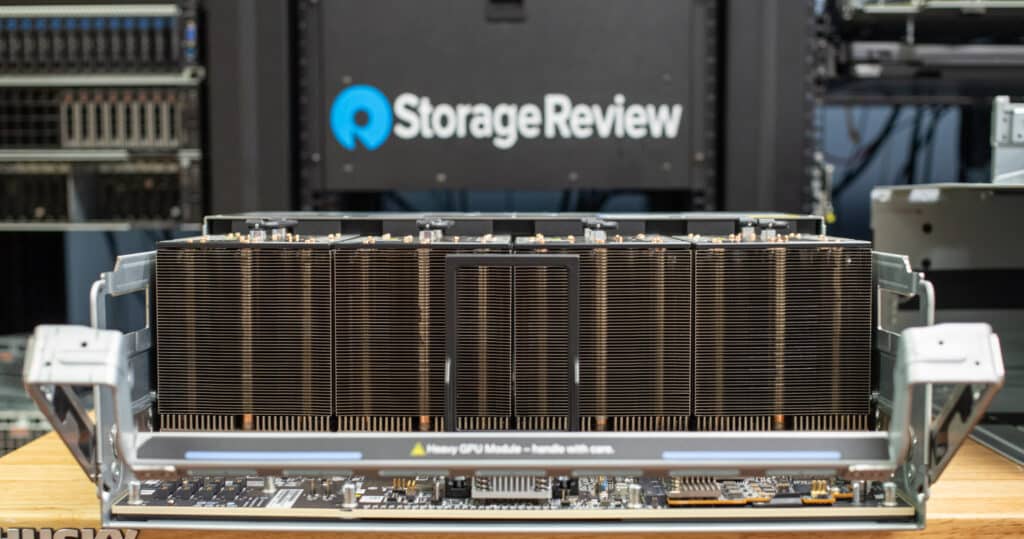

What truly sets the XE9680 apart is its GPU capabilities. The platform supports eight high-powered GPUs. Available configurations include NVIDIA’s HGX H200 (141GB) and H100 (80GB), AMD’s Instinct MI300X (192GB), and Intel’s Gaudi3 (128GB). The newly introduced XE9680L variant supports NVIDIA’s next-generation B200 GPUs and direct liquid cooling, pushing the envelope for density and performance.
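Because every configuration carries eight GPUs, the per-chassis accelerator memory pool follows directly from the per-GPU capacities listed above. A minimal Python sketch, assuming those capacities are decimal GB as quoted on the spec sheets:

```python
# Per-GPU memory capacities as listed in this review; every XE9680 configuration has eight GPUs.
GPUS_PER_SERVER = 8
HBM_PER_GPU_GB = {
    "NVIDIA H100": 80,
    "NVIDIA H200": 141,
    "Intel Gaudi3": 128,
    "AMD MI300X": 192,
}


def aggregate_hbm_gb(per_gpu_gb, gpus=GPUS_PER_SERVER):
    """Total accelerator memory in one chassis (decimal GB)."""
    return per_gpu_gb * gpus


if __name__ == "__main__":
    for name, per_gpu in HBM_PER_GPU_GB.items():
        print(f"{name:13s} {aggregate_hbm_gb(per_gpu):5d} GB total")
```

This gives 640GB for H100, 1,024GB for Gaudi3, 1,128GB for H200, and 1,536GB for MI300X per server, which is the pool that large models must fit into.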

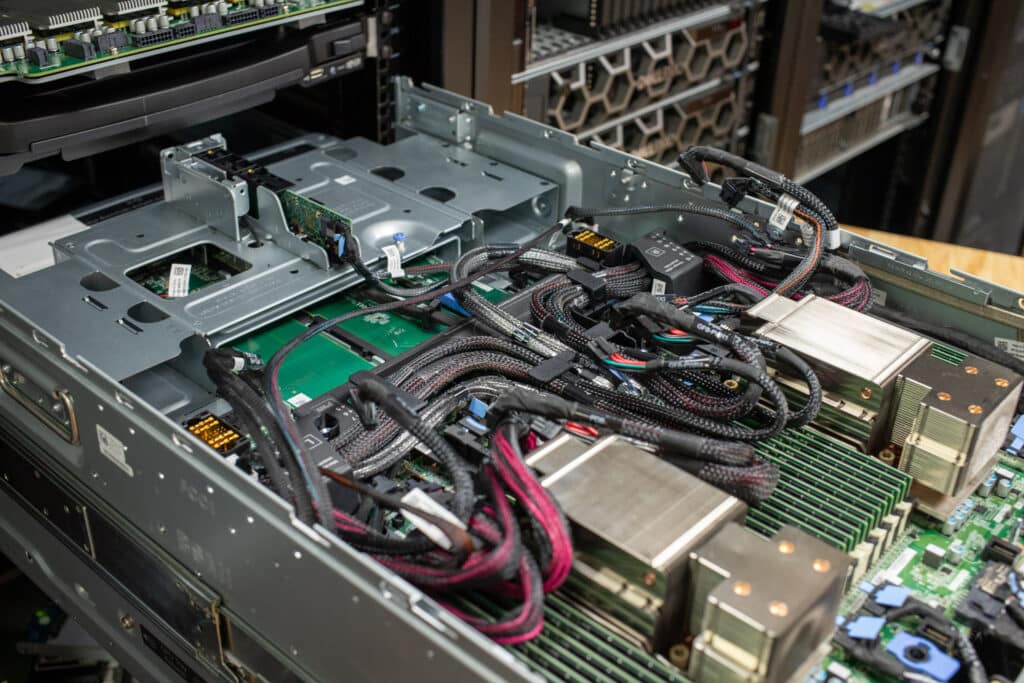

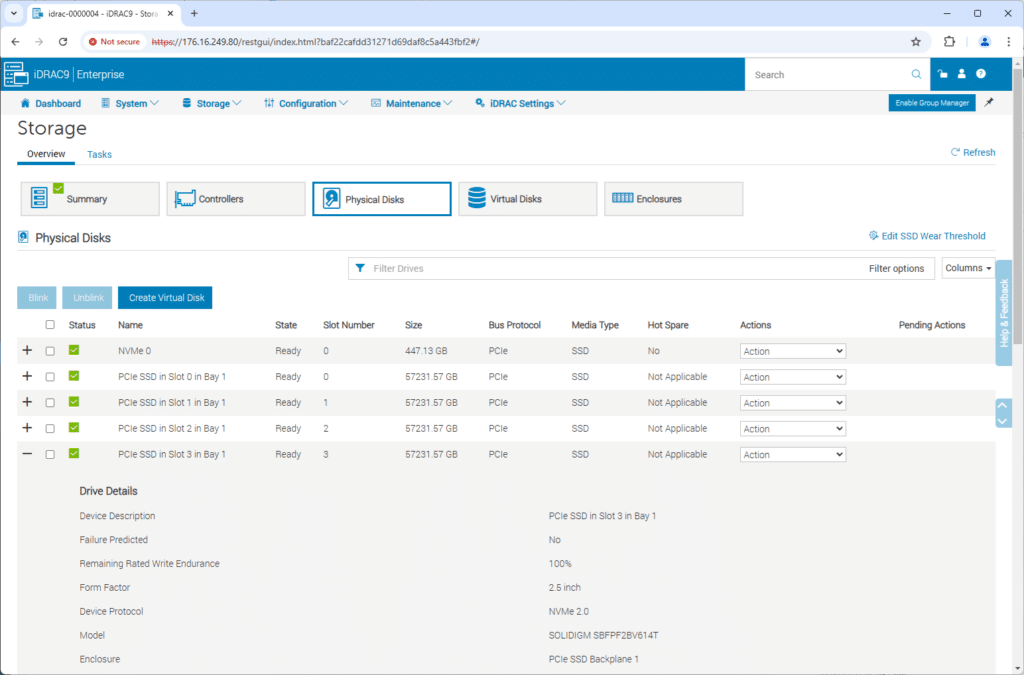

Storage configurations are equally flexible, offering 8x 2.5″ NVMe/SAS/SATA drives or 16x E3.S NVMe drives. The system can also be equipped with Dell’s H965i NVMe PERC RAID card, which simplifies storage redundancy, a significant consideration for the large KV caches used during inference workloads.

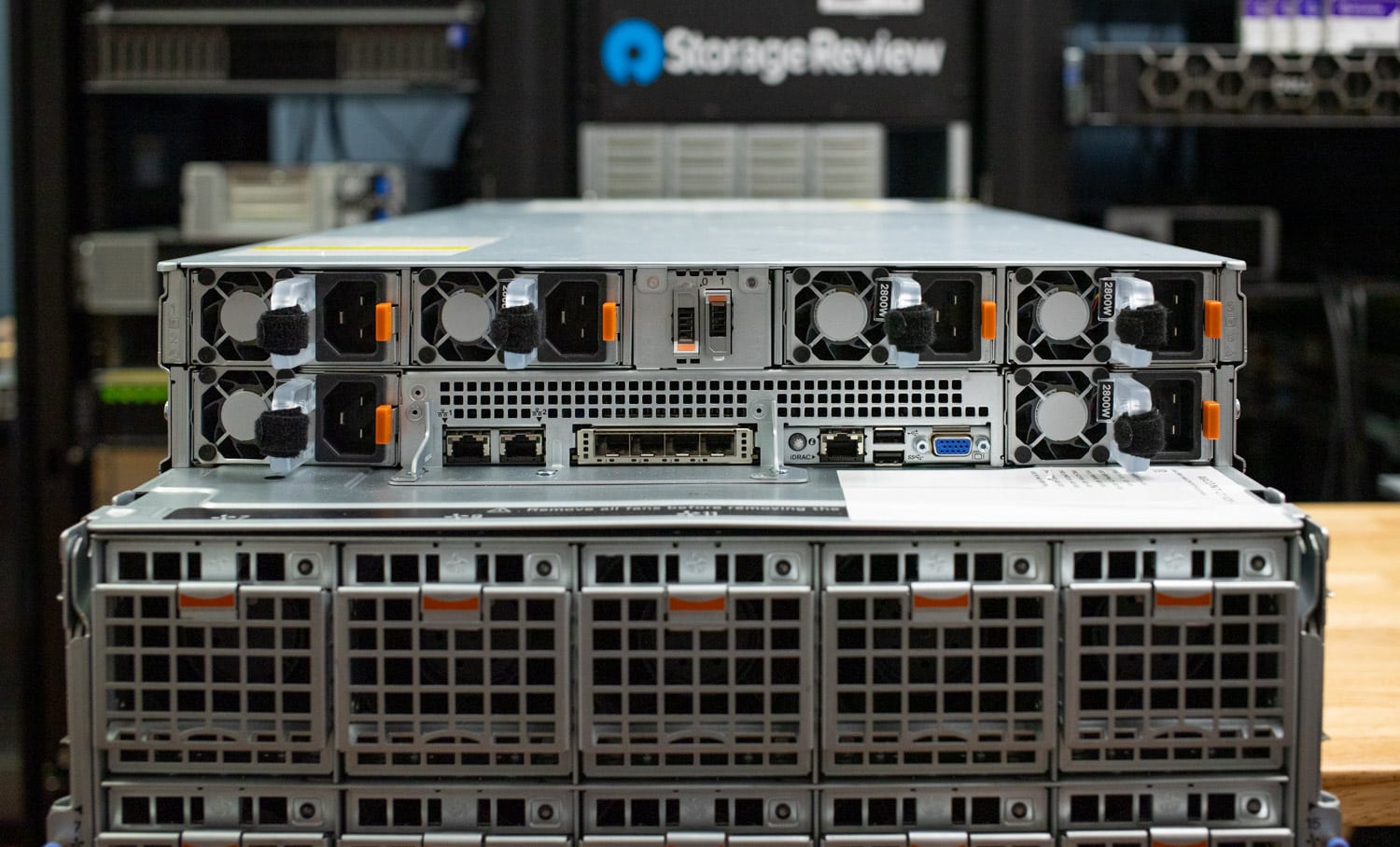

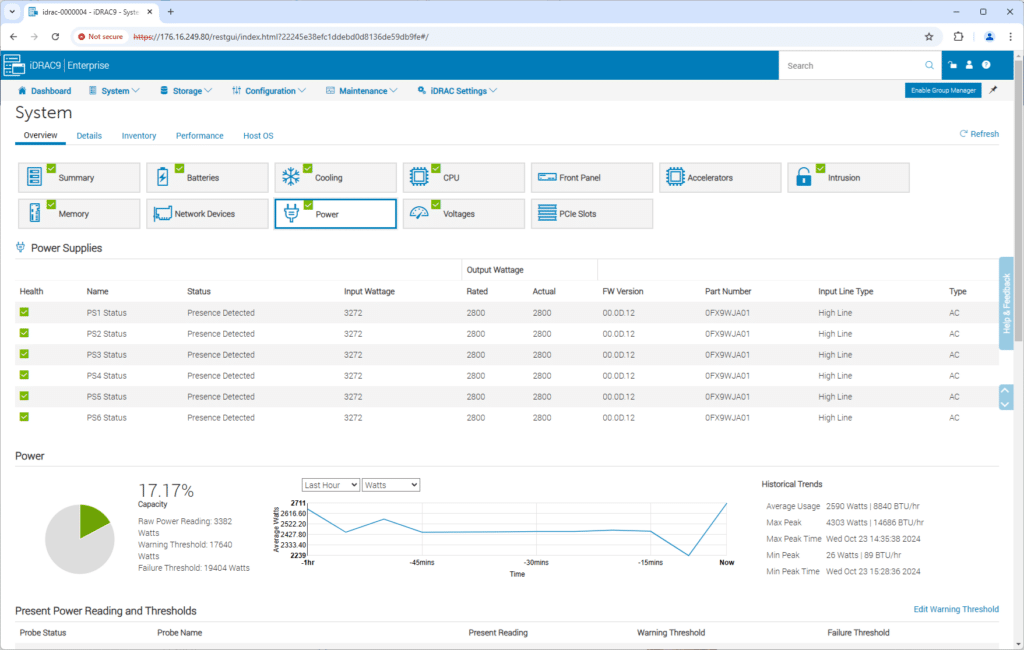

Power delivery is engineered for maximum reliability. Six power supplies totaling 19200W are configured in a 3+3 fault-tolerant redundant (FTR) arrangement. When two or more PSUs fail, the system enters a fault-tolerant redundant mode rather than shutting down. In this mode, the GPU power brake activates, throttling GPU clocks to one-fourth, resulting in approximately one-fifth of typical GPU performance.
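To put the PSU arrangement in context, it helps to compare the nameplate capacity left after failures with the GPUs’ combined TDP. The sketch below uses the PSU and GPU ratings from the specification table; it assumes no conversion losses and ignores CPU, fan, drive, and NIC load, so treat it as a rough headroom check, not as Dell’s power-brake trigger logic.

```python
# PSU and GPU ratings taken from the specification table in this review.
PSU_COUNT = 6
PSU_WATTS = {"3200W (277 VAC)": 3200, "2800W (200-240 VAC)": 2800}
GPU_TDP_W = {"H100/H200 SXM5": 700, "MI300X OAM": 750, "Gaudi3 OAM": 900}
GPUS_PER_SERVER = 8


def remaining_capacity_w(psu_watts, failed, count=PSU_COUNT):
    """Nameplate PSU capacity left after `failed` supplies drop out (no efficiency losses)."""
    if not 0 <= failed <= count:
        raise ValueError("failed must be between 0 and the PSU count")
    return (count - failed) * psu_watts


def gpu_power_w(tdp_w, gpus=GPUS_PER_SERVER):
    """Combined GPU TDP only; CPUs, fans, drives, and NICs draw power on top of this."""
    return tdp_w * gpus


if __name__ == "__main__":
    for label, watts in PSU_WATTS.items():
        caps = [remaining_capacity_w(watts, f) for f in range(4)]
        print(f"{label}: capacity with 0-3 failed PSUs = {caps} W")
    for label, tdp in GPU_TDP_W.items():
        print(f"8x {label}: {gpu_power_w(tdp)} W of GPU TDP")
```

With six 3200W supplies the chassis has 19,200W of nameplate capacity, and losing a full bank of three still leaves 9,600W, while eight Gaudi3 OAMs alone are rated at 7,200W before any CPU or fan load is counted.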

This thoughtful design choice is invaluable in large-scale training environments where hundreds or thousands of GPUs work in concert. Rather than having a node go completely offline, which would require rescheduling and repeating training iterations on another node, the system can continue operating at reduced performance until the next maintenance window. This attention to detail in power management helps maintain high model FLOPs utilization (MFU) by minimizing disruptions.

Expansion capabilities are extensive, with up to 10 PCIe Gen5 x16 full-height, half-length slots, two of which support higher-powered cards beyond 75W. This abundance of PCIe connectivity enables various networking configurations, including DPUs and SmartNICs, essential for building modern AI infrastructure.
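For a sense of scale, the theoretical bandwidth of a Gen5 x16 slot follows from the 32 GT/s per-lane rate and PCIe’s 128b/130b line encoding. A small sketch; it ignores packet-level protocol overhead, so real throughput lands somewhat below these figures:

```python
# Raw per-lane signaling rates (GT/s) for PCIe generations that use 128b/130b encoding.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}


def pcie_gb_per_s(gen, lanes):
    """Theoretical one-direction bandwidth in GB/s after 128b/130b line encoding.

    Ignores packet (TLP/DLLP) overhead, so real transfers land somewhat lower.
    """
    return GT_PER_S[gen] * lanes * (128 / 130) / 8


if __name__ == "__main__":
    per_slot = pcie_gb_per_s(5, 16)
    print(f"Gen5 x16 slot: ~{per_slot:.1f} GB/s per direction")
    print(f"10 slots: ~{10 * per_slot:.0f} GB/s per direction")
```

Each slot works out to roughly 63GB/s per direction. Ten x16 slots also add up to 160 lanes before the GPUs are counted, which is why the platform routes them through PCIe switches rather than wiring every slot straight to a CPU.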

Full Specifications:

| Specification | Details |
|---|---|
| Processor | Up to two 5th Generation Intel® Xeon® Scalable processors (64 cores per CPU); up to two 4th Generation Intel® Xeon® Scalable processors (56 cores per CPU) |
| GPU Options | XE9680: NVIDIA HGX H200 (141GB) SXM5 700W; NVIDIA HGX H100 (80GB) SXM5 700W; AMD Instinct MI300X (192GB) OAM 750W; Intel Gaudi3 (128GB) OAM 900W |
| Memory | 32 DIMM slots; 5600 MT/s (5th Gen), 4800 MT/s (4th Gen) |
| Storage | Front drive bays: 8x 2.5″ NVMe/SAS/SATA (122.88TB max) or 16x E3.S NVMe (122.88TB max) |
| Storage Controllers | Internal controllers: PERC H965i (not supported with Intel Gaudi3); Internal boot: Boot Optimized Storage Subsystem (NVMe BOSS-N1), HWRAID 1, 2x M.2 SSDs |
| PCIe Slots | Up to 10x PCIe Gen5 x16 slots (8 slots with Intel Gaudi3) |
| Network | 1x OCP 3.0 (optional); 2x 1GbE LOM |
| Power Supplies | 3200W Titanium (277 VAC); 2800W Titanium (200-240 VAC) |
| Dimensions | Height: 10.36″ (263.20mm); Width: 18.97″ (482.00mm); Depth: 39.71″ (1008.77mm) with bezel |
| Weight | Up to 251.44 lbs (114.05 kg) |
| Form Factor | 6U rack server |
| Management | Embedded / at-the-server: iDRAC9, iDRAC Direct, iDRAC RESTful API with Redfish, iDRAC Service Module; Consoles: CloudIQ for PowerEdge plugin, OpenManage Enterprise, OpenManage Power Manager plugin, OpenManage Service plugin, OpenManage Update Manager plugin; Tools: Dell System Update, Dell Repository Manager, Enterprise Catalogs, iDRAC RESTful API with Redfish, IPMI, RACADM CLI; OpenManage Integrations: BMC TrueSight, OpenManage Integration with ServiceNow |
| Security | Cryptographically signed firmware; Data at Rest Encryption (SEDs with local or external key mgmt); Secure Boot; Secured Component Verification (hardware integrity check); Secure Erase; Silicon Root of Trust; System Lockdown (requires iDRAC9 Enterprise or Datacenter) |
| Cooling | Air-cooled |

Dell PowerEdge XE9680 Build and Design

The PowerEdge XE9680 is an imposing piece of hardware, measuring 10.36 inches (263.20mm) in height, 18.97 inches (482.00mm) in width, and 39.71 inches (1008.77mm) in depth with its bezel attached. When fully loaded, it weighs up to 251.44 lbs (114.05 kg). The GPU selection determines the final weight: the NVIDIA H100/H200 model comes in at 238 lbs, while the AMD MI300X unit tips the scales at 251 lbs.

This was the first server that required careful thought to load properly into our testing environment. When you consider server weight and the number of people needed to rack hardware, there is some wiggle room to go outside the boundaries, but at a certain point, one or two people aren’t lifting it alone. Dell is kind enough to give you a “lift table” to help you understand how this platform fits in. For all those wondering, Kevin loaded the XE9680 into the rack himself.

| Chassis Weight | Description |
|---|---|
| 40 pounds – 70 pounds | Recommend two people to lift. |
| 70 pounds – 120 pounds | Recommend three people to lift. |
| ≥ 121 pounds | A server lift is required. |
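Applied to the XE9680’s own weights, the lift table leaves no ambiguity. A tiny lookup sketch of Dell’s guidance; the table leaves 120 to 121 pounds unassigned and lists 70 pounds in two rows, so this version assumes anything above 120 pounds needs a lift and that exactly 70 pounds falls in the two-person band:

```python
def lift_recommendation(weight_lb):
    """Map chassis weight (pounds) to the lift guidance in Dell's table.

    Assumptions: exactly 70 lb counts as two people, and anything above
    120 lb requires a server lift (the table itself skips 120-121 lb).
    """
    if weight_lb < 40:
        return "below the table's range"
    if weight_lb <= 70:
        return "two people"
    if weight_lb <= 120:
        return "three people"
    return "server lift required"


if __name__ == "__main__":
    for config, weight in {"H100/H200": 238, "MI300X": 251.44}.items():
        print(f"XE9680 {config} ({weight} lb): {lift_recommendation(weight)}")
```

Both XE9680 configurations land far past the three-person band, roughly double the 120-pound threshold.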

Despite its complexity and Dell’s recommendation for specialized service technicians, the XE9680 features remarkably user-friendly service elements. The server’s panels include detailed service instructions and clear graphics, making maintenance procedures surprisingly approachable for experienced IT staff. These visual guides proved invaluable during our hands-on time with the system, allowing us to service various components confidently.

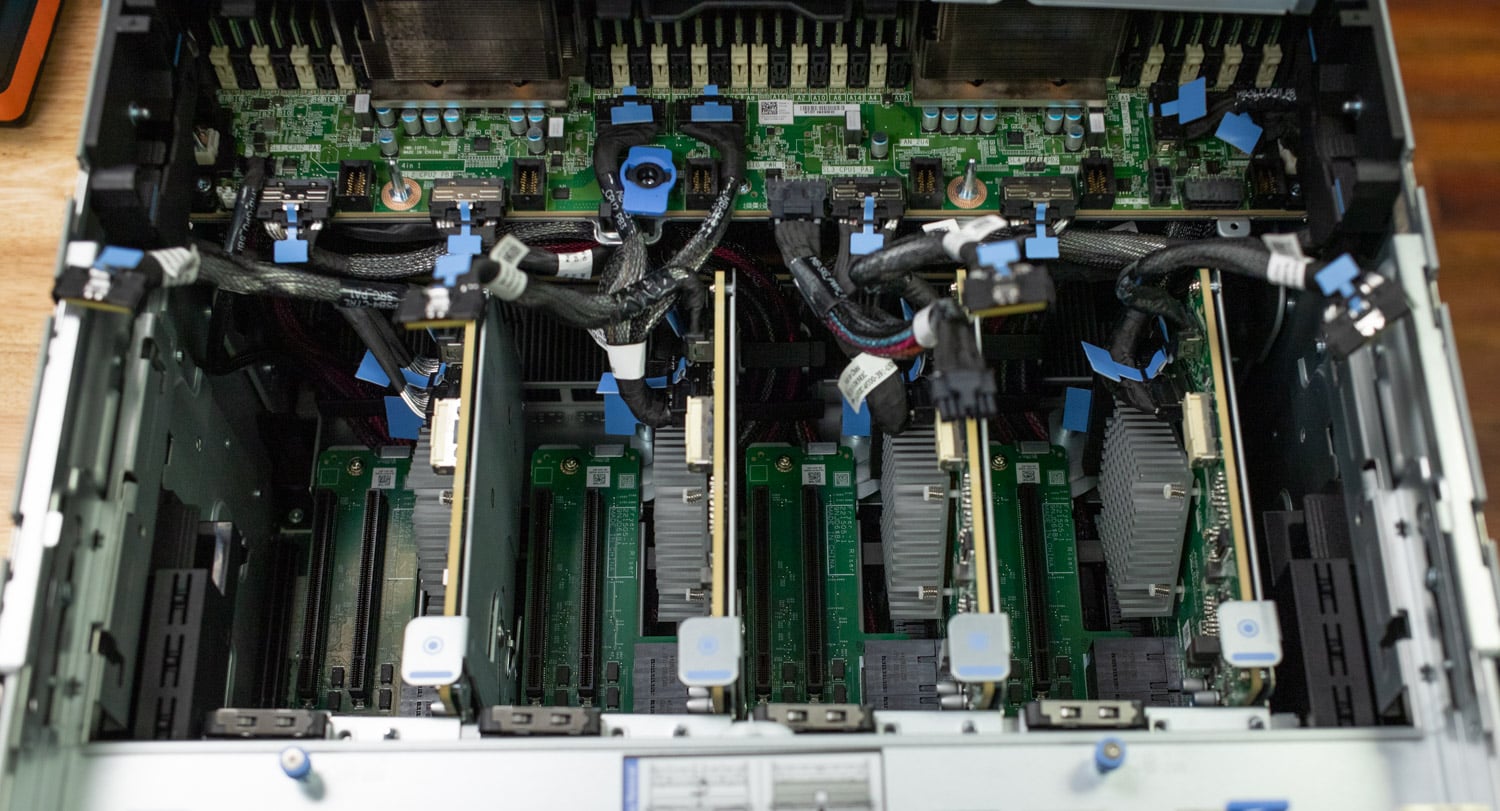

After opening the cover on the PowerEdge XE9680, once you get past the numerous power cables from the small power substation on top, it looks a lot like a PowerEdge R760. Our unit was powered by two Intel Xeon Platinum 8468 processors, each with 48 cores at 2.1GHz. Each processor offers 80 PCIe lanes, which flow through quite a few PCIe switches in this unit to support the GPUs, NICs, and other hardware loaded into the XE9680.

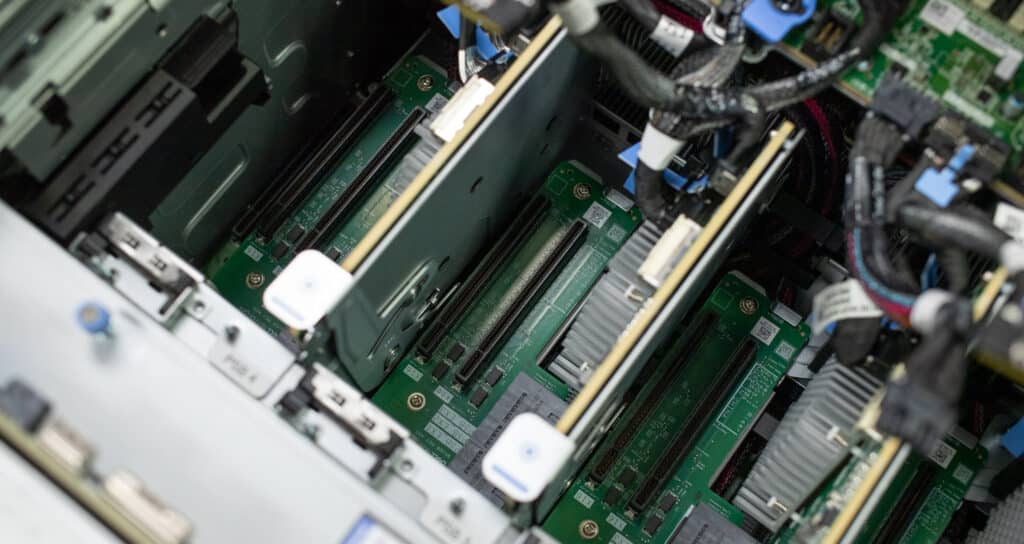

One of the most impressive engineering features is the PCIe Switch Board (PSB) design. These boards provide connectivity for up to 10 additional full-height, half-length PCIe cards (two of which can exceed 75W power draw) and integrate directly with the GPU baseboard. This direct integration enables GPU-direct technology, allowing SSDs and network cards to communicate directly with the GPUs, bypassing the CPU and reducing latency for I/O-intensive AI workloads.

Each expansion slot supports a full PCIe Gen5 x16 interface, including the two lower slots on the far left and right of the layout. While the upper eight slots connect through their own PSBs, the two lower slots connect directly to the PCIe Base Board (PBB), and these two slots also support high-power cards. The PCIe layout varies slightly depending on the GPU chosen for the PowerEdge XE9680: the AMD-equipped models don’t support SmartNICs/DPUs, and the Intel Gaudi3 models have two slots blocked off due to airflow constraints.

Cooling is another area where Dell’s engineering expertise shines. The system employs up to 16 high-performance gold-grade fans: six in the mid-tray and ten at the rear. The PowerEdge XE9680 supports a wide range of installation scenarios, with ambient temperatures from 10°C to 35°C (30°C with the Intel Gaudi3 GPUs). At full tilt, the server moves an impressive 1,200 CFM into the hot aisle.
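Airflow matters because it sets how much hotter the exhaust runs than the intake. A back-of-the-envelope sketch using the sensible-heat relation ΔT = P / (ρ · c_p · V̇); it assumes all electrical power ends up as heat in the airflow, sea-level air density of 1.2 kg/m³, and the full 1,200 CFM, and the power levels in the loop are hypothetical, not measured draws:

```python
CFM_TO_M3_PER_S = 0.000471947  # 1 cubic foot per minute expressed in m^3/s
AIR_DENSITY = 1.2              # kg/m^3, assumed (sea level, about 20 °C)
AIR_CP = 1005.0                # J/(kg*K), specific heat of air at constant pressure


def exhaust_rise_c(heat_w, airflow_cfm):
    """Air temperature rise across the server: dT = P / (rho * cp * volumetric flow)."""
    volumetric = airflow_cfm * CFM_TO_M3_PER_S
    return heat_w / (AIR_DENSITY * AIR_CP * volumetric)


if __name__ == "__main__":
    for heat_kw in (6, 8, 10):  # hypothetical system power levels, not measurements
        rise = exhaust_rise_c(heat_kw * 1000, 1200)
        print(f"{heat_kw} kW at 1,200 CFM -> exhaust ~{rise:.1f} °C above intake")
```

At a hypothetical 10kW, 1,200 CFM yields an exhaust roughly 15°C warmer than the intake; less airflow at the same power raises that figure proportionally.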

This cooling design handles the thermal loads of even the most demanding accelerators, including the AMD MI300X, Intel Gaudi3, and NVIDIA H100, while keeping them within their operating temperatures. The trade-off is noise. Dell publishes a full acoustical specification for the XE9680 across different operating conditions, but it’s safe to say this is a loud platform under load.

Management

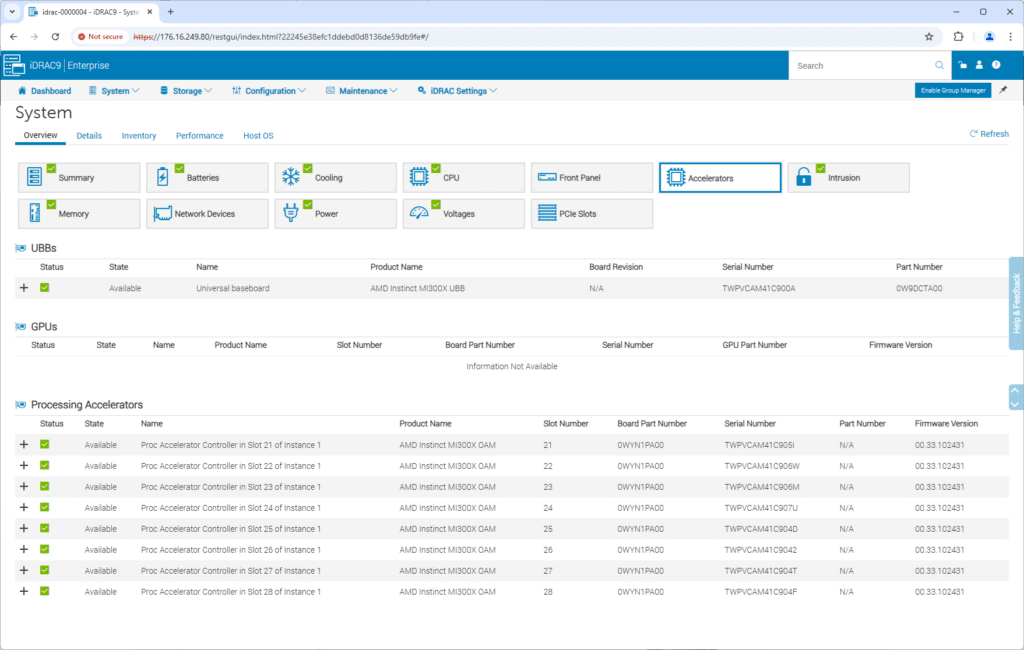

The XE9680’s management capabilities are built around Dell’s enterprise-proven iDRAC9, which provides comprehensive server lifecycle management and monitoring. This iteration of iDRAC brings several AI-optimized features, including detailed GPU telemetry, power consumption analytics, and extensive thermal monitoring designed for high-density AI workloads.

The platform’s management stack is particularly noteworthy for AI infrastructure deployments. Through iDRAC9’s RESTful API with Redfish support, organizations can programmatically monitor and manage GPU utilization, memory bandwidth, and thermal conditions – critical metrics for maintaining optimal AI training and inference performance. The system’s integration with OpenManage Enterprise enables fleet-wide management of multiple XE9680s through a unified console, which is essential for large-scale AI clusters.
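As a concrete example of programmatic monitoring, the Python sketch below reads the standard DMTF Redfish Thermal resource over HTTPS and reports the hottest temperature sensor. It assumes the iDRAC exposes that resource at `/redfish/v1/Chassis/System.Embedded.1/Thermal` (`System.Embedded.1` being the ID iDRAC commonly uses for the server); which sensors appear there, including whether GPU temperatures are listed, depends on platform and firmware. The `IDRAC_HOST`, `IDRAC_USER`, and `IDRAC_PASSWORD` environment variables are hypothetical names chosen for this example.

```python
import base64
import json
import os
import urllib.request

# Assumed path of the standard Redfish Thermal resource on an iDRAC9-managed server.
THERMAL_PATH = "/redfish/v1/Chassis/System.Embedded.1/Thermal"


def redfish_get(host, path, user, password, context=None):
    """GET a Redfish resource over HTTPS with basic auth and return the parsed JSON.

    Pass an ssl.SSLContext trusting your iDRAC's certificate if it is self-signed.
    """
    req = urllib.request.Request(f"https://{host}{path}")
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req.add_header("Authorization", f"Basic {token}")
    req.add_header("Accept", "application/json")
    with urllib.request.urlopen(req, context=context, timeout=30) as resp:
        return json.load(resp)


def summarize_thermal(payload):
    """Extract (name, reading) pairs from a Redfish Thermal payload."""
    temps = [(t.get("Name"), t.get("ReadingCelsius")) for t in payload.get("Temperatures", [])]
    fans = [(f.get("Name"), f.get("Reading")) for f in payload.get("Fans", [])]
    return {"temperatures_c": temps, "fans": fans}


def hottest_sensor(payload):
    """Return (name, °C) for the hottest sensor that reports a reading, or None."""
    readings = [(n, c) for n, c in summarize_thermal(payload)["temperatures_c"] if c is not None]
    return max(readings, key=lambda item: item[1]) if readings else None


if __name__ == "__main__":
    host = os.environ.get("IDRAC_HOST")  # hypothetical variable; nothing runs unless it is set
    if host:
        data = redfish_get(host, THERMAL_PATH, os.environ["IDRAC_USER"], os.environ["IDRAC_PASSWORD"])
        print(hottest_sensor(data))
```

Polling a loop like this across a fleet is the kind of task OpenManage Enterprise centralizes; the sketch only shows the shape of a single Redfish read.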

Security and compliance are foundational elements of the management architecture. The platform implements Silicon Root of Trust and Secured Component Verification, ensuring hardware integrity from boot-up through operation. These features are especially valuable when running sensitive AI workloads or handling proprietary model weights.

The predictive failure analysis capability, powered by CloudIQ integration, uses machine learning to forecast potential hardware issues before they impact workloads. This proactive approach is especially crucial for long-running AI training jobs, where unexpected downtime can result in days of lost computation. When combined with Dell’s ProSupport Plus service, this predictive capability triggers automatic case creation and parts dispatch, often resulting in preventive maintenance before system degradation occurs.

For organizations requiring integration with existing management tools, the XE9680 supports various management frameworks through OpenManage integrations, including ServiceNow and BMC TrueSight, allowing seamless incorporation into established IT service management workflows.

The iDRAC9 interface provides detailed real-time monitoring of critical components through an intuitive dashboard. GPU monitoring displays comprehensive metrics, including temperature, power consumption, and utilization rates across all eight accelerators, essential for optimizing AI workload distribution.

The storage monitoring interface offers instant visibility into drive health, temperature, and performance metrics across the NVMe array, which is particularly valuable when managing high-throughput inference caches and training datasets.

Memory, Storage, and Scale

The eight AMD MI300X GPUs inside the Dell PowerEdge XE9680 represent a significant leap in GPU memory capacity, offering 192GB of HBM3 memory per card compared to NVIDIA H200’s 141GB. This 36% increase in memory capacity isn’t just a number on a spec sheet – it’s critical for large language model deployment.

This massive pool of memory, coupled with the MI300X’s 5.3 TB/s memory bandwidth, enables organizations to run multiple instances of smaller models or partition larger models across GPUs while maintaining high throughput and low latency.

To put this in perspective, Meta’s Llama 3.1 405B model, which requires north of 1TB of VRAM in BF16, can be distributed comfortably across a single XE9680 with MI300X GPUs, without quantization and at its full 128k context length. This avoids the potential quality loss associated with quantization and delivers more tokens per second than splitting the model across two servers.
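The memory math behind this claim can be checked with a back-of-the-envelope estimate: BF16 weights take two bytes per parameter, and each token of context adds a key and a value vector per layer. The sketch assumes Llama 3.1 405B’s published architecture (126 layers, 8 KV heads, head dimension 128) and a nominal 405B parameters; it ignores activations and framework overhead, so real deployments need headroom on top of these numbers.

```python
# Assumed Llama 3.1 405B architecture values, taken from Meta's published model config.
PARAMS = 405e9      # nominal parameter count
LAYERS = 126
KV_HEADS = 8        # grouped-query attention: 8 key/value heads
HEAD_DIM = 128      # 16384 hidden size / 128 attention heads
BF16_BYTES = 2


def weight_bytes(params=PARAMS, dtype_bytes=BF16_BYTES):
    """Memory for the unquantized weights."""
    return params * dtype_bytes


def kv_bytes_per_token(layers=LAYERS, kv_heads=KV_HEADS, head_dim=HEAD_DIM, dtype_bytes=BF16_BYTES):
    """KV cache per token: one K and one V vector per KV head in every layer."""
    return 2 * layers * kv_heads * head_dim * dtype_bytes


def fits(context_tokens, sequences, hbm_gb):
    """Return (GB needed, fits?) for weights plus KV cache; ignores activations and overhead."""
    need = weight_bytes() + kv_bytes_per_token() * context_tokens * sequences
    return need / 1e9, need <= hbm_gb * 1e9


def max_full_context_sequences(hbm_gb, context_tokens=131072):
    """Upper bound on concurrent full-length sequences once the weights are resident."""
    free = hbm_gb * 1e9 - weight_bytes()
    return max(0, int(free // (kv_bytes_per_token() * context_tokens)))


if __name__ == "__main__":
    for name, hbm in {"8x MI300X": 8 * 192, "8x H100": 8 * 80}.items():
        need_gb, ok = fits(131072, 1, hbm)
        print(f"{name}: need ~{need_gb:.0f} GB of {hbm} GB -> fits: {ok}")
    print("max 128k sequences on 8x MI300X:", max_full_context_sequences(8 * 192))
```

Under these assumptions the weights need about 810GB and a single 128k-token sequence adds roughly 68GB of KV cache, well inside the 1,536GB pool of eight MI300X GPUs but beyond the 640GB of eight 80GB H100s.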

To maximize our storage footprint, we used Solidigm 61.44TB drives as an extension of memory, bridging the gap between high-speed GPU memory and traditional storage. The SSDs excel at storing key-value (KV) cache entries during inference, effectively extending the GPU’s memory capacity for long-context generation. Their massive capacity and NVMe performance also make them well suited to fast model-weight loading, enabling efficient model switching and warm starts.
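The idea of using SSDs as an overflow tier for KV cache can be illustrated with a two-level LRU cache: entries evicted from a small fast tier spill to a large slow tier instead of being discarded, and a later hit promotes them back. This toy Python version is hypothetical and is not how any particular inference engine implements offload; both tiers are in-memory containers standing in for GPU HBM and NVMe.

```python
from collections import OrderedDict


class TieredKVCache:
    """Toy two-tier cache: LRU fast tier ('GPU') that spills to a slow tier ('SSD')."""

    def __init__(self, fast_capacity):
        self.fast_capacity = fast_capacity
        self.fast = OrderedDict()  # stands in for GPU HBM, ordered oldest -> newest
        self.slow = {}             # stands in for the NVMe SSD tier

    def put(self, key, value):
        self.fast[key] = value
        self.fast.move_to_end(key)
        self.slow.pop(key, None)   # keep a single copy of each entry
        while len(self.fast) > self.fast_capacity:
            old_key, old_value = self.fast.popitem(last=False)  # least recently used
            self.slow[old_key] = old_value                      # spill instead of drop

    def get(self, key):
        if key in self.fast:
            self.fast.move_to_end(key)
            return self.fast[key]
        if key in self.slow:
            value = self.slow.pop(key)
            self.put(key, value)   # promote back to the fast tier
            return value
        return None                # miss: the prefix would have to be recomputed


if __name__ == "__main__":
    cache = TieredKVCache(fast_capacity=2)
    for prefix in ("chat-a", "chat-b", "chat-c"):  # hypothetical prefix keys
        cache.put(prefix, f"kv-blocks-for-{prefix}")
    print("fast:", list(cache.fast), "slow:", list(cache.slow))
```

The payoff of the slow tier is that a miss in GPU memory becomes a read from flash rather than a full recomputation of the prompt.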

In applications like the Metrum AI deployment we detail below, the SSDs pull double duty as the storage backend for vector databases, delivering the performance needed for real-time similarity searches while maintaining the capacity for extensive embedding storage.
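Under the hood, a similarity search ranks stored embeddings by how close they are to a query embedding. The sketch below does this exhaustively with cosine similarity in pure Python. Milvus and similar databases typically rely on approximate indexes such as HNSW or IVF to avoid scanning every vector, so treat this as the exact baseline those indexes approximate, not Milvus’s implementation; the document IDs and vectors are made up.

```python
import heapq
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query, corpus, k=3):
    """Exact (brute-force) top-k search over a {doc_id: vector} mapping."""
    scored = ((cosine(query, vec), doc_id) for doc_id, vec in corpus.items())
    return [(doc_id, score) for score, doc_id in heapq.nlargest(k, scored)]


if __name__ == "__main__":
    corpus = {  # hypothetical 3-dimensional embeddings; real models use hundreds of dimensions
        "note-1": [0.9, 0.1, 0.0],
        "note-2": [0.0, 1.0, 0.2],
        "note-3": [0.7, 0.3, 0.1],
    }
    print(top_k([1.0, 0.0, 0.0], corpus, k=2))
```

Exhaustive search scales linearly with the number of stored vectors, which is why large embedding collections depend on both index structures and fast storage underneath them.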

The value of these high-capacity drives extends beyond inference to training workflows. They provide ideal local storage for queuing training batches, reducing network overhead by keeping data closer to the compute resources. During training, these drives excel at storing model checkpoints locally, which is critical for preserving training progress and enabling quick recovery. This local storage strategy also helps optimize network utilization by keeping checkpoint and batch traffic off the network during training.
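How many checkpoints fit locally depends on model size and what each checkpoint contains. The sketch assumes a common mixed-precision Adam setup, where a full training checkpoint holds BF16 weights (2 bytes per parameter), FP32 master weights (4), and Adam’s two moment estimates (4 + 4); the 70B-parameter model and the raw 8 × 61.44TB capacity are illustrative choices, and real checkpoint formats vary by framework.

```python
# Assumed bytes per parameter; actual checkpoint formats vary by framework and optimizer.
BYTES_PER_PARAM = {
    "weights only (BF16)": 2,
    "full training state (BF16 + FP32 master + Adam m, v)": 2 + 4 + 4 + 4,
}


def checkpoint_gb(params, bytes_per_param):
    """Approximate checkpoint size in decimal GB."""
    return params * bytes_per_param / 1e9


def checkpoints_that_fit(capacity_tb, params, bytes_per_param):
    """Whole checkpoints that fit in a raw capacity (decimal TB), ignoring filesystem overhead."""
    return int(capacity_tb * 1e12 // (params * bytes_per_param))


if __name__ == "__main__":
    params = 70e9            # illustrative 70B-parameter model
    capacity_tb = 8 * 61.44  # eight 61.44TB drives, raw
    for label, bpp in BYTES_PER_PARAM.items():
        print(f"{label}: ~{checkpoint_gb(params, bpp):.0f} GB each, "
              f"{checkpoints_that_fit(capacity_tb, params, bpp)} fit locally")
```

Under these assumptions a full 70B training checkpoint is about 980GB, and roughly 500 of them fit on the eight drives before anything has to move off the node.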

While 61.44TB in each of the XE9680’s eight bays already sounds impressive, there’s much more capacity coming. With Solidigm’s newly announced 122.88TB drive, the XE9680’s storage density can be doubled to nearly a petabyte, allowing for further training optimizations and longer-lived inference caches.
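The capacity figures are straightforward multiplication across the eight bays. A short sketch that also converts to binary TiB, since operating systems often report capacity that way; these are raw numbers before any RAID or filesystem overhead:

```python
BAYS = 8  # 2.5-inch NVMe bays in the tested configuration


def raw_capacity_tb(drive_tb, bays=BAYS):
    """Raw decimal capacity across all bays (1TB = 10**12 bytes)."""
    return drive_tb * bays


def tb_to_tib(tb):
    """Convert decimal terabytes to binary tebibytes (2**40 bytes)."""
    return tb * 1e12 / 2**40


if __name__ == "__main__":
    for drive in (61.44, 122.88):
        tb = raw_capacity_tb(drive)
        print(f"8x {drive}TB = {tb:.2f} TB raw (~{tb_to_tib(tb):.1f} TiB)")
```

Eight 61.44TB drives give 491.52TB raw, and eight 122.88TB drives give 983.04TB, just shy of a petabyte.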

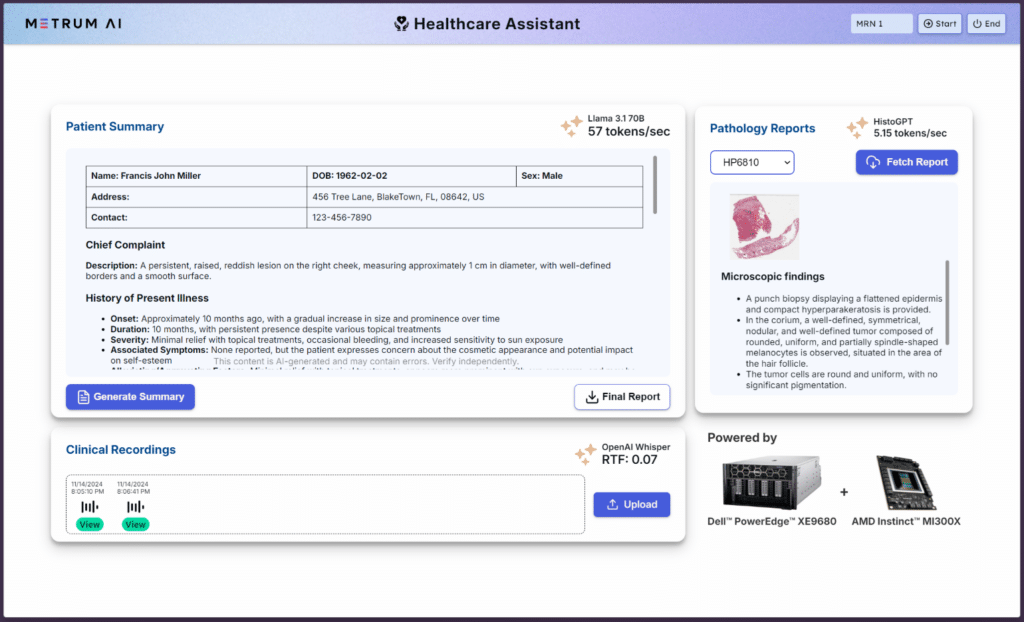

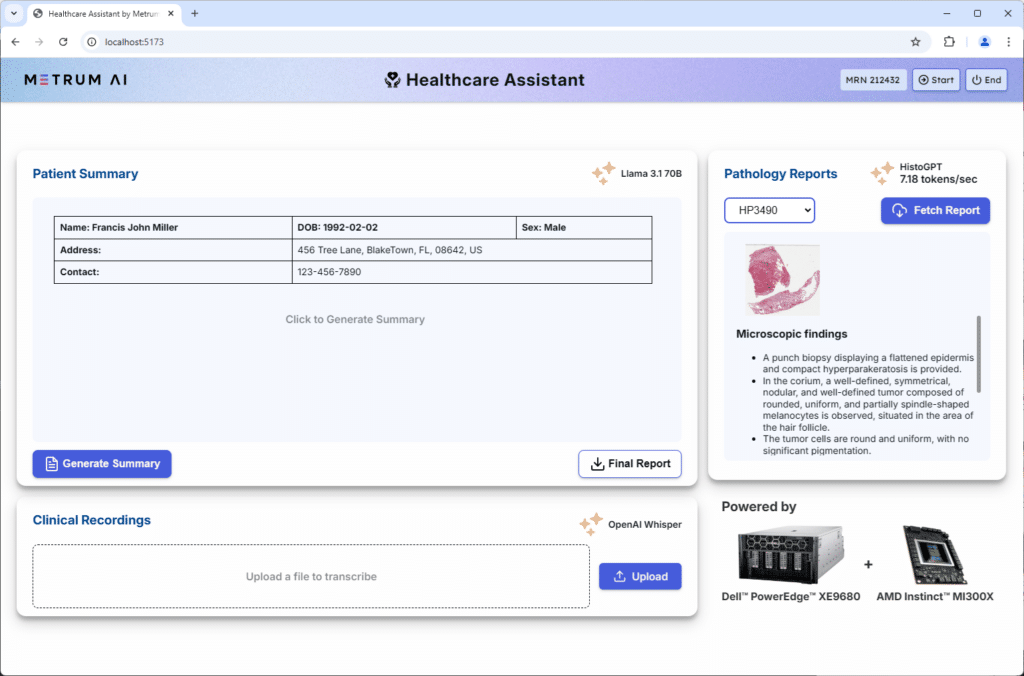

Metrum AI Healthcare Assistant – Revolutionizing Patient Care

The healthcare sector consistently faces a challenge in managing time-consuming patient documentation and record management, which often detracts from direct patient care. Metrum AI’s Healthcare Assistant, deployed on Dell PowerEdge XE9680 servers with AMD accelerators, exemplifies how advanced AI infrastructure can transform healthcare workflows, enhancing efficiency and improving patient outcomes.

The system uses Llama 3.1 70B Instruct as its primary language model, known for its strong comprehension of medical contexts, which allows it to process complex patient data. The language model is paired with the gte-v1.5 embedding model and the Milvus vector database, providing a robust foundation for the natural language processing and contextual understanding that handling medical data requires.

Metrum AI’s Healthcare Assistant also takes a multimodal approach, incorporating HistoGPT for histopathology image analysis and OpenAI’s Whisper for real-time transcription of physician notes. Together, these models streamline clinical workflows, allowing doctors to speak naturally while the system transcribes, categorizes, and integrates information into patient records in real time.

Metrum AI recognizes that even though individual patient data may be relatively small, the combined storage demands of high-traffic hospitals can escalate to hundreds of terabytes. The Dell PowerEdge XE9680 can address this with its local onboard NVMe storage. Our configuration offers eight 2.5″ U.2 NVMe storage bays operating at PCIe Gen4 speeds. While we tested the XE9680 with 61.44TB Solidigm D5-P5336 QLC SSDs, this capacity can scale even further. Solidigm recently launched its new D5-P5336 122.88TB QLC model, which doubles the capacity of its already massive SSDs while maintaining the same performance.

Metrum provided estimates of how patient data accumulates over time under different scenarios. Converting those estimates into total storage capacity shows how many additional patients a unit could support using the highest-capacity SSDs. Dividing each SSD’s usable capacity (57TB for the 61TB SSD and 114TB for the 122TB SSD) by the estimated data footprint per patient shows that denser SSDs meaningfully increase how much patient data the server can hold per year.

| Total Annual Estimate per Patient | Notes | Estimated Storage | Patients per 61TB SSD | Patients per 122TB SSD |
|---|---|---|---|---|
| Enhanced Storage Needs (DICOM images/variants, augmentations, processed copies, audio transcriptions, detailed records) | Includes multiple image copies, audio transcriptions, and records | ~8.4 GB | 6,786 | 13,571 |
| High Storage Scenario (Heavy Processing, Frequent Visits) | Frequent visits, high image processing requirements | ~10.5 GB | 5,429 | 10,857 |

While the 1-year patient counts look high, patient data isn’t static: new data is captured and new visits are scheduled, steadily growing the demand for storage. This is why storage plays such a significant role in the medical imaging space, where additional capacity directly determines how many patients a solution can effectively support.

| Total 10-Year Storage Estimate per Patient | Notes | Estimated Storage | Patients per 61TB SSD | Patients per 122TB SSD |
|---|---|---|---|---|
| Enhanced Scenario (Multiple Copies, Detailed Records, Audio, Augmentations) | Expanded records, frequent imaging, and processing | ~84 GB | 679 | 1,357 |
| High Scenario (Heavy Processing, Comprehensive History) | Maximum processing and storage needs over 10 years | ~105 GB | 543 | 1,086 |
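The per-drive patient counts in both tables come from dividing usable capacity by the per-patient footprint. A short sketch reproducing that arithmetic, assuming decimal units (1TB = 1,000GB) and rounding to the nearest patient:

```python
USABLE_TB = {"61.44TB SSD": 57, "122.88TB SSD": 114}  # usable capacities quoted above
FOOTPRINT_GB = {                                      # per-patient estimates from Metrum
    ("1 year", "Enhanced"): 8.4,
    ("1 year", "High"): 10.5,
    ("10 years", "Enhanced"): 84,
    ("10 years", "High"): 105,
}


def patients_per_drive(usable_tb, gb_per_patient):
    """Patients whose data fits on one drive, rounded to the nearest patient."""
    return round(usable_tb * 1000 / gb_per_patient)


if __name__ == "__main__":
    for (period, scenario), gb in FOOTPRINT_GB.items():
        counts = {drive: patients_per_drive(tb, gb) for drive, tb in USABLE_TB.items()}
        print(f"{period:8s} {scenario:8s} ~{gb} GB/patient -> {counts}")
```

Because the count scales linearly with usable capacity, doubling the drive size doubles the patient count in every scenario.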

The Dell PowerEdge XE9680, fitted with AMD MI300X accelerators and integrated with Metrum AI’s Healthcare Assistant, provides a scalable and efficient solution for healthcare providers. By automating time-consuming tasks and enabling rapid access to critical insights, this setup allows clinicians to focus more on patient care while managing growing demands. Through seamless integration of AI components across language, image, and voice modalities, the Healthcare Assistant represents a significant advancement in AI-driven healthcare solutions, reducing administrative burdens and improving overall patient outcomes.

Conclusion

In the evolving landscape of enterprise AI, the Dell PowerEdge XE9680 sets a new standard, demonstrating how purpose-built hardware can transform a range of industries. The Metrum AI Healthcare Assistant implementation showcases just one of many possibilities. Imagine financial institutions running complex risk analysis models in real time, or research laboratories processing vast datasets for drug discovery, all powered by this remarkable system.

The XE9680 offers exceptional versatility in GPU options, from NVIDIA’s H100s to AMD’s MI300X and Intel’s Gaudi3. This flexibility, combined with its robust memory capacity, storage options, and innovative cooling, makes it more than just an AI server: it’s a complete enterprise computing platform capable of handling the most demanding workloads across a range of applications, whether in the data center or a medical office.

From a storage perspective, the server has just eight NVMe bays, but thanks to Solidigm’s 61.44TB SSDs, we can fit nearly half a petabyte into the system as working space for the healthcare assistant detailed above. If that’s not enough, Solidigm just announced that it has doubled the capacity of the D5-P5336 to 122.88TB, meaning systems like this could fit roughly a petabyte of flash storage adjacent to their accelerators, enabling efficient AI workloads.

Dell’s engineering shines through in every aspect of the XE9680, from its thoughtful power management features to its user-friendly serviceability. The platform’s ability to maintain operation even during partial power supply failures demonstrates Dell’s deep understanding of AI requirements, where system reliability and continuous operation are paramount.

Backed by Dell’s comprehensive support infrastructure and commitment to advancing AI capabilities through various initiatives, the PowerEdge XE9680 stands as a testament to innovation in enterprise computing. Thanks to its combination of raw computational power, architectural flexibility, and enterprise-grade reliability, it has received a renewed Best of 2024 award.


This review was co-authored by Kevin O’Brien and Divyansh Jain