In an era dominated by data-driven applications, the traditional compute landscape, long dictated by CPUs and GPUs, is undergoing a paradigm shift. As businesses and institutions move into more complex computing environments, especially with the burgeoning fields of Artificial Intelligence (AI), big data analytics, and cloud-native applications, the demands on processing capabilities have transformed. The Data Processing Unit (DPU) brings another element to bear, but DPU lifecycle management in the enterprise is a little more complicated than it sounds.

NVIDIA BlueField DPU

DPUs, often referred to as SmartNICs or infrastructure processing units (IPUs), serve a pivotal role by becoming the nexus between compute (CPUs), graphics (GPUs), and storage networking infrastructure. Deployed in public cloud and hyperscale data centers for years, DPUs isolate resident workloads from networking, security, storage, and other infrastructure operations typically associated with data center functions. This enhanced capability frees CPUs and GPUs from these tasks, allowing them to focus on their primary function of computing and rendering.

The introduction of DPUs carries with it a new set of challenges: how to manage, optimize, and ensure the seamless operation of these units in tandem with existing infrastructure. DPU management becomes crucial because the hardware adds a new layer of complexity to the server. Proper management ensures that DPUs are utilized to their full potential, offering benefits like reduced latency, increased throughput, and better overall system efficiency.

By integrating DPUs into the broader computational ecosystem and ensuring effective DPU management, businesses and institutions can unlock new levels of performance, agility, and scalability. As the world continues its relentless march towards more data-centric operations, embracing and managing DPUs will be fundamental to maintaining a competitive edge in the digital landscape.

VMware, The Driving Force Behind Interest in DPU Technology

DPUs have emerged as a transformative technology in the computing landscape, garnering significant attention and traction in the market today. As organizations grapple with the explosive growth of data, the increasing complexity of workloads, and the demand for higher performance and efficiency, DPUs present a powerful solution.

VMware had been working to bring its software stack to DPUs, but the proprietary, vendor-specific software associated with DPU hardware made the integration task challenging. The doors were flung open when VMware extended vSphere support to the DPU, enabling customers to bring efficiencies typically associated with the cloud to their own data centers using their preferred virtualization stack. DPUs are also a fundamental part of vSAN 8, offloading security and networking tasks.

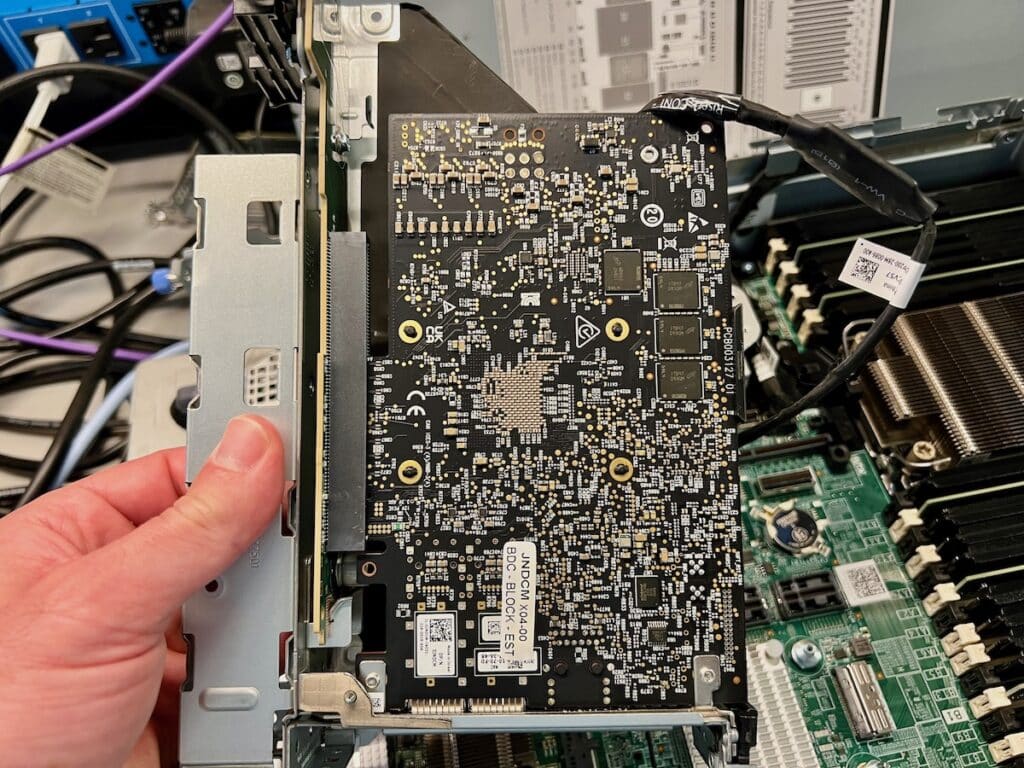

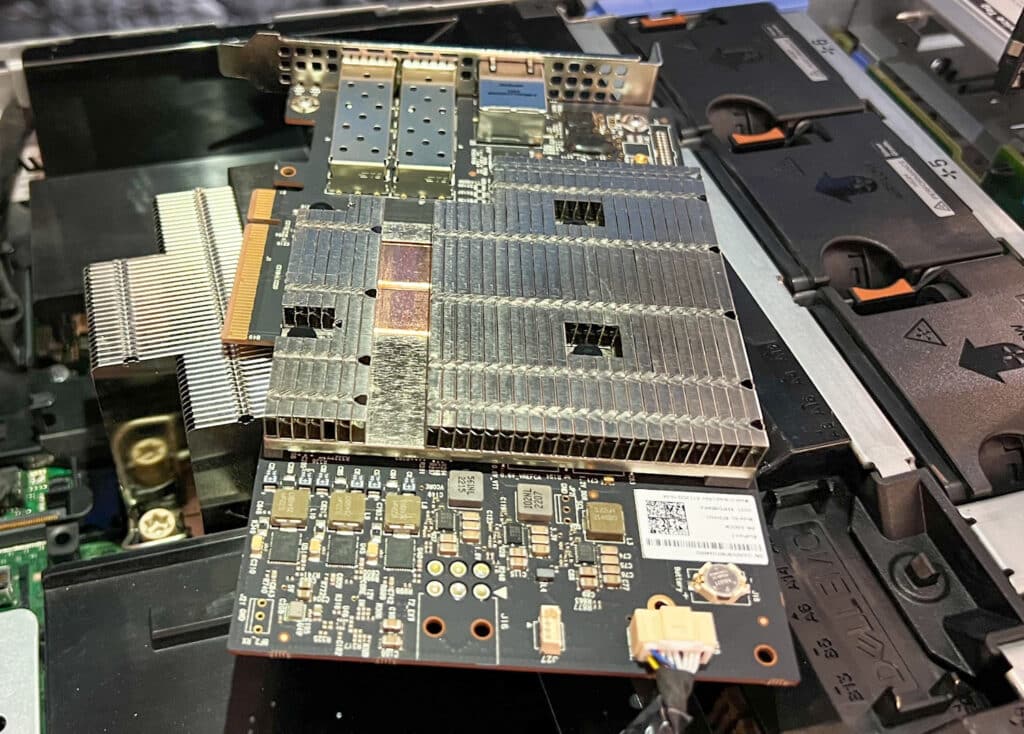

NVIDIA BlueField DPU in Riser 1

VMware clearly intends to lean on DPUs even more over time; it’s not out of the realm of possibility that certain vSphere nodes will one day be entirely DPU-powered, with no x86 at all. Already, though, VMware is touting performance improvements thanks to DPUs.

VMware, running a Redis key-value store on vSphere 8, found that a DPU-enabled host achieved performance similar to a non-DPU-enabled host with 20 percent fewer CPU cores. In another test, the DPU-enabled host achieved 36 percent better throughput and a 27 percent reduction in transaction latency.
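To put those percentages in concrete terms, here is a minimal arithmetic sketch. The function names and the 32-core baseline are illustrative assumptions, not part of VMware's test setup; only the 20, 36, and 27 percent figures come from the results quoted above. Because the two tests were separate, the sketch does not combine them.

```python
def cores_for_same_performance(baseline_cores: float, core_reduction: float = 0.20) -> float:
    """Cores a DPU-enabled host needs to match the non-DPU baseline host."""
    return baseline_cores * (1.0 - core_reduction)


def per_core_gain(core_reduction: float = 0.20) -> float:
    """Work done per core, relative to baseline, when fewer cores match it."""
    return 1.0 / (1.0 - core_reduction)


def relative_throughput(improvement: float = 0.36) -> float:
    """Throughput of the DPU-enabled host as a multiple of the baseline."""
    return 1.0 + improvement


def relative_latency(reduction: float = 0.27) -> float:
    """Transaction latency of the DPU-enabled host as a fraction of the baseline."""
    return 1.0 - reduction


if __name__ == "__main__":
    baseline = 32  # hypothetical core count, chosen only for illustration
    print(f"Cores needed for equal performance: {cores_for_same_performance(baseline):.1f}")
    print(f"Effective per-core gain: {per_core_gain():.2f}x")
    print(f"Throughput vs. baseline: {relative_throughput():.2f}x")
    print(f"Latency vs. baseline: {relative_latency():.2f}x")
```

On the hypothetical 32-core host, matching the baseline takes about 25.6 cores, which works out to each core doing roughly 1.25 times the application work. Those are the cycles the DPU hands back to workloads.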

With DPU interest rising thanks to VMware, vendors like Dell have had to sort out ways to include the DPU in their designs without forgetting about DPU lifecycle management. The thing is, each DPU is essentially its own computer, with management designed to be done locally over the Ethernet or other admin port. But that’s not consistent with how enterprises want to manage hardware lifecycle, so when Dell went to adopt DPUs, they had to get a little creative.

Dell PowerEdge, DPU and iDRAC

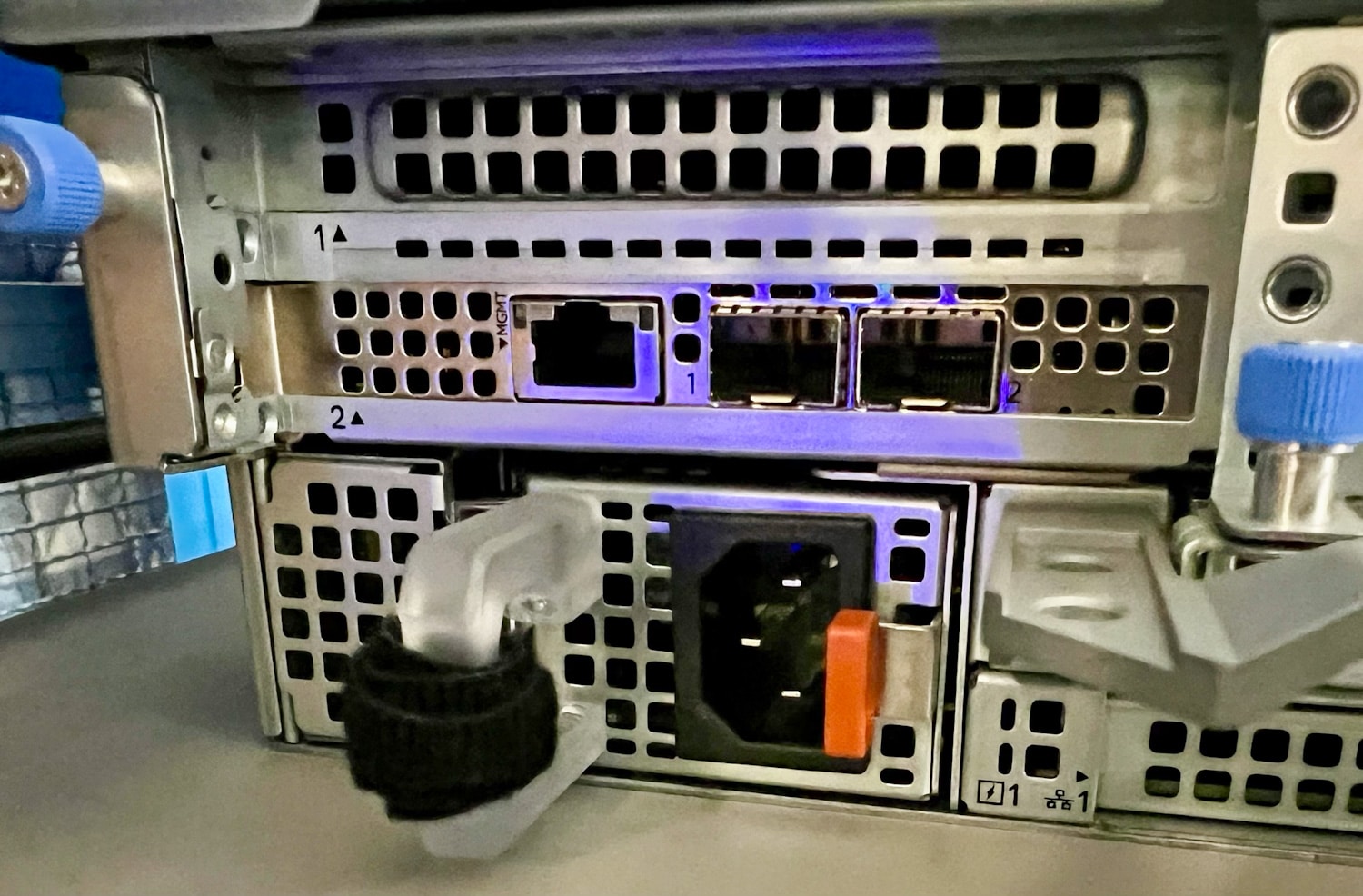

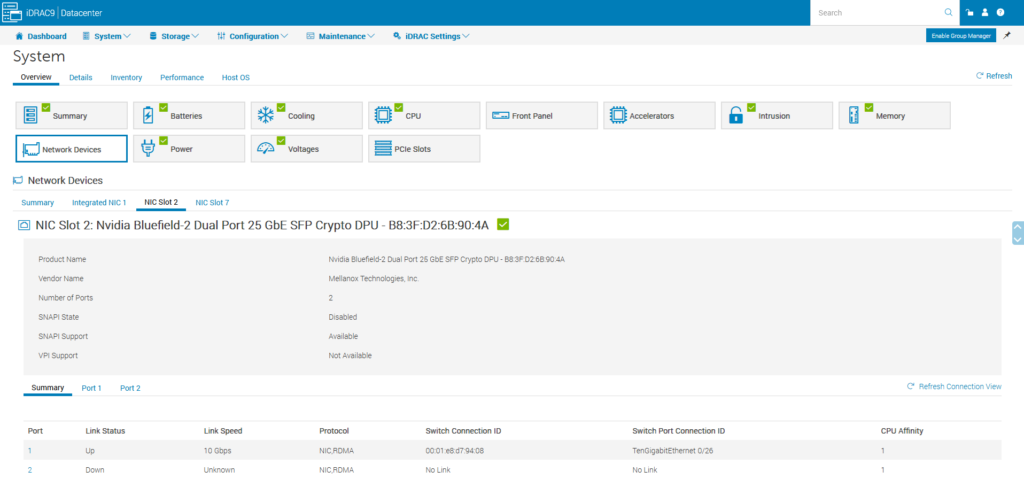

When you look at the port side of an NVIDIA BlueField DPU, it looks just like a standard NVIDIA ConnectX NIC, with an extra Ethernet port for management. As noted, though, enterprises want to manage all server components through a common lifecycle management console. In the case of Dell, that means iDRAC, so Dell had to engineer the hardware connections required for iDRAC to recognize the DPU.

NVIDIA BlueField DPU Ports

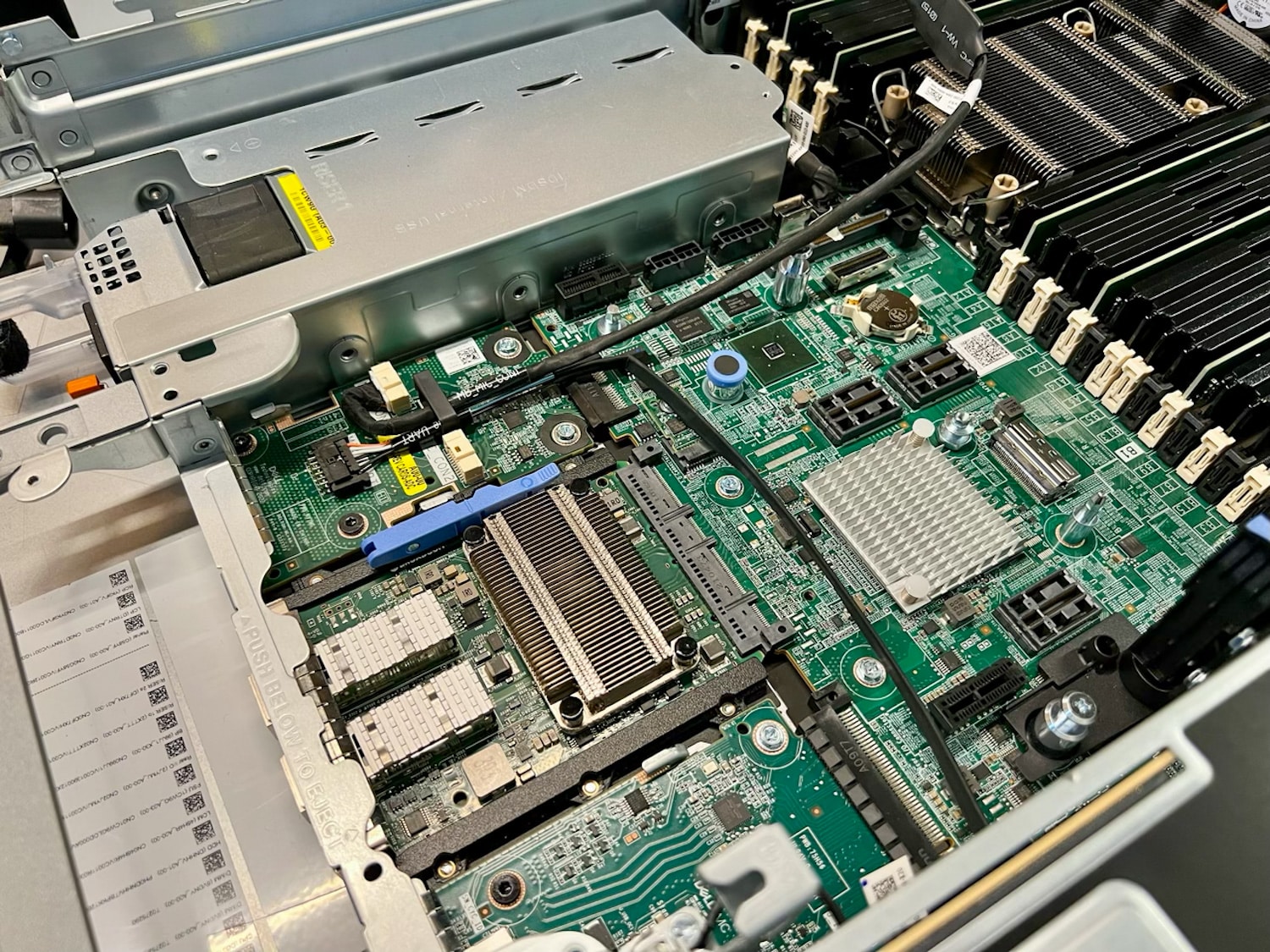

The DPU itself simply slots into the risers (Riser 1A Slot 2, to be specific), as any PCIe card would. That gives the DPU power and gets it on the system bus. But the card can’t be managed over PCIe the way a GPU can, at least not at the moment. What’s required is an additional hardware element to connect those dots. In the photo below of an R750 motherboard, the astute will notice a different card in the slot where the LOM (NIC) typically goes.

Dell PowerEdge Motherboard with Management Interface Card (MIC)

As we take a little closer look at the Management Interface Card (MIC), we can see it sitting in the space where the onboard LOM typically goes. The MIC enables a Network Controller Sideband Interface (NC-SI) between the DPU and the server BMC.

Dell PowerEdge Management Interface Card (MIC)

DPU Lifecycle Management with iDRAC

Getting the most from DPU technology takes the right management tooling. The integrated Dell Remote Access Controller (iDRAC) is a comprehensive management tool designed for Dell PowerEdge servers. It offers advanced features for remote server monitoring, administration, and control.

iDRAC provides real-time monitoring of server health parameters, allowing administrators to monitor critical components like CPU, memory, storage, power, and temperature. iDRAC simplifies updating firmware, including BIOS, RAID controllers, and network adapters. This centralized interface lets administrators manage and deploy firmware updates across multiple servers, ensuring consistency and reducing manual effort.

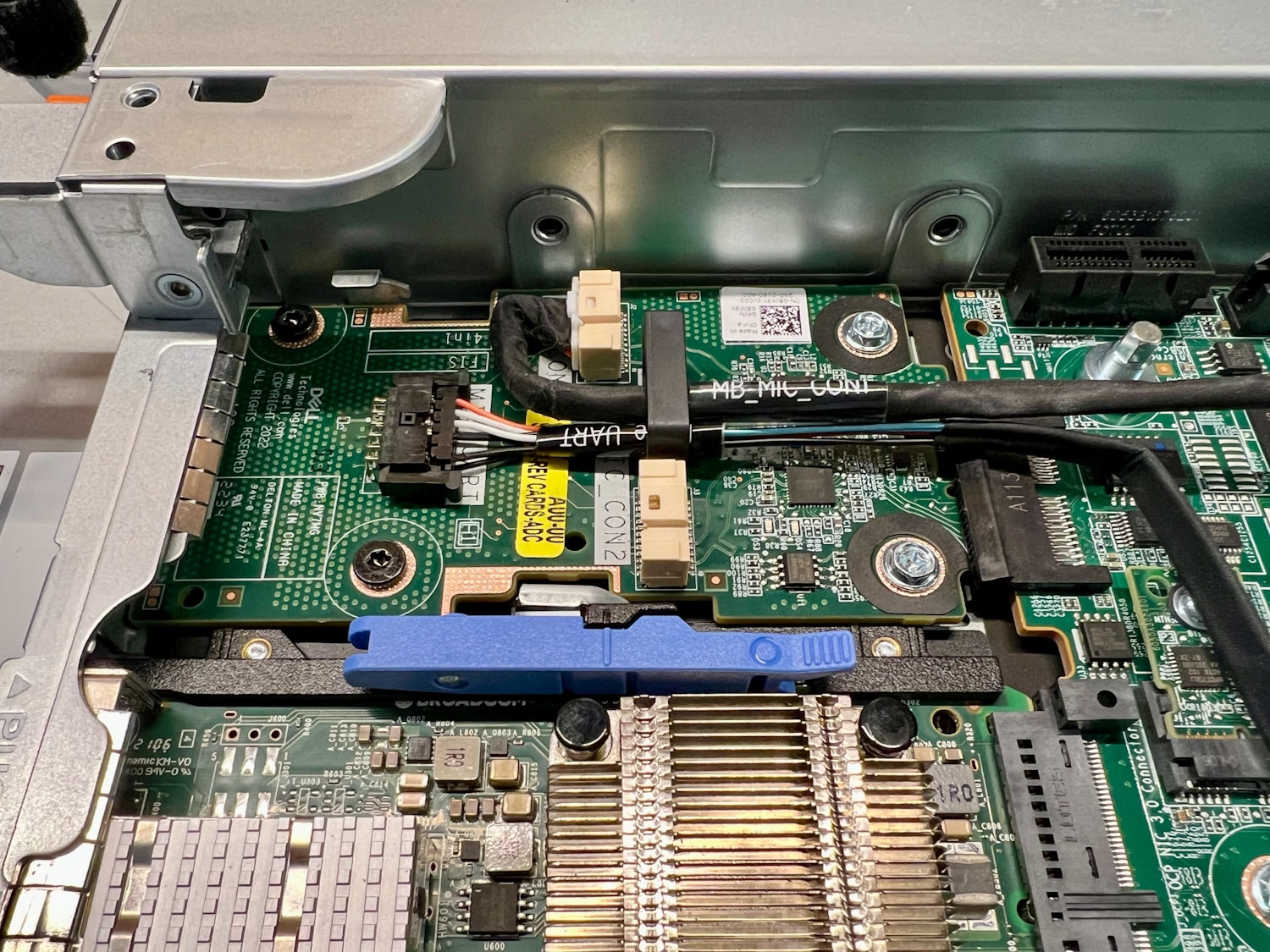

Thanks to the LOM-for-MIC swap and an extra cabling harness, Dell can manage the DPU through iDRAC, bringing DPU lifecycle management in line with all other key components in the PowerEdge server. While this appears quite simple to the iDRAC admin, the work behind the scenes to enable this ease of management for the DPU is impressive.
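For admins who script against iDRAC, one way to check what the controller reports is its Redfish interface. The following is a minimal sketch, not Dell's documented procedure: it walks the standard Redfish firmware inventory collection and filters entries by name. The helper names, the `"bluefield"` keyword, and the assumption that the DPU appears in that collection under a matching name are all hypothetical; verify against your own system.

```python
import base64
import json
import urllib.request

# Standard Redfish collection listing installed firmware components.
FIRMWARE_INVENTORY = "/redfish/v1/UpdateService/FirmwareInventory"


def redfish_get(host: str, path: str, user: str, password: str) -> dict:
    """GET one Redfish resource over HTTPS using HTTP Basic authentication."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(
        f"https://{host}{path}",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
    )
    # Default TLS verification applies; a BMC with a self-signed certificate
    # will fail here until its certificate is trusted.
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def fetch_firmware_inventory(host: str, user: str, password: str) -> list:
    """Follow each member link in the collection and return the full entries."""
    collection = redfish_get(host, FIRMWARE_INVENTORY, user, password)
    return [
        redfish_get(host, member["@odata.id"], user, password)
        for member in collection.get("Members", [])
    ]


def match_components(entries: list, keyword: str) -> list:
    """Return (Name, Version) pairs whose Name contains keyword, case-insensitively."""
    needle = keyword.lower()
    return [
        (entry.get("Name", ""), entry.get("Version", ""))
        for entry in entries
        if needle in entry.get("Name", "").lower()
    ]


if __name__ == "__main__":
    # Hypothetical entries for illustration only; real names and versions will differ.
    sample = [
        {"Name": "BIOS", "Version": "0.0.0"},
        {"Name": "Example BlueField DPU", "Version": "0.0.0"},
    ]
    print(match_components(sample, "bluefield"))
```

Against a live controller, `match_components(fetch_firmware_inventory(host, user, password), "bluefield")` would perform the same filter on real data. The filtering is kept separate from the HTTP calls so it can be tested offline.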


Final Thoughts

It’s a bit premature to say every VMware vSphere or vSAN node should be configured with DPUs to take advantage of the vSphere Distributed Services Engine, but it’s hard to overlook the benefits. With the increasing volume and complexity of modern workloads and the demand for new software-defined services, server CPUs are being stretched, leaving fewer compute cycles for workload processing. DPUs are already broadly deployed in hyperscalers to tackle more infrastructure functions and free up CPU cycles for revenue-generating workloads.

NVIDIA BlueField DPU Management Port Cabled

While the benefits of DPUs to vSphere are obvious, adding new accelerators to servers creates new challenges that must be solved; in this case, that’s DPU lifecycle management. Thanks to some creative engineering by Dell, DPUs can be connected to the native BMC and managed through traditional lifecycle tools like iDRAC. This makes a big difference in adopting new technologies in the data center and should ease DPU adoption for Dell’s customers.

