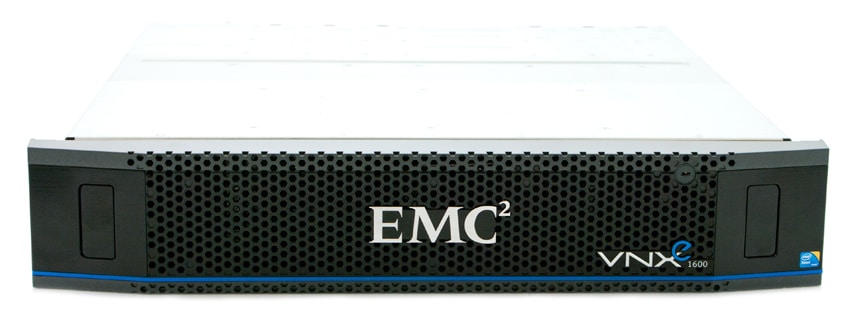

The VNXe1600 is a 2U storage array from EMC that supports 10G iSCSI and 16Gb FC and integrates VNX2 controllers and hybrid storage into a package offered at street prices below $9,000. These prices are unprecedented for a complete VNX storage solution, marking another step deeper into the SMB market for EMC. This direction was first signaled with the launch of the VNXe3200, the basis for this new SMB-oriented VNX2 array.

The VNXe1600 is powered by Intel Xeon E5 2.6GHz dual-core CPUs in both of the array’s Storage Processor modules and, like the VNXe3200, offers both Fibre Channel and 10GbE connectivity options. When we first had the opportunity to work hands-on with the VNXe3200, it was the first and only VNX offering to utilize this 2U form factor that incorporated the controllers with the storage. The VNXe1600 underscores EMC’s intention to bring VNX to a new market segment by streamlining the VNXe3200 into an offering even more affordable to midmarket customers.

The VNXe1600 is available in two different 2U chassis options: a 25-drive array with 2.5” bays and a 12-drive array with 3.5” bays. Both options incorporate dual VNX Storage Processors (SPs). Each VNXe1600 SP includes two 6Gb/s x 4 SAS ports for expansion via EMC Disk Array Enclosures (DAEs). Mirroring the VNXe1600 array chassis configurations, the available DAEs include a 12-drive enclosure with 3.5” bays and a 25-drive enclosure with 2.5” bays. The VNXe1600 can manage up to 200 disks depending on the expansion enclosures used. DAE types can be mixed within a deployment.
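
To make the 200-drive ceiling concrete, the following minimal sketch counts drive slots for one Disk Processor Enclosure plus a mix of DAEs. Only the 12- and 25-slot enclosure sizes and the 200-drive maximum come from the specifications; the `total_slots` helper and the choice to reject configurations above the limit are illustrative assumptions, not EMC tooling.

```python
# Slot counts per 2U enclosure and the array-wide drive limit, as quoted in this review.
SLOTS = {"2.5in": 25, "3.5in": 12}
MAX_DRIVES = 200


def total_slots(dpe: str, daes: list[str]) -> int:
    """Return total drive slots for one DPE plus the listed DAEs (hypothetical helper)."""
    total = SLOTS[dpe] + sum(SLOTS[d] for d in daes)
    if total > MAX_DRIVES:
        # Assumption: treat any layout beyond the stated maximum as invalid.
        raise ValueError(f"{total} slots exceeds the {MAX_DRIVES}-drive limit")
    return total


# A 25-drive DPE with seven 25-drive DAEs lands exactly on the 200-drive maximum.
print(total_slots("2.5in", ["2.5in"] * 7))
# Enclosure types can be mixed: 12 + 25 + 12 slots.
print(total_slots("3.5in", ["2.5in", "3.5in"]))
```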

Side by Side: VNXe1600 vs. VNXe3200

| Detail | VNXe1600 | VNXe3200 |
| --- | --- | --- |
| Chassis | 3.5″ x 12-drive DPE; 2.5″ x 25-drive DPE | 3.5″ x 12-drive DPE; 2.5″ x 25-drive DPE |
| CPU | Dual-core 2.6 GHz Ivy Bridge | Quad-core 2.2 GHz Sandy Bridge |
| Physical memory size | 8GB (1 x 8GB DIMM) | 24GB (3 x 8GB DIMMs) |
| Bezel | Standard bezel, no blue light | EMC-branded bezel, with blue light |
| Integrated I/O | 2-port Converged Network Adapter (QLogic): 2 x 8Gb or 16Gb FC, or 2 x 10GbE iSCSI, or 2 x TwinAX cable | 4-port 10GbE copper RJ45 ports |
| eSLIC | 4-port 8Gb FC; 4-port 1GbE iSCSI; 4-port 10Gb iSCSI | 4-port 8Gb FC; 4-port 1GbE iSCSI; 4-port 10Gb iSCSI |
| DAEs | 2.5″ x 25 drives and/or 3.5″ x 12 drives | 2.5″ x 25 drives and/or 3.5″ x 12 drives |
| Max drive slots | 200 | 150 |
| Max capacity | 400TB | 500TB |
| Block | Yes | Yes |
| File | No | Yes |

When compared to the VNXe3200, the VNXe1600 array has lighter CPU and memory specifications along with some streamlining of the available data services. One other key change made in scaling down the VNXe3200 is that the VNXe1600 supports block storage only rather than the unified block and file services of the VNXe3200.

Like the VNXe3200, the VNXe1600 makes use of EMC’s MCx “Multicore Everything” architecture that provides CPU optimizations for VNX2 data services including multicore optimizations for hybrid storage performance such as FAST Cache. MCx also provides processor-level optimizations to support virtualized workloads. On the VNXe1600, EMC’s Multicore Cache, Multicore FAST Cache, and Multicore RAID are able to take advantage of MCx. Multicore Cache optimizes each Storage Processor’s DRAM and core usage to increase write and read performance. FAST Cache is a large secondary SLC SSD cache to serve applications with I/O spikes. Multicore RAID manages and maintains RAID functionality.

| Detail | VNXe1600 | VNXe3200 |
| --- | --- | --- |
| Multicore FAST Cache | Yes (200GB maximum, limited to 2 x drive configurations) | Yes (400GB maximum) |
| Multicore Cache | Yes | Yes |
| Multicore RAID | Yes | Yes |
| FAST VP | No (only single-tiered storage pools) | Yes |
| Native Block Replication | Yes (an Ethernet port is required to use this feature) | Yes |
| RecoverPoint Support | No | Yes |
| VMware Integration | Adding vCenter/ESX hosts in Unisphere; VAAI; VSI; VASA | Adding vCenter/ESX hosts in Unisphere; VAAI; VSI; VASA |
| Storage Resources | LUNs, VMware VMFS | NAS Servers, File Systems, LUNs, VMware NFS, VMware VMFS |

We learned during a visit to EMC’s data center in Hopkinton, MA to benchmark the VNX5200 that nearly 70% of VNX2 systems are now shipping in hybrid flash configurations. The VNXe3200 review includes a more comprehensive description of MCx and its role as a centerpiece of VNX2.

EMC VNXe1600 Specifications

- Min/Max Drives: 6 to 200 (400TB maximum raw capacity)

- Max FAST Cache: 200GB

- Drive Enclosure Options: 25×2.5” Flash/SAS drives (2U) or 12×3.5” Flash/SAS/NL SAS drives (2U)

- CPU/Memory per Controller: 1 x 2.6 GHz Xeon (Ivy Bridge) Dual Core/8 GB

- Embedded Host Ports per Controller: 2 per Converged Network Adapter (CNA), capable of either 8/16Gb Fibre Channel or 10Gb Ethernet connectivity

- Max Flex IO Modules per Controller: 1

- RAID Options: RAID 10/5/6

- Supported Pool LUNs: Up to 500

- Maximum LUN Size: 16TB

- Total Raw Capacity: 400TB

- Connectivity: DAS or SAN connectivity options through Ethernet iSCSI and Fibre Channel ports

- Flex IO Module Options:

  - 1GbE Module: 4 ports per module

  - 10GbE Optical Module: 4 ports per module

  - 8Gb/s Fibre Channel Module: 4 ports per module

- Supported Disk Array Enclosures (DAEs):

  - 12-Drive Enclosure: 3.5” SAS, NL-SAS, Flash (2U)

  - 25-Drive Enclosure: 2.5” SAS, Flash (2U)

- Back-End (Disk) Connectivity: Each storage processor includes two 6 Gb/s x 4 Serial Attached SCSI (SAS) ports providing connection to additional disk drive expansion enclosures.

- Maximum SAS Cable Length (enclosure to enclosure): 6 meters

- Protocols Supported:

  - iSCSI, Fibre Channel

  - Routing Information Protocol (RIP) v1-v2

  - Simple Network Management Protocol (SNMP)

  - Address Resolution Protocol (ARP)

  - Internet Control Message Protocol (ICMP)

  - Simple Network Time Protocol (SNTP)

  - Lightweight Directory Access Protocol (LDAP)

- Server Operating System Support:

  - Apple Mac OS X 10.8 or greater

  - Citrix XenServer 6.1

  - HP-UX

  - IBM AIX

  - IBM VIOS 2.2, 2.3

  - Microsoft Windows Vista, Windows 7, and Windows 8

  - Microsoft Hyper-V

  - Novell SUSE Enterprise Linux

  - Oracle Linux

  - Red Hat Enterprise Linux

  - Solaris 10 (x86 and SPARC)

  - Solaris 11 and 11.1 (SPARC and x86)

  - VMware ESXi 5.x

- VNXe1600 Base Software Package: Standard integrated management and monitoring of all aspects of VNXe systems, including the Operating Environment 3.1.3, all protocols (as listed above), Unisphere Management with integrated support, FAST Cache, Block Snapshots, Remote Protection (Native Asynchronous Block Replication), and Thin Provisioning.

- Optional Software:

  - Virtual Storage Integrator (VSI)

  - PowerPath

- Client Connectivity Facilities:

  - Block access by iSCSI and FC

  - Virtual LAN (IEEE 802.1q)

- VMware Integration:

  - VMware vStorage APIs for Array Integration (VAAI) for Block improves performance by leveraging more efficient, array-based operations

  - vStorage APIs for Storage Awareness (VASA) provides storage awareness for VMware administrators

- VNXe Physical Dimensions (Approximate):

  - VNXe1600 Processor Enclosure (3.5” Drives)

    - Dimensions (H/W/L): 3.40in x 17.5in x 20.0in/8.64cm x 44.45cm x 50.8cm

    - Weight (max): 61.8lb/28.1kg

  - VNXe1600 Processor Enclosure (2.5” Drives)

    - Dimensions (H/W/L): 3.40in x 17.5in x 17.0in/8.64cm x 44.45cm x 43.18cm

    - Weight (max): 51.7lb/23.5kg

  - VNXe1600 Expansion Enclosure (12 x 3.5” Drives)

    - Dimensions (H/W/L): 3.40in x 17.5in x 20.0in/8.64cm x 44.45cm x 50.8cm

    - Weight (max): 52.0lb/23.6kg

  - VNXe1600 Expansion Enclosure (25 x 2.5” Drives)

    - Dimensions (H/W/L): 3.45in x 17.5in x 13in/8.64cm x 44.45cm x 33.02cm

    - Weight (max): 48.1lb/21.8kg

Build and Design

VNXe1600 drives use type, capacity, and speed labels to simplify visual identification. The first four drives in the VNXe1600 are system drives. The 12-drive 3.5” form factor uses a single LED for power and status, while the 25-drive 2.5” array uses separate LEDs for these functions. VNXe1600 drive caddies include both metal and plastic components and are secured via a carrier with a handle and a latch and spring assembly.

The VNXe1600 incorporates two VNX2 Storage Processors (SPs), the macro-level components that provide compute and I/O for the array. Each VNXe1600 SP consists of a CPU module with an Intel Xeon dual-core 2.6GHz processor and one DDR slot with 8GB of memory per SP. These storage processors are redundant, as are the array’s power supplies and fans.

The VNXe1600 offers dynamic failover and failback and is designed to allow software and hardware upgrades and component replacement while operational. Storage Processors can be removed individually from the Disk Processor Enclosure (DPE). Each SP has three fan modules above it; at least two of the three fans on each SP must be active or the system will save the cache and shut down.

Each Disk Processor Enclosure (DPE) has two power supply modules. A 3-cell Lithium-Ion Battery Backup Unit (BBU) is located in each SP to provide enough power to flush the VNXe1600’s SP cache contents to internal mSATA storage in the case of a power failure or SP removal from the chassis. This type of attention to detail is a clear point of differentiation between EMC’s SMB equipment and most of the other platforms currently competing in the space. The 32GB mSATA drive is located underneath each SP and contains a partition that holds the boot image that is read upon initial boot-up, as well as storage for cached data. If one mSATA drive becomes corrupted, it can be recovered from the peer SP.

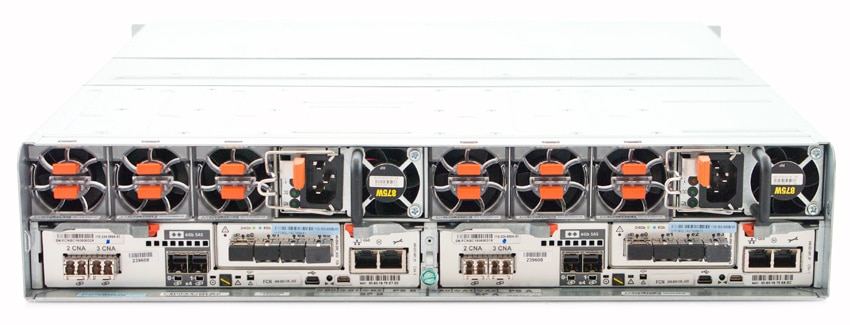

On the rear of the VNXe1600, two integrated Converged Network Adapter (CNA) ports can be configured at the factory for 10GbE Optical iSCSI or 8- or 16-Gb/s Fibre Channel. The CNA supports 10G optical SFP and 10G Active/Passive TwinAX cables and the Fibre Channel CNA module supports either 8- or 16Gb/s SFPs.

The VNXe1600 can also be deployed with additional interface modules to increase connectivity options. Both of the VNXe1600’s Storage Processors must have the same variety of I/O personality module installed, however. Three I/O personality modules are currently available for the VNXe1600:

- Four-port 1Gb/s copper Ethernet I/O personality module supporting 1 Gb/s

- Four-port 8-Gb/s Fibre Channel (FC) I/O personality module supporting 2/4/8Gb/s

- Four-port 10-Gb/s Optical Ethernet I/O personality module supporting 10Gb/s

The rear of the array incorporates two four-lane 6Gb/s mini-SAS HD ports for expansion. The VNXe1600 includes one LAN management port and a serial over LAN service port. The rear also provides access to one mini-USB port, an NMI debug port, indicator LEDs, a power supply module, and three cooling fans.

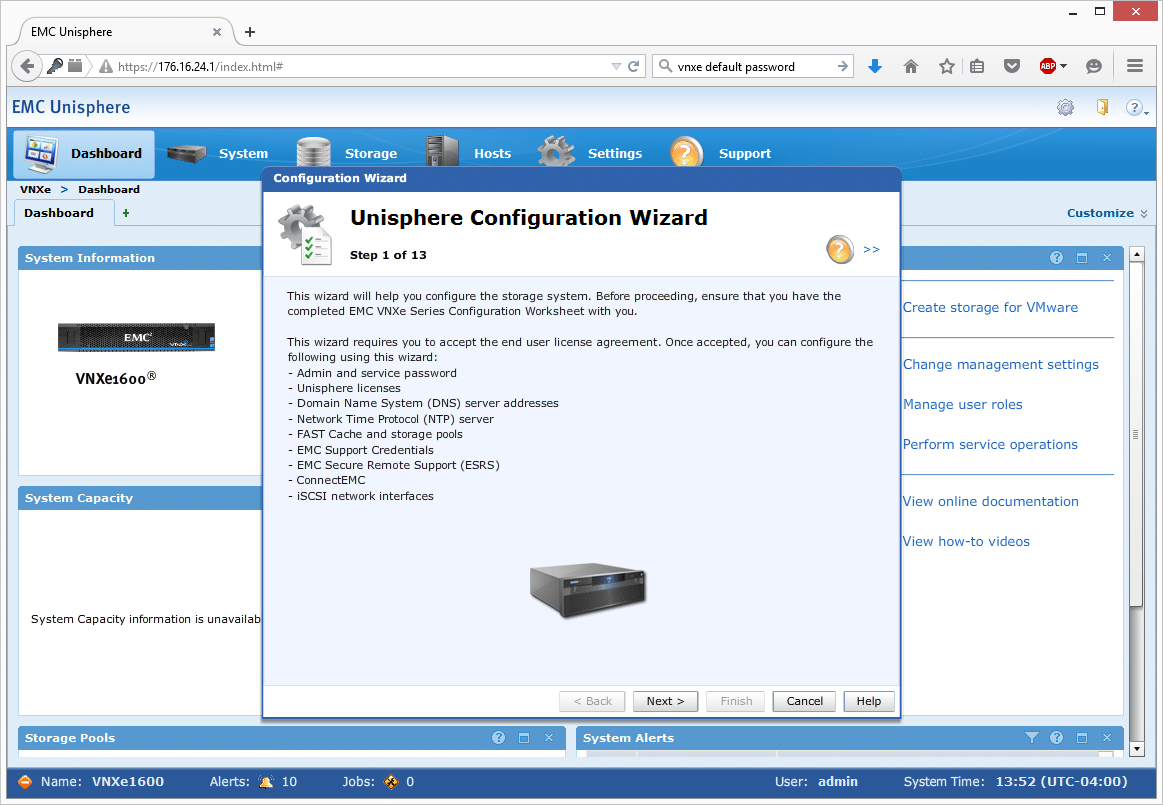

Management and Operating System

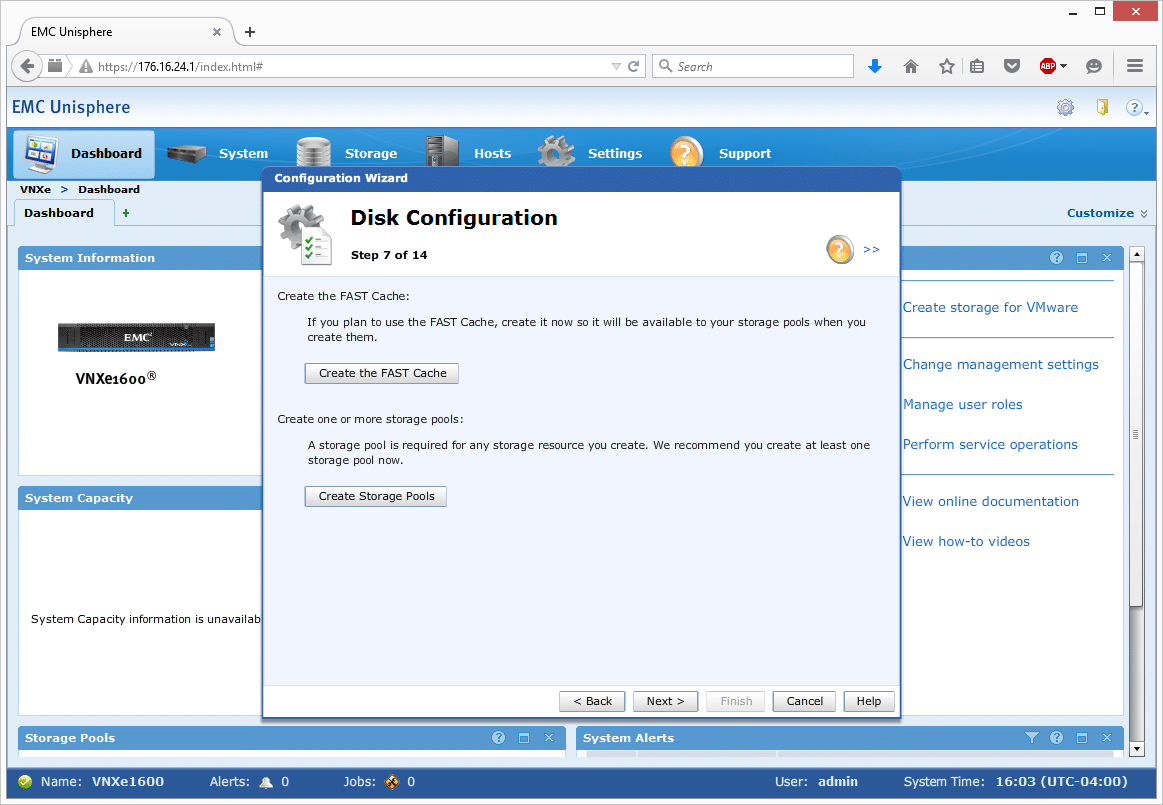

The VNX family of products uses EMC’s Unisphere management software, which we find offers a rewarding combination of accessibility and depth. Unisphere is streamlined enough to configure and manage at the scale of an individual array and is also designed for managing many machines at the datacenter and multi-site enterprise scale. Access to EMC’s management ecology is one of the selling points of this solution, and the Unisphere interface is clean and has a straightforward learning curve for someone familiar with other storage array operating and management systems.

VNX software included with all VNXe1600 arrays includes the VNXe Operating Environment, Unisphere Web Management Interface, EMC’s Integrated Online Support Ecosystem, Block Protocols: iSCSI (IPv4/6) or FC, Unisphere Central (multi-system, multi-site), SSD FAST Cache, Block-based Snapshots, Remote Protection (native asynchronous block replication), and Thin Provisioning. The VNXe1600 features some of the recent enhancements to the VNX2 platform, including expanded RAID options, dynamic/automatic hot sparing, drive mobility, and Snapshots.

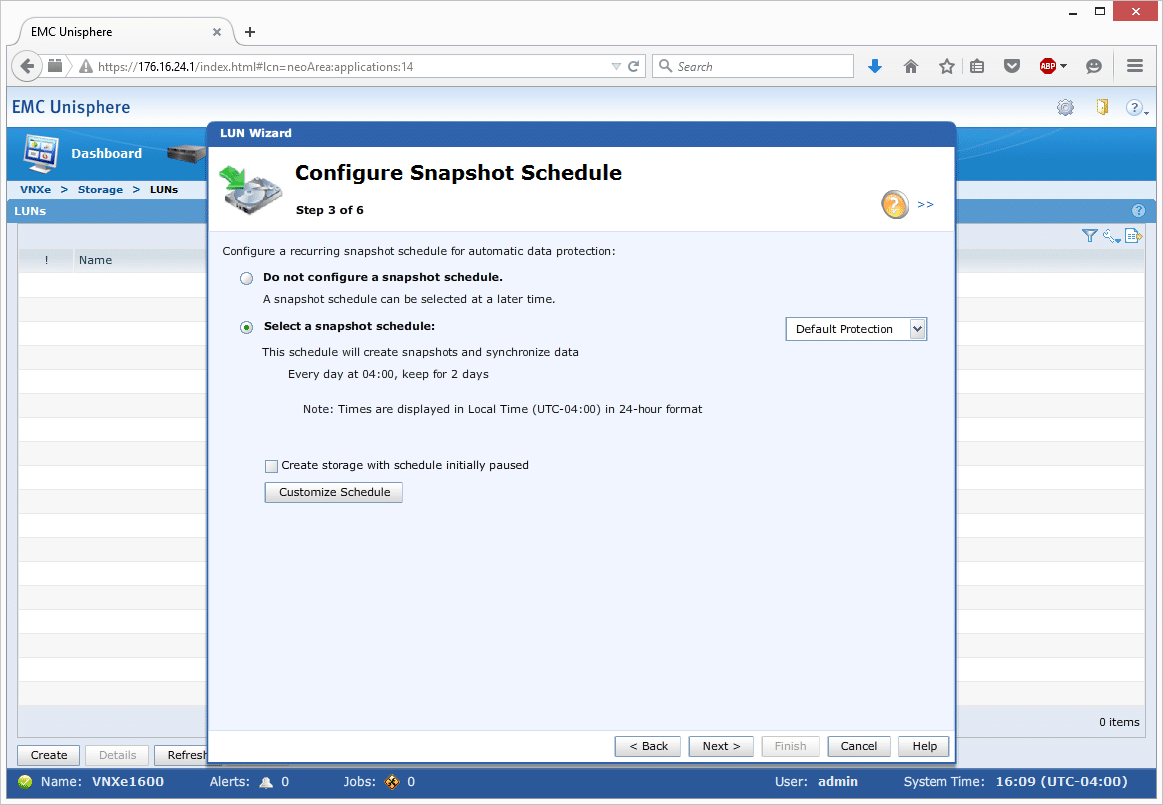

This snapshot technology is designed for quick copies of production data with support for automated scheduling and deleting of snapshots within configurable parameters such as available storage space. The VNXe1600 builds its native asynchronous replication support for LUNs, LUN Groups, and VMware VMFS Datastores on top of the VNX2 snapshot technology in order to provide automatic and manual synchronization. These snapshots use “redirect-on-write” technology with writes sent to a new location within the same pool, and support for hierarchical snaps (“snap of a snap”).
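
The redirect-on-write behavior described above can be illustrated with a toy model. This is a conceptual sketch only, not EMC's implementation: the `RowVolume` class, its append-only pool, and the dictionary block maps are all assumptions chosen to show why a snapshot is cheap to take (only the map is copied) and how a "snap of a snap" falls out of the same mechanism.

```python
class RowVolume:
    """Toy redirect-on-write volume: snapshots freeze a block map, writes go to new locations."""

    def __init__(self):
        self.pool = []        # append-only backing store shared by the volume and its snapshots
        self.block_map = {}   # logical block address -> index into pool
        self.snapshots = {}   # snapshot name -> frozen copy of a block map

    def write(self, lba, data):
        # Redirect: never overwrite in place; store the data at a new pool location.
        self.pool.append(data)
        self.block_map[lba] = len(self.pool) - 1

    def read(self, lba, snapshot=None):
        mapping = self.snapshots[snapshot] if snapshot else self.block_map
        return self.pool[mapping[lba]]

    def snap(self, name, source=None):
        # A snapshot copies only the map, not the data; source allows a snap of a snap.
        mapping = self.snapshots[source] if source else self.block_map
        self.snapshots[name] = dict(mapping)


vol = RowVolume()
vol.write(0, "v1")
vol.snap("monday")
vol.write(0, "v2")                        # redirected to a new location in the same pool
vol.snap("monday-copy", source="monday")  # hierarchical snapshot
print(vol.read(0), vol.read(0, "monday"), vol.read(0, "monday-copy"))
```

Because the production volume simply points at the new location after a write, the older block stays untouched for any snapshot that still references it.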

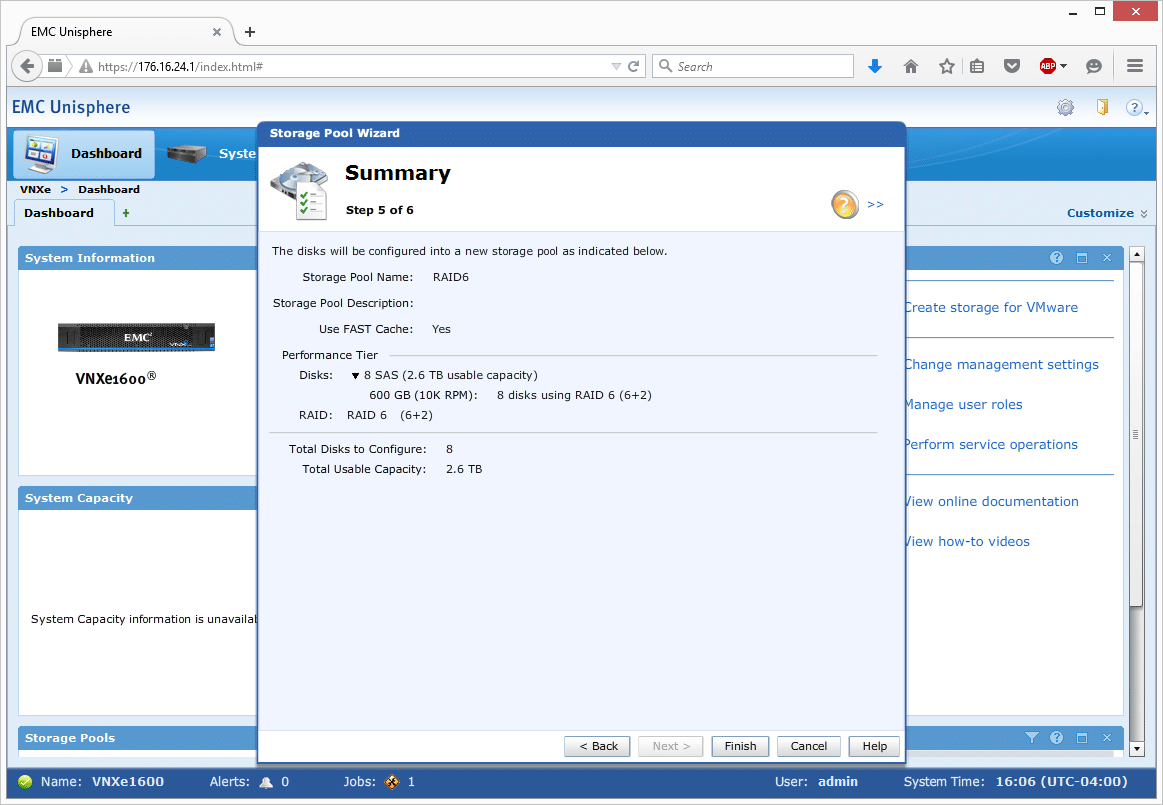

The VNXe1600 uses pool-based provisioning for Flash, SAS, and NL-SAS drives with no support for classic RAID groups. For the VNXe1600, all storage pools have to be composed of drives from the same storage tier: Flash, SAS or NL-SAS. Multiple storage pools may be created, each with a specific drive technology. The VNXe1600 does not support multitier pools or EMC’s FAST VP.

SP Cache optimizes the VNXe1600’s Storage Processor DRAM to increase host write and read performance. Instead of flushing a “dirty” cache page to disk, the page is copied to the disk but still kept in memory for near-term reuse before ultimately being removed from the cache.
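
A small model helps show what "copied to the disk but still kept in memory" means in practice. The sketch below is an assumption-laden illustration rather than a description of MCx internals: the `WriteBackCache` class, the least-recently-used eviction order, and the dictionary standing in for backend drives are all hypothetical.

```python
from collections import OrderedDict


class WriteBackCache:
    """Toy cache where flushing cleans a page but leaves it resident for later reads."""

    def __init__(self, capacity, disk):
        self.capacity = capacity
        self.disk = disk                  # dict standing in for backend drives
        self.pages = OrderedDict()        # lba -> (data, dirty flag), oldest first

    def write(self, lba, data):
        self.pages[lba] = (data, True)    # host write lands in DRAM as a dirty page
        self.pages.move_to_end(lba)
        self._evict()

    def flush(self):
        # Copy dirty pages to disk, then mark them clean instead of dropping them.
        for lba, (data, dirty) in list(self.pages.items()):
            if dirty:
                self.disk[lba] = data
                self.pages[lba] = (data, False)

    def read(self, lba):
        if lba in self.pages:             # hit, including flushed-but-resident pages
            self.pages.move_to_end(lba)
            return self.pages[lba][0], "hit"
        return self.disk[lba], "miss"

    def _evict(self):
        # Assumption: evict least recently used pages once capacity is exceeded.
        while len(self.pages) > self.capacity:
            lba, (data, dirty) = self.pages.popitem(last=False)
            if dirty:                     # write out before removal so no data is lost
                self.disk[lba] = data


disk = {}
cache = WriteBackCache(capacity=2, disk=disk)
cache.write(1, "a")
cache.flush()
print(cache.read(1))   # still served from memory after the flush
```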

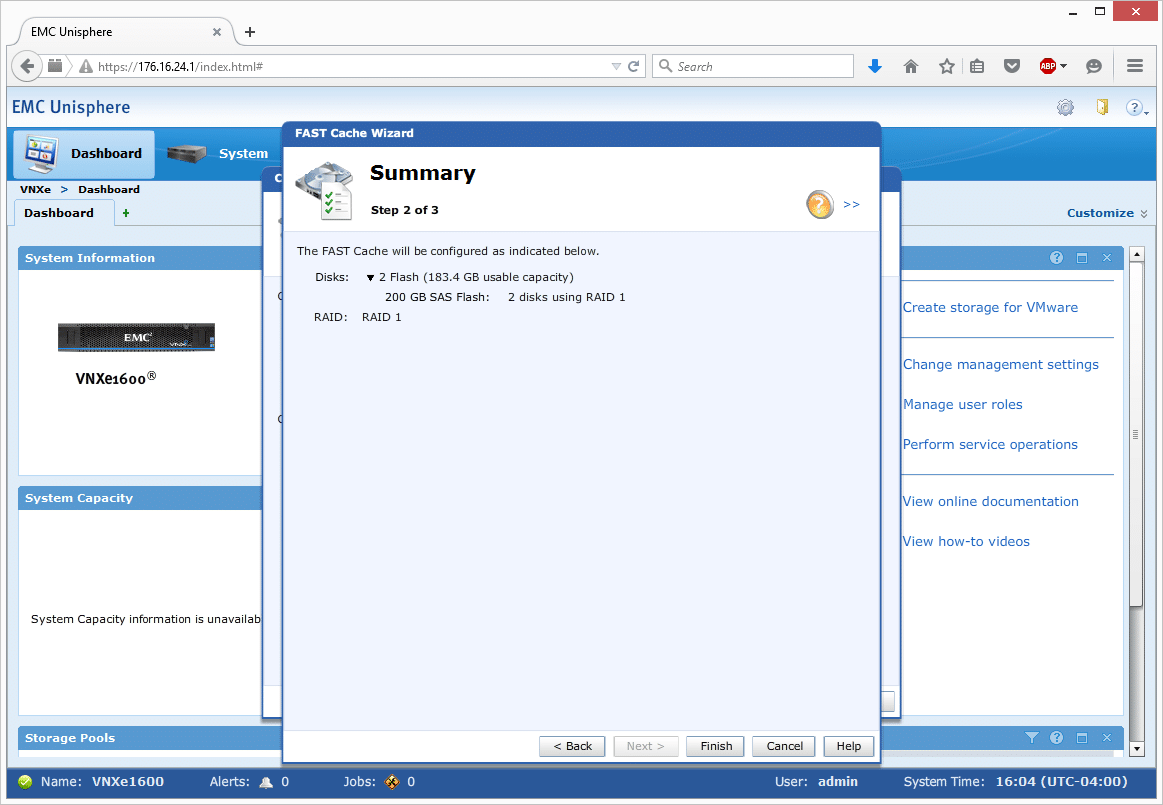

Multicore FAST Cache can be considered as a secondary cache that is built from SSDs, and enhances I/O activity between the VNXe1600 DRAM-based Multicore Cache and non-Flash storage pools managed by the array. Note that the initial VNXe1600 release only supports FAST Cache configurations with a total of two cache drives: EMC intimates that future releases may not feature this limitation.

Free downloadable plug-ins are available for VMware vCenter and Microsoft System Manager that can handle basic element management and provisioning of an array from within those management systems. EMC Virtual Storage Integrator (VSI) for VMware is also available to allow administrators to map virtual machines to storage and to self-provision storage from VMware vCenter. EMC Storage Integrator for Windows Suite (ESI) for Microsoft environments provisions applications and provides storage topology views and scripting libraries. ESI also includes System Center integrations such as SCOM, SCO, and SCVMM.

EMC’s VASA, VAAI, and VMware Aware Integration are available to integrate with VMware vCenter and ESXi hosts. These integrations include storage monitoring from VMware interfaces and the creation of datastores from Unisphere. VMware Site Recovery Manager (SRM) is available for disaster recovery.

Testing Background and Comparables

We publish an inventory of our lab environment, an overview of the lab’s networking capabilities, and other details about our testing protocols so that administrators and those responsible for equipment acquisition can fairly gauge the conditions under which we have achieved the published results. To maintain our independence, none of our reviews are paid for or managed by the manufacturer of equipment we are testing.

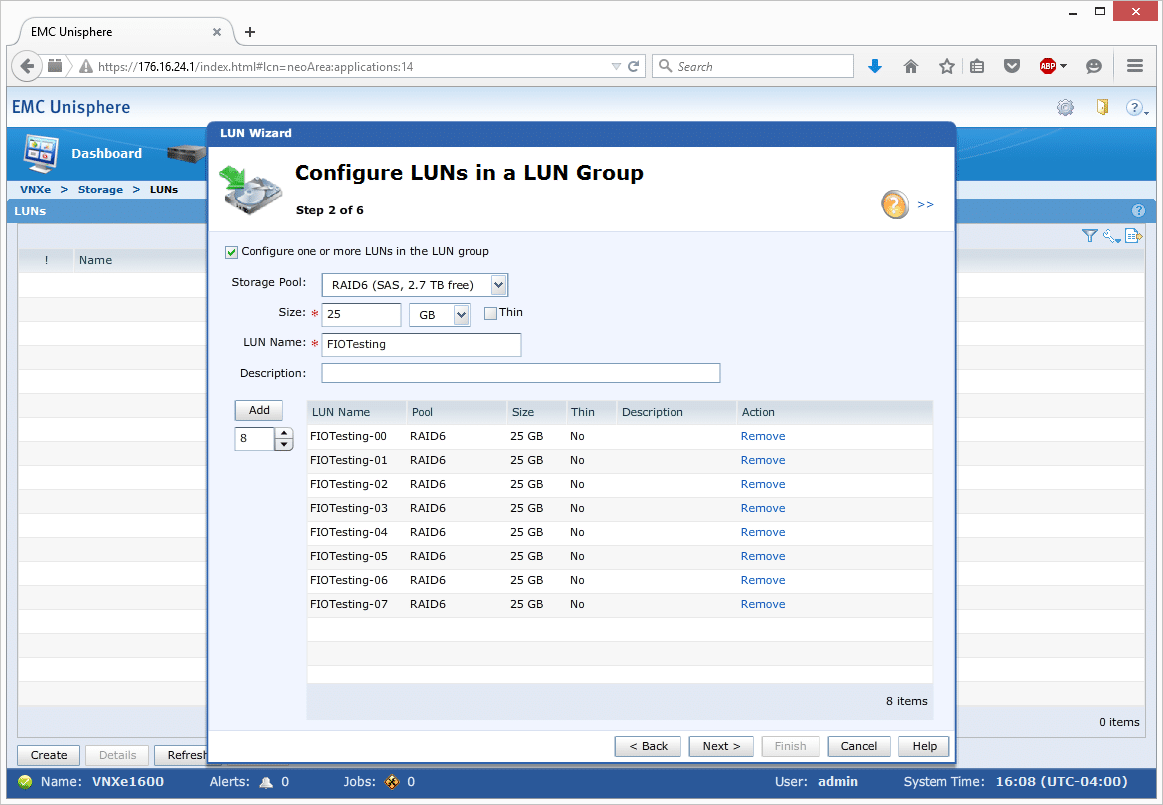

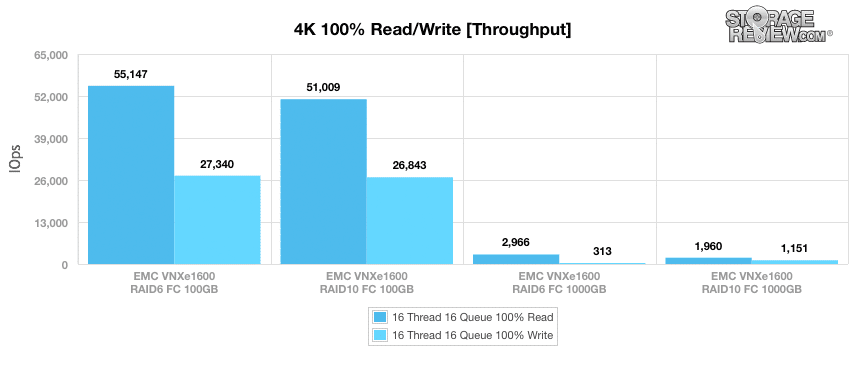

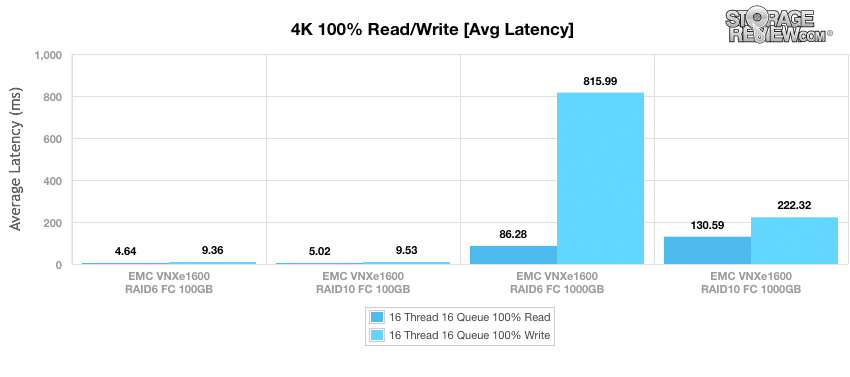

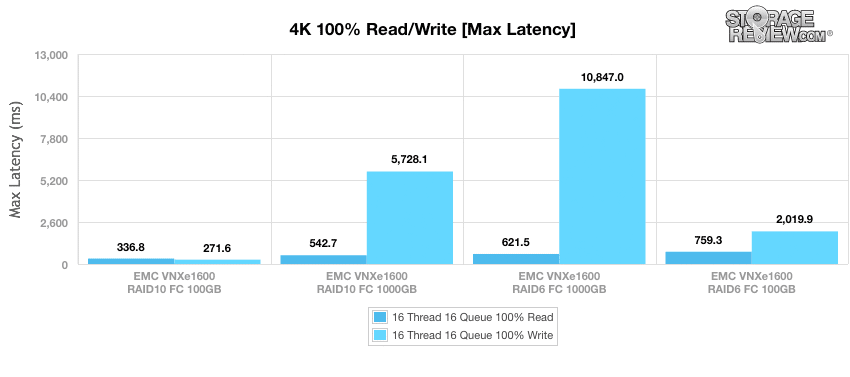

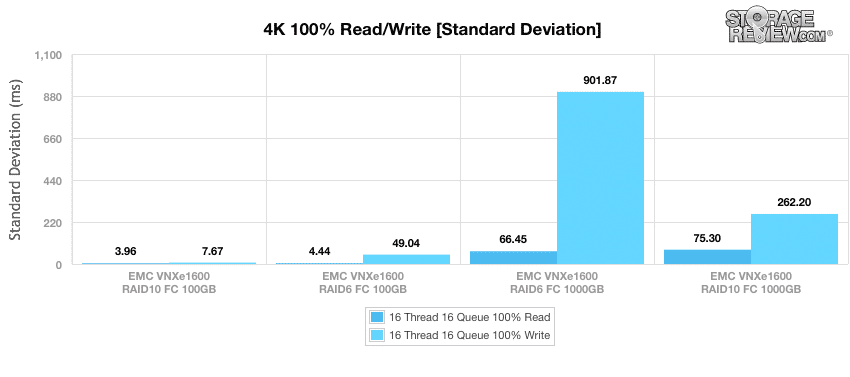

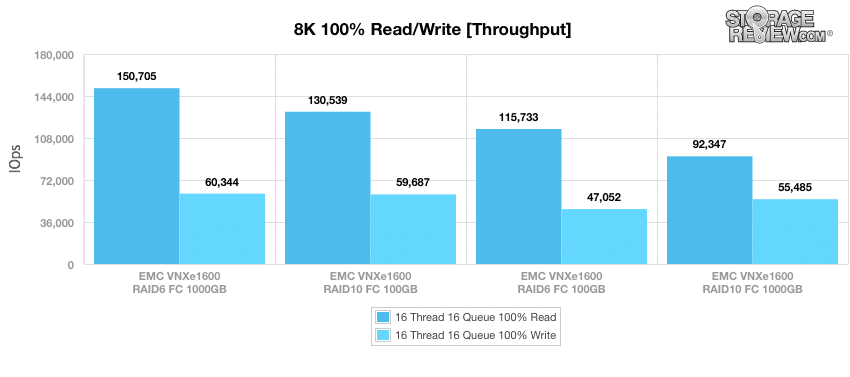

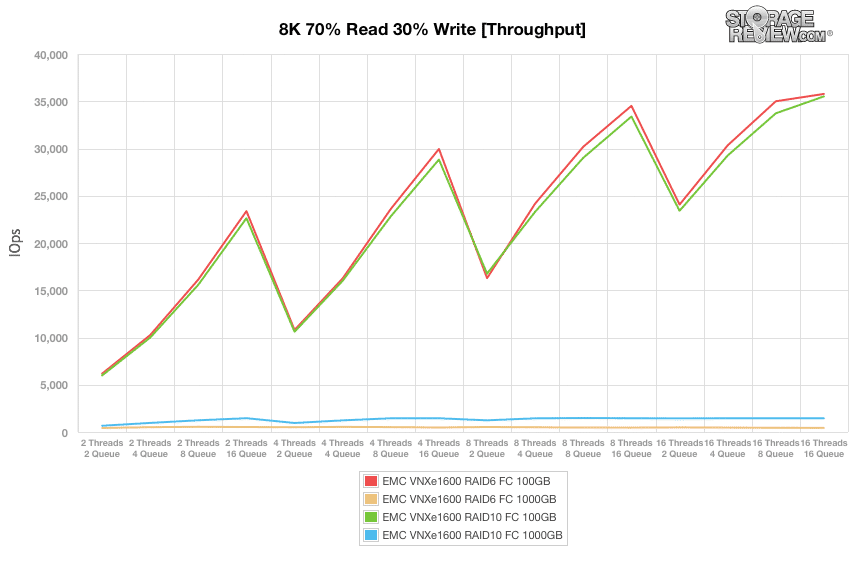

Our benchmarks of the VNXe1600 utilize several configurations that might be seen in actual deployments. Performance is examined using a 100GB RAID6 storage pool, a 1000GB RAID6 pool, a 100GB RAID10 pool, and a 1000GB RAID10 pool. Each of these pools is accessed via Fibre Channel.

The 100GB test size is specifically chosen to show in-FAST Cache performance, whereas the 1000GB tests spill outside of the 183GB of usable FAST Cache and show what the backend storage pool is capable of.
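
The reasoning behind the two test sizes reduces to simple arithmetic, sketched below. The 183GB usable figure and the two footprints come from this review; the `cacheable_fraction` helper is a hypothetical name, and the result is only an upper bound since it ignores how FAST Cache actually decides which data to promote.

```python
FAST_CACHE_USABLE_GB = 183  # usable FAST Cache reported for this test configuration


def cacheable_fraction(test_size_gb: float) -> float:
    """Upper bound on the share of the test footprint that could sit in FAST Cache."""
    return min(1.0, FAST_CACHE_USABLE_GB / test_size_gb)


for size in (100, 1000):
    print(f"{size}GB footprint: at most {cacheable_fraction(size):.1%} cacheable")
```

The 100GB footprint fits entirely, while less than a fifth of the 1000GB footprint could be cached at once, so most of that workload is served by the backend pool.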

This array was benchmarked with our Dell PowerEdge R730 Testbed:

- Dual Intel E5-2690 v3 CPUs (2.6GHz, 12-cores, 30MB Cache)

- 256GB RAM (16GB x 16 DDR4, 128GB per CPU)

- 1 x Emulex 16Gb dual-port FC HBA

- Aggregate bandwidth: 768Gb/s end-to-end full duplex

Enterprise Synthetic Workload Analysis

Prior to initiating each of the fio synthetic benchmarks, our lab preconditions the device into steady-state under a heavy load of 16 threads with an outstanding queue of 16 per thread. Then the storage is tested in set intervals with multiple thread/queue depth profiles to show random performance under light and heavy usage.

Preconditioning and Primary Steady-State Tests:

- Throughput (Read+Write IOPS Aggregated)

- Average Latency (Read+Write Latency Averaged Together)

- Max Latency (Peak Read or Write Latency)

- Latency Standard Deviation (Read+Write Standard Deviation Averaged Together)
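
As a rough illustration of how the four figures above relate to per-direction measurements, the sketch below combines read and write results in the way the labels suggest. The `summarize` function is hypothetical, the inputs are made-up placeholder numbers rather than measured data, and the use of an unweighted average and population standard deviation is an assumption about the method.

```python
from statistics import mean, pstdev


def summarize(read_iops, write_iops, read_lat_ms, write_lat_ms):
    """Combine per-direction results into the four reported figures (illustrative only)."""
    return {
        # Throughput: read and write IOPS added together.
        "throughput_iops": read_iops + write_iops,
        # Average latency: read and write averages, averaged together.
        "avg_latency_ms": mean([mean(read_lat_ms), mean(write_lat_ms)]),
        # Max latency: the single worst read or write sample.
        "max_latency_ms": max(max(read_lat_ms), max(write_lat_ms)),
        # Standard deviation: read and write deviations, averaged together.
        "latency_stdev_ms": mean([pstdev(read_lat_ms), pstdev(write_lat_ms)]),
    }


# Placeholder samples only, chosen to make the arithmetic easy to follow.
result = summarize(700, 300, [1.0, 3.0], [2.0, 6.0])
print(result)
```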

This synthetic analysis incorporates four profiles which are widely used in manufacturer specifications and benchmarks:

- 4k random – 100% Read and 100% Write

- 8k sequential – 100% Read and 100% Write

- 8k random- 70% Read/30% Write

- 128k sequential – 100% Read and 100% Write
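
For readers who want to approximate these profiles on their own hardware, the sketch below builds fio command lines for each one at the 16-thread, 16-queue load mentioned earlier. It is not the lab's actual job definition: the `/dev/sdX` device path, the `libaio` engine, direct I/O, and the 60-second runtime are all placeholder assumptions, and the commands are only printed, never executed.

```python
import shlex

# (label, fio --rw mode, block size, optional read percentage) for the profiles listed above.
PROFILES = [
    ("4k-random-read", "randread", "4k", None),
    ("4k-random-write", "randwrite", "4k", None),
    ("8k-seq-read", "read", "8k", None),
    ("8k-seq-write", "write", "8k", None),
    ("8k-random-70-30", "randrw", "8k", 70),
    ("128k-seq-read", "read", "128k", None),
    ("128k-seq-write", "write", "128k", None),
]


def fio_command(label, rw, bs, mix, device="/dev/sdX", threads=16, depth=16, runtime_s=60):
    """Build an fio argument list; device, ioengine and runtime are placeholders."""
    args = [
        "fio", f"--name={label}", f"--filename={device}", f"--rw={rw}", f"--bs={bs}",
        f"--numjobs={threads}", f"--iodepth={depth}", "--ioengine=libaio", "--direct=1",
        "--time_based", f"--runtime={runtime_s}", "--group_reporting",
    ]
    if mix is not None:
        # Only the mixed profile needs an explicit read percentage.
        args.append(f"--rwmixread={mix}")
    return args


for profile in PROFILES:
    print(shlex.join(fio_command(*profile)))
```

Write profiles are destructive to whatever sits on the target device, so a command like these should only ever be pointed at a scratch LUN.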

During the 4k transfer benchmark, the VNXe1600 posted its highest throughput with a 100GB RAID6 volume, at 55,147 IOPS for read operations and 27,340 IOPS for write operations. The 100GB RAID10 volume was a close second in 4k throughput. Among the 1000GB pools, the RAID6 volume was superior in terms of read throughput, while the RAID10 volume came out ahead in 4k write operations.

The average latency results for 4k random transfers again show the RAID6 and RAID10 volumes to be competitive when configured with a 100GB storage pool. The average write latency results for the 1000GB RAID6 volume, however, were far out of line with those of the equivalent 1000GB RAID10 volume.

The 1000GB RAID6 volume also experienced by far the largest maximum latency in the 4k random benchmark. The 100GB RAID10 volume achieved the lowest 4k random maximum latency for both read and write operations.

Standard deviation calculations for the 4k random benchmark show that the 100GB RAID10 volume sustained the most consistent latencies during this benchmark protocol. The 100GB RAID6 volume was also very consistent for read operation latencies, with a standard deviation of 4.44ms.

Shifting the benchmark to 8k sequential transfers, run first as 100% reads and then as 100% writes, resulted in more competitive performance across the various configurations deployed to the VNXe1600. The best read and write performance came from the 1000GB RAID6 pool, which achieved 150,705 IOPS for read transfers and 60,344 IOPS for write transfers.

With a fully random 8k synthetic workload of 70% read and 30% write operations, we look primarily at the advantage of in-FAST Cache vs. out-of-FAST Cache performance of the VNXe1600 in RAID6 and RAID10 over FC. The 100GB RAID6 measurement slightly edged out the 100GB RAID10 configuration, although both performed significantly better than our 1000GB tests. Moving well outside of FAST Cache, RAID10 offered more throughput than RAID6, which isn’t entirely surprising.

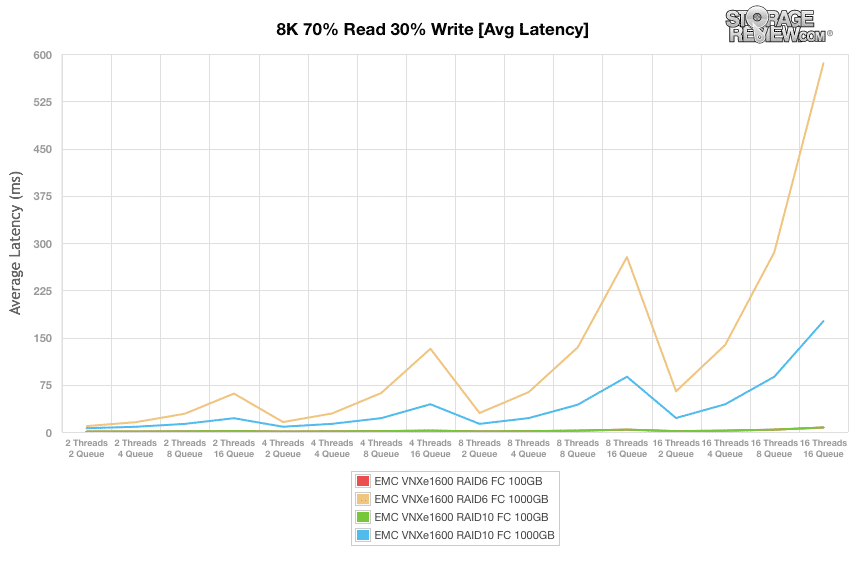

Average latency results for the 8k 70/30 benchmarks also show the 1000GB RAID6 pool’s performance at the bottom of the pack, while the in-FAST Cache 100GB RAID6 and RAID10 measurements had the lowest response times.

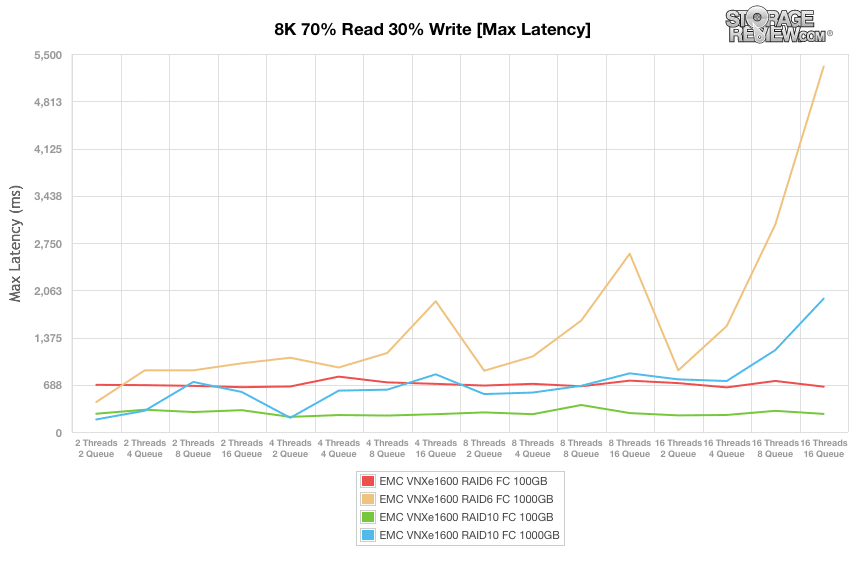

Maximum latencies recorded during the 8k 70/30 benchmark also reflect the struggles of the 1000GB RAID6 pool with deep queues. Maximum latencies for the other configurations were more mixed, with the VNXe1600 100GB RAID10 pool having the best results overall.

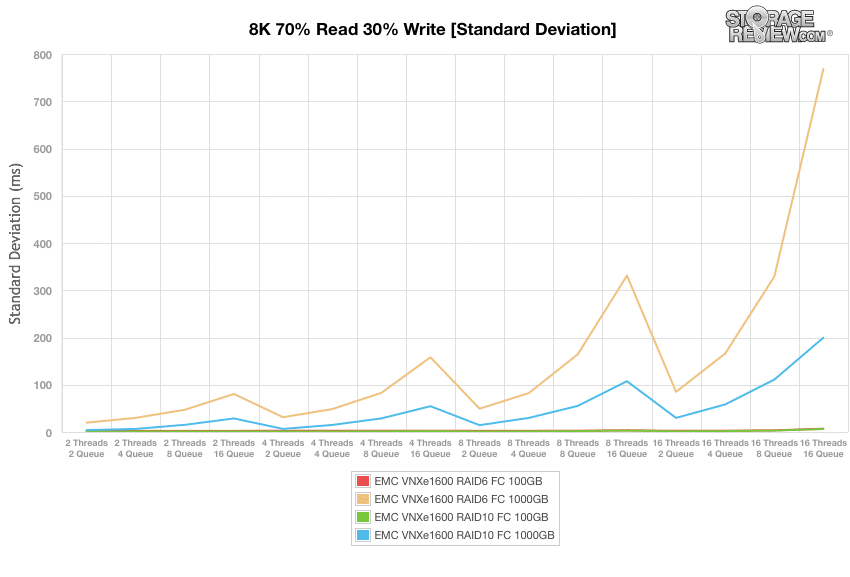

Standard deviation calculations for the 8k 70/30 benchmark underscore the uneven latency performance of the 1000GB RAID6 pool under heavy load, while also reflecting the buttery-smooth latency consistency of both the 100GB RAID6 pool and the 100GB RAID10 pool.

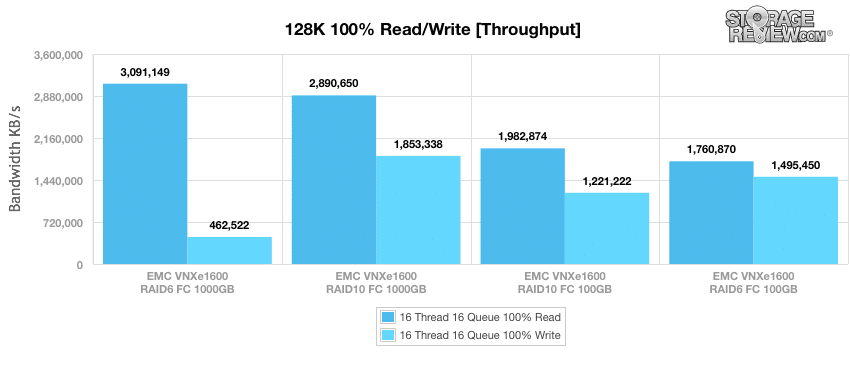

The final benchmark for this review uses 128k sequential transfers with both 100% read and then 100% write operations. The 1000GB RAID6 pool was able to sustain the highest read transfer in the test at 3.09GB/s although the 1000GB RAID10 pool was not far behind in read operations. The 1000GB RAID10 pool, on the other hand, dramatically outscored the 1000GB RAID6 pool in write performance to come out in the top position among the configurations tested.
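
To put the IOPS and bandwidth figures on a common footing, the arithmetic below converts the 8k read result quoted earlier into GB/s and compares the 128k read peak with the link. Two assumptions are baked in and are not stated in the review: that "8k" means 8 KiB, and that both ports of the 16Gb FC HBA were active at a nominal 1.6GB/s each per direction.

```python
def iops_to_gb_per_s(iops: int, block_kib: int) -> float:
    """Convert an IOPS figure at a fixed block size to decimal GB/s."""
    return iops * block_kib * 1024 / 1e9


# 8k read result from the 1000GB RAID6 pool (150,705 IOPS).
print(round(iops_to_gb_per_s(150_705, 8), 2))

# Assumption: 16Gb FC carries roughly 1.6 GB/s per port per direction, two ports in use.
FC16_PORT_GB_PER_S = 1.6
link_share = 3.09 / (2 * FC16_PORT_GB_PER_S)
print(round(link_share, 2))
```

Under those assumptions, the 128k read result sits close to what two 16Gb FC ports can carry, which suggests the host link rather than the array may be the limiting factor for large sequential reads.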

Conclusion

The EMC VNXe1600 continues to advance the VNX product family into the sub-$10k SMB market with a true enterprise-grade feature set and a price tag that will make it appealing for hub-and-spoke deployments, offsite replication, and other SMB applications. Larger organizations that already have VNX infrastructure may find the VNXe1600 useful for internal departments and remote offices. With its low starting price, EMC is looking to strike fear in the hearts of most entry-enterprise competitors that have generally felt safe and comfortable in this price band. Generally speaking, features such as dual active-active controllers, 8/16Gb FC connectivity, and replication support are only seen in more upstream systems. Going as far as including onboard battery backup that can flush system DRAM to flash in the event of a power failure almost seems like overkill for what many customers might shop for in this range, but this is the difference a vendor such as EMC brings to the table compared to a tier-2 or tier-3 vendor that has generally only competed on price or a BYOD (Bring Your Own Drives) model.

In terms of performance, the EMC VNXe1600 packs quite a punch, although more so with workloads capable of sitting inside its optional 200GB FAST Cache. We saw strong fully random results, with 4k measuring upwards of 55k IOPS read and 30k IOPS write depending on backend storage pool configuration. 8k 70/30 performance peaked at over 35k IOPS, showing that the dual-core CPU controllers still hold up well against the quad-core CPUs found in the VNXe3200. Sequential performance was also strong, measuring over 3GB/s read and 1.8GB/s write over 16Gb FC.

There are hybrid arrays on the market that reach lower price points than the VNXe1600, but the VNXe is distinguished by its access to VNX2 functionality and interoperability which smaller customers may have felt priced out of in the past. The VNXe is one of the smaller segments of the EMC business, but in terms of what’s being accomplished in their respective price bands, the VNXe1600 and VNXe3200 are extraordinary machines. Factoring in feature set, caching performance, and support infrastructure, the VNXe1600 is a clear leader when looking at arrays that start out sub $10k. Quite frankly, the VNXe1600 is so well executed that it’s difficult to make the case for other options in this category.

Pros

- EMC VNX-grade storage at a price accessible to customers who may have been priced out in the past

- Flexible hybrid drive configurations and connectivity options including iSCSI and Fibre Channel

- Heavy emphasis on data integrity, including a battery-powered mSATA flash backup for system cache

Cons

- FAST Cache is currently limited to two cache drives

The Bottom Line

EMC makes only a few compromises to bring the power and support of EMC’s VNX2 family to a new market at an unprecedented price point in the integrated 2U form factor of the VNXe1600.
