We've spent a good deal of time talking about EMC ScaleIO's capabilities in a two-layer, or storage-only, configuration. In this next installment of the review, we break out results with ScaleIO set up as a hyper-converged system. It should be noted that ScaleIO can also work in a third mode that combines both of these options. Fundamental to the HC setup, though, is the fact that both the SDS (ScaleIO Data Server) and the SDC (ScaleIO Data Client) run in the same environment. In this configuration the applications and storage share the same compute resources. In our case, that means the VxRack Node's high-performance 2U 4-node PF100 chassis that resides in our lab.

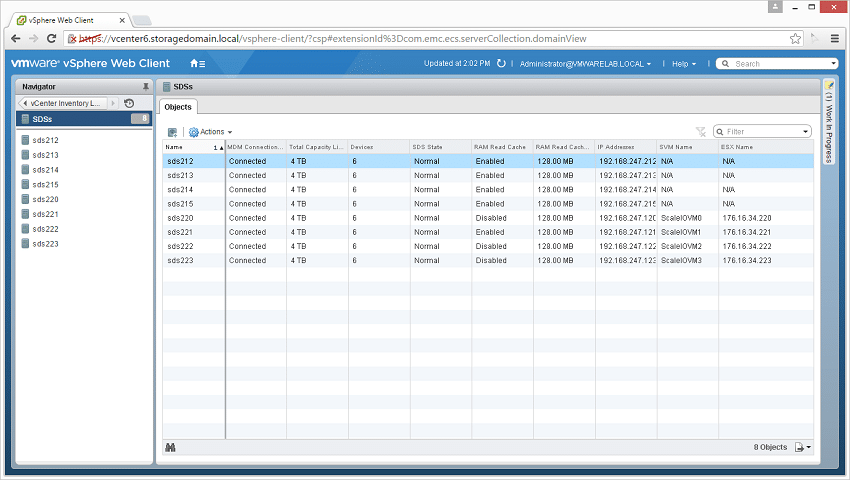

Practically speaking, managing ScaleIO in a hyper-converged configuration isn't much different than managing it in two-layer. The admin uses the same software to configure the system, provision storage, and view reporting. This makes a good deal of sense, since ScaleIO is often deployed in one configuration or the other, and many times in a mixed configuration as organizations transition their workloads from two-layer to hyper-converged. Part of the reason for this flexibility is the way EMC employs the MDM (Meta Data Manager), which is a lightweight monitoring process that keeps track of everything going on in the system. Currently 1024 nodes can be managed by a single MDM.

When it comes to running in HCI, ScaleIO supports almost all modern operating systems. This includes Windows 2008 and newer, Hyper-V, KVM, major Linux distributions like Red Hat, CentOS, SUSE and Ubuntu, VMware ESXi and XEN. What's more, ScaleIO supports more than one of these at once, with very little overhead from the MDM. Glancing around at the rest of the HCI space, vendors generally align with or prefer a very specific hypervisor or operating environment. Being largely agnostic makes ScaleIO that much more flexible when put to work in large environments that may have to support a wider range of software.

Just like in 2-layer, ScaleIO as HCI can be consumed in a number of ways. The nodes can be purchased directly from EMC and their partners. VCE offers a VxRack product that is a single SKU, comes configured with all the required licenses, and is supported entirely by VCE. And there continue to be flexible configurations that support all-flash for high-performance needs, as well as disk or hybrid solutions.

VCE VxRack Node (Performance Compute All Flash PF100) Specifications

- Chassis – # of Node: 2U-4 node

- Processors Per Node: Dual Intel E5-2680 V3, 12c, 2.5GHz

- Chipset: Intel 610

- DDR4 Memory Per Node: 512GB (16 x 32GB)

- Embedded NIC Per Node: Dual 1-Gbps Ethernet ports + 1 10/100 management port

- RAID Controller Per Node: 1x LSI 3008

- SSDs Per Node: 4.8TB (6x 2.5-inch 800GB eMLC)

- SATADOM Per Node: 32GB SLC

- 10GbE Port Per Node: 4x 10Gbps ports SFP+

- Power Supply: Dual 1600W platinum PSU AC

- Router: Cisco Nexus C3164Q-40GE

HCIbench Test Configuration

- ESXi 6.0 Hypervisor

- 16 VMs

- 10 VMDK per VM

- 40GB VMDK (6.4TB footprint)

- Full-write storage initialization

- 1.5 hour test intervals (30 minute preconditioning, 60 minute test sample period)

For testing the ScaleIO HCI cluster we deployed one heavy-weight configuration for our workload profiles. This consisted of a 6.4TB data footprint out of 8TB usable.
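The footprint figure follows directly from the test configuration listed above. As a minimal sketch of that arithmetic, assuming decimal units (1TB = 1000GB), the following reproduces the 6.4TB number and the share of usable capacity it occupies; the function and constant names are ours, for illustration only.

```python
# Footprint arithmetic for the HCIbench configuration described in this review.
VM_COUNT = 16        # VMs deployed
VMDKS_PER_VM = 10    # virtual disks per VM
VMDK_SIZE_GB = 40    # size of each virtual disk
USABLE_TB = 8.0      # usable capacity quoted for the cluster


def footprint_tb(vms: int, vmdks_per_vm: int, vmdk_gb: int) -> float:
    """Total working set in TB, assuming decimal units (1TB = 1000GB)."""
    return vms * vmdks_per_vm * vmdk_gb / 1000


total_tb = footprint_tb(VM_COUNT, VMDKS_PER_VM, VMDK_SIZE_GB)
fill_ratio = total_tb / USABLE_TB

print(f"{VM_COUNT * VMDKS_PER_VM} VMDKs, {total_tb:.1f}TB footprint, "
      f"{fill_ratio:.0%} of usable capacity")
```

With the values above, the working set spans 160 VMDKs and fills 80% of the usable pool.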

StorageReview's HCIbench Workload Profiles

- 4K Random 100% read

- 4K Random 100% write

- 8K Random 70% read / 30% write

- 32K Sequential 100% read

- 32K Sequential 100% write

HCIbench Performance

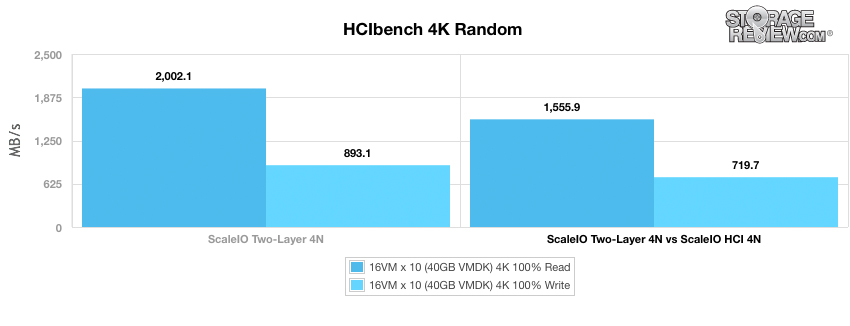

What we've done is test in a way that allows a straight line comparison between the two-layer VxRack Node configuration with external compute against the VxRack Node running in hyper-converged mode. While our two-layer VxRack Node benchmark included four Dell PowerEdge R730 servers acting as loadgens, the VxRack Node HCI config uses its own internal compute resources to stress its own storage.

In our first test measuring 4K random transfer bandwidth, the HCI configuration came in slightly behind two-layer, with 1.6GB/s read and 720MB/s write.

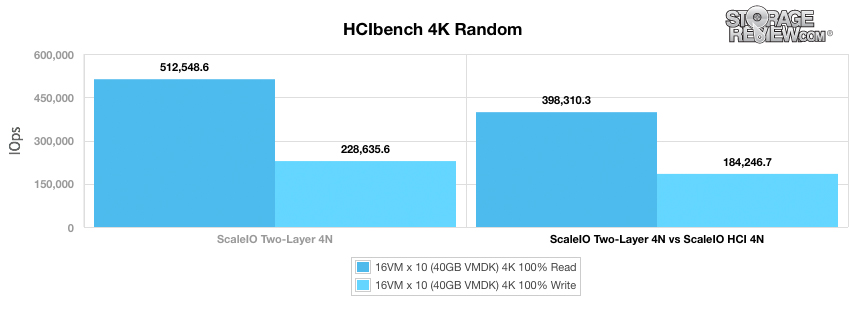

4K random transfer speeds were strong again, measuring 398.3K IOPS read and 184.3K IOPS write, although they trailed the two-layer configuration, which topped out at 512K IOPS read and 228.6K IOPS write.
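The 4K bandwidth and IOPS figures describe the same test, so one can be derived from the other. The sketch below multiplies IOPS by block size as a rough cross-check. It assumes binary block sizes (4K = 4096 bytes) and reports MiB/s; the review does not state which unit convention the charts use, so treat small differences from the quoted bandwidth as rounding or unit effects. The function name is hypothetical.

```python
def bandwidth_mib_s(iops: float, block_kib: int) -> float:
    """Bandwidth in MiB/s implied by an IOPS figure at a fixed block size.

    Assumes binary units: block_kib is in KiB and 1 MiB = 1024 KiB.
    """
    return iops * block_kib / 1024


# HCI 4K random results quoted in the review
read_bw = bandwidth_mib_s(398_300, 4)
write_bw = bandwidth_mib_s(184_300, 4)

print(f"4K random read:  {read_bw:,.0f} MiB/s")
print(f"4K random write: {write_bw:,.0f} MiB/s")
```

Under that assumption the write figure lands on roughly 720 and the read figure on roughly 1,556, which lines up with the 720MB/s and 1.6GB/s reported for the bandwidth test.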

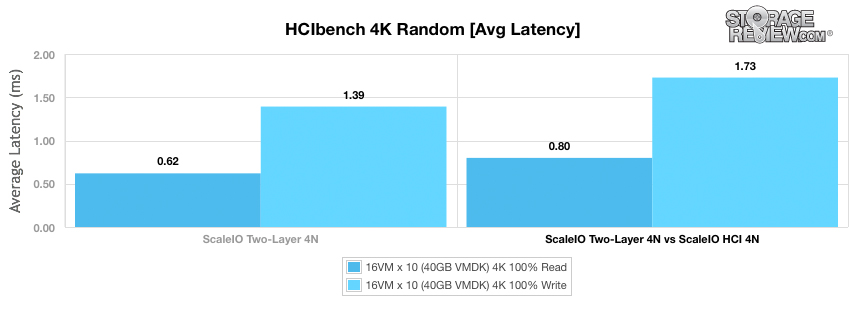

Average latency in our 4K random transfer test measured 0.8ms read and 1.73ms write, slightly higher than the two-layer version.

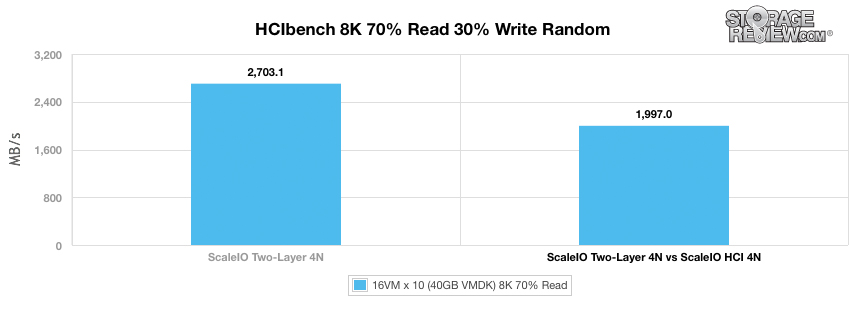

With our 8K random transfer profile in HCIbench with a 70/30 R/W mix, we saw bandwidth dip 700MB/s behind the two-layer's 2.7GB/s.

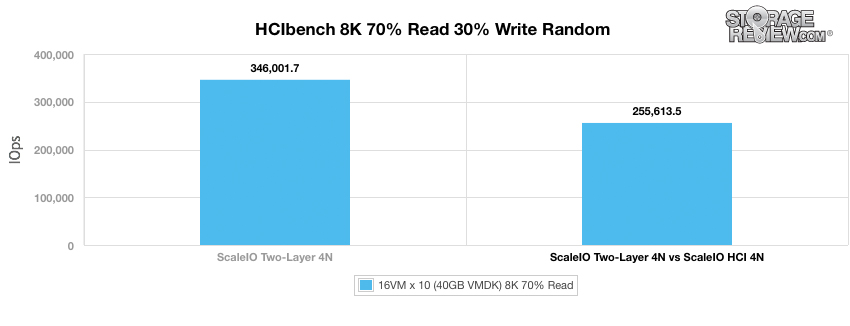

Throughput in our 8K 70/30 HCIbench test measured 255.6K IOPS, versus 346K IOPS for the two-layer configuration.
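To put the random-I/O gap in relative terms, the short sketch below expresses each HCI result as a share of its two-layer counterpart, using only the IOPS figures quoted in this review. The dictionary layout and function name are ours.

```python
# (HCI K IOPS, two-layer K IOPS) for each random profile quoted in the review
RESULTS_KIOPS = {
    "4K random read": (398.3, 512.0),
    "4K random write": (184.3, 228.6),
    "8K random 70/30": (255.6, 346.0),
}


def hci_share(hci_kiops: float, two_layer_kiops: float) -> float:
    """HCI throughput as a fraction of the two-layer result."""
    return hci_kiops / two_layer_kiops


for profile, (hci, two_layer) in RESULTS_KIOPS.items():
    share = hci_share(hci, two_layer)
    print(f"{profile:<16} HCI delivers {share:.1%} of two-layer "
          f"({two_layer - hci:.1f}K IOPS fewer)")
```

By this measure HCI retains somewhere between roughly three quarters and four fifths of the two-layer random throughput, depending on the profile.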

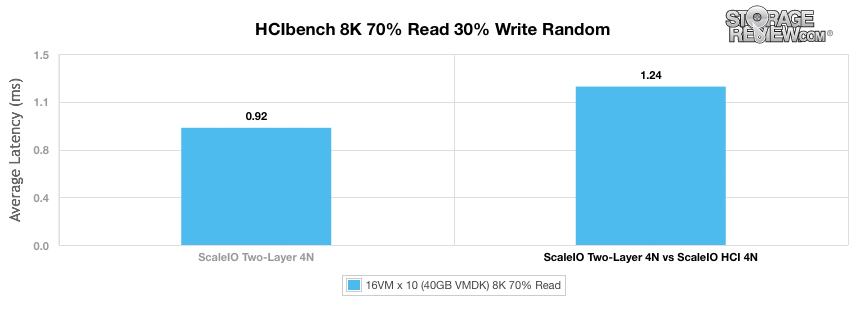

8K 70/30 average latency measured 1.24ms, up slightly from the two-layer's 0.92ms.

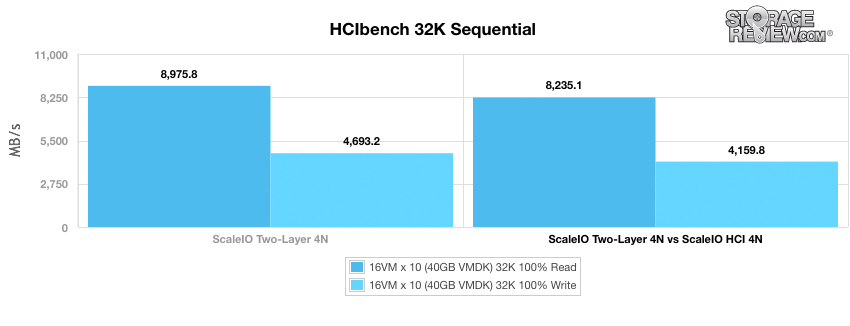

Similar to the EMC VxRack Node two-layer configuration, the HCI platform offers tremendous large-block transfer performance. With a 32K block size we measured sequential bandwidth of 8.24GB/s read and 4.16GB/s write.

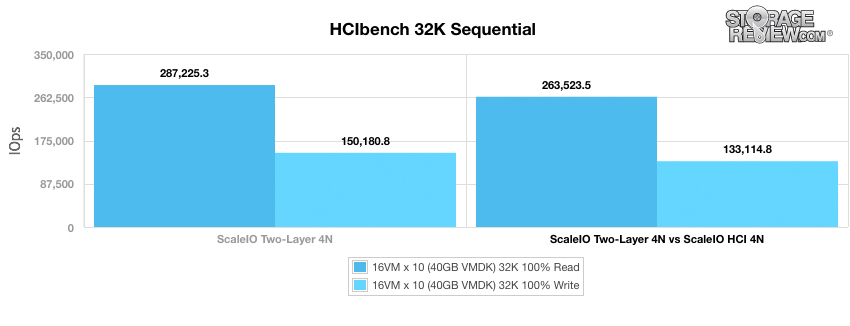

Large-block 32K sequential throughput from the VxRack Node HCI configuration measured 263.5K IOPS read and 133.1K IOPS write.

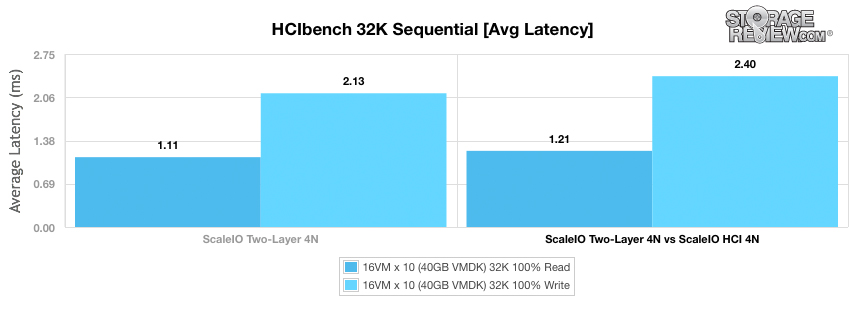

Average latency came in at 1.21ms read and 2.4ms write for the HCI configuration, up slightly from two-layer, which measured 1.11ms read and 2.13ms write.
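Throughput and average latency are linked through Little's law: the mean number of I/Os in flight equals IOPS multiplied by mean latency. As a sanity check on the HCI numbers, the sketch below applies that relation to each profile. The per-VMDK figure it prints is our inference from the 16 VM x 10 VMDK layout, not a queue-depth setting stated in the review.

```python
# (profile, HCI IOPS, HCI average latency in ms), as quoted in this review
HCI_RESULTS = [
    ("4K random read", 398_300, 0.80),
    ("4K random write", 184_300, 1.73),
    ("8K random 70/30", 255_600, 1.24),
    ("32K sequential read", 263_500, 1.21),
    ("32K sequential write", 133_100, 2.40),
]

VMDK_COUNT = 16 * 10  # 16 VMs with 10 VMDKs each


def in_flight_ios(iops: float, latency_ms: float) -> float:
    """Little's law: mean outstanding I/Os = throughput x mean latency."""
    return iops * latency_ms / 1000


for name, iops, latency_ms in HCI_RESULTS:
    total = in_flight_ios(iops, latency_ms)
    print(f"{name:<22} {total:6.1f} in flight, "
          f"{total / VMDK_COUNT:.2f} per VMDK")
```

Every profile works out to a similar number of outstanding I/Os, which suggests the load generator held concurrency roughly constant across tests, so the latency and IOPS charts are two views of the same result.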

Conclusion

As we compare the EMC VxRack Nodes in 2-layer versus HCI with HCIbench, there are a couple of points of note. First, HCIbench isn't useful for determining the maximum capabilities of the system. The point here is a comparative one; HCIbench is a good tool for getting as close to an apples-to-apples comparison as possible across traditionally disparate environments. With that said, we see the VxRack Nodes in HCI mode operating at parity in some places and giving up moderate performance in others. The enterprise benefit, however, is that what would be 10U of rack space in the two-layer architecture now takes 2U in HCI, and depending on the workload, there may not be much performance impact. Further, the Nodes are managed in exactly the same way and can be leveraged in the broadest software ecosystem of any HCI solution. In this case we used VMware, but EMC has done well to support just about anything else an enterprise could want. This is just the start. We'll be running ScaleIO in HCI through all of the same application tests as we did in two-layer, which will provide a true performance comparison between the configuration options ScaleIO offers.
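One way to weigh the rack-space argument against the performance gap is throughput per rack unit. The sketch below is an illustration only: it uses the 10U and 2U figures and the 4K random read results quoted in this review, and "K IOPS per rack unit" is our own metric rather than one the benchmark reports. Note that the 10U two-layer figure includes the external compute servers.

```python
# Rack space and 4K random read throughput for each configuration, as quoted
CONFIGS = {
    "two-layer": {"rack_units": 10, "kiops_4k_read": 512.0},
    "HCI": {"rack_units": 2, "kiops_4k_read": 398.3},
}


def kiops_per_rack_unit(kiops: float, rack_units: int) -> float:
    """Throughput density: thousands of IOPS delivered per U of rack space."""
    return kiops / rack_units


density = {
    name: kiops_per_rack_unit(cfg["kiops_4k_read"], cfg["rack_units"])
    for name, cfg in CONFIGS.items()
}

for name, value in density.items():
    print(f"{name:<10} {value:6.1f}K IOPS per U (4K random read)")

print(f"HCI density advantage: {density['HCI'] / density['two-layer']:.1f}x")
```

On this metric the HCI layout delivers several times the 4K read throughput per rack unit, even though its absolute IOPS are lower.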

EMC VxRack Node Review: Overview

EMC VxRack Node Powered By ScaleIO: Scaled Sysbench OLTP Performance Review (2-layer)

EMC VxRack Node Powered By ScaleIO: SQL Server Performance Review (2-layer)

EMC VxRack Node Powered By ScaleIO: Synthetic Performance Review (2-layer)

EMC VxRack Node Powered By ScaleIO: SQL Server Performance Review (HCI)

EMC VxRack Node Powered By ScaleIO: VMmark Performance Review (HCI)
