We’ve looked at the Graid SupremeRAID card and software several times and are perpetually impressed with the extreme storage performance Graid enables. Both traditional hardware and software RAID leave much to be desired in terms of performance, which opens the door for Graid to come in with a better mousetrap. With Gen5 SSDs shipping in volume now, we put together a well-equipped server to see what’s possible when you let the flash fly.

Why Graid SupremeRAID vs Hardware RAID?

The Graid solution comprises two core elements: a GPU and a software-defined storage (SDS) stack. Like a RAID card, the GPU takes most of the drive management and data protection tasks off the CPU, freeing it up to run applications. Unlike a RAID card, however, SupremeRAID is much more efficient. It addresses the drives directly over the PCIe bus without needing extra cabling or complicated chassis configurations. And because the GPU is more dynamic than a RAID card’s ASIC, performance scaling with Graid is greatly improved.

The scaling advantage is immediately evident when looking at where bottlenecks occur within a server. Current RAID cards are limited to Gen4, which tops out at 28GB/s. Four decent Gen4 SSDs can saturate a single RAID card. The system would need several RAID cards to take advantage of all the drives in a 24-bay server. On the other hand, SupremeRAID can support 32 drives in a single system and has none of the PCIe slot bandwidth limitations.
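The arithmetic behind that bottleneck can be sketched as follows. This is a back-of-the-envelope estimate, not a measurement: the 7GB/s per-drive figure is an assumption inferred from the statement that four Gen4 SSDs saturate a 28GB/s card, and the function names are purely illustrative.

```python
import math

CARD_LIMIT_GBPS = 28.0  # Gen4 RAID card ceiling cited in the text
DRIVE_GBPS = 7.0        # assumed per-drive Gen4 throughput (28GB/s / 4 drives)


def drives_to_saturate(card_limit: float, drive_bw: float) -> int:
    """Smallest number of drives whose combined bandwidth reaches the card limit."""
    return math.ceil(card_limit / drive_bw)


def cards_needed(bays: int, card_limit: float, drive_bw: float) -> int:
    """RAID cards required so that no drive in the chassis is bandwidth-starved."""
    return math.ceil(bays * drive_bw / card_limit)


if __name__ == "__main__":
    print(drives_to_saturate(CARD_LIMIT_GBPS, DRIVE_GBPS))
    print(cards_needed(24, CARD_LIMIT_GBPS, DRIVE_GBPS))
```

Under these assumptions, a fully populated 24-bay server would need six Gen4 RAID cards before the cards stop being the limiting factor.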

The performance issues for hardware RAID get further compounded with each generational interface leap. To support Gen5 SSDs, a new hardware RAID ASIC is required. But even then, hardware RAID will suffer from the same scaling issue articulated above. The SupremeRAID GPU uses a Gen4 interface today, and to be fair, that’s just an Intel/AMD/NVIDIA issue for now. But that doesn’t stop it from unleashing the performance of Gen5 drives. That means performance levels of up to 260GB/s and 28M IOPS. When Gen5 GPUs come to market, Graid can further improve the IOPS numbers.

One last note on the Graid GPU: today, most of their implementations are on the SR-1010 product, which leverages an NVIDIA A2000 GPU. We bring this up to note that Graid doesn’t require an expensive or hard-to-find GPU for SupremeRAID, nor does it need one with external power. If, for some reason, a user would prefer an alternate card, Graid’s software runs on just about any NVIDIA silicon; we’ve tested it on an A2 in our lab with excellent results. In any event, the GPU is easy to install and requires no extra battery.

Why Graid SupremeRAID vs Software RAID?

Software RAID has picked up steam in recent years because of the cost, complexity, and moderate performance of early NVMe RAID cards. We’re guilty of deploying Windows Storage Spaces, Linux MD, or ZFS RAIDZ when we need a quick and easy way to get NVMe SSDs grouped together and online. But as with any storage software that doesn’t use hardware acceleration, there’s a cost. The host CPU must run the drive management and data protection, taking cycles away from applications. Graid’s GPU-based offering doesn’t have this limitation, ensuring the best possible performance for both storage and the applications on the server.

Additionally, with software RAID, the operating system selection limits the choices. Graid runs on almost anything, including over half a dozen Linux distributions and Windows. To be fair, Graid is a slightly heavier lift to get operational than software RAID, since a GPU must be installed in the system, but the additional effort is arguably negligible. The returns are amazing, as you’ll see below. We’re talking an order of magnitude with SupremeRAID over software RAID.

Graid SupremeRAID Gen5 Performance

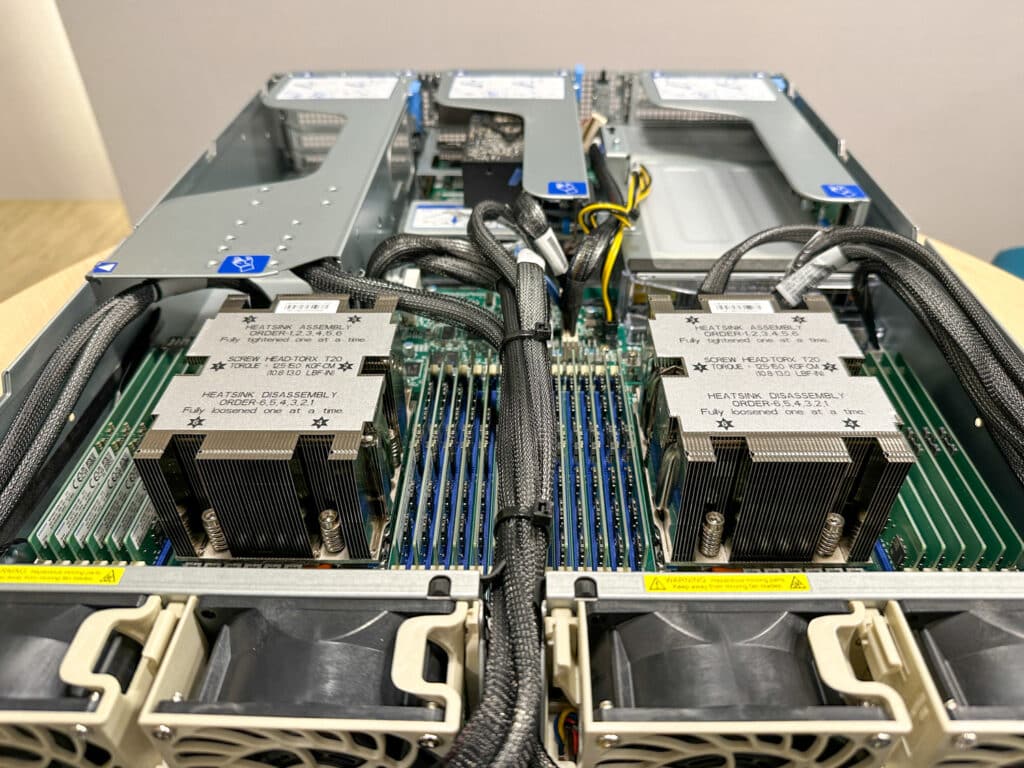

For this test, we put together a Supermicro AS-2125HS-TNR server with two AMD EPYC 9654 CPUs, 384GB DRAM, and 24 3.84TB KIOXIA CM7-R Gen5 SSDs.

We configured the drives into a RAID5 configuration for both SW RAID and Graid. For stripe size, we used a 4K stripe for Graid, with 4K, 64K, and 512K chunks for mdadm. The varying chunk size for software RAID was required to show peak 4K transfer speeds in an optimized configuration and peak large-block bandwidth in its best light. This was not as important for Graid, which handled the different block sizes without a performance detriment.
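For reference, the three software RAID arrays could be created along these lines. This is a sketch, not the exact commands used in the test: the device names (`/dev/nvme0n1` through `/dev/nvme23n1`) and the array name `/dev/md0` are assumptions, and the script only prints the `mdadm` commands rather than running them.

```python
import shlex

# Hypothetical device names; the article does not list the actual paths.
DRIVES = [f"/dev/nvme{i}n1" for i in range(24)]


def mdadm_create_cmd(chunk_kib: int, array: str = "/dev/md0") -> list[str]:
    """Build an mdadm command for a RAID5 array with the given chunk size in KiB."""
    return [
        "mdadm", "--create", array,
        "--level=5",
        f"--raid-devices={len(DRIVES)}",
        f"--chunk={chunk_kib}",  # mdadm reads a bare number as kibibytes
        *DRIVES,
    ]


if __name__ == "__main__":
    for chunk in (4, 64, 512):  # chunk sizes tested in the article
        print(shlex.join(mdadm_create_cmd(chunk)))
```

Printing the commands instead of executing them keeps the sketch safe to run on any machine; creating an array for real would destroy data on the listed drives.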

- Server: Supermicro AS-2125HS-TNR

- CPU: 2 x AMD EPYC 9654 96-Core Processor

- Memory: 24 x Samsung M321R2GA3BB6-CQKVS DDR5 4800 MT/s 16GB

- NVMe Drive: 24 x KIOXIA CM7-R 3.84T KCMY1RUG3T84

- RAID Controller: SupremeRAID SR-1010

- SupremeRAID Driver: 1.5.0-659.g10e76f72.010

- Linux OS: Ubuntu 22.04.1 LTS

RAID 5 FIO Performance

| Test | SW RAID5 4K Chunk | SW RAID5 64K Chunk | SW RAID5 512K Chunk | SupremeRAID 4K Stripe |
| --- | --- | --- | --- | --- |
| 1MB sequential write (192T/16Q) | 1.22GB/s | 3.51GB/s | 801MB/s | 148GB/s |
| 1MB sequential read (192T/16Q) | 21.8GB/s | 279GB/s | 235GB/s | 279GB/s |
| 64K random write (192T/16Q) | 822MB/s | 627MB/s | 795MB/s | 30.2GB/s |
| 4K random write (192T/32Q) | 49.8k IOPS (61.6ms) | 205k IOPS (15.01ms) | 78.7k IOPS (39ms) | 2.02M IOPS (1.52ms) |
| 4K random read (192T/32Q) | 5.6M IOPS (1.1ms) | 5.5M IOPS (1.11ms) | 5.53M IOPS (1.11ms) | 28.5M IOPS (0.22ms) |
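The workloads in the table map onto fio job parameters roughly as shown here. This is a sketch under stated assumptions: the article gives only the block size, access pattern, thread count (T), and queue depth (Q), so the target device, I/O engine, and runtime below are placeholders rather than the settings actually used.

```python
import shlex

# (job name, fio rw mode, block size, threads, queue depth) from the results table
WORKLOADS = [
    ("seq-write-1m", "write", "1m", 192, 16),
    ("seq-read-1m", "read", "1m", 192, 16),
    ("rand-write-64k", "randwrite", "64k", 192, 16),
    ("rand-write-4k", "randwrite", "4k", 192, 32),
    ("rand-read-4k", "randread", "4k", 192, 32),
]


def fio_cmd(name, rw, bs, threads, qd, target="/dev/md0", runtime_s=60):
    """Build a fio command line; target, engine, and runtime are assumed values."""
    return [
        "fio",
        f"--name={name}",
        f"--filename={target}",   # hypothetical array device
        f"--rw={rw}",
        f"--bs={bs}",
        f"--numjobs={threads}",   # the "T" value in the table
        f"--iodepth={qd}",        # the "Q" value in the table
        "--ioengine=libaio",      # assumption: engine is not stated in the article
        "--direct=1",
        "--time_based",
        f"--runtime={runtime_s}",
        "--group_reporting",
    ]


if __name__ == "__main__":
    for workload in WORKLOADS:
        print(shlex.join(fio_cmd(*workload)))
```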

Comparing the performance of software RAID and Graid was quite eye-opening. In terms of peak bandwidth, we ended up increasing the mdadm chunk size during this evaluation from 4K to 64K and 512K since, at 4K, the peak read bandwidth was low. Mdadm wasn’t great overall, but its highest sequential read speed came at the 64K chunk size, measuring 279GB/s and matching the speed of the Graid configuration. Sequential write performance for SW RAID topped out at 3.51GB/s with a 64K chunk size, although that was nothing compared to Graid, which measured 148GB/s.

Moving to a large-block random write transfer of 64K, SW RAID ranged from 627MB/s to 822MB/s, while Graid blew that out of the water, measuring 30.2GB/s.

In the final area, random 4K transfers, SW RAID delivered its best read performance at a 4K chunk size, measuring 5.6M IOPS at 1.1ms. Graid came in at an impressive 28.5M IOPS at 0.22ms in this same test. 4K random write saw its best SW RAID performance with the 64K chunk, measuring 205k IOPS at 15.01ms, compared to Graid with 2.02M IOPS at 1.52ms.
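To put the gaps in perspective, the ratio between Graid and the best software RAID result for each test can be worked out directly from the table. The snippet below is only a convenience calculation on the published numbers; the dictionary layout and function name are illustrative.

```python
# (best SW RAID result, SupremeRAID result) per test, taken from the table.
# Bandwidth rows are in GB/s (822MB/s written as 0.822); IOPS rows are in IOPS.
RESULTS = {
    "1MB sequential write": (3.51, 148.0),
    "1MB sequential read": (279.0, 279.0),
    "64K random write": (0.822, 30.2),
    "4K random write": (205_000, 2_020_000),
    "4K random read": (5_600_000, 28_500_000),
}


def speedup(sw_best: float, graid: float) -> float:
    """How many times faster Graid is than the best SW RAID configuration."""
    return graid / sw_best


if __name__ == "__main__":
    for test, (sw, graid) in RESULTS.items():
        print(f"{test}: {speedup(sw, graid):.1f}x")
```

The write tests show the widest gaps, from roughly 10x for 4K random writes to roughly 42x for 1MB sequential writes, while 1MB sequential reads are at parity and 4K random reads come in at about 5x.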

Final Thoughts

We’ve been hands-on with just about all of the modern RAID flavors, ranging from dedicated hardware cards to various software-based solutions. We’ve also tested the Graid solution many times across three different GPUs and a variety of SSD media types and NVMe interfaces. To be fair, many data sets, such as backup and recovery, large data lakes, file shares, and many others that don’t have a serious performance requirement, would be perfectly happy with any of these solutions. But if an application needs full-tilt access to the underlying flash, Graid is playing on another level altogether.

While most customers look at NVMe hardware and assume performance will be great no matter what, it’s important to understand how those systems will perform once the drives are combined—and then add a RAID layer on top of it. In a Linux environment, software RAID is really showing its limitations in keeping up with NVMe devices, especially Gen5 SSDs.

While individual drive performance is strong, not every RAID solution can take advantage of it. Comparing optimized configurations against each other, Graid offered bandwidth of 279GB/s read and 148GB/s write across 24 KIOXIA CM7-R Gen5 SSDs, while SW RAID managed 279GB/s read and 3.51GB/s write. In 4K random transfers, we saw an incredible 28.5M IOPS read and 2.02M IOPS write from Graid, with SW RAID offering just 5.6M IOPS read and 205k IOPS write. SW RAID might be “fast enough” for some environments, but it barely compares to Graid’s SupremeRAID for those that demand the highest performance levels possible.

For maximizing NVMe SSD performance in a single host like this, we haven’t seen anything on the market that can touch the Graid SupremeRAID Gen5 solution. It’s fantastic, and in this testing, we’re doing the work on an inexpensive NVIDIA A2000 GPU. Any organization looking to maximize their investment in Gen5 flash would be wise to take on a Graid PoC to see how impactful their technology can be.
