Intel launched the Optane DC Persistent Memory Module in the spring of 2019 as a way to bridge the gap between volatile DRAM and high-performance SSDs. A little over a year later, Intel has built on the platform with the Intel Optane Persistent Memory 200 Series, or Optane PMem 200 for short. The new modules are optimized for the new 3rd Gen Intel Xeon Scalable Processors, making the combination, together with Intel SSDs, very much a platform play. Intel quotes PMem 200 as delivering 32% more memory bandwidth than the first generation, which is a nice boost and something we’ll be putting to the test in this review.

By way of background, we have extensive prior coverage of PMem. This iteration isn’t much different from the first one, so most of our prior work on its architecture, benefits, and so on is still relevant today. Here are a few pieces to read if you need to get up to speed on PMem:

- Podcast #60: Kristie Mann, Intel Persistent Memory

- Intel Optane DC Persistent Memory NoSQL Performance Review

- Supermicro SuperServer with Intel Optane DC Persistent Memory First Look Review

- Intel Explains Benefits Of PMem 200 With DAOS

In this review, we have pulled together a great combination of technology. On the hardware side, we have an Intel OEM box well-equipped with PMem 200 modules and the latest Xeon Scalable CPUs. On top of that, we’ve layered MemVerge Memory Machine v1.2, software purpose-built to make the best use of persistent memory modules.

What’s New With Intel Optane Persistent Memory 200 Series

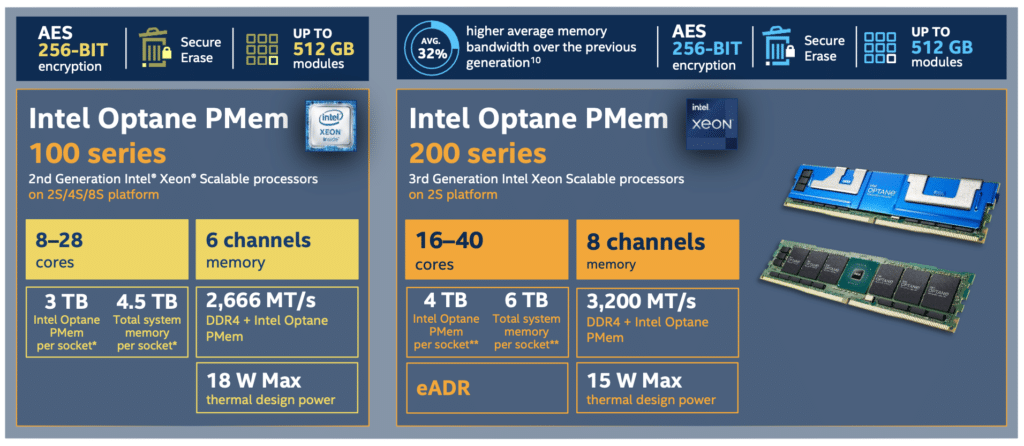

Most of the benefits of the 200 Series come from the uplift of the 3rd Gen Intel Xeon Scalable Processors. The largest difference is memory bandwidth, with PMem 200 picking up support for 3,200 MT/s. Several other changes are likewise geared toward absolute performance.

Core counts on the previous platform ranged from 8 to 28; the CPUs paired with the 200 Series start at 16 cores and go up to 40. With the first PMem, users could add 3TB of PMem per socket for a total of 4.5TB of memory per socket; with PMem 200, up to 4TB of PMem can be added for a total of 6TB per socket. The maximum thermal design power dropped from 18W to 15W. And the newest persistent memory comes with eADR, extended Asynchronous DRAM Refresh.
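The per-socket totals follow from simple channel arithmetic. The sketch below reproduces them under stated assumptions that are not spelled out in the text: one DRAM DIMM plus one PMem module per memory channel, six channels per socket on the first-generation (2nd Gen Xeon Scalable) platform and eight on the new Ice Lake platform, 256GB DRAM DIMMs, and 512GB PMem modules, with 1TB counted as 1,024GB.

```python
def max_memory_per_socket_gb(channels, dram_dimm_gb, pmem_module_gb):
    """Max memory per socket, assuming one DRAM DIMM + one PMem module per channel."""
    dram = channels * dram_dimm_gb
    pmem = channels * pmem_module_gb
    return dram, pmem, dram + pmem

# Assumed layouts: 6 channels/socket for PMem 100 platforms, 8 for Ice Lake,
# with 256GB DRAM DIMMs and 512GB PMem modules populated in every channel.
gen1 = max_memory_per_socket_gb(6, 256, 512)
gen2 = max_memory_per_socket_gb(8, 256, 512)

for name, (dram, pmem, total) in (("PMem 100", gen1), ("PMem 200", gen2)):
    # Report in TB using 1TB = 1024GB, the convention behind the 4.5TB/6TB figures.
    print(f"{name}: {pmem / 1024:.0f}TB PMem + {dram / 1024:.1f}TB DRAM = {total / 1024:.1f}TB")
```

Under these assumptions the first generation works out to 3TB PMem + 1.5TB DRAM = 4.5TB per socket and the new platform to 4TB PMem + 2TB DRAM = 6TB per socket, matching the figures quoted above; the extra two channels account for the whole increase.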

PMem 100 vs 200 Performance Differences (512GB)

| Metric (512GB module) | Optane PMem 100 | Optane PMem 200 |
|---|---|---|
| Endurance, 100% writes, 15W, 256B | 300 PBW | 410 PBW |
| Endurance, 100% writes, 15W, 64B | 75 PBW | 103 PBW |
| Bandwidth, 100% read, 15W, 256B | 5.3 GB/s | 7.45 GB/s |
| Bandwidth, 100% writes, 15W, 256B | 1.89 GB/s | 2.60 GB/s |
| Bandwidth, 100% read, 15W, 64B | 1.4 GB/s | 1.86 GB/s |
| Bandwidth, 100% writes, 15W, 64B | 0.47 GB/s | 0.65 GB/s |
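A quick way to read the two columns side by side is to compute the generation-over-generation gain for each row. This minimal sketch only does percentage arithmetic on the 512GB figures in the table; the dictionary keys are labels chosen for illustration.

```python
# (PMem 100, PMem 200) values for a 512GB module, copied from the table above.
table = {
    "Endurance 100% writes 256B (PBW)": (300, 410),
    "Endurance 100% writes 64B (PBW)": (75, 103),
    "Bandwidth 100% read 256B (GB/s)": (5.3, 7.45),
    "Bandwidth 100% writes 256B (GB/s)": (1.89, 2.60),
    "Bandwidth 100% read 64B (GB/s)": (1.4, 1.86),
    "Bandwidth 100% writes 64B (GB/s)": (0.47, 0.65),
}

def uplift_pct(old, new):
    """Percentage gain of `new` over `old`."""
    return (new - old) / old * 100

for metric, (gen1, gen2) in table.items():
    print(f"{metric}: +{uplift_pct(gen1, gen2):.1f}%")
```

Every row improves by roughly 33% to 41%, with the largest gain (about 40.6%) in 256B sequential-read bandwidth and the smallest (about 32.9%) in 64B read bandwidth.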

Intel Optane Persistent Memory 200 Series Specifications

| Specification | All SKUs |
|---|---|
| Compatible processor | 3rd Gen Intel Xeon Scalable processors on 4-socket platforms |
| Form factor | Persistent Memory Module |
| Technology | Intel Optane Technology |
| Limited warranty | 5 years |
| AFR | ≤ 0.44% |
| DDR frequency | 3200 MT/s |
| Max TDP | 15W / 18W |
| Temperature (max) | ≤ 83°C media temperature (85°C shutdown, 83°C default) |
| Temperature (ambient) | 48°C @ 2.4m/s for 12W; 43°C @ 2.7m/s for 15W |

| Specification | 128 GB | 256 GB | 512 GB |
|---|---|---|---|
| User capacity | 126.7 GB | 253.7 GB | 507.7 GB |
| MOQ | 4 / 50 | 4 / 50 | 4 / 50 |
| Endurance, 100% writes, 15W, 256B | 292 PBW | 497 PBW | 410 PBW |
| Endurance, 67% read / 33% write, 15W, 256B | 224 PBW | 297 PBW | 242 PBW |
| Endurance, 100% writes, 15W, 64B | 73 PBW | 125 PBW | 103 PBW |
| Endurance, 67% read / 33% write, 15W, 64B | 56 PBW | 74 PBW | 60 PBW |
| Bandwidth, 100% read, 15W, 256B | 7.45 GB/s | 8.10 GB/s | 7.45 GB/s |
| Bandwidth, 67% read / 33% write, 15W, 256B | 4.25 GB/s | 5.65 GB/s | 4.60 GB/s |
| Bandwidth, 100% writes, 15W, 256B | 2.25 GB/s | 3.15 GB/s | 2.60 GB/s |
| Bandwidth, 100% read, 15W, 64B | 1.86 GB/s | 2.03 GB/s | 1.86 GB/s |
| Bandwidth, 67% read / 33% write, 15W, 64B | 1.06 GB/s | 1.41 GB/s | 1.15 GB/s |
| Bandwidth, 100% writes, 15W, 64B | 0.56 GB/s | 0.79 GB/s | 0.65 GB/s |
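One way to put the PBW endurance figures in perspective is to convert them into the average number of full-module writes per day that would use up the rated endurance over the 5-year warranty. This is a back-of-the-envelope sketch, not an Intel-published metric: it assumes decimal units (1 PB = 1,000,000 GB), uses the user capacity and the 100%-write, 256B, 15W endurance row, and ignores leap days.

```python
# (user capacity in GB, 100%-write 256B endurance in PBW) per SKU, from the table above.
skus = {
    "128 GB": (126.7, 292),
    "256 GB": (253.7, 497),
    "512 GB": (507.7, 410),
}
WARRANTY_DAYS = 5 * 365  # 5-year limited warranty; leap days ignored

def full_writes_per_day(user_capacity_gb, pbw):
    """Average full-module writes per day that would exhaust `pbw` over the warranty.

    Assumes decimal units: 1 PB = 1e6 GB.
    """
    return pbw * 1e6 / user_capacity_gb / WARRANTY_DAYS

for sku, (cap, pbw) in skus.items():
    print(f"{sku}: ~{full_writes_per_day(cap, pbw):,.0f} full writes/day")
```

By this measure even the 512GB module could be completely rewritten several hundred times a day (roughly 440) for five years, and the smaller SKUs over a thousand times a day.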

MemVerge Management

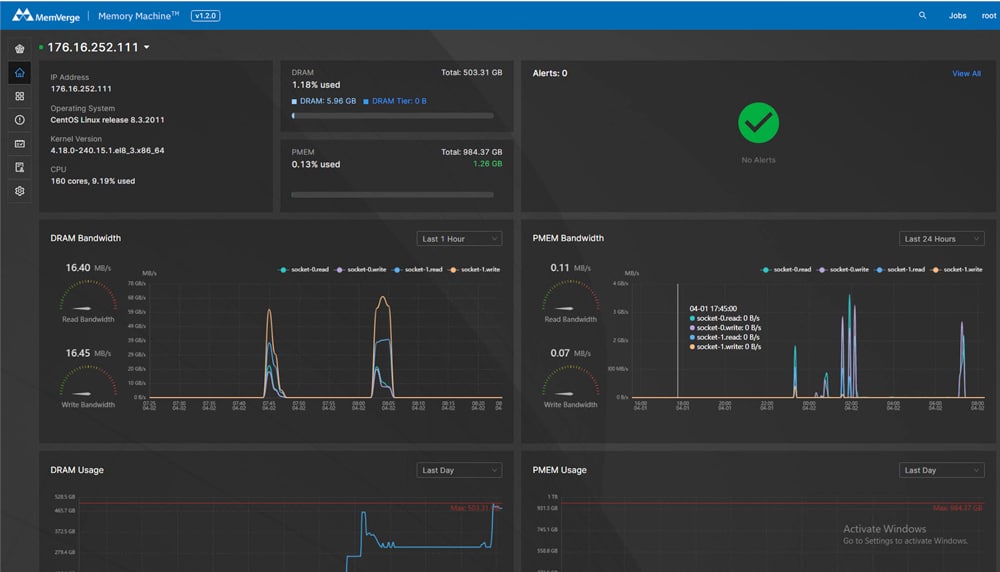

MemVerge Memory Machine v1.2 still uses the same GUI we saw in our original review. Its Global Dashboard sets itself apart by focusing on DRAM and PMem, whereas most management GUIs look at CPU, memory, storage, and networking. For IO-intensive applications, dashboards that show storage usage across multiple systems can be valuable. For memory-centric applications, the Memory Machine Global Dashboard offers the unique ability to visualize memory usage, node status, events, and alerts across multiple servers.

Because memory is the focus, we can monitor DRAM and PMem bandwidth both while we test and as most users would while running the technology. The DRAM and PMem usage data guides system admins’ sizing decisions by helping them understand their workload’s behavior, which is also needed for performance tuning and debugging. For instance, an admin can see whether memory usage stays constant once a workload reaches its peak, or whether the workload allocates and frees memory periodically. This is especially important when an application crashes because it runs out of memory (OOM): admins can use the memory usage data to quickly identify exactly when it happened.
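To make the distinction between those two usage patterns concrete, here is a minimal sketch that classifies a memory-usage trace. It is purely illustrative and not part of Memory Machine: it assumes you have exported usage samples as (time, GB in use) pairs, and the `Sample` type, the helper functions, and the `swing_gb` threshold are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Sample:
    t: float        # seconds since the workload started (hypothetical export format)
    used_gb: float  # memory in use at time t

def first_peak_time(samples):
    """Earliest timestamp at which usage hit its maximum."""
    peak = max(s.used_gb for s in samples)
    return next(s.t for s in samples if s.used_gb == peak)

def release_cycles(samples, swing_gb):
    """Count drops of at least swing_gb below a running local high.

    A workload that plateaus at its peak yields 0; one that allocates and
    frees memory periodically yields one count per release.
    """
    cycles = 0
    local_high = samples[0].used_gb
    for s in samples[1:]:
        if local_high - s.used_gb >= swing_gb:
            cycles += 1
            local_high = s.used_gb  # start tracking the next rise from here
        else:
            local_high = max(local_high, s.used_gb)
    return cycles

def trace(values, step=60.0):
    """Build samples spaced `step` seconds apart from a list of GB values."""
    return [Sample(i * step, v) for i, v in enumerate(values)]

steady = trace([10, 30, 50, 50, 50])      # climbs, then holds at peak
churn = trace([10, 40, 12, 41, 11, 40])   # allocate/free cycles
```

With a 20GB threshold, `release_cycles(steady, 20)` is 0 while `release_cycles(churn, 20)` is 2, and `first_peak_time(steady)` returns 120.0, the moment the plateau began. For an OOM investigation, the last sample before the trace ends plays the same role as the crash time.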

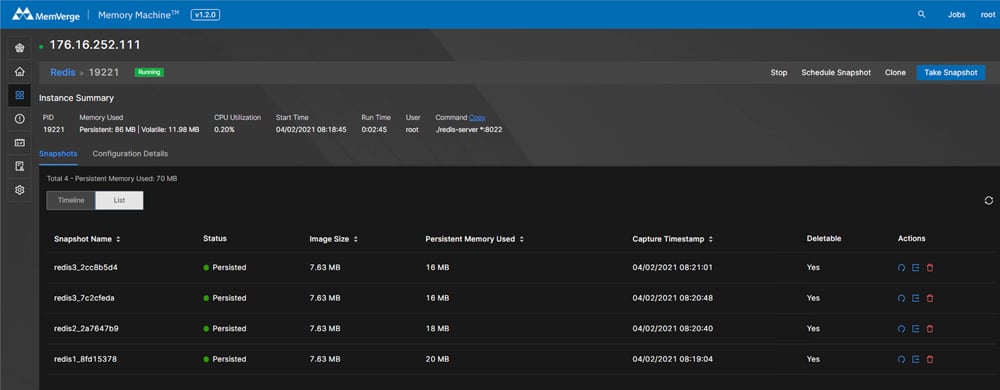

Under the instances tab, we can see the Redis instances and their summaries.

The MemVerge Memory Machine management interface can help in a number of use cases:

Crash recovery – The snapshot GUI is used to quickly bring back the database and/or troubleshoot the cause. The database log and Memory Machine Dashboard data pinpoint the time of the crash, allowing the admin to select and restore the snapshot taken closest to the crash time. Developers can then use that restored instance for debugging.

Accelerating Animation & VFX with Memory DVR – Artists want to explore different options on a base Maya scene. Traditionally, they load the base scene, apply the changes, and save the result as a separate project. They can save many separate scenes, but to show these options the scenes must be reloaded repeatedly, which takes a long time. With Memory DVR functionality, you can load the base scene once and take a snapshot of it as the base, then apply your changes and take another snapshot. To try a different effect, simply restore the base snapshot, edit, and take another snapshot. Restoring an in-memory snapshot takes a few seconds, compared to minutes for reloading a scene from storage.

Accelerating Genomic Analytics with Memory DVR – Scientists want to experiment with a machine learning algorithm using different parameter settings. They load the data, set the parameters, run the algorithm, and check the results. If the results are not good, the data is reloaded, a different set of parameters is applied, and the algorithm is run again. With Memory DVR functionality, you can load the data once and take a snapshot. From that point on, if the results are not good, you restore the base data in seconds and start another run with new parameters.
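The snapshot workflows above (pick the snapshot just before a crash; load once, then restore for every new attempt) can be sketched in a few lines. This is an in-process analogy only, assuming Python’s `copy.deepcopy` as a stand-in for a snapshot: Memory Machine captures snapshots at the process and memory level, and the `SnapshotStore` class and its methods are hypothetical, not a MemVerge API.

```python
import copy
from bisect import bisect_right

class SnapshotStore:
    """Hypothetical in-process stand-in for in-memory snapshots.

    Each snapshot is a deep copy of application state tagged with a timestamp.
    """

    def __init__(self):
        self._times = []   # snapshot timestamps, kept in increasing order
        self._states = []  # deep copies of state, parallel to _times

    def take(self, t, state):
        """Record a copy of `state` at time t (timestamps must increase)."""
        if self._times and t <= self._times[-1]:
            raise ValueError("snapshots must be taken in time order")
        self._times.append(t)
        self._states.append(copy.deepcopy(state))

    def latest_before(self, t):
        """Timestamp of the newest snapshot at or before t, or None."""
        i = bisect_right(self._times, t)
        return self._times[i - 1] if i else None

    def restore(self, t):
        """Return a fresh copy of the snapshot taken exactly at time t."""
        i = bisect_right(self._times, t) - 1
        if i < 0 or self._times[i] != t:
            raise KeyError(t)
        return copy.deepcopy(self._states[i])

# Crash recovery: choose the newest snapshot before a crash at t=250.
store = SnapshotStore()
for t in (100, 200, 300):
    store.take(t, {"t": t})
crash_pick = store.latest_before(250)  # -> 200

# Memory DVR: load once, snapshot, then restore for every parameter try.
dvr = SnapshotStore()
dvr.take(0, {"rows": list(range(1000))})  # the expensive load happens once
results = {}
for step in (2, 5, 10):
    data = dvr.restore(0)  # restore instead of reloading from storage
    data["rows"] = [r for r in data["rows"] if r % step == 0]
    results[step] = len(data["rows"])
```

Because every `restore` hands back a fresh copy, each experiment can mutate its data freely without disturbing the base snapshot, which is the property that makes restore-and-retry cheaper than reloading from storage.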

Intel Optane Persistent Memory 200 Series Performance

While PMem can be tested as block storage, which we’ve done in the past, the real benefits of PMem show up when you can address it at the byte level with the appropriate software. In many cases, application vendors such as SAP tune their applications to take advantage of PMem. While that works for some applications, there’s another option: a software-defined solution built from the ground up to help businesses tap all of the performance and persistence benefits PMem 200 offers. To test this latest generation of PMem, that’s exactly what we used.

MemVerge has one of the most comprehensive offerings when it comes to using persistent memory. We took a look at MemVerge Memory Machine earlier this year, and MemVerge has since released an update to take advantage of the new Xeon CPUs, PMem 200, and the new storage Intel has released. Memory Machine is now at v1.2, with several new features; the first two are support for 3rd Gen Intel Xeon Scalable processors and support for Intel Optane Persistent Memory 200 Series.

Memory Machine v1.2 offers support for Microsoft SQL Server on Linux, where MemVerge says it can double OLTP performance at the same memory cost. It also now supports KVM hypervisors, with dynamic tuning of the DRAM:PMem ratio per VM. In-memory database clusters such as Redis and Hazelcast now get HA through coordinated in-memory snapshots. Finally, v1.2 offers centralized memory management for DRAM and PMem across the data center.

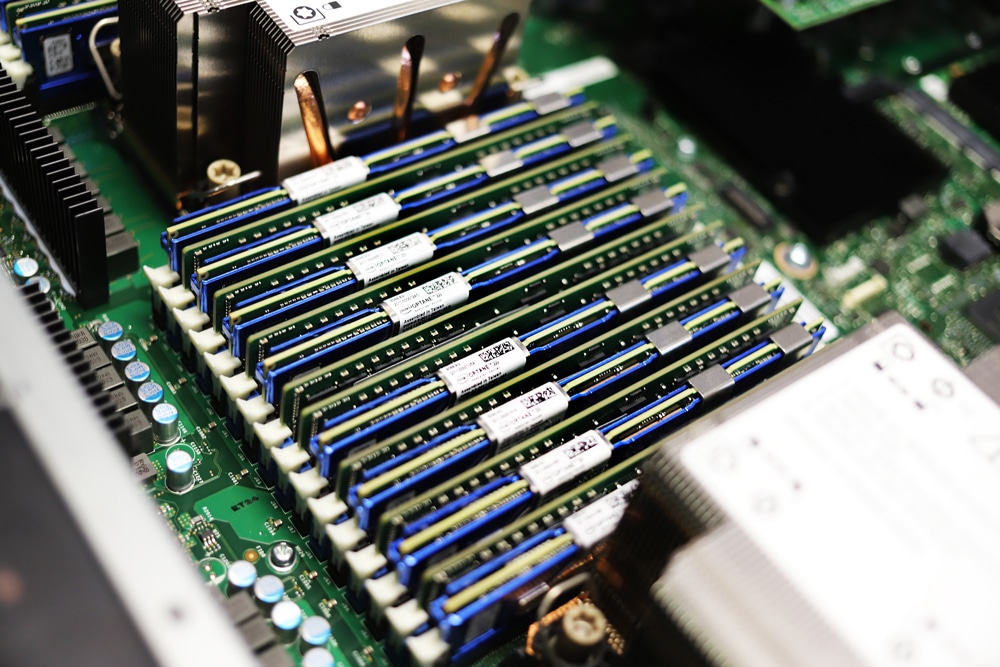

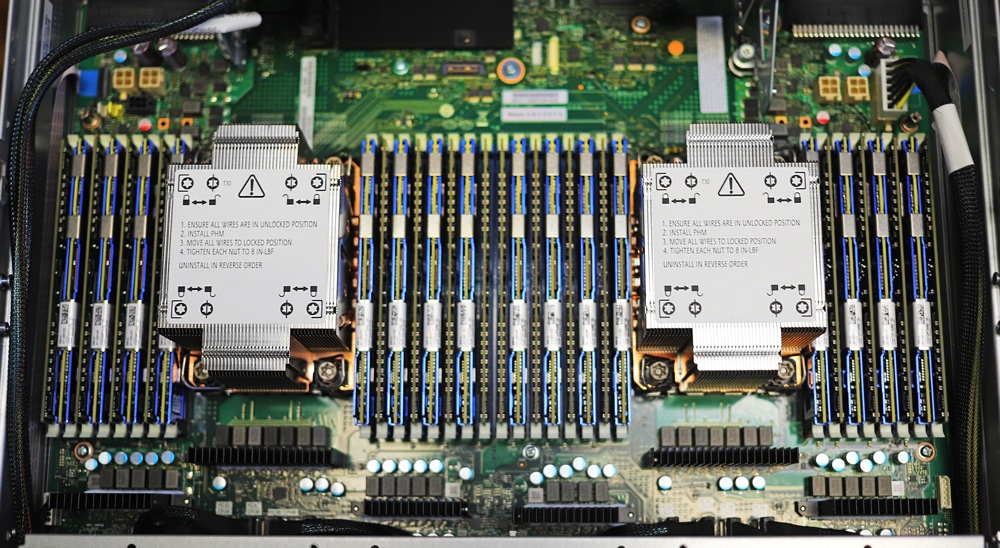

Ice Lake Platform – Intel OEM Server

- 2 x Intel Xeon Platinum 8380 @ 2.3GHz 40-cores

- 16 x 32GB DDR4 3200MHz

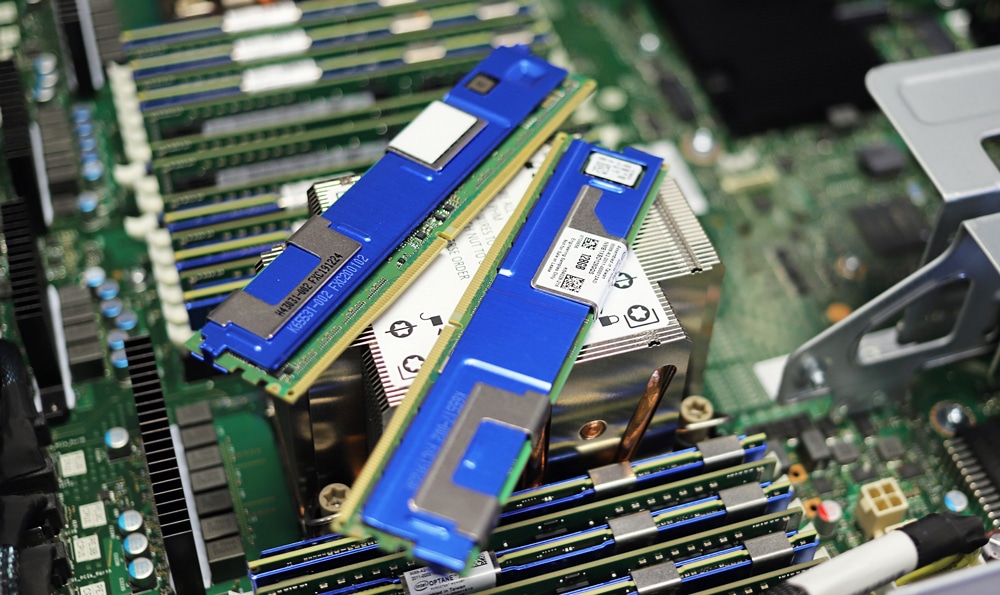

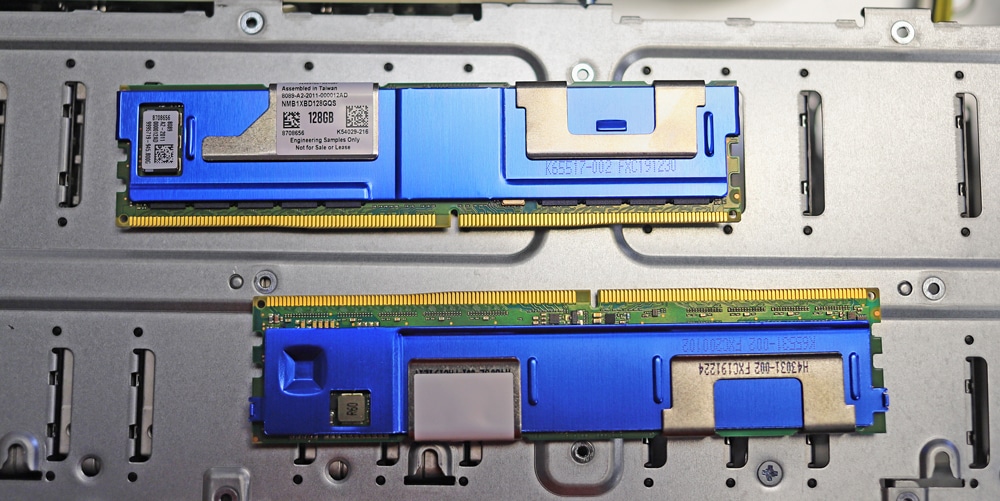

- 16 x 128GB Intel Persistent Memory 200 Series

- Boot SSD: Intel 1TB SATA

- Database SSD: Intel P5510 7.68TB

- OS: CentOS 8.3.2011

Cascade Lake Platform – Supermicro SYS-2029U-TN24R4T

- 2 x Intel Xeon Platinum 8270 @ 2.70GHz, 26 cores

- 12 x 16GB DDR4 (192GB total)

- 12 x 128GB Intel Optane Persistent Memory 100 Series

- Boot SSD: 1TB SATA SSD

- OS: CentOS 8.2.2004
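The two test systems differ not only in CPU generation but also in how much PMem sits behind each gigabyte of DRAM. This short sketch totals the raw (labeled) module capacities from the lists above and derives the DRAM:PMem ratio; usable PMem capacity is somewhat lower (126.7GB per 128GB module, per the specifications table), and the dictionary layout is just an illustrative choice.

```python
# (module count, module size in GB) for each tested server, from the lists above.
platforms = {
    "Ice Lake (PMem 200)": {"dram": (16, 32), "pmem": (16, 128)},
    "Cascade Lake (PMem 100)": {"dram": (12, 16), "pmem": (12, 128)},
}

def raw_capacity_gb(count_and_size):
    """Total labeled capacity of `count` modules of `size_gb` each."""
    count, size_gb = count_and_size
    return count * size_gb

for name, cfg in platforms.items():
    dram = raw_capacity_gb(cfg["dram"])
    pmem = raw_capacity_gb(cfg["pmem"])
    print(f"{name}: {dram}GB DRAM + {pmem}GB PMem, DRAM:PMem = 1:{pmem // dram}")
```

The Ice Lake box pairs 512GB of DRAM with 2,048GB of PMem (1:4), while the Cascade Lake box pairs 192GB of DRAM with 1,536GB of PMem (1:8), so the newer system also has proportionally more DRAM available for tiering.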

Both Optane and MemVerge Memory Machine are best suited to in-memory applications. Our usual benchmarks represent normal-to-high-stress workloads seen in real-world IT operations. Here, instead, we look at a few different tests, focusing on how DRAM, PMem, and DRAM + PMem configurations compare. For this review, we use kdb+ performance tests (bulk insert and read), as well as Redis Quick Recovery with ZeroIO Snapshot and Redis Clone with ZeroIO Snapshot.

kdb+ Performance Testing

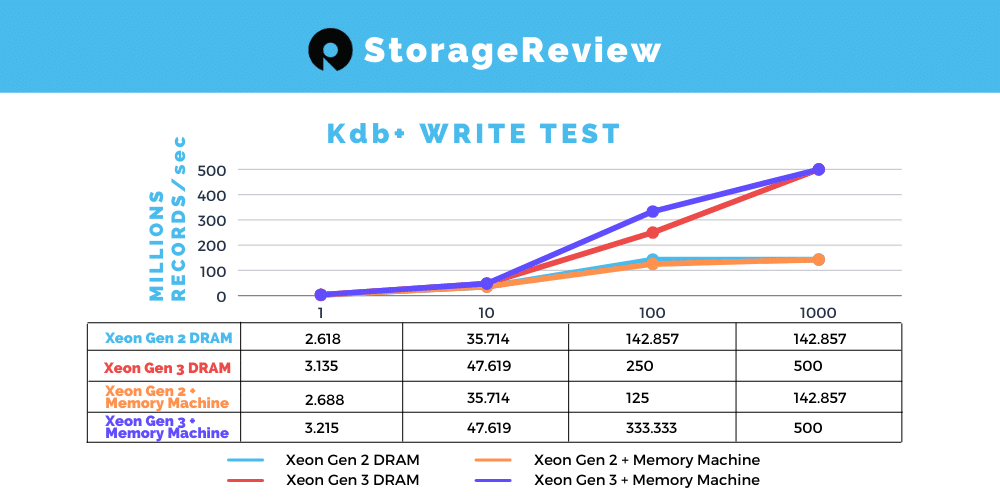

Kx’s kdb+ is an in-memory time-series database. It is known for its speed and efficiency, which makes it very popular in the financial services industry. One big constraint for kdb+ is limited DRAM capacity. MemVerge Memory Machine fits perfectly here, letting kdb+ take full advantage of PMem for expanded memory space with performance similar to that of DRAM. For the bulk insert test, we looked at a single insert and bulk inserts of 10, 100, and 1,000, measuring throughput in millions of records per second. We compared DRAM only against Memory Machine with DRAM tiering.

For the kdb+ bulk insert test, we looked at both Cascade Lake and Ice Lake. Results are recorded in millions of records per second (MR/s). Starting with Cascade Lake, at one batch both configurations were about the same. Once we moved to larger batches, DRAM pulled ahead until it hit a peak of about 142 MR/s, and MM w/ DRAM tiering caught up at the 1,000 batch mark.

The same test on Ice Lake starts off much the same: at one batch both configurations are about equal, and at 10 DRAM and MM w/ DRAM tiering are still even, but at 100 MM w/ DRAM tiering pulls ahead this time with 333 MR/s. The two meet again at 500 MR/s at the 1,000 batch mark, over 3.5 times the Cascade Lake peak.

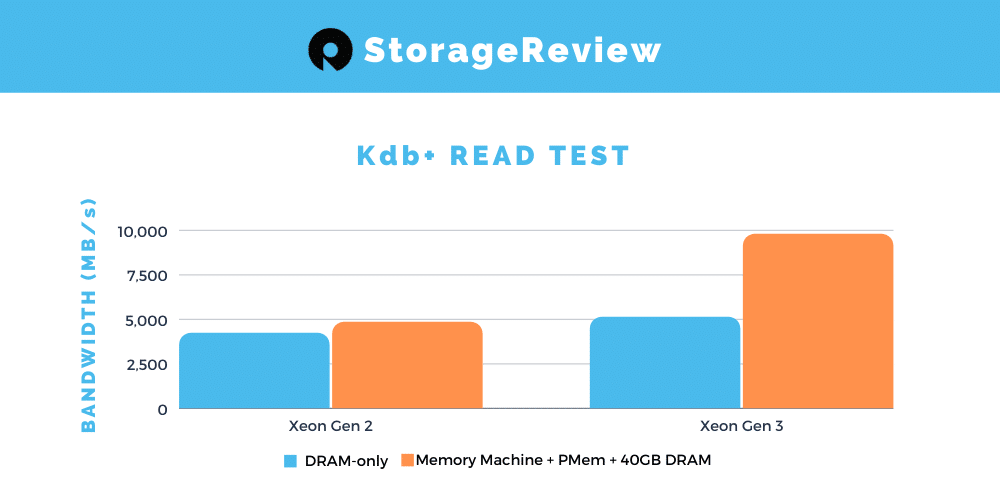

Next, we looked at kdb+ with a read test. The setup here is a bit different: the read workload is the same throughout, but this time we compared DRAM only against Memory Machine with 40GB of DRAM tiering. On Xeon Gen2, DRAM only hit 4.22GB/s, while MM w/ 40GB DRAM tiering hit 4.83GB/s.

The same test on the new processors gave us 5.13GB/s with DRAM only and a whopping 9.77GB/s with MM w/ 40GB DRAM tiering.
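The headline multipliers in this section are simple ratios of the reported numbers. The sketch below recomputes them, both across platforms (Gen3 vs. Gen2) and within each platform (Memory Machine vs. DRAM only); note that the 142 MR/s Cascade Lake peak is an approximate reading, so the 3.5x figure inherits that rounding.

```python
def speedup(baseline, result):
    """How many times larger `result` is than `baseline`."""
    return result / baseline

# (Xeon Gen2 / Cascade Lake, Xeon Gen3 / Ice Lake), as reported above.
measurements = {
    "kdb+ bulk insert peak (MR/s)": (142, 500),
    "kdb+ read, DRAM only (GB/s)": (4.22, 5.13),
    "kdb+ read, MM w/ 40GB DRAM tiering (GB/s)": (4.83, 9.77),
}

for name, (gen2, gen3) in measurements.items():
    print(f"{name}: Gen3 is {speedup(gen2, gen3):.2f}x Gen2")

# Within each platform: Memory Machine read vs. DRAM-only read.
print(f"Gen2 read, MM vs DRAM only: {speedup(4.22, 4.83):.2f}x")
print(f"Gen3 read, MM vs DRAM only: {speedup(5.13, 9.77):.2f}x")
```

The ratios come out to about 3.52x for bulk inserts, 1.22x for DRAM-only reads, and 2.02x for Memory Machine reads across generations. Within a platform, Memory Machine’s read advantage over DRAM only grows from about 1.14x on Gen2 to about 1.90x on Gen3.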

Conclusion

With new processors comes new PMem: for the Intel Optane Persistent Memory 200 Series, Intel took an existing product and made improvements where they would be most effective. The company claims 32% more memory bandwidth than the original, and the platform now offers up to 40 cores and support for 3200MT/s. While the modules come in the same capacities as the last version (128GB, 256GB, and 512GB), users can now add more modules per socket, bringing total memory to 6TB per socket. To test the new PMem, we worked with MemVerge and its newly released Memory Machine v1.2.

In application testing of the new Intel Xeon Gen3 platform with MemVerge Memory Machine v1.2, we saw huge gains over the previous-generation Intel Xeon platform. In the kdb+ write test, which measures the speed of bulk inserts at single, 10, 100, and 1,000 batches, the Gen3 Xeon platform as a whole pulled well ahead of a near top-spec Gen2 platform. At the peak of 1,000 batch inserts, we measured around 142 million records per second (MR/s) on Xeon Gen2 versus 500 MR/s on Xeon Gen3, a massive 3.5x difference. In the kdb+ read test with Memory Machine + PMem + 40GB DRAM tiering, we measured 4.83GB/s on Xeon Gen2, while Xeon Gen3 scaled up to an impressive 9.77GB/s.

Overall, there is a lot to like about the new Intel Xeon Gen3 release alongside Intel Optane Persistent Memory 200 Series, as we saw in our testing with MemVerge. While the biggest changes to the Intel platform include much faster processors, faster DRAM, and PCIe Gen4 support, Intel’s PMem 200, with the right applications, can really change the equation for a number of mission-critical use cases. Applications like SAP HANA that natively interact with PMem will be glad to have access to all of these Intel technologies. For everyone else who wants to take advantage of PMem 200, MemVerge offers an easy path to adoption.
