The Lenovo ThinkSystem SR630 V2 is a 1U, two-socket server supporting third-generation Intel Xeon Scalable processors. Its versatile configurations suit it for compute density, cloud, and even database applications, with support for three graphics cards and 12 2.5-inch drives. It also features advanced remote management from Lenovo’s XClarity Controller. This is an ideal high-density server for general deployment.

Lenovo ThinkSystem SR630 V2 Specifications

The ThinkSystem SR630 V2 supports two third-generation Intel Xeon Scalable processors, each with up to 40 cores/80 threads and a TDP of up to 270 watts. These chips support eight-channel memory, of which the SR630 V2 makes full use; it has 16 DIMM slots per socket (32 total), for a memory ceiling of 8TB using 256GB 3DS RDIMMs. Up to 16 Intel Optane Persistent Memory 200 Series modules are also supported.
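The memory ceilings quoted here follow from simple slot arithmetic. The short Python sketch below works through it; the 512GB persistent memory module size is taken from the specification table further down, and the constant and function names are illustrative only.

```python
# Capacity arithmetic behind the quoted memory ceilings.
# All names here are illustrative, not part of any Lenovo tool.
CHANNELS_PER_SOCKET = 8
DIMMS_PER_CHANNEL = 2
SOCKETS = 2
RDIMM_GB = 256   # largest 3DS RDIMM cited in the review
PMEM_GB = 512    # largest PMem 200 Series module listed in the spec table


def capacity_tb(modules: int, size_gb: int) -> float:
    """Total capacity in TB for a number of equally sized modules."""
    return modules * size_gb / 1024


total_slots = CHANNELS_PER_SOCKET * DIMMS_PER_CHANNEL * SOCKETS

# All 32 slots filled with 256GB RDIMMs.
dram_only_tb = capacity_tb(total_slots, RDIMM_GB)

# Half the slots hold RDIMMs, the other half hold persistent memory.
mixed_tb = capacity_tb(16, RDIMM_GB) + capacity_tb(16, PMEM_GB)

print(f"DIMM slots: {total_slots}")
print(f"RDIMM-only ceiling: {dram_only_tb:.0f} TB")
print(f"RDIMM + PMem ceiling: {mixed_tb:.0f} TB")
```

This reproduces the 8TB RDIMM-only figure and the 12TB combined figure from the spec table.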

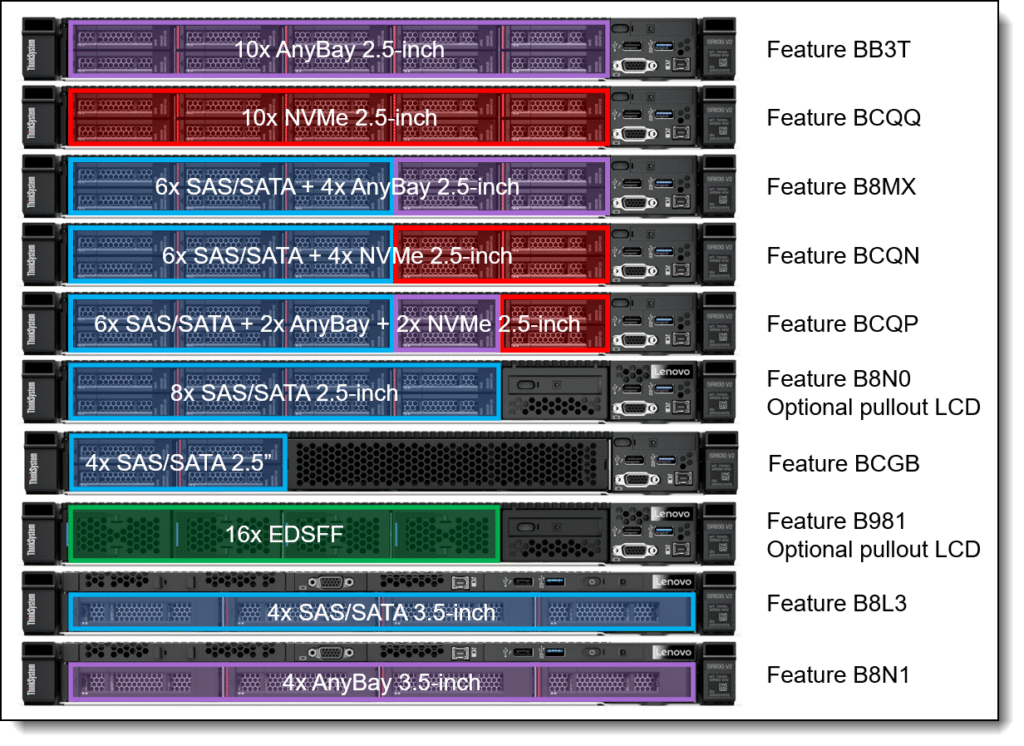

Storage is where this 1U server makes strides. The front bays support 10 2.5-inch drives, four 3.5-inch drives, or 16 EDSFF E1.S drives. Configurations with 2.5-inch drives have the added advantage of the SR630 V2’s internal RAID card, eliminating the need to use an expansion slot.

For more power and expansion than this server offers, Lenovo offers the 2U, two-socket ThinkSystem SR650 V2 (look for our full review soon) and the liquid-cooled ThinkSystem SR670 V2. And for a more compact server that doesn’t require a data center, Lenovo offers the ThinkEdge SE450.

The ThinkSystem SR630 V2’s full specifications are as follows:

| Components | Specification |
| --- | --- |
| Machine types | |
| Form factor | 1U rack. |
| Processor | One or two third-generation Intel Xeon Scalable processors (formerly codenamed “Ice Lake”). Supports processors up to 40 cores, core speeds of up to 3.6 GHz, and TDP ratings of up to 270W. |
| Chipset | Intel C621A “Lewisburg” chipset, part of the platform codenamed “Whitley” |
| Memory | 32 DIMM slots with two processors (16 DIMM slots per processor). Each processor has 8 memory channels, with 2 DIMMs per channel (DPC). Lenovo TruDDR4 RDIMMs and 3DS RDIMMs are supported. DIMM slots are shared between standard system memory and persistent memory. DIMMs operate at up to 3200 MHz at 2 DPC. |
| Persistent memory | Supports up to 16x Intel Optane Persistent Memory 200 Series modules (8 per processor) installed in the DIMM slots. Persistent memory (Pmem) is installed in combination with system memory DIMMs. |
| Memory maximum | With RDIMMs: Up to 8TB by using 32x 256GB 3DS RDIMMs. With Persistent Memory: Up to 12TB by using 16x 256GB 3DS RDIMMs and 16x 512GB Pmem modules. |
| Memory protection | ECC, SDDC (for x4-based memory DIMMs), ADDDC (for x4-based memory DIMMs, requires Platinum or Gold processors), and memory mirroring. |
| Disk drive bays | Up to 4x 3.5-inch or 12x 2.5-inch or 16x EDSFF hot-swap drive bays. See Supported drive bay combinations for details. AnyBay bays support SAS, SATA or NVMe drives. NVMe bays only support NVMe drives. Rear drive bays can be used in conjunction with 2.5-inch front drive bays. The server supports up to 12x NVMe drives all with direct connections (no oversubscription). |
| Maximum internal storage | |
| Storage controller | |
| Optical drive bays | No internal optical drive. |
| Tape drive bays | No internal backup drive. |
| Network interfaces | Dedicated OCP 3.0 SFF slot with PCIe 4.0 x16 host interface. Supports a variety of 2-port and 4-port adapters with 1GbE, 10GbE and 25GbE network connectivity. One port can optionally be shared with the XClarity Controller (XCC) management processor for Wake-on-LAN and NC-SI support. |
| PCI Expansion slots | Up to 3x PCIe 4.0 slots, all with rear access, plus a slot dedicated to the OCP adapter. Slot availability is based on riser selection and rear drive bay selection. Slot 3 requires two processors. Four choices for rear-access slots are offered. For 2.5-inch front drive configurations, the server supports the installation of a RAID adapter or HBA in a dedicated area that does not consume any of the PCIe slots. Note: Not all slots are available in a 1-processor configuration. See the I/O expansion for details. |
| GPU support | Supports up to 3x single-wide GPUs |
| Ports | Front: 1x USB 3.1 G1 (5 Gb/s) port, 1x USB 2.0 port (also for XCC local management), External diagnostics port, optional VGA port. Rear: 3x USB 3.1 G1 (5 Gb/s) ports, 1x VGA video port, 1x RJ-45 1GbE systems management port for XCC remote management. Optional DB-9 COM serial port (installs in slot 3). Internal: 1x USB 3.1 G1 connector for operating system or license key purposes. |
| Cooling | Up to 8x N+1 dual-rotor redundant hot-swap 40 mm fans, configuration dependent. One fan integrated in each power supply. |
| Power supply | Up to two hot-swap redundant AC power supplies, 80 PLUS Platinum or 80 PLUS Titanium certification. 500 W, 750 W, 1100 W and 1800 W AC options, supporting 220 V AC. 500 W, 750 W and 1100 W options also support 110V input supply. In China only, all power supply options support 240 V DC. Also available is a 1100W power supply with a -48V DC input. |
| Video | G200 graphics with 16 MB memory with 2D hardware accelerator, integrated into the XClarity Controller. Maximum resolution is 1920×1200 32bpp at 60Hz. |
| Hot-swap parts | Drives, power supplies, and fans. |
| Systems management | Operator panel with status LEDs. Optional External Diagnostics Handset with LCD display. Models with 8x 2.5-inch front drive bays can optionally support an Integrated Diagnostics Panel. XClarity Controller (XCC) embedded management, XClarity Administrator centralized infrastructure delivery, XClarity Integrator plugins, and XClarity Energy Manager centralized server power management. Optional XClarity Controller Advanced and Enterprise to enable remote control functions. |
| Security features | Chassis intrusion switch, Power-on password, administrator’s password, Trusted Platform Module (TPM), supporting TPM 2.0. In China only, optional Nationz TPM 2.0. Optional lockable front security bezel. |
| Operating systems supported | Microsoft Windows Server, Red Hat Enterprise Linux, SUSE Linux Enterprise Server, VMware ESXi. See the Operating system support section for specifics. |
| Limited warranty | Three-year or one-year (model dependent) customer-replaceable unit and onsite limited warranty with 9×5 next business day (NBD). |
| Service and support | Optional service upgrades are available through Lenovo Services: 4-hour or 2-hour response time, 6-hour fix time, 1-year or 2-year warranty extension, software support for Lenovo hardware and some third-party applications. |
| Dimensions | Width: 440 mm (17.3 in.), height: 43 mm (1.7 in.), depth: 773 mm (30.4 in.). See Physical and electrical specifications for details. |
| Weight | Maximum: 26.3 kg (58 lb) |

Lenovo ThinkSystem SR630 V2 Build and Design

The ThinkSystem SR630 V2 has standard 1U rack server measurements of 17.3 by 1.7 by 30.4 inches (WHD) and weighs a maximum of 58 pounds. Drive bays dominate the front panel. The 2.5-inch and 3.5-inch options support AnyBay, which accepts a mix of SAS, SATA, and NVMe drives for excellent versatility. Our unit has eight of its ten 2.5-inch bays populated for this review.

The front panel has the expected functionality, including USB 3.2 Gen 1 (5Gbps), VGA, the power button, and status lights. The USB 2.0 port and external diagnostics port are for communicating with the XClarity Controller (XCC), the SR630 V2’s management system, which we’ll look at later.

XCC data can be viewed in several ways. For high-security data centers, Lenovo offers the SR630 V2 with a built-in diagnostics display, which takes up two of the 2.5-inch front bays and reduces the total number of front 2.5-inch drives to eight.

Lenovo also offers an external diagnostic handset, which is essentially the LCD display on a dongle that plugs into the front of the server. It’s magnetized for convenience. A third option is to plug a mobile device into the front USB 2.0 port and use the XClarity app.

Under the port cluster is a pull tab showing the XCC’s MAC and local network address. Lenovo offers an optional lockable front security bezel to prevent unauthorized access. For further security, the SR630 V2 has a chassis intrusion switch.

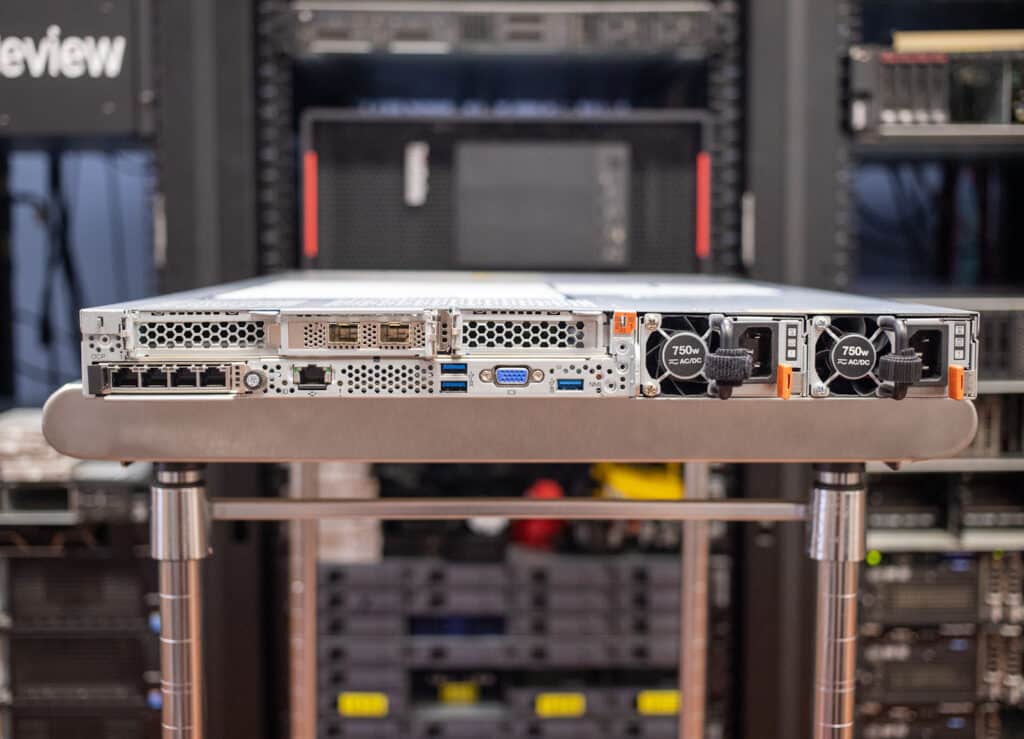

Around the back, the three PCIe 4.0 x16 expansion slots and an OCP 3.0 slot are visible; the latter, at the bottom left, comes out with a thumbscrew. A second CPU must be installed to use the third expansion slot. A low-profile card can be installed in each, or you could mix and match one slot with a full-height/half-length card and use a low-profile card in the other available slot. Up to three 75W GPUs, like the NVIDIA T4 and A2, are supported.

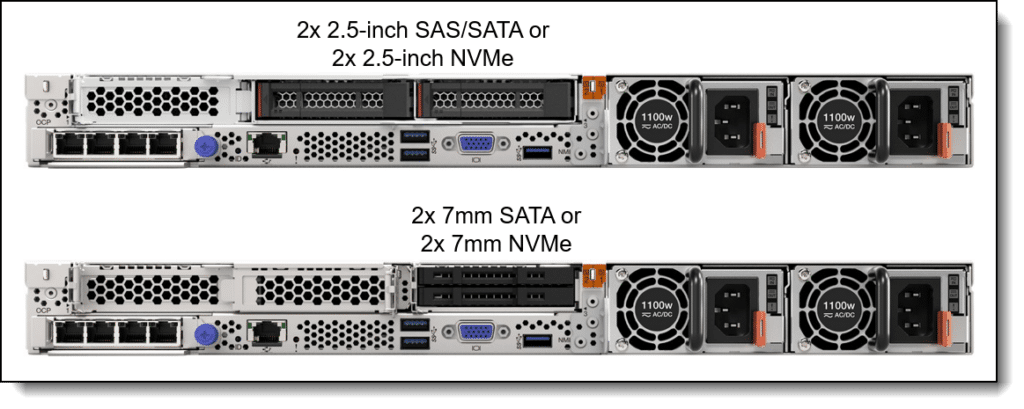

Though the SR630 V2 supports M.2 boot storage, it also still supports hot-swap boot storage in the form of two 2.5-inch 7mm drives installed in a rear bay, which would take up the expansion slot next to the power supplies. You could also opt for two 2.5-inch 9.5mm height bays back here, each taking up a slot and leaving you with just one free, but increasing the total 2.5-inch drive capacity to 12.

The SR630 V2’s dual hot-swap redundant power supplies are offered in 500W, 750W, 1100W, and 1800W options, with the expected 80 Plus ratings of Platinum or Titanium. Rear port selection includes 1GbE for IPMI, VGA, and three USB 3.2 Gen 1 Type-A (5Gbps) ports. An optional serial port can go in the third expansion slot. Our model has a four-port networking card in the OCP slot; there are 1GbE, 10GbE, and 25GbE options.

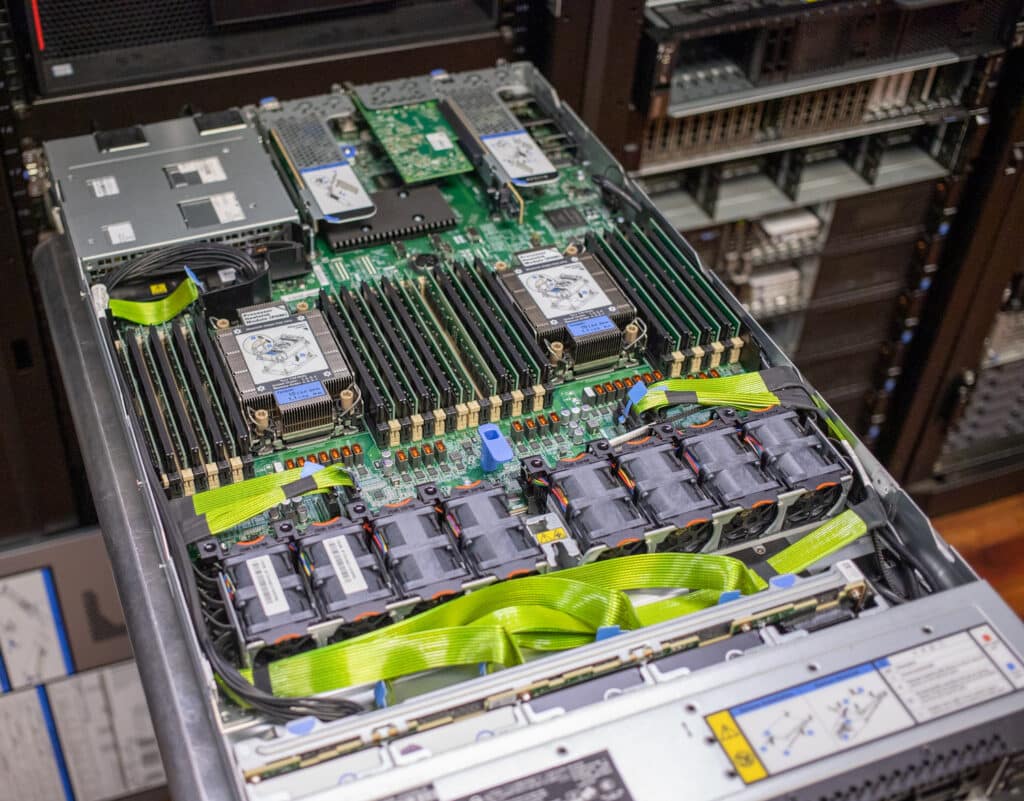

Let’s go inside. Configured with front 2.5-inch drive bays like our model, the SR630 V2 has room for a built-in RAID card powerful enough to handle all the drives, eliminating the need to use an expansion slot. Configurations with 3.5-inch drives don’t have this luxury, but all drive configurations support M.2 boot drives; these go in an adapter behind the front drive bays. One or two M.2 drives are supported; two-drive configurations are RAID 1.

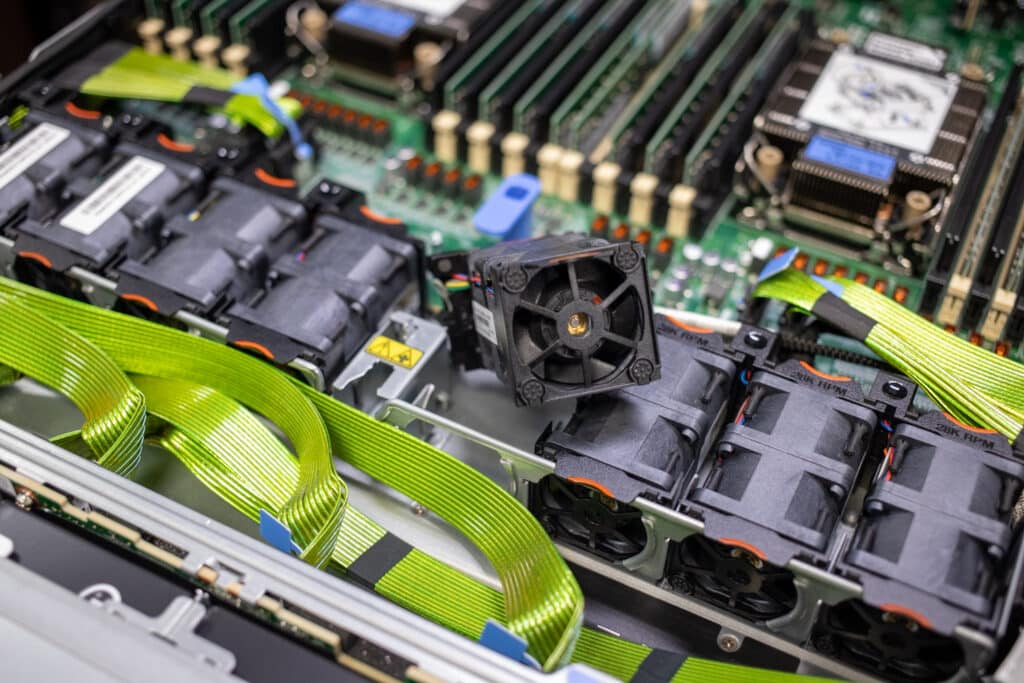

The CPU sockets straddle the centerline, each with 16 DIMM slots. Airflow comes through the front and through the CPU heatsinks. Here’s one of the eight hot-swap fans. The first-generation SR630 only had seven fans.

The heatsinks on the CPUs in our review model don’t have the extra radiators on them (used for the highest TDP CPUs; also new for the SR630 V2), but they’d extend in front of the fans. The SR630 V2’s most effective cooling setup is with EDSFF drives because they allow more airflow through the front panel.

Here are the expansion card slots removed; notice the risers.

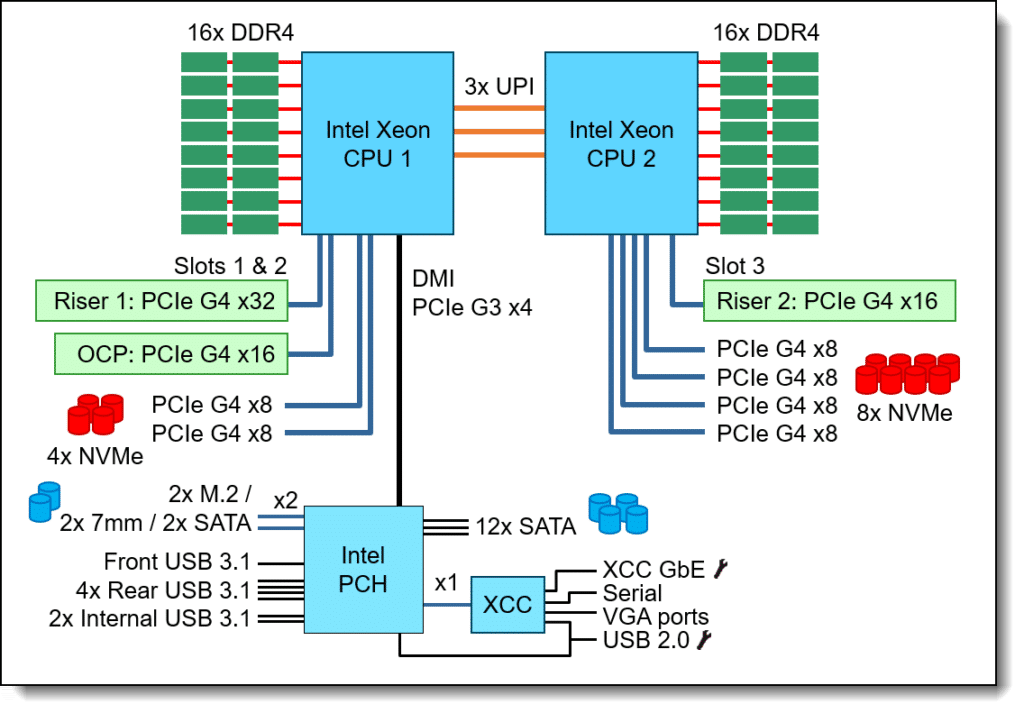

And that’s about all there is to see inside the SR630 V2. It’s cleanly designed and easy to service. Most importantly, Lenovo maximized all available space; it’s impressive how much can fit into a 1U server. The architectural diagram is below for those who want to dig into the details.

Lenovo ThinkSystem SR630 V2 Remote Management

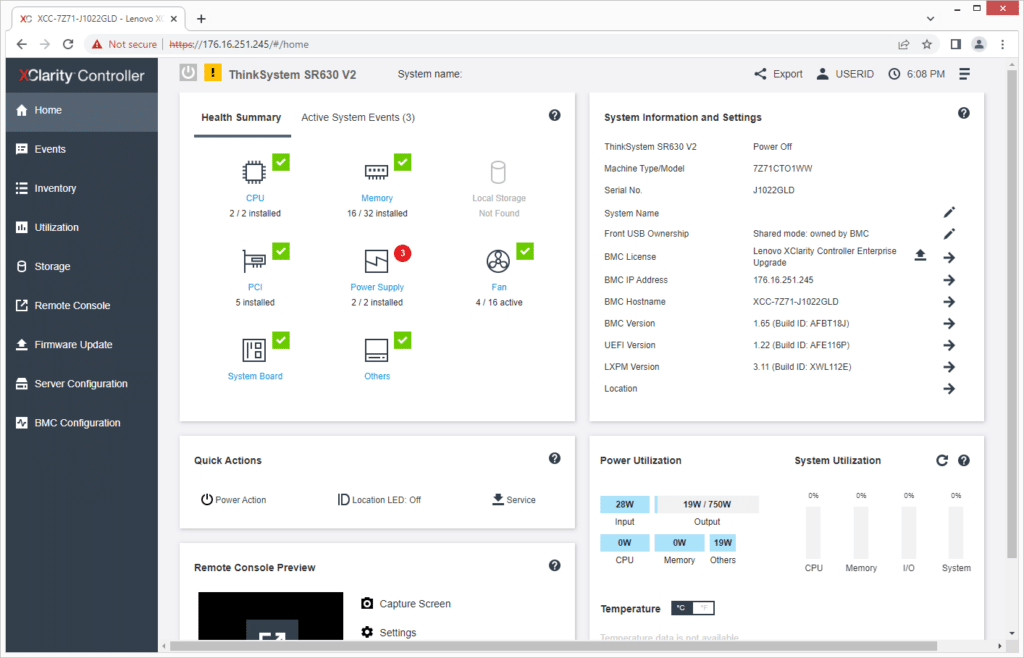

Before we get to benchmarking, here’s what you can expect from the SR630 V2’s XClarity remote management through the browser interface. The Home tab gives you a quick health summary, system information, and quick actions.

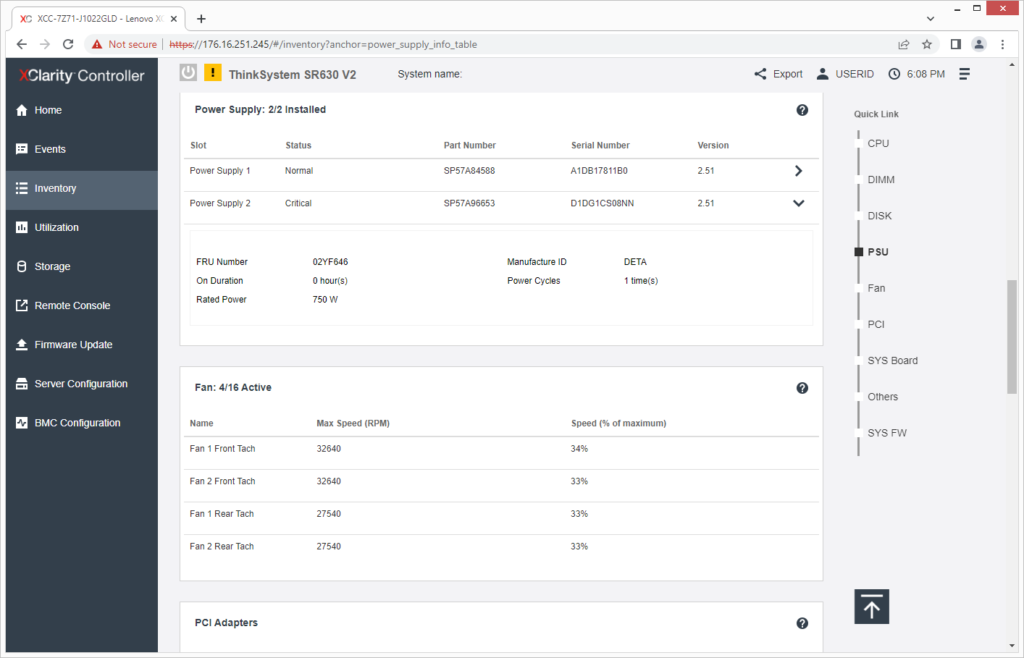

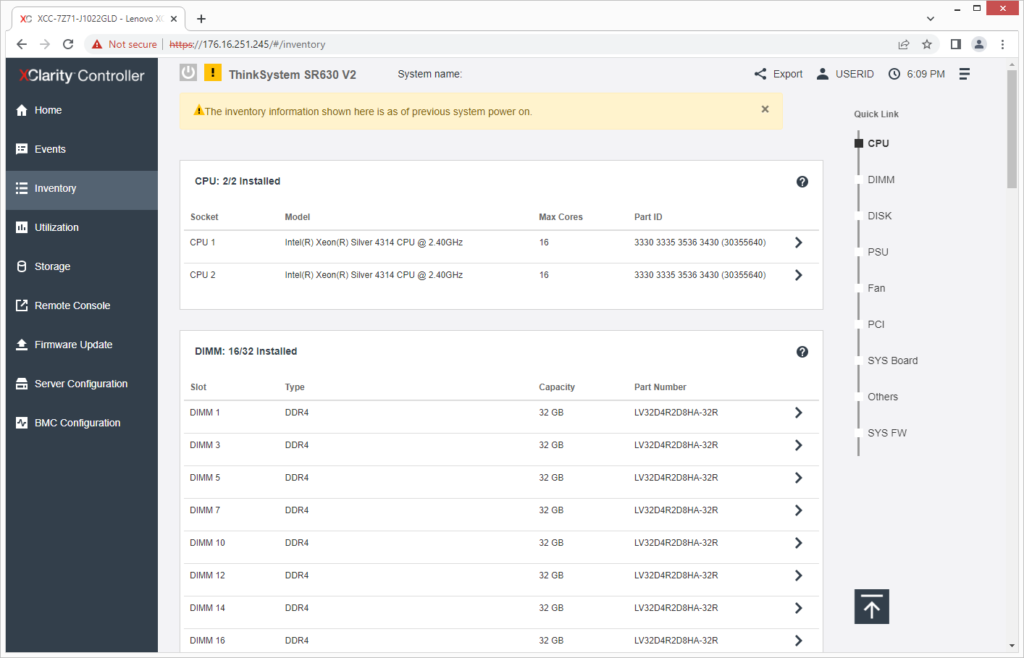

The inventory shows what’s installed, from processors and memory to fans. The screenshot immediately below shows a warning that we had one of the power supplies unplugged. (See the Critical status next to Power Supply 2.)

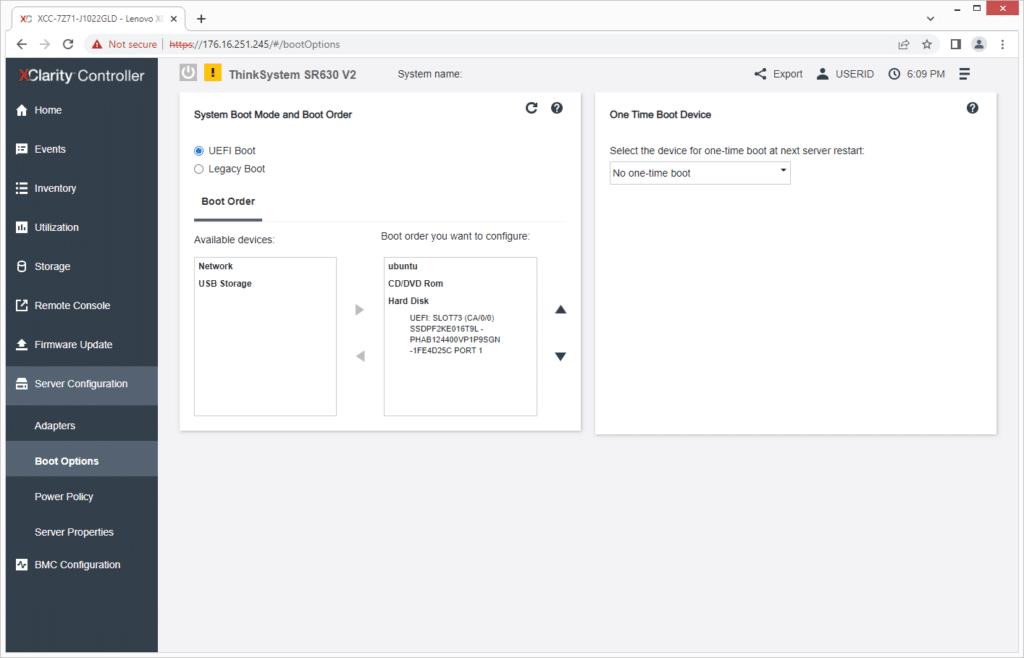

The Server Configuration section has several subsections, including Boot Options.

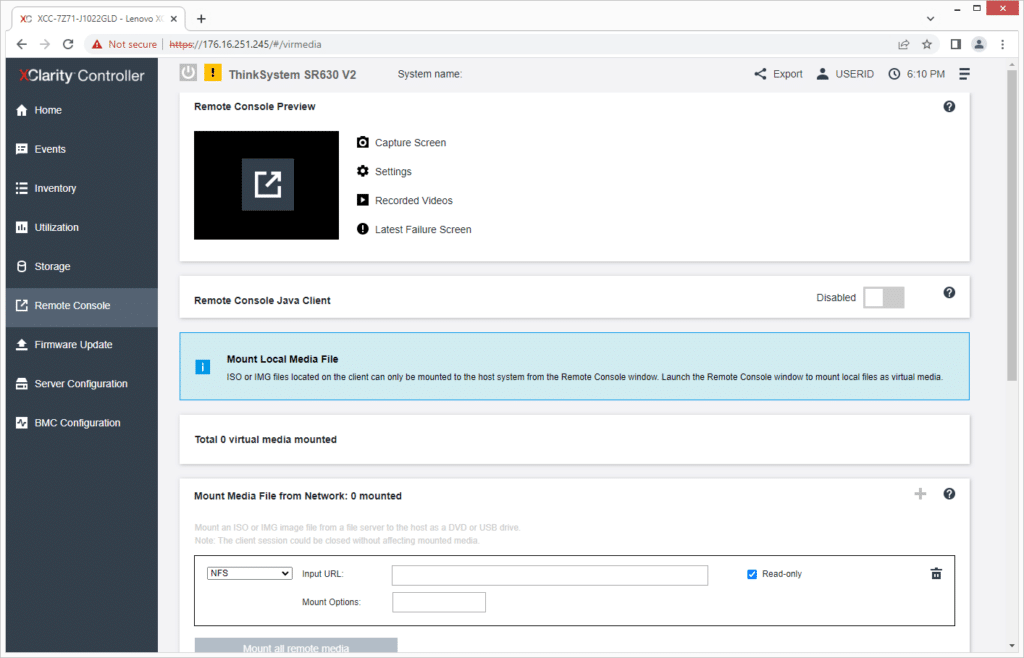

Remote Console allows opening a remote console and mounting media files.

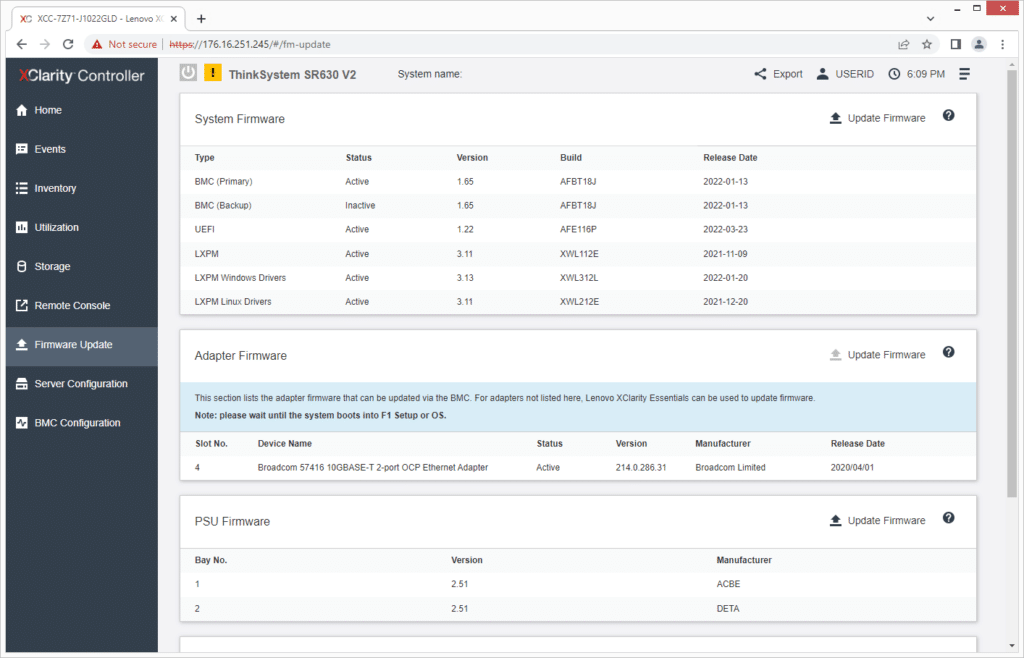

XClarity also allows you to update the SR630 V2’s components, including expansion card firmware.

Lenovo ThinkSystem SR630 V2 Performance

For our performance testing, our ThinkSystem SR630 V2 review unit is configured as follows:

- 2x Intel Xeon Silver 4314 processors, each:

- 16 cores/32 threads

- 2.4GHz base frequency; 3.4GHz Turbo

- 2 UPI links

- 135W TDP

- 512GB DDR4-2667 RAM (256GB and eight channels per socket) via 16x 32GB RDIMMs

- 1 x 1.6TB Intel P6500 Gen4 SSD Boot

- 8 x 7.68TB Solidigm P5520 Gen4 SSDs tested in JBOD

This configuration balances value and performance. The Xeon Silver CPUs only support two UPI links. On the upside, these chips have a low TDP and thus don’t require the larger CPU heatsinks or power supplies. Eight NVMe drives ought to give it plenty of IOPS.
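As a quick sanity check on the configuration above, the following Python sketch totals the installed memory and raw NVMe capacity and estimates the theoretical peak memory bandwidth. It assumes one 32GB RDIMM per channel (eight per socket, as the configuration list implies) and the standard 8 bytes per transfer for a 64-bit DDR4 channel; the result is a theoretical ceiling, not a measured figure.

```python
# Back-of-the-envelope totals for the review configuration.
# Assumption: one RDIMM per memory channel, 64-bit (8-byte) DDR4 channels.
SOCKETS = 2
CHANNELS_PER_SOCKET = 8
DIMM_GB = 32
DIMM_COUNT = 16
TRANSFER_RATE_MT_S = 2667      # DDR4-2667, mega-transfers per second
BYTES_PER_TRANSFER = 8         # 64-bit data bus per channel
DATA_DRIVES = 8
DRIVE_TB = 7.68

total_memory_gb = DIMM_COUNT * DIMM_GB

# Peak bandwidth per channel in GB/s (decimal), then scaled to the system.
per_channel_gb_s = TRANSFER_RATE_MT_S * BYTES_PER_TRANSFER / 1000
peak_memory_gb_s = per_channel_gb_s * CHANNELS_PER_SOCKET * SOCKETS

raw_storage_tb = DATA_DRIVES * DRIVE_TB

print(f"Installed memory: {total_memory_gb} GB")
print(f"Theoretical peak memory bandwidth: {peak_memory_gb_s:.1f} GB/s")
print(f"Raw NVMe data capacity: {raw_storage_tb:.2f} TB")
```

Under these assumptions the system has a theoretical memory bandwidth ceiling of roughly 341 GB/s across both sockets and 61.44TB of raw capacity on the eight data drives.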

VDBench Workload Analysis

When it comes to benchmarking storage devices, application testing is best, and synthetic testing comes in second place. While not a perfect representation of actual workloads, synthetic tests do help to baseline storage devices with a repeatability factor that makes it easy to do apples-to-apples comparison between competing solutions.

These workloads offer a range of testing profiles, from “four corners” tests and common database transfer size tests to trace captures from different VDI environments. All of these tests use the common vdBench workload generator, with a scripting engine to automate and capture results over a large compute testing cluster. This allows us to repeat the same workloads across a wide range of storage devices, including flash arrays and individual storage devices.

Profiles:

- 4K Random Read: 100% Read, 128 threads, 0-120% iorate

- 4K Random Write: 100% Write, 128 threads, 0-120% iorate

- 64K Sequential Read: 100% Read, 32 threads, 0-120% iorate

- 64K Sequential Write: 100% Write, 16 threads, 0-120% iorate

- Synthetic Database: SQL and Oracle

- VDI Full Clone and Linked Clone Traces
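To make the four-corners profiles above more concrete, here is a minimal Python sketch that models them as data and generates the load points for a rate sweep. This is not vdBench syntax. The `Profile` class and `load_points` function are hypothetical names, and the 10% step size is an assumption; the profile list only states that the I/O rate is swept up to 120%.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    """One synthetic profile from the list above (hypothetical model)."""
    name: str
    block_kib: int
    read_pct: int
    threads: int
    sequential: bool


FOUR_CORNERS = [
    Profile("4K Random Read", 4, 100, 128, False),
    Profile("4K Random Write", 4, 0, 128, False),
    Profile("64K Sequential Read", 64, 100, 32, True),
    Profile("64K Sequential Write", 64, 0, 16, True),
]


def load_points(max_iops: int, step_pct: int = 10, top_pct: int = 120) -> list:
    """Target I/O rates from step_pct up to top_pct of a measured maximum.

    Assumption: the sweep advances in fixed percentage steps.
    """
    return [max_iops * pct / 100 for pct in range(step_pct, top_pct + 1, step_pct)]


# Example: a device that sustains 1,000,000 IOPS at 100% load.
points = load_points(1_000_000)
for profile in FOUR_CORNERS:
    print(f"{profile.name}: {len(points)} load points, "
          f"{points[0]:,.0f} to {points[-1]:,.0f} IOPS")
```

Each point on the latency curves in the charts that follow corresponds to one such target rate, which is why the plots start at a fraction of peak IOPS and climb toward it.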

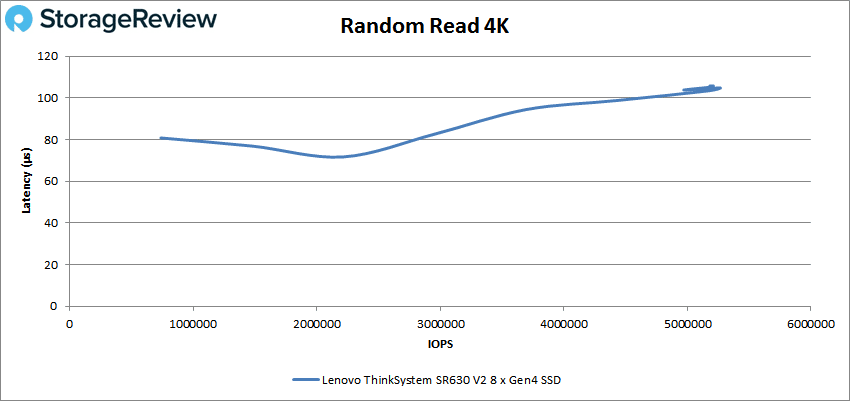

Starting with 4K random read, the SR630 V2 shows itself a strong performer, thanks to the eight NVMe drives in our configuration. It maintained low latency, topping out at 106µs and an impressive 5.18 million IOPS, with a touch of instability at the end.
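The peak figures can be related to queue depth and bandwidth with two standard formulas. The sketch below applies Little's Law (requests in flight = throughput × latency) and a block-size conversion to the reported 4K random read peak. It assumes the 106µs figure is an average latency at peak load and that "4K" means 4KiB; the function names are illustrative.

```python
# Relating the reported 4K random read peak to concurrency and bandwidth.
PEAK_IOPS = 5.18e6        # from the review
LATENCY_S = 106e-6        # 106 microseconds, from the review
BLOCK_BYTES = 4 * 1024    # assumption: "4K" means 4 KiB


def outstanding_ios(iops: float, latency_s: float) -> float:
    """Little's Law: average requests in flight = rate x time in system."""
    return iops * latency_s


def bandwidth_gb_per_s(iops: float, block_bytes: int) -> float:
    """Throughput in decimal GB/s for a fixed block size."""
    return iops * block_bytes / 1e9


in_flight = outstanding_ios(PEAK_IOPS, LATENCY_S)
read_gb_s = bandwidth_gb_per_s(PEAK_IOPS, BLOCK_BYTES)

print(f"Average I/Os in flight at peak: {in_flight:.0f}")
print(f"Equivalent bandwidth: {read_gb_s:.1f} GB/s")
```

Under these assumptions, the peak corresponds to roughly 549 I/Os in flight and about 21.2 GB/s of small-block read traffic across the eight drives.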

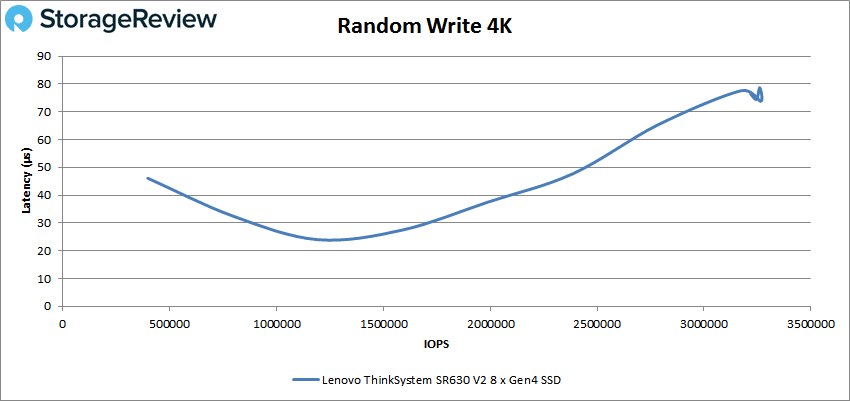

The 4K random write results continued to show low latency. The SR630 V2 started at 46µs and just shy of 4 million IOPS, dropped to 24µs at 11.9 million IOPS, and peaked at 76µs and 32.1 million IOPS. It again showed slight instability at the tail end, but nothing out of the ordinary for this test.

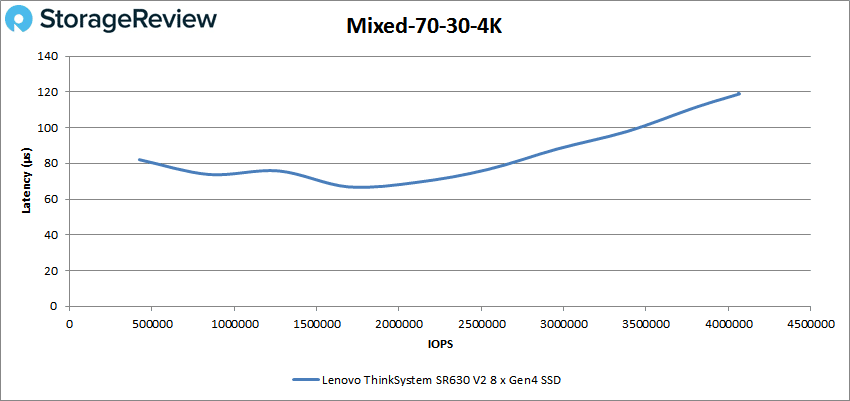

We also have our mixed 70/30 4K test, where the SR630 V2’s curve wasn’t too different from that of the other 4K tests. It ended with 4.06 million IOPS at 119µs.

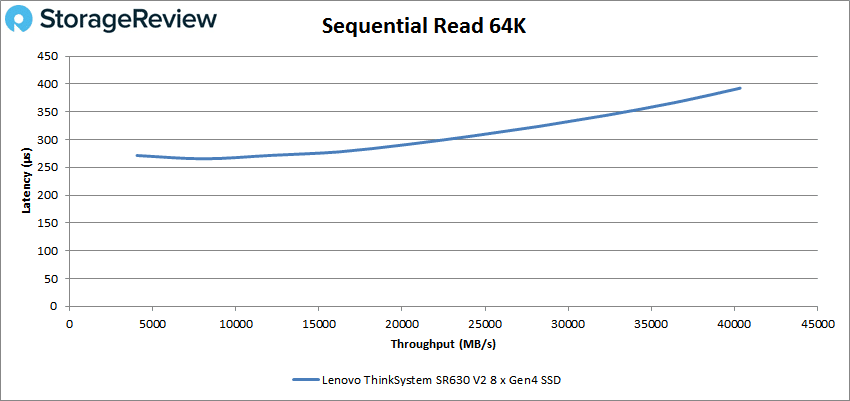

Moving on to the 64K sequential tests, the SR630 V2 showed very stable results in read. It started at 271µs and 4,046MB/s and ended at 393µs and 40,342MB/s, or 634,476 IOPS.

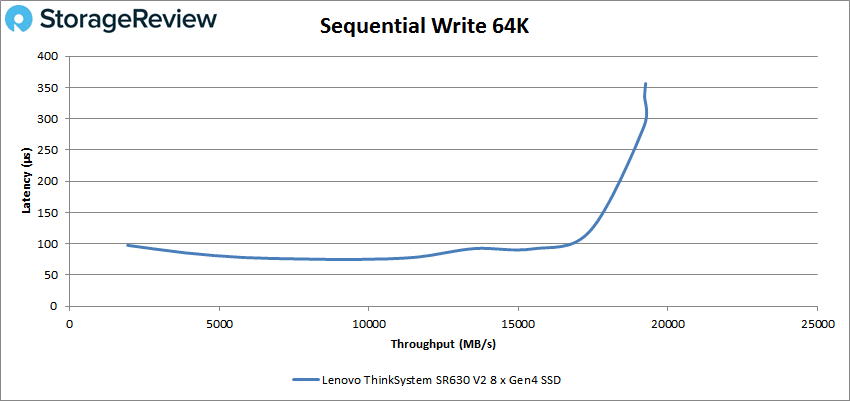

The SR630 V2 started out strongly in sequential write 64K, with latencies under 100µs, until it took a sharp spike. The ending number was 356µs and 19,255MB/s.
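For a rough per-drive view of the 64K sequential results, the sketch below divides the aggregate throughput by the number of data drives. It assumes the load was spread evenly across the eight NVMe drives in the JBOD, which the review does not state explicitly.

```python
# Per-drive averages for the 64K sequential results.
# Assumption: load is distributed evenly across the eight data drives.
DATA_DRIVES = 8
SEQ_READ_MB_S = 40_342     # aggregate, from the review
SEQ_WRITE_MB_S = 19_255    # aggregate, from the review


def per_drive(aggregate_mb_s: float, drives: int) -> float:
    """Average throughput per drive in MB/s."""
    return aggregate_mb_s / drives


read_per_drive = per_drive(SEQ_READ_MB_S, DATA_DRIVES)
write_per_drive = per_drive(SEQ_WRITE_MB_S, DATA_DRIVES)
write_to_read = SEQ_WRITE_MB_S / SEQ_READ_MB_S

print(f"Sequential read per drive:  {read_per_drive:,.0f} MB/s")
print(f"Sequential write per drive: {write_per_drive:,.0f} MB/s")
print(f"Write/read throughput ratio: {write_to_read:.2f}")
```

On that assumption, each drive averaged about 5,043MB/s in read and about 2,407MB/s in write, with aggregate writes running at a little under half the read rate.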

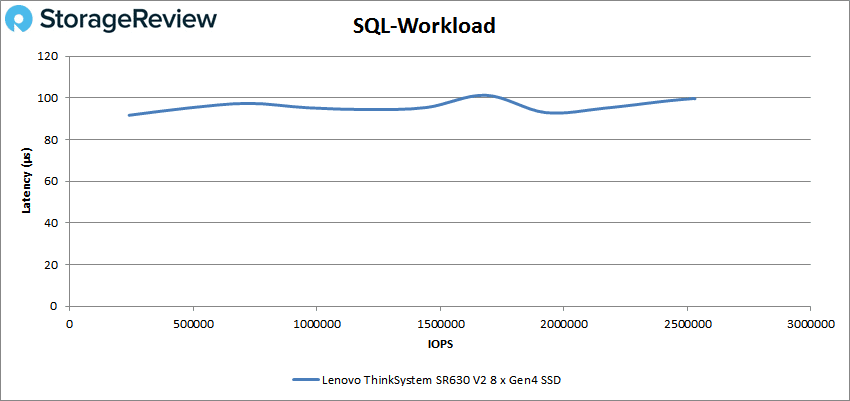

Moving on, our SQL workloads are SQL, SQL 90-10, and SQL 80-20. The SQL workload saw the SR630 V2 doing well, with relatively straight-line performance; it achieved 2.53 million IOPS with just under 100µs latency and no instability.

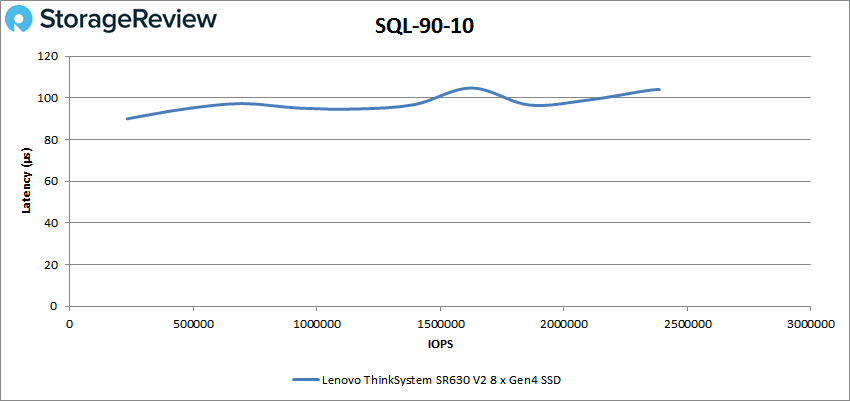

The SR630 V2’s curve was similar in SQL 90-10. The final number was 2.39 million IOPS at 104µs latency.

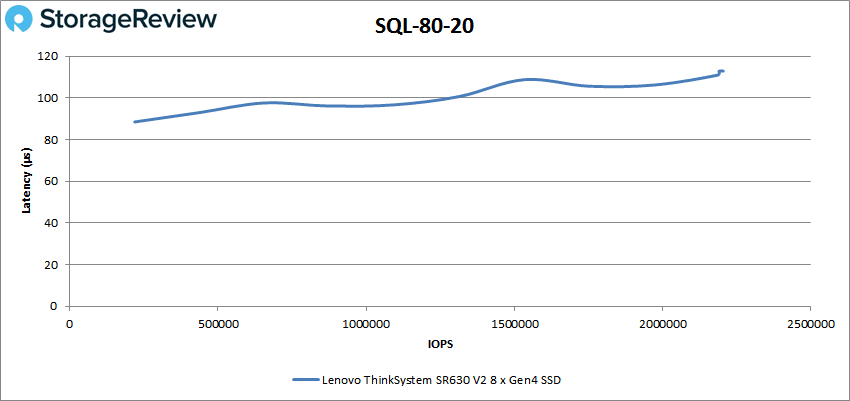

Last in the SQL workload tests is SQL 80-20, where the SR630 V2 shows some tiny instability at the end, finishing with 2.2 million IOPS and 113µs latency.

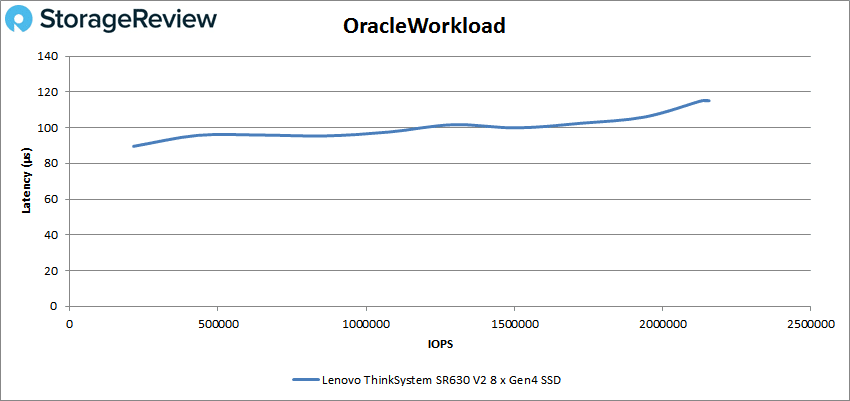

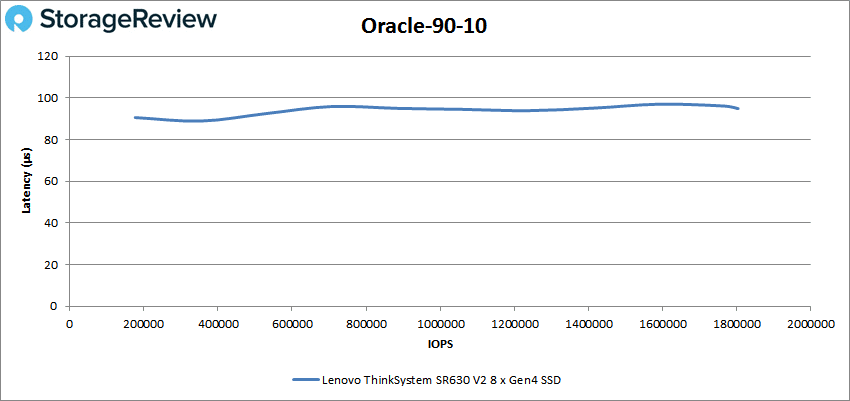

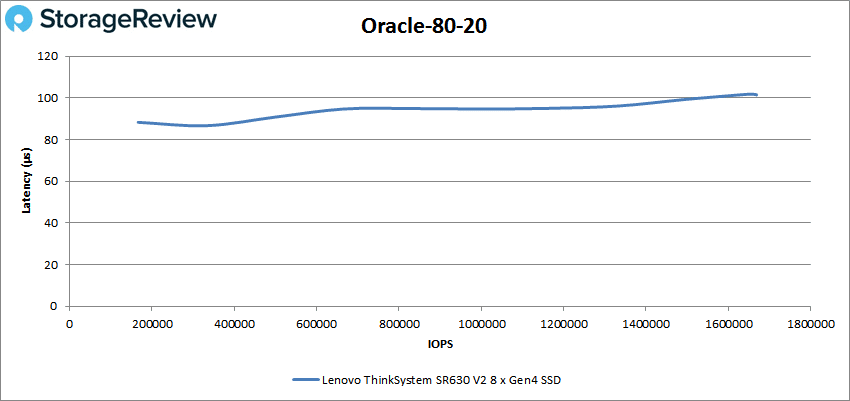

Our Oracle workload tests mirror our SQL tests; they include Oracle, Oracle 90-10, and Oracle 80-20. In Oracle workload, the SR630 V2 maintained around 100µs latency throughout. It finished the test at 2.14 million IOPS and 115µs latency.

We again see stable performance in Oracle 90-10, with even lower latency. The SR630 V2’s final number was 1.8 million IOPS at just 95µs.

The SR630 V2 remained consistent in the last Oracle workload test, Oracle 80-20. It started at 1.66 million IOPS and 88µs and ended at about 16.7 million IOPS and just 101µs.

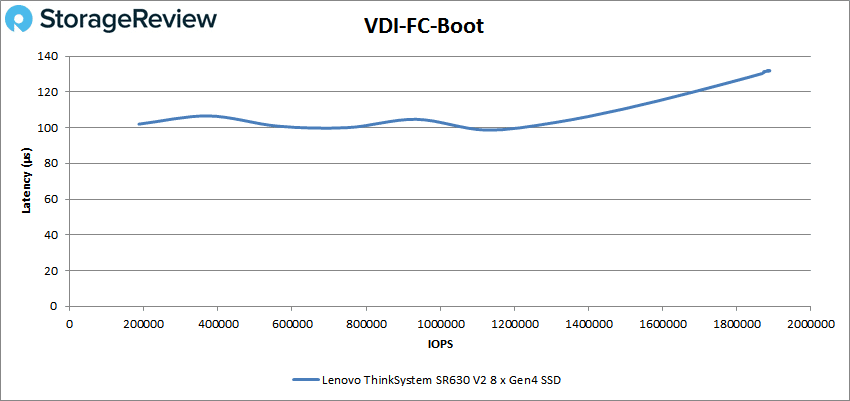

Our final tests in this group are VDI Full Clone (FC) and Linked Clone (LC). Starting with VDI FC Boot, the SR630 V2 finished at 1.88 million IOPS and 132µs.

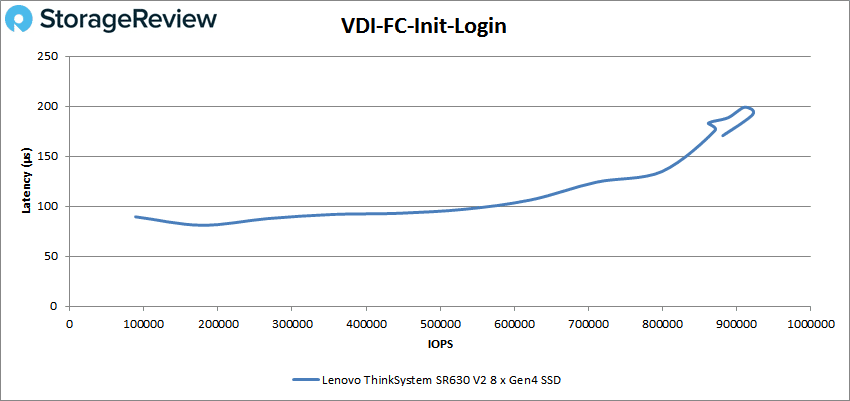

In VDI FC Initial Login, the SR630 V2 did well up until about 850,000 IOPS, where it saw some spikes that aren’t unusual in this test. Its final number was 881,346 IOPS at 171µs.

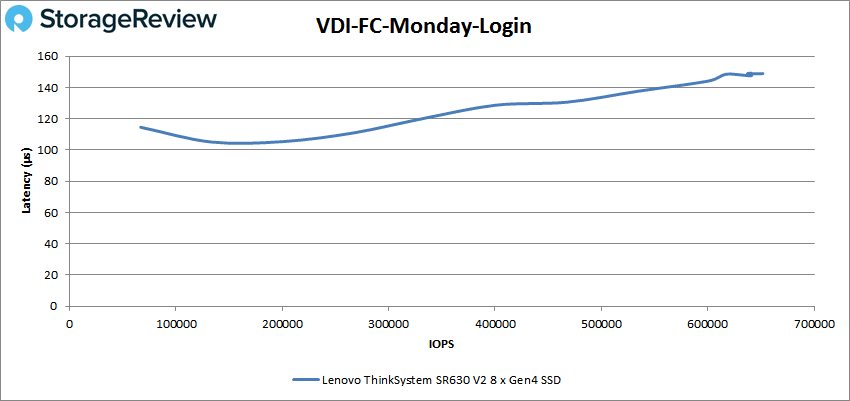

In the last FC test, the SR630 V2 showed more stable performance and good numbers, ending at 637,390 IOPS and 149µs latency.

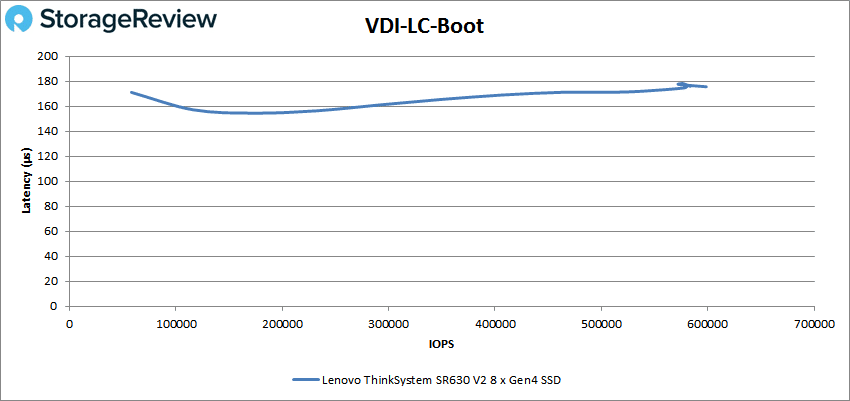

Moving on to the VDI LC tests, we start with boot. Aside from a spike at the end, the SR630 V2 did well and maintained stable latency; the last number was 598,490 IOPS at 176µs.

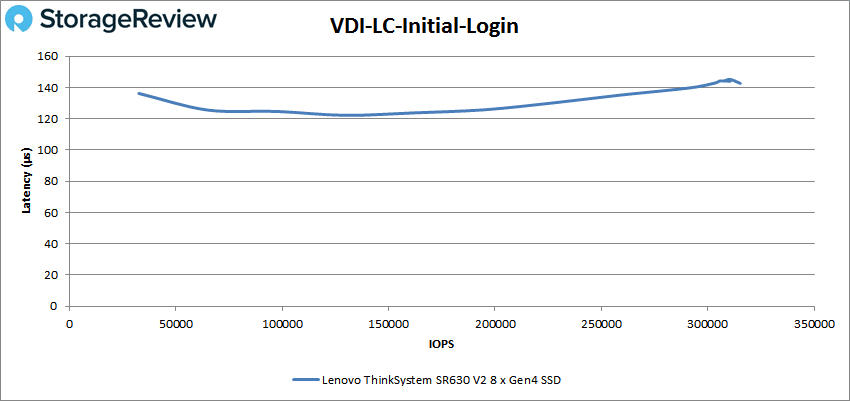

The VDI LC Initial Login test saw the SR630 V2’s stable performance continue, ending at 315,286 IOPS and 143µs latency.

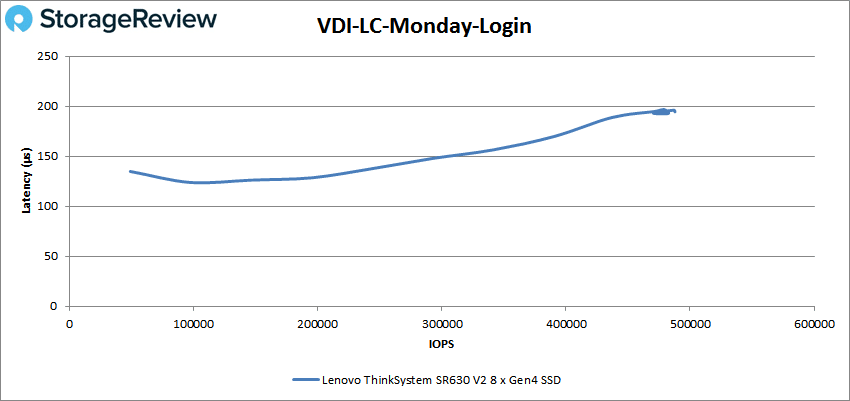

Last up is VDI LC Monday Login. The SR630 V2 did well right up until a minor spike at the end. Its final number was 487,825 IOPS at 195µs.

Conclusion

The Lenovo ThinkSystem SR630 V2 is a strong 1U, two-socket rackmount server for a variety of uses. Support for two third-generation Intel Xeons up to 270W per socket, 32 total DIMM slots, and three low-profile GPUs suits it for compute-intensive applications. It’s also a good choice for high-IOPS scenarios, such as high-performance databases, thanks to support for up to 16 NVMe drives and 16 Intel PMem 200 Series modules. Both 2.5- and 3.5-inch configurations support AnyBay, and 2.5-inch configurations have the added advantage of not requiring a separate RAID card. Configurability is a real strength for this server.

Remote management is another strength, thanks to the SR630 V2’s built-in XClarity Controller. The server can show diagnostic information on the front panel, through a diagnostic handset, on a mobile device, and of course through IPMI.

As we tested it with dual Xeon Silver chips and eight NVMe drives, the SR630 V2 achieved over 5.18 million IOPS at 106µs in our 4K random read test and 32.1 million IOPS at 76µs in 4K random write. It also showed remarkably stable performance in our SQL and Oracle workloads and VDI Full Clone and Linked Clone tests.

Overall, the ThinkSystem SR630 V2 impressed us with its configurability and strong performance.
