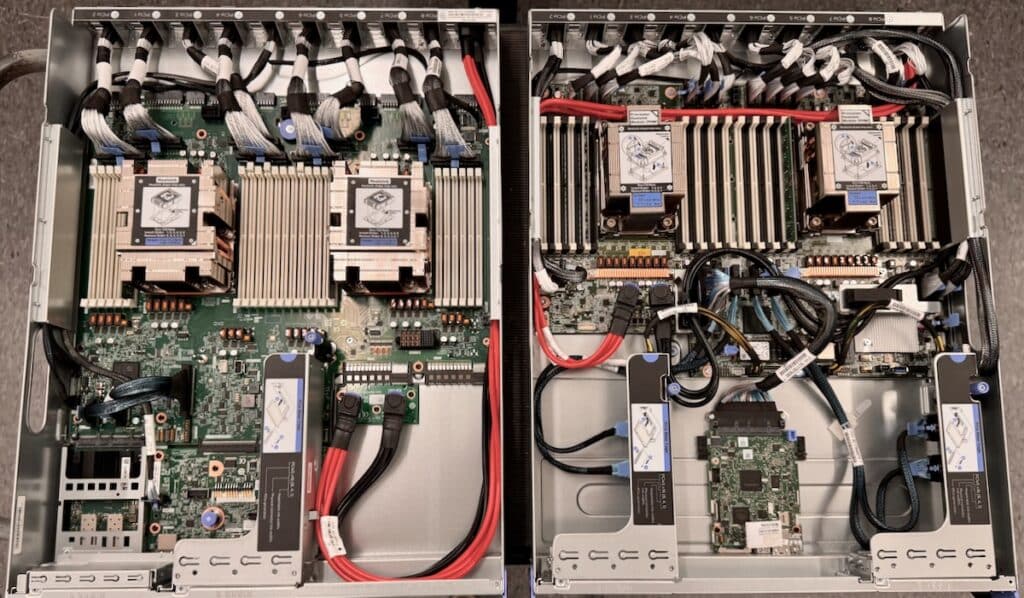

The Lenovo ThinkSystem SR685a V3 and SR680a V3 GPU servers are the company’s latest 8-way GPU servers, tailored to meet diverse enterprise AI needs. Despite having distinct model names, they share a modular foundation, allowing for interchangeable components within each system.

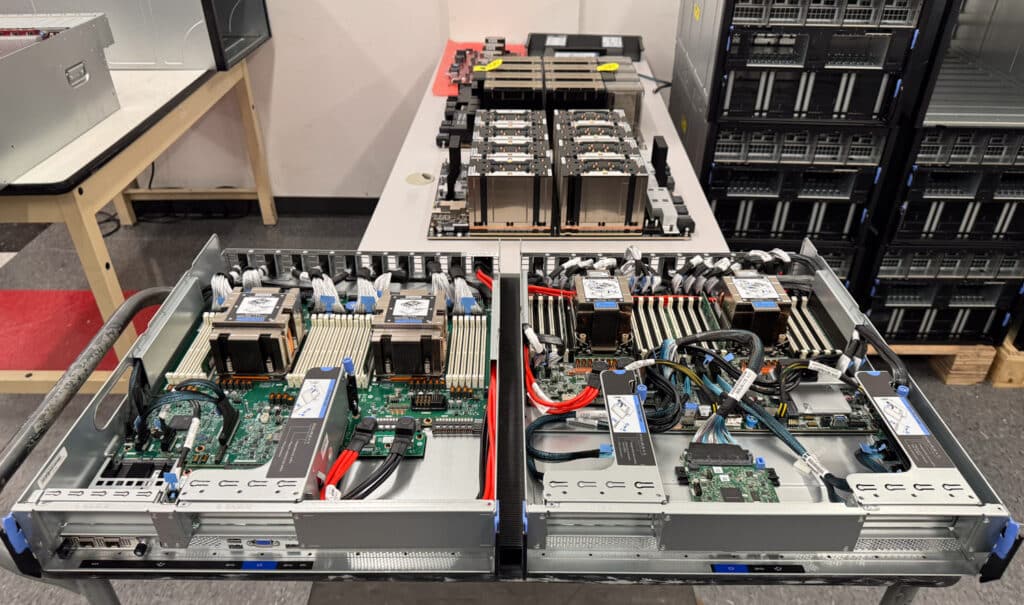

Fundamentally, these servers have three or four core components, depending on how you count. First, an outer casing makes up the chassis shell, with embedded fans in the rear. Internal rails hold a 2U modular server up top, while the bottom 6U houses the GPUs, switches, and PCIe fabric. The I/O on the 2U server varies between the AMD and Intel offerings. Lenovo supports NVIDIA and AMD GPU boards today, with Intel Gaudi 3 support planned.

The Lenovo SR685a V3 (little a for AI) utilizes dual 4th Generation AMD EPYC processors and is specifically optimized for high-bandwidth GPU-to-GPU communications, making it ideal for generative AI applications. The SR680a V3, equipped with 5th Gen Intel Xeon Scalable processors, targets a broader mix of AI and computational applications and accommodates both NVIDIA and AMD GPUs. Both models take a modular approach, allowing users to customize and scale their systems to match specific operational demands.

These servers are highly sought after (though very hard to get a hold of at the moment) and generated significant buzz at Lenovo’s conference last year in Austin. There’s great excitement surrounding these GPU servers and their potential to advance AI capabilities.

Configurable Models and Customization

The Lenovo ThinkSystem SR685a V3 and SR680a V3 systems are offered in various configure-to-order (CTO) models that serve as a framework for customization. That customization extends to the GPUs themselves, with each model defined by the specific GPUs selected. For example, the base feature codes listed in the Lenovo configurator allow selection between the AMD MI300X and the NVIDIA H100/H200, each catering to different performance and computational needs. The platform design gives Lenovo greater flexibility in integrating new accelerators as they come to market.
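One way to picture the CTO idea is as a pairing of a compute sled with a GPU board. The sketch below is purely illustrative: the class names, fields, and lists are hypothetical, not Lenovo feature codes or configurator fields. It also assumes every sled can pair with every board, which this article implies but the actual configurator may restrict.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class ComputeSled:
    """Hypothetical record for the 2U compute sled."""
    model: str
    cpu: str


@dataclass(frozen=True)
class GpuBoard:
    """Hypothetical record for the 8-GPU board in the lower 6U."""
    name: str
    vendor: str


# Options named in this article; not an official or complete list.
SLEDS = [
    ComputeSled("SR685a V3", "2x AMD EPYC 9004"),
    ComputeSled("SR680a V3", "2x 5th Gen Intel Xeon Scalable"),
]
BOARDS = [
    GpuBoard("MI300X", "AMD"),
    GpuBoard("H100", "NVIDIA"),
    GpuBoard("H200", "NVIDIA"),
]


def cto_combinations(sleds, boards):
    """Return every (server model, GPU name) pairing, assuming all pair freely."""
    return [(s.model, b.name) for s, b in product(sleds, boards)]


for model, gpu in cto_combinations(SLEDS, BOARDS):
    print(f"{model} + 8x {gpu}")
```

Adding a future accelerator in this toy model is just one more entry in `BOARDS`, which mirrors the flexibility the modular design is meant to provide.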

Lenovo ThinkSystem SR685a V3 Specifications

| Components | Specification |
| --- | --- |
| Form factor | 8U rack |
| Processor | Two AMD EPYC 9004 Series processors (formerly codenamed “Genoa”). Supports processors up to 64 cores, core speeds up to 3.1 GHz, and TDP ratings up to 400W. Supports PCIe 5.0 for high-performance I/O. |
| GPUs | Choice of: |
| Memory | 24 DIMM slots with two processors (12 DIMM slots per processor). Each processor has 12 memory channels, with 1 DIMM per channel (DPC). Lenovo TruDDR5 RDIMMs are supported up to 4800 MHz. |
| Memory maximum | Up to 1.5TB with 24x 64GB RDIMMs; up to 2.25TB with 24x 96GB RDIMMs |
| Memory protection | ECC, SDDC, Patrol/Demand Scrubbing, Bounded Fault, DRAM Address Command Parity with Replay, DRAM Uncorrected ECC Error Retry, On-die ECC, ECC Error Check and Scrub (ECS), Post Package Repair |
| Disk drive bays | Up to 16x 2.5-inch hot-swap drive bays supporting PCIe 5.0 NVMe drives. |
| OS boot drives | Support for an M.2 adapter with integrated RAID-1; support for 2x NVMe M.2 drives for OS boot and data storage functions |
| Maximum internal storage | 51.2 TB using 16x 3.2TB 2.5-inch NVMe SSDs |
| Storage controller | Onboard NVMe (non-RAID) |
| Network interfaces | Supports 8x high-performance network adapters up to 400 Gb/s connectivity with GPU Direct support. Supports an NVIDIA BlueField-3 2-port 200Gb adapter for the User/Control Plane and a choice of OCP network adapter for management. The OCP 3.0 slot has a PCIe 5.0 x16 host interface, with one port optionally shared with the XClarity Controller 2 (XCC2) management processor for Wake-on-LAN and NC-SI support. |
| PCI Expansion slots | 10x PCIe 5.0 x16 slots: |
| Ports | Front: 1x USB 3.2 G1 (5 Gb/s) port, 1x USB 2.0 port (also for XCC local management), 1x VGA video port. Rear: 3x USB 3.2 G1 (5 Gb/s) ports, 1x VGA video port, 1x RJ-45 1GbE systems management port for XCC remote management. |
| Cooling | 5x front-mounted dual-rotor fans for the CPU and storage subsystem, N+1 redundant. 10x rear-mounted dual-rotor fans for the GPU subsystem, N+1 redundant. One fan is integrated into each power supply. Front-to-rear airflow. |
| Power supply | Eight hot-swap redundant AC power supplies with up to N+N redundancy. 80 PLUS Titanium certification. 2600 W AC power supplies requiring 220 V AC supply. |
| Video | Embedded video graphics with 16 MB memory and a 2D hardware accelerator are integrated into the XClarity Controller. Two video ports (front VGA and rear VGA) cannot be used simultaneously; using the front VGA port disables the rear VGA port. Maximum resolution is 1920×1200 32bpp at 60Hz. |
| Hot-swap parts | Drives, power supplies, and fans. |
| Systems management | Integrated Diagnostics Panel with status LEDs and pull-out LCD display. XClarity Controller 2 (XCC2) embedded management based on the ASPEED AST2600 baseboard management controller (BMC). Dedicated rear Ethernet port for XCC2 remote access for management. XClarity Administrator for centralized infrastructure management, XClarity Integrator plugins, and XClarity Energy Manager centralized server power management. Optional XCC Platinum enables remote control functions and other features. |
| Security features | Power-on password, administrator’s password, Root of Trust module supporting TPM 2.0, and Platform Firmware Resiliency (PFR). |
| Operating systems supported | Ubuntu Server. |
| Limited warranty | Three-year or one-year (model dependent) customer-replaceable unit and onsite limited warranty with 9×5 next business day (NBD). |
| Service and support | Optional service upgrades are available through Lenovo Services: 4-hour or 2-hour response time, 6-hour fix time, 1-year or 2-year warranty extension, software support for Lenovo hardware, and some third-party applications. |
| Dimensions | Width: 447 mm (17.6 in.), height: 351 mm (13.8 in.), depth: 924 mm (36.3 in.). |
| Weight | Maximum: 108.9 kg (240 lb) |

Lenovo ThinkSystem SR680a V3 Specifications

| Components | Specification |
| --- | --- |
| Form factor | 8U rack |
| Processor | Two 5th Gen Intel Xeon Scalable processors (formerly codenamed “Emerald Rapids”). Supports a processor with 48 cores, core speed of 2.3 GHz, and TDP rating of 350W. Supports PCIe 5.0 for high-performance I/O. |
| Chipset | Intel C741 “Emmitsburg” chipset, part of the platform codenamed “Eagle Stream” |
| GPUs | Choice of: |
| Memory | 32 DIMM slots with two processors (16 DIMM slots per processor). Each processor has 8 memory channels, with 2 DIMMs per channel (DPC). Lenovo TruDDR5 RDIMMs are supported. DIMMs operate at up to 5600 MHz at 1 DPC and up to 4400 MHz at 2 DPC. |
| Memory maximum | Up to 2TB with 32x 64GB RDIMMs |
| Memory protection | ECC, SDDC (for x4-based memory DIMMs), ADDDC (for x4-based memory DIMMs excluding 9×4 RDIMMs, requires Platinum or Gold processors), and memory mirroring. |
| Disk drive bays | Up to 16x 2.5-inch hot-swap drive bays supporting PCIe 5.0 NVMe drives. |
| OS boot drives | Support for two M.2 drives with optional Intel VROC NVMe RAID support for OS boot and data storage functions |
| Maximum internal storage | 51.2 TB using 16x 3.2TB 2.5-inch NVMe SSDs |
| Storage controller | Onboard NVMe (non-RAID) |
| Network interfaces | Supports 8x high-performance network adapters up to 400 Gb/s connectivity with GPU Direct support. Supports an NVIDIA BlueField-3 2-port 200Gb adapter for the User/Control Plane and a Mellanox ConnectX-6 Lx 2-port 10/25GbE adapter for management. |
| PCI Expansion slots | 10x PCIe 5.0 x16 slots: |
| Ports | Front: 1x USB 3.2 G1 (5 Gb/s) port, 1x USB 2.0 port (also for XCC local management), 1x Mini DisplayPort video port. Rear: 2x USB 3.2 G1 (5 Gb/s) ports, 1x VGA video port, 1x RJ-45 1GbE systems management port for XCC remote management. |
| Cooling | 5x front-mounted dual-rotor fans for the CPU and storage subsystem, N+1 redundant. 10x rear-mounted dual-rotor fans for the GPU subsystem, N+1 redundant. One fan is integrated into each power supply. Front-to-rear airflow. |
| Power supply | Eight hot-swap redundant AC power supplies with up to N+N redundancy. 80 PLUS Titanium certification. 2600 W AC power supplies requiring 220 V AC supply. |
| Video | Embedded graphics with 16 MB memory and a 2D hardware accelerator are integrated into the XClarity Controller 2 management controller. Two video ports (front Mini DisplayPort and rear VGA); both can be used simultaneously if desired. Maximum resolution of both ports is 1920×1200 at 60Hz. |
| Hot-swap parts | Drives, power supplies, and fans. |
| Systems management | Integrated Diagnostics Panel with status LEDs and pull-out LCD display. XClarity Controller 2 (XCC2) embedded management based on the ASPEED AST2600 baseboard management controller (BMC). Dedicated rear Ethernet port for XCC2 remote access for management. XClarity Administrator for centralized infrastructure management, XClarity Integrator plugins, and XClarity Energy Manager centralized server power management. Optional XCC Platinum enables remote control functions and other features. |
| Security features | Power-on password, administrator’s password, Root of Trust module supporting TPM 2.0, and Platform Firmware Resiliency (PFR). |
| Operating systems supported | Ubuntu Server. |
| Limited warranty | Three-year or one-year (model dependent) customer-replaceable unit and onsite limited warranty with 9×5 next business day (NBD). |
| Service and support | Optional service upgrades are available through Lenovo Services: 4-hour or 2-hour response time, 6-hour fix time, 1-year or 2-year warranty extension, software support for Lenovo hardware, and some third-party applications. |
| Dimensions | Width: 447 mm (17.6 in.), height: 351 mm (13.8 in.), depth: 924 mm (36.3 in.). |
| Weight | Maximum: 108.7 kg (239.8 lb) |

Lenovo ThinkSystem SR685a V3 and SR680a V3 Design and Build

The front of the system supports up to 16 hot-swap PCIe Gen5 NVMe drive bays, an unusually generous number for a GPU-centric server; such systems typically offer fewer bays and lanes for expansion. Below the drive bays are the eight front-accessible PCIe Gen5 FHHL (Full Height, Half Length) slots and the PCIe switching complex. These slots can hold eight NDR 400Gb/s InfiniBand adapters with GPU Direct support, giving the GPUs high-speed, low-latency network data transfers.

Five front-mounted hot-swap fans at the top of the chassis cool the 2U server, including its CPUs, memory, and rear slots. An additional ten fans are mounted at the rear of the chassis to cool the drive bays, adapters, and GPUs.

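The front panel also houses essential connectivity and management ports for direct management and local console access. Per the specifications above, these are one USB 3.2 Gen1 port, one USB 2.0 port (also used for XCC local management), and a video output (VGA on the SR685a V3, Mini DisplayPort on the SR680a V3).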

The rear is equally well-equipped, including the 2U server expansion capabilities. The AMD variant offers one PCIe Gen5 x16 FHHL slot alongside an OCP 3.0 slot equipped with a PCIe Gen5 x16 interface. Conversely, the Intel model has two PCIe Gen5 x16 FHHL slots. An OCP 3.0 slot provides versatility in networking and acceleration options by accommodating various adapter cards adhering to open standards. NVIDIA BlueField-3 DPU adapters can be installed to enable a software-defined, hardware-accelerated IT infrastructure, optimizing various IT operations such as networking and security.

The rear of the GPU unit houses eight 2,600W power supplies, each linking to a central distribution board. The back side of this board carries what Lenovo calls "blind mate" connectors, which facilitate seamless connection to the 2U compute shuttle.
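To put the power figures in perspective, here is a back-of-the-envelope sketch of the nameplate power budget. It uses only the PSU count and rating from the spec tables; the function name is our own, and real-world limits depend on Lenovo's power policy settings and the input voltage, which this does not model.

```python
PSU_COUNT = 8      # hot-swap supplies, from the spec table
PSU_WATTS = 2600   # nameplate rating per supply at 220 V AC


def usable_power(psu_count, psu_watts, redundant):
    """Nameplate watts available while `redundant` supplies are held in reserve."""
    if not 0 <= redundant < psu_count:
        raise ValueError("redundant must be between 0 and psu_count - 1")
    return (psu_count - redundant) * psu_watts


# No redundancy: all eight supplies carry load.
print(usable_power(PSU_COUNT, PSU_WATTS, 0))  # 20800

# N+N with eight supplies means 4+4: half can fail without losing power.
print(usable_power(PSU_COUNT, PSU_WATTS, 4))  # 10400
```

In other words, a configuration that must survive the loss of an entire power feed has roughly 10.4kW of nameplate capacity to work with.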

Additionally, as mentioned above, the rear view reveals the server’s extensive cooling system, including ten hot-swap rear fans designed to maintain optimal thermal conditions across the GPUs, switches, and PCIe fabric. This cooling system is crucial for maintaining hardware performance stability and longevity, especially during continuous high-load operations.

Power, Cabling, and Switching

The AMD and Intel versions of this server family share a common power layout, allowing for greater modularity, which is the major highlight of these systems.

The PCIe signaling flows through ribbon cables connecting the compute sled to the PCIe switching sled. On the other side of the compute sled are blind-mate connectors. The compute sled mates to these connectors, passing the PCIe signaling to the rest of the chassis. The bracket on the back of the sled (labeled with its designated PCIe connection) allows you to change between the compute shuttles without altering the bottom of the server.

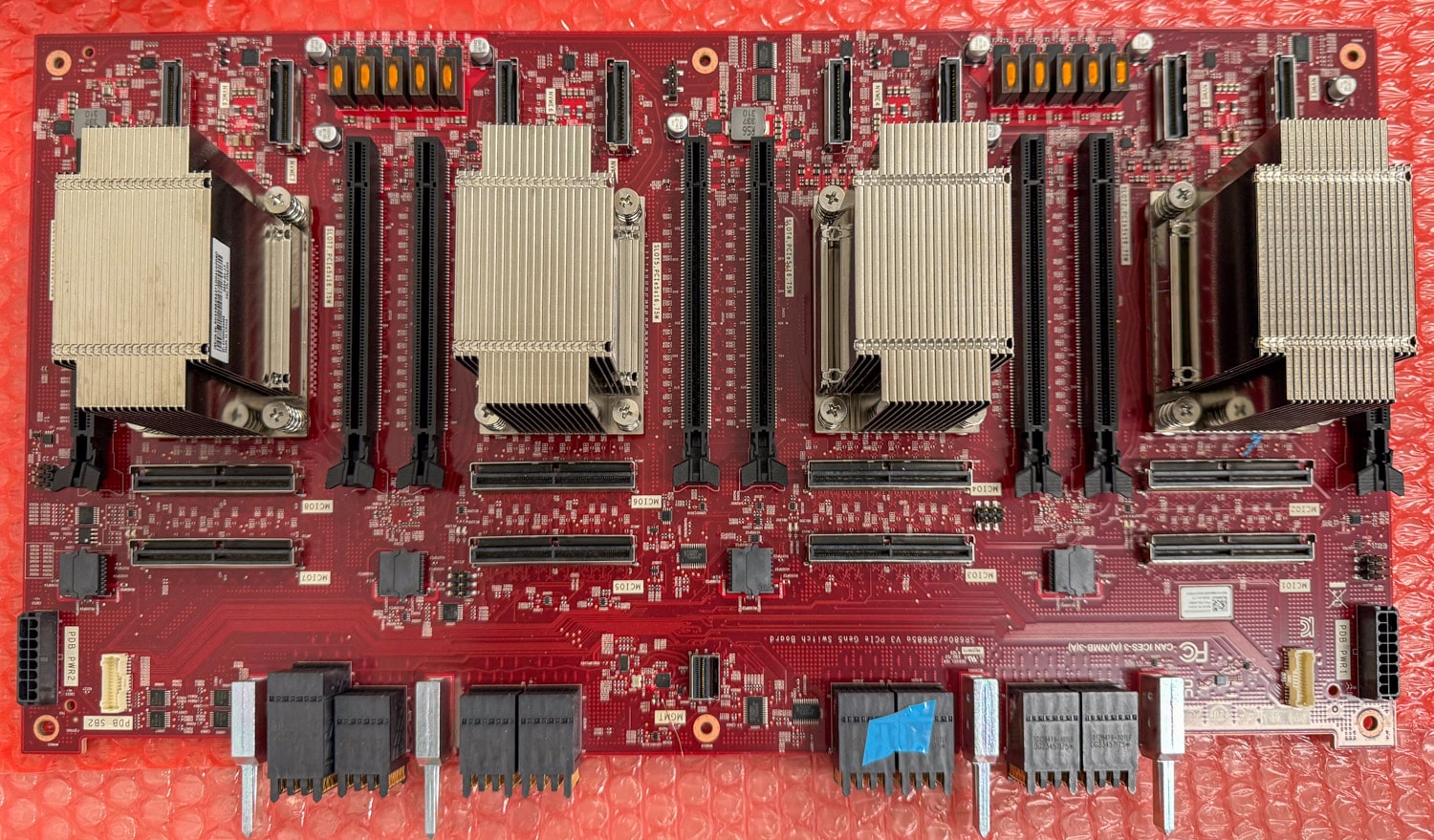

The PCIe switching board includes four Broadcom switches surrounded by PCIe slots, allowing the server to connect to a high-speed networking fabric. Additionally, there are eight MCIO cable connectors linking to the motherboard. The smaller PCIe connectors at the top are designated for the drive backplanes for the front-mount NVMe SSDs.
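A quick calculation shows why a PCIe Gen5 x16 slot is a sensible match for a 400Gb/s adapter. The sketch below uses the standard PCIe 5.0 figures (32 GT/s per lane, 128b/130b encoding) and ignores packet and protocol overhead, so treat the result as an upper bound rather than a measured number. The function names are ours.

```python
PCIE5_GT_PER_LANE = 32.0   # giga-transfers per second per lane (PCIe 5.0)
ENCODING = 128 / 130       # 128b/130b line encoding efficiency


def pcie5_gbytes_per_s(lanes):
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    return PCIE5_GT_PER_LANE * ENCODING * lanes / 8


def nic_gbytes_per_s(gbits_per_s):
    """Convert a network line rate from Gb/s to GB/s."""
    return gbits_per_s / 8


slot = pcie5_gbytes_per_s(16)   # one Gen5 x16 slot
nic = nic_gbytes_per_s(400)     # one NDR 400Gb/s port

print(f"Gen5 x16 slot: {slot:.1f} GB/s per direction")
print(f"400Gb/s NIC:   {nic:.1f} GB/s")
print(f"Headroom:      {slot - nic:.1f} GB/s")
```

The slot comes out at about 63 GB/s against 50 GB/s for the adapter, so each of the eight network slots has some margin even at full line rate.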

Memory and Internal Storage

The AMD compute supports up to 24 DDR5 memory DIMMs, with each processor interfacing with 12 DIMMs through 12 memory channels, allowing one DIMM per channel configuration. These DIMMs operate at a speed of 4800 MHz, enhancing the overall memory throughput and efficiency. Depending on the configuration, the server can support either 1.5TB of system memory using 24x 64GB RDIMMs or 2.25TB using 24x 96GB RDIMMs, providing ample capacity for even the most memory-demanding tasks.

The Intel compute (SR680a V3) leverages Lenovo TruDDR5 memory that operates at speeds up to 5600 MHz. It offers more DIMM slots than the AMD sled, with up to 32 DIMMs across two processors, using 8 memory channels per processor at 2 DIMMs per channel (DPC). Its maximum capacity is lower, however: up to 2TB of system memory using 32x 64GB RDIMMs.

The operating speeds of the DIMMs vary based on the number of DIMMs per channel: with 1 DIMM per channel, the memory can reach speeds up to 5600 MHz, whereas configurations with 2 DIMMs per channel will operate at up to 4400 MHz. This flexible speed adjustment helps optimize performance based on the specific memory load and configuration.
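The capacity and speed trade-offs above are easy to check with a little arithmetic. This sketch treats the quoted DIMM speeds as transfer rates in MT/s and assumes a 64-bit (8-byte) data path per DDR5 channel; both are our assumptions, and the bandwidth results are theoretical peaks per processor, not measurements.

```python
def capacity_tb(dimm_count, gb_per_dimm):
    """Total memory in TB (1 TB = 1024 GB)."""
    return dimm_count * gb_per_dimm / 1024


def peak_bandwidth_gbs(channels, mega_transfers_per_s, bytes_per_transfer=8):
    """Theoretical peak bandwidth per processor in GB/s."""
    return channels * mega_transfers_per_s * bytes_per_transfer / 1000


# Capacity, using the DIMM counts and sizes from the spec tables.
print(capacity_tb(24, 64))   # SR685a V3 with 64GB RDIMMs -> 1.5
print(capacity_tb(24, 96))   # SR685a V3 with 96GB RDIMMs -> 2.25
print(capacity_tb(32, 64))   # SR680a V3 with 64GB RDIMMs -> 2.0

# Peak bandwidth per processor.
amd = peak_bandwidth_gbs(12, 4800)          # 12 channels, 1 DPC
intel_1dpc = peak_bandwidth_gbs(8, 5600)    # 8 channels, 1 DPC
intel_2dpc = peak_bandwidth_gbs(8, 4400)    # 8 channels, 2 DPC
print(f"{amd:.1f} {intel_1dpc:.1f} {intel_2dpc:.1f}")
```

Under these assumptions, the AMD sled's 12 channels give it the higher theoretical bandwidth per processor, while filling all 32 slots on the Intel sled trades some speed for the extra DIMMs.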

Additionally, both sleds accommodate two M.2 NVMe drives for operating system boot and fast data access. The AMD sled uses an M.2 adapter with integrated RAID-1, while the Intel sled offers optional Intel VROC NVMe RAID.

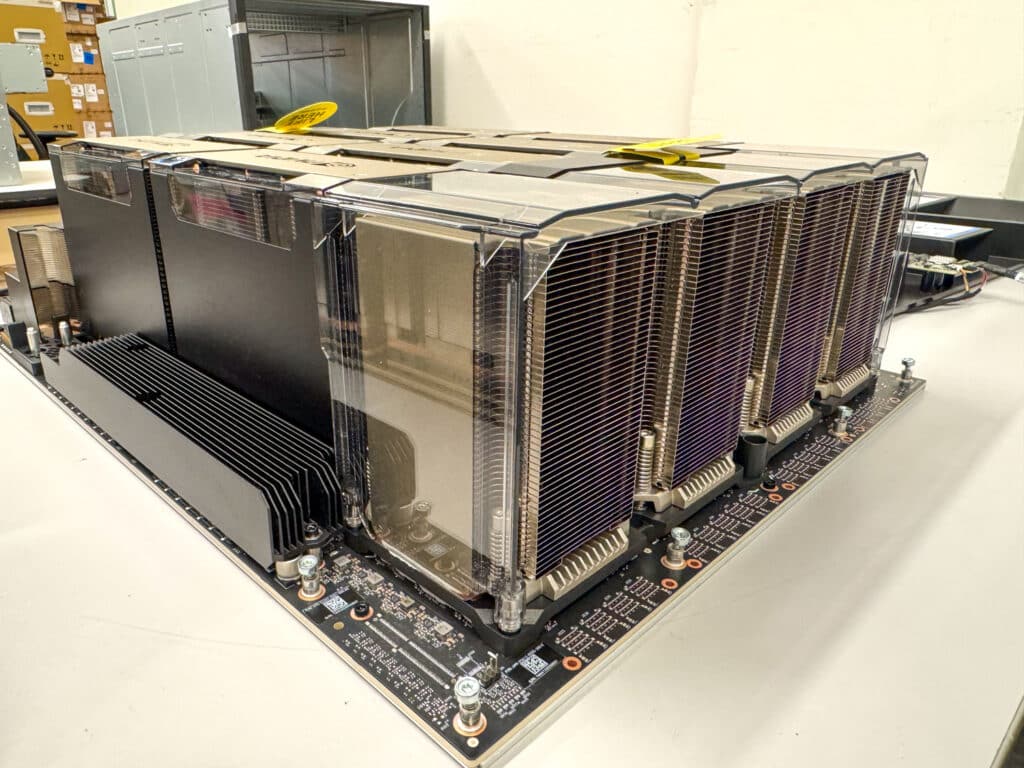

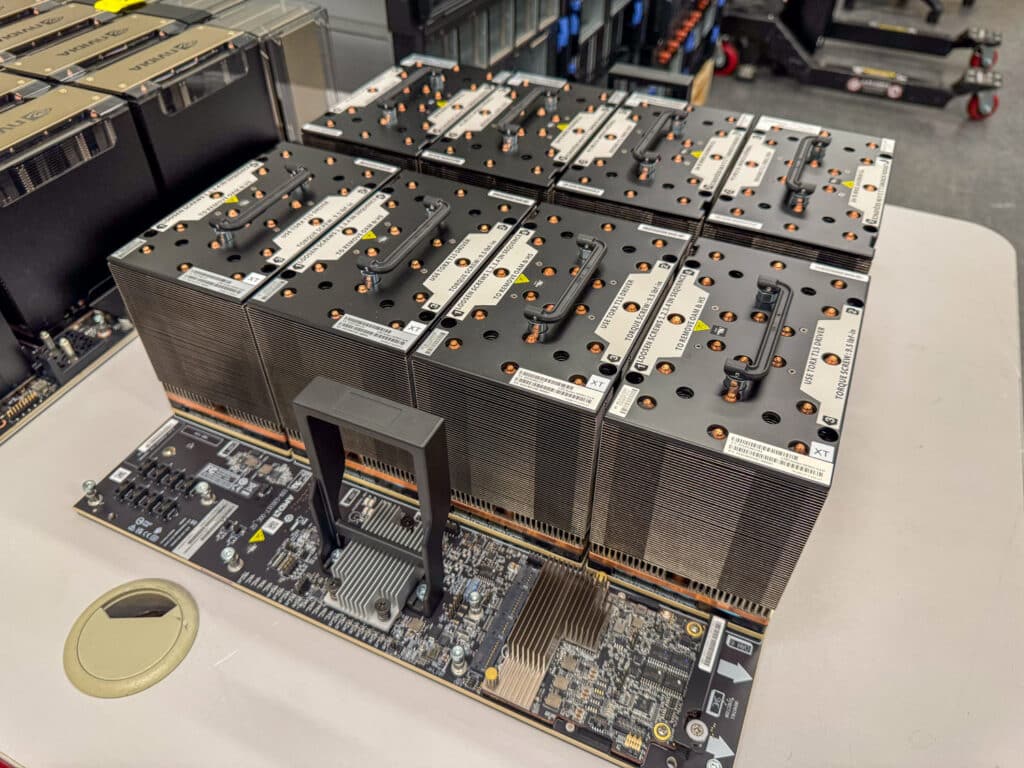

AMD and NVIDIA GPU Boards

The NVIDIA H100/H200 board is tightly packed with eight NVIDIA GPUs and features a retractable handle that makes carrying and installing the board easier. Once installed, the handle tucks neatly next to the GPUs to avoid obstruction. The AMD and NVIDIA boards use identical connectors. The Intel Gaudi 3 board will have a different connector.

The AMD MI300X board is similar to the NVIDIA board but has a standard handle that protrudes significantly. While still useful, it is not as elegant as the retractable handle on the NVIDIA board. Customers likely won’t care either way; we mention it only because the design difference caught our attention.

Final Thoughts

The Lenovo ThinkSystem SR685a V3 and SR680a V3 GPU servers offer impressive modularity, supporting powerful NVIDIA H100/H200 and AMD MI300X GPUs, as well as both AMD EPYC 9004 and 5th Gen Intel Xeon CPUs. This flexibility and an air-cooled design make them easy to integrate into existing ecosystems. The servers also support more storage than typical GPU servers (via 16 hot-swap PCIe Gen5 NVMe drive bays), enhancing their utility for data-intensive tasks. Additionally, Lenovo’s XClarity management tools streamline operations and monitoring, simplifying the management of complex infrastructures.

We have not run performance tests on these servers, but the design is remarkable. The modular architecture lets Lenovo offer customers AMD and NVIDIA GPUs today, paired with either Intel or AMD compute sleds. As GPU support expands to options like Intel Gaudi 3 and NVIDIA B200, Lenovo can let customers mix and match the compute and GPU components to tune the servers for specific applications.

Overall, these servers are very well thought out, and we look forward to logging hands-on time with them; Jordan has been anxiously stroking his beard in anticipation. While we’re currently working on a project with the Lenovo SR675 V3 with four NVIDIA L40S GPUs, these 8-way servers are a different animal and have a wide range of AI use cases. This is a good reminder, though, that Lenovo offers an AI platform for everyone.
