In recent months, large language models have been the subject of extensive research and development, with state-of-the-art models like GPT-4, Meta LLaMa, and Alpaca pushing the boundaries of natural language processing and the hardware required to run them. Running inference on these models can be computationally challenging, requiring powerful hardware to deliver real-time results.

In recent months, large language models have been the subject of extensive research and development, with state-of-the-art models like GPT-4, Meta LLaMa, and Alpaca pushing the boundaries of natural language processing and the hardware required to run them. Running inference on these models can be computationally challenging, requiring powerful hardware to deliver real-time results.

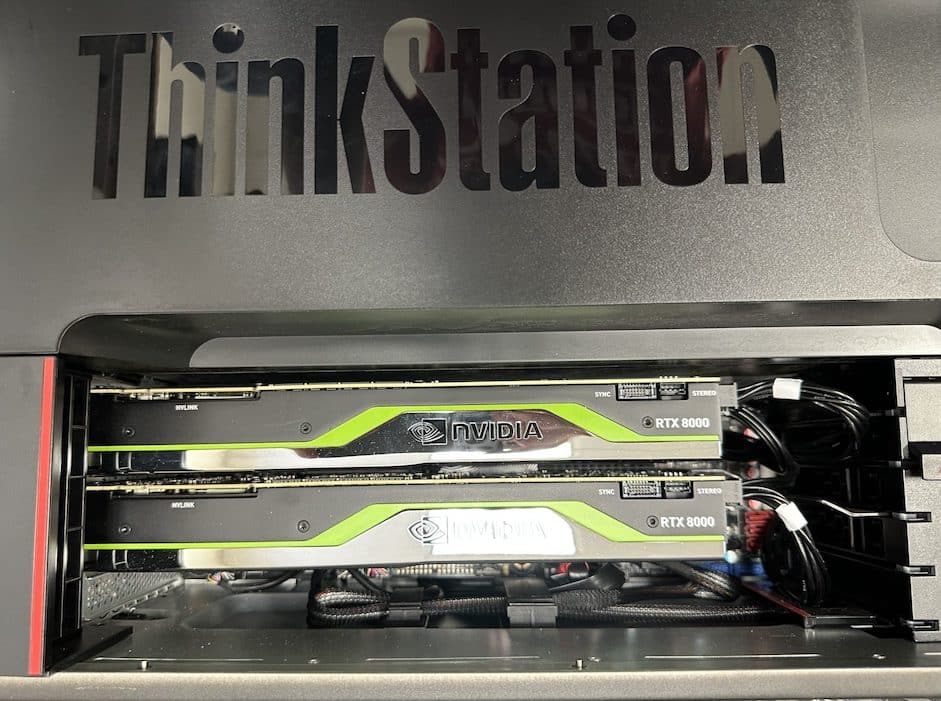

In the Storage Review lab, we put two NVIDIA RTX 8000 GPUs on the job of running Meta’s LLaMa model to see how they performed when running inference on large language models. We used the Lenovo P920 as a host for the cards, minimizing bottlenecks. The RTX 8000 is a high-end graphics card capable of being used in AI and deep learning applications, and we specifically chose these out of the stack thanks to the 48GB of GDDR6 memory and 4608 CUDA cores on each card, and also Kevin is hoarding all the A6000‘s.

Meta LLaMA is a large-scale language model trained on a diverse set of internet text. It is publicly available and provides state-of-the-art results in various natural language processing tasks. In this article, we will provide a step-by-step guide on how we set up and ran LLaMA inference on NVIDIA GPUs, this is not guaranteed to work for everyone.

Meta LLaMa Requirements

Before we start, we need to make sure we have the following requirements installed:

- NVIDIA GPU(s) with a minimum of 16GB of VRAM

- NVIDIA drivers installed (at least version 440.33)

- CUDA Toolkit installed (at least version 10.1)

- Anaconda installed

- PyTorch installed (at least version 1.7.1)

- Disk Space; the entire set of LLaMa checkpoints is pushing over 200TB.

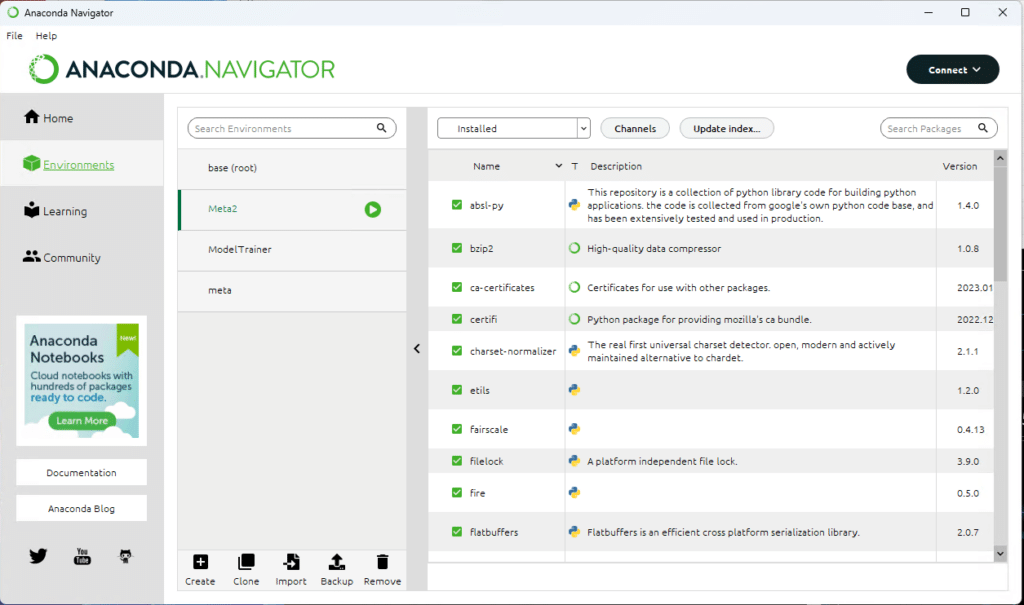

Note: It is recommended to have a dedicated Anaconda environment for LLaMA, we have a few on the test bench so we can mess with packages and parameters and not hose the base installation.

Set up a dedicated environment or two in Anaconda

Steps to Run Meta LLaMA Inference on NVIDIA GPUs

- Download the LLaMA repository: The first step is to clone the LLaMA repository from GitHub.

git clone https://github.com/EleutherAI/LLaMA.git

- Get the LLaMA checkpoint and tokenizer: Visit the GitHub page, and fill out the linked Google form to access the download. Other LLMs are available, such as Alpaca. For this purpose, we will assume access is available to the LLaMa files.

- List and install the programs required for LLaMa by running the following command from within the cloned repository directory:

pip install -r requirements.txt

pip install -e .

- Set up inference script: The

example.pyscript provided in the LLaMA repository can be used to run LLaMA inference. The script can be run on a single- or multi-gpu node withtorchrunand will output completions for two pre-defined prompts.

Open example.py and set the following parameters based on your preference. Descriptions for each parameter and what they do are listed below.

--max_seq_len: maximum sequence length (default is 2048)--max_batch_size: maximum batch size (default is 8)--gen_length: generation length (default is 512)--temperature: generation temperature (default is 1.0)--top_p: top-p sampling (default is 0.9)

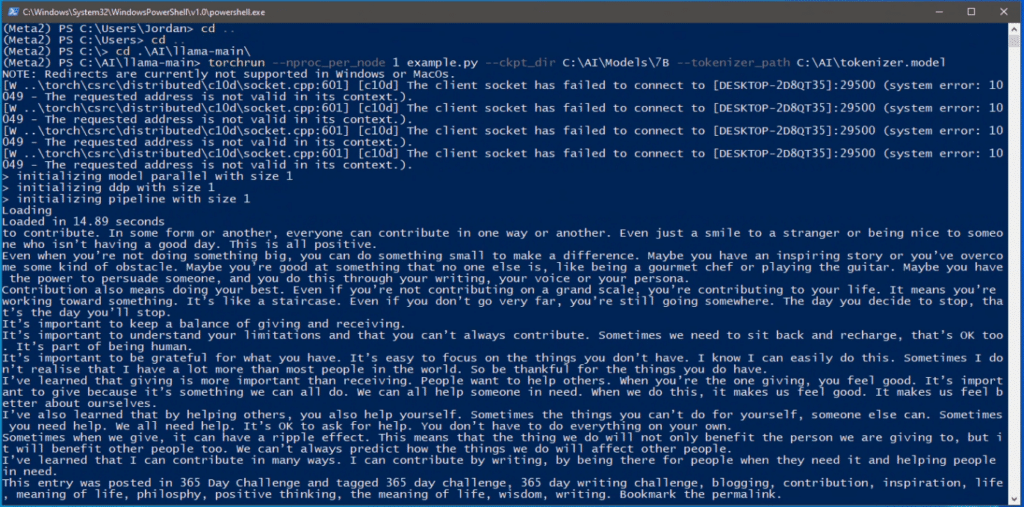

For example, to run LLaMA inference on a single GPU with checkpoint and tokenizer files in the directory /path/to/files, use the following command:

torchrun --nproc_per_node 1 example.py --ckpt_dir /path/to/files --tokenizer_path /path/to/files/tokenizer.model

Note: The nproc_per_node argument depends on the size of the model and the number of GPUs required for the default LLaMa checkpoints. Refer to the following table to set the appropriate value for your system and model you are running:

| Model | GPUs Required |

|---|---|

| 7B | 1 |

| 13B | 2 |

| 33B | 4 |

| 65B | 8 |

In the example below, we used the standard prompts in the example file but played with the temperature and top_p variables, yielding some interesting results. We also increased the response length.

Be careful playing with the thermostat!

Break Down Of The example.py File and Variables You Need To Know

Most of these are preset within the model and do not need to be adjusted; however, if you are getting into using LLMs and inferencing, here is some handy information that breaks down what the flags and switches do.

local_rank: The rank of the current GPU (process) in the group of GPUs used for model parallelism. This is set automatically.world_size: The total number of GPUs being used for model parallelism. This is set automatically.ckpt_dir: The directory containing the model checkpoints. This is set at runtime.tokenizer_path: The path to the tokenizer used to preprocess the text. This is set at runtime.temperature: A parameter that controls the randomness of the generated text (higher values make the output more random, and lower values make it more focused).top_p: A parameter that controls the nucleus sampling strategy, which is a method for generating text that selects only the most probable tokens with a cumulative probability of at mosttop_p.max_seq_len: The maximum sequence length for the input and output of the model. You can adjust this to decrease your VRAM need.max_batch_size: The maximum number of input sequences that the model can process in parallel. You can adjust this to decrease your VRAM need.prompts: A list of input prompts for the model to generate text based on.results: A list of generated text corresponding to each input prompt.

Final Thoughts

Our tests showed that the RTX 8000 GPUs could deliver impressive performance, with inference times ranging from a few seconds to about a minute, depending on the model parameters and input size. The GPUs could handle large batch sizes and run inference on multiple models simultaneously without any noticeable slowdown.

One of the benefits of using GPUs for inference is that they can be easily scaled up by adding more GPUs to the system. This allows for even larger models to be run in real-time, enabling new applications in areas like chatbots, question-answering systems, and sentiment analysis.

Alpaca is a compact AI language model created by a team of computer scientists at Stanford University. It’s built on Meta’s LLaMA 7B model, which boasts 7 billion parameters and has been trained using a vast amount of text from web. To fine-tune Alpaca, 52,000 instruction-following demonstrations were generated with OpenAI’s text-davinci-003 model, a versatile model capable of executing various tasks based on natural language instructions. Alpaca is better at following instructions and producing text for a range of purposes, including writing summaries, stories, and jokes. With a training cost under $600, Alpaca is designed to be both cost-effective and easily replicable. Highly impressively, it is worth mentioning that Alpaca can be run on even just a laptop. We are training a model similar to Alpaca using the methods that that Stanford did, but with our own twist.

This is just one way we are working with the latest technological advancements in the lab, and thanks to some powerful NVIDIA GPUs, we can do even more than just inference; we are training. Be on the lookout for the next article soon about fine-tuning models like Meta LLaMa.

Engage with StorageReview

Newsletter | YouTube | Podcast iTunes/Spotify | Instagram | Twitter | TikTok | RSS Feed