At the forefront of edge AI, NVIDIA’s Jetson Orin Nano Super Developer Kit delivers a robust solution for AI applications outside the traditional data center. It is a powerful, affordable tool for AI enthusiasts and professionals.

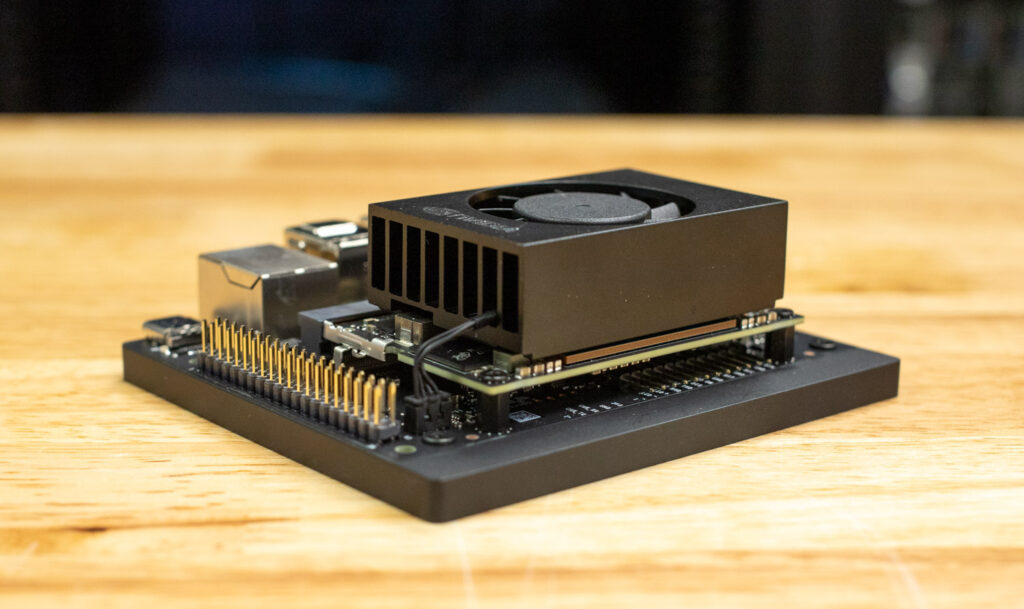

The Jetson Orin Nano Super is a compact computing powerhouse that brings sophisticated AI capabilities to edge devices. It blends performance with affordability and solid integration options, making it an ideal candidate for prototyping and commercial product development. Whether employed in robotics kits or integrated into larger machinery, its flexible design allows engineers to deploy AI in scenarios that demand efficiency and low power consumption – for just $249.

The Jetson platform is designed specifically for edge deployments, ensuring projects in environments with limited space or power can still harness high-end AI performance. With a scalable form factor and extensive connectivity options, it provides a gateway to innovative solutions in robotics, smart surveillance, and even wildlife conservation.

The Jetson Orin Nano Super is well suited to projects that require AI at the edge, whether in traditional robotics kits using classic programming or in more advanced setups built on frameworks like ROS (Robot Operating System). Because it is available both as a complete developer kit and as a standalone system-on-module (SoM), it integrates readily into a wide range of products and machinery. This versatility makes it popular for applications ranging from small educational projects to full-scale industrial deployments.

Jetson Orin Nano Super Developer Kit Specifications

The Jetson Orin Nano Super packs impressive features into a compact form factor. The 6-core Arm Cortex-A78AE CPU provides a solid computational foundation, while the 1024-core NVIDIA Ampere GPU with Tensor Cores accelerates workloads such as deep learning and computer vision. With 67 TOPS (tera operations per second) of AI performance and 8GB of high-bandwidth LPDDR5 memory, the platform is designed to handle complex operations at the edge.

| Specification | Details |
|---|---|
| CPU | 6-core Arm Cortex-A78AE v8.2 64-bit CPU, 3MB L2 + 4MB L3 |
| GPU | 1024-core NVIDIA Ampere architecture GPU with 32 Tensor Cores |
| AI Performance | 67 TOPS |
| Memory | 8GB 128-bit LPDDR5, 102GB/s |
| Storage | 16GB eMMC 5.1, microSD; NVMe SSD support via 1x M.2 Key M slot (x4 PCIe Gen3) and 1x M.2 Key M slot (x2 PCIe Gen3) |
| Networking | 1x Gigabit Ethernet |
| Display | 1x HDMI, 1x eDP 1.4 |
| Connectivity | 4x USB 3.2 Type-A ports, 1x USB Type-C port |
| Power Input | DC barrel jack, 7V to 20V |
| Camera | 2x MIPI CSI camera connectors |
| Expansion | 40-pin GPIO expansion header |
| Power Consumption | 7W to 25W, configurable |
| Operating System | Ubuntu-based Linux with NVIDIA JetPack SDK |
| Dimensions | 103mm x 90.5mm x 34.77mm |

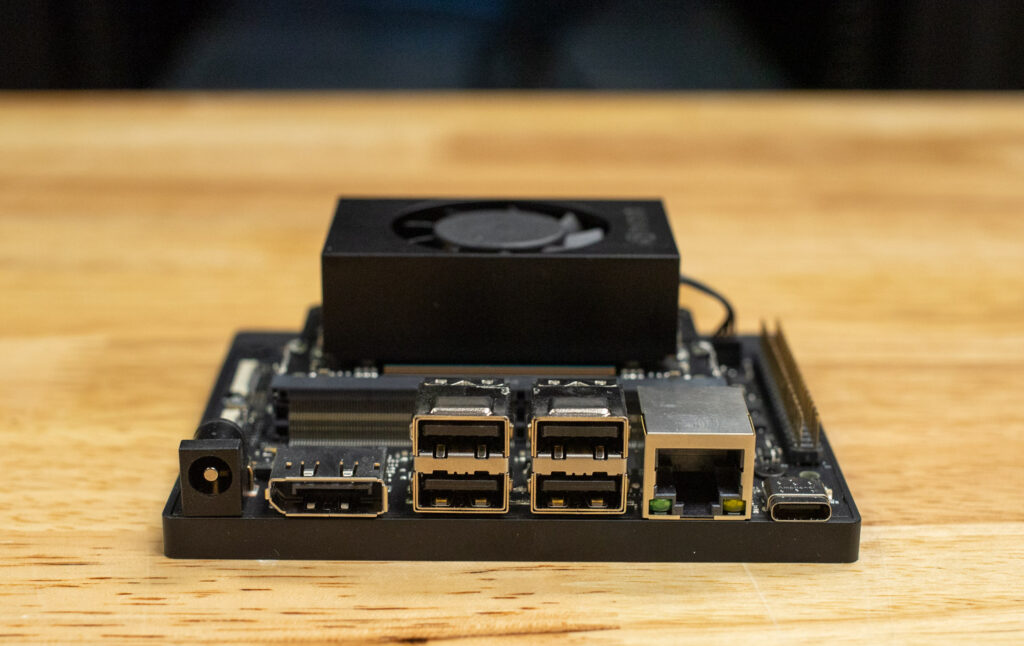

Connectivity options are plentiful, making the Nano Super highly versatile. Four USB 3.2 Type-A ports and a USB Type-C port make it easy to connect peripherals ranging from external storage devices to input devices and sensors. The integrated Gigabit Ethernet provides reliable networking, and the dual MIPI CSI connectors allow two cameras to be attached. A two-camera (stereo) setup is particularly useful for depth perception, which is essential in robotics and autonomous systems where accurate environmental mapping is critical.
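To illustrate why two cameras help with depth, here is a minimal sketch of the standard stereo relation Z = f × B / d for a rectified pinhole camera pair. The focal length, baseline, and disparity values are hypothetical, and a real pipeline would first calibrate and rectify the cameras and compute disparity with a stereo-matching algorithm.

```python
def stereo_depth_m(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by two rectified pinhole cameras.

    Z = f * B / d, where f is the focal length in pixels, B is the distance
    between the two camera centers in meters, and d is the horizontal pixel
    offset (disparity) of the same point between the left and right images.
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity; negative is invalid.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera pair: 700 px focal length, camera centers 6 cm apart.
print(stereo_depth_m(700.0, 0.06, 21.0))  # 2.0 (meters)
```

Because depth is inversely proportional to disparity, a wider baseline between the two cameras improves depth resolution at longer ranges.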

Storage options include 16GB eMMC 5.1, microSD, and two M.2 NVMe SSD slots with PCIe Gen3 connectivity. This provides ample room for operating systems, software, and datasets, and supports the high-speed data transfers that real-time analytics and AI inference require. The HDMI and eDP 1.4 interfaces let the Nano Super drive displays directly, making it well suited to kiosk-style applications and digital signage.

Pushing the Nano Super to Its Limits: LLM Inference at the Edge

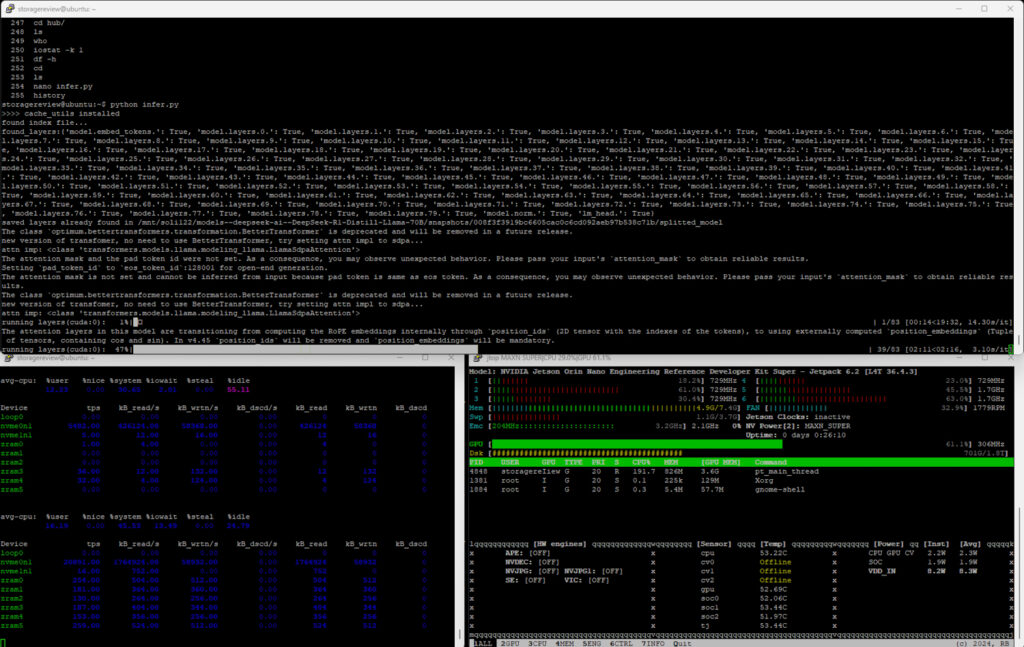

Our work with the Nano Super focused on exploring its potential for AI development tasks, specifically large language model (LLM) inference. Limited onboard memory makes it difficult to run models with billions of parameters, so we used a layer-streaming approach to work around this constraint. The Nano Super’s 8GB of memory, shared between the CPU and GPU, typically restricts it to smaller models, but we aimed to run a model 45 times larger than what would traditionally fit.
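A quick back-of-the-envelope calculation shows why a 70B-parameter model cannot simply be loaded into 8GB. This minimal sketch counts weight storage only, assuming the usual bytes-per-parameter figures for each precision; activations, the KV cache, and runtime overhead would push real usage higher.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

DEVICE_MEMORY_GB = 8.0  # Nano Super memory, shared by the CPU and GPU


def weight_footprint_gb(num_params: float, dtype: str = "fp16") -> float:
    """Approximate size of a model's weights in GB (1 GB = 1e9 bytes).

    Counts weights only; activations, the KV cache, and runtime overhead add more.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in ("fp16", "int4"):
    size = weight_footprint_gb(70e9, dtype)
    print(f"70B @ {dtype}: {size:.0f} GB, fits in {DEVICE_MEMORY_GB:.0f} GB: {size <= DEVICE_MEMORY_GB}")
```

Under these assumptions a 70B model needs about 140GB at 16-bit precision and still about 35GB at 4-bit, far beyond the device's 8GB.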

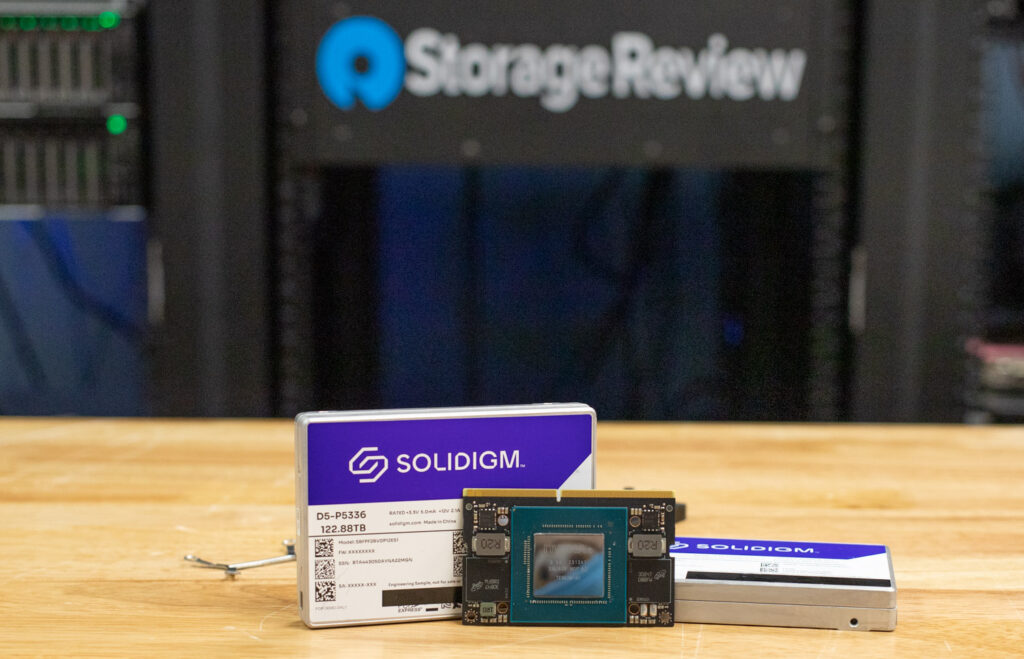

To support this ambitious task, we upgraded the Nano Super’s storage with the newly launched Solidigm D5-P5336 122.88TB SSD, an ultra-high-capacity NVMe drive designed for data center environments.

The Solidigm 122TB D5-P5336 SSD is a groundbreaking storage solution for data-intensive workloads, particularly in AI and data centers. Here are the detailed specifications:

- Capacity: 122.88TB

- Technology: Quad-Level Cell (QLC) NAND

- Interface: Gen 4 PCIe x4

- Performance: Up to 15% better on data-intensive workloads compared to previous models

- Form Factor: U.2 (approximately the size of a deck of cards)

- Use Cases: Ideal for AI training, data collection, media capture, and transcode

Performance Metrics

- Sequential Read/Write Speeds: Up to 7.1 GB/s (read) and 3.3 GB/s (write)

- Random Performance: Up to 1,269,000 IOPS

Lifespan Metrics

- Endurance: The Solidigm 122TB SSD is designed for data-intensive workloads and offers a high endurance rating. You can use the Solidigm SSD Endurance Estimator to calculate the expected lifespan based on specific workloads.

Power Metrics

- TB per watt: $\frac{122\ \text{TB}}{25\ \text{W}} = 4.88\ \text{TB/W}$. Based on a 25W power draw, this drive offers approximately 4.88 terabytes of storage per watt consumed, highlighting its efficiency for data-intensive applications.

The Nano Super includes two M.2 NVMe slots, both of which we tested as part of this review. Both offer a PCIe Gen3 connection: a 30mm slot with 2 PCIe lanes and an 80mm slot with a full 4 lanes. To get the most bandwidth to the Solidigm D5-P5336 122TB QLC SSD, we used the 80mm slot with an M.2-to-U.2 breakout cable. Our USB-C power cable wasn’t ready for the demo, so we used an ATX power supply to provide 12V and 3.3V to the U.2 drive.
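For context on what the two slots can deliver, this minimal sketch computes the theoretical per-direction PCIe Gen3 link bandwidth from the 8 GT/s per-lane signalling rate and 128b/130b line encoding. It ignores packet and protocol overhead, so real-world throughput, such as the roughly 2.5GB/s we measured, lands below these ceilings.

```python
GEN3_TRANSFER_RATE_GT_S = 8.0         # PCIe Gen3 signalling rate per lane
GEN3_ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def pcie_gen3_gb_s(lanes: int) -> float:
    """Theoretical per-direction PCIe Gen3 bandwidth in GB/s, after line encoding only.

    Protocol overhead (packet headers, flow control) lowers real throughput further.
    """
    return lanes * GEN3_TRANSFER_RATE_GT_S * GEN3_ENCODING_EFFICIENCY / 8  # bits -> bytes


for lanes in (2, 4):
    print(f"x{lanes}: ~{pcie_gen3_gb_s(lanes):.2f} GB/s")
```

The x4 slot roughly doubles the ceiling of the x2 slot (about 3.94GB/s versus 1.97GB/s), which is why the 80mm slot was the better choice for the U.2 drive.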

The result was an overpowered storage solution that allowed us to manage massive models and highlighted the role of robust storage in edge AI workflows. This setup allowed us to store and carry most of the popular models from Hugging Face while still retaining ample extra space.

How did we run DeepSeek R1 70B Distilled, a model 45 times larger than what would normally fit on such a device? We used AirLLM, a project that loads model layers into memory sequentially, as needed, rather than loading the entire weight set at once. This layer-by-layer approach allowed us to perform inference on a model that far exceeds the device’s memory. The catch is performance. On the storage side, the NVIDIA Orin Nano could pull up to about 2.5GB/s from the 122TB Solidigm D5-P5336 QLC SSD over the 4-lane PCIe Gen3 connection; with our inference workload running off the QLC SSD, read speeds hovered around 1.7GB/s.
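The layer-by-layer idea can be sketched in a few lines. This is not AirLLM’s code; it is a toy illustration in which each "layer" is a small weight matrix pickled to its own file (a hypothetical stand-in for sharded checkpoint files), and the forward pass loads one layer, applies it, and moves on, so only a single layer’s weights are held in memory at a time.

```python
import os
import pickle
import tempfile


def save_layers(layers, directory):
    """Write each layer's weight matrix to its own file (stand-in for sharded checkpoints)."""
    paths = []
    for i, weights in enumerate(layers):
        path = os.path.join(directory, f"layer_{i:03d}.pkl")
        with open(path, "wb") as f:
            pickle.dump(weights, f)
        paths.append(path)
    return paths


def matvec(weights, x):
    """Multiply a weight matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def streamed_forward(layer_paths, x):
    """Run layers in order, holding only one layer's weights in memory at a time."""
    for path in layer_paths:
        with open(path, "rb") as f:
            weights = pickle.load(f)  # load just this layer from storage
        x = [max(0.0, v) for v in matvec(weights, x)]  # linear layer followed by ReLU
        del weights  # release this layer before the next one is loaded
    return x


with tempfile.TemporaryDirectory() as tmp:
    toy_layers = [
        [[1.0, 0.0], [0.0, 2.0]],
        [[1.0, 1.0], [0.5, -1.0]],
    ]
    paths = save_layers(toy_layers, tmp)
    result = streamed_forward(paths, [3.0, 1.0])

print(result)  # [5.0, 0.0]
```

The trade-off is the one described above: memory use is bounded by the largest layer, but every forward pass has to read all the weights back from storage.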

Even though we successfully bypassed the memory limitation, we were still limited to 67 TOPS of compute. Moreover, as model size grows, so does layer size, which increases the time per token. As a result, we went from a couple of tokens per second with smaller LLMs such as ChatGLM3-6B to one token every 4.5 minutes with DeepSeek R1 70B Distilled.
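A rough floor on time per token follows from the storage numbers above if every layer has to be re-read from the SSD for each generated token. This minimal sketch assumes 16-bit weights (about 140GB for 70B parameters) and the ~1.7GB/s read rate we observed; it ignores compute time, caching, and any overlap between loading and computing, so it is a lower bound rather than a prediction.

```python
def min_seconds_per_token(model_bytes: float, read_bytes_per_s: float) -> float:
    """Storage-bound floor on time per token when all weights are re-read per token.

    Ignores compute time, caching, and overlap between loading and computing.
    """
    return model_bytes / read_bytes_per_s


weights_bytes = 70e9 * 2  # assumption: 70B parameters stored as 16-bit weights
ssd_read = 1.7e9          # ~1.7 GB/s read rate observed during inference
print(f"at least {min_seconds_per_token(weights_bytes, ssd_read):.0f} s per token")
```

Under these assumptions the floor is roughly 82 seconds per token; the measured 4.5 minutes (270 seconds) sits well above it.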

Practical Applications of Large Storage and Edge AI

While our LLM experiment was more of a proof of concept, pairing the Jetson Orin Nano Super with a high-capacity Solidigm drive has practical applications. The Jetson module’s SODIMM-style form factor makes it easy to integrate into custom PCBs, which makes attaching an enterprise-grade U.2 drive more straightforward. This configuration suits long-term, low-power AI deployments in remote or sensitive environments.

AI is increasingly being used in wildlife conservation. In a previous article, we discussed how AI is helping track hedgehog populations. Similarly, Indigenous nations in British Columbia are using AI to monitor fish populations. These installations often need to operate undisturbed for years, requiring large storage capacities, low power consumption, and minimal disruption to the surrounding environment. A Jetson Orin Nano Super-based solution with a high-capacity drive can meet these needs while consuming as little as 15W (or 50W at maximum performance). With backup batteries and a small solar panel, such a setup can be the size of a standard desk phone, making it unassuming and practical for long-term use.
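Sizing the batteries and solar panel for such a deployment comes down to simple energy arithmetic. This minimal sketch uses the 15W figure above as a constant average draw; the days of autonomy, usable battery fraction, peak sun hours, and charging efficiency are all hypothetical placeholders that depend on the site and hardware.

```python
def daily_energy_wh(avg_power_w: float) -> float:
    """Energy used over 24 hours at a constant average draw, in watt-hours."""
    return avg_power_w * 24.0


def battery_wh(avg_power_w: float, autonomy_days: float, usable_fraction: float) -> float:
    """Battery capacity needed to run for `autonomy_days` with no solar input."""
    return daily_energy_wh(avg_power_w) * autonomy_days / usable_fraction


def panel_w(avg_power_w: float, peak_sun_hours: float, efficiency: float) -> float:
    """Panel rating needed to replace one day's energy within the available peak sun hours."""
    return daily_energy_wh(avg_power_w) / (peak_sun_hours * efficiency)


# Hypothetical site: 15 W average draw, 2 days of autonomy, 80% usable battery capacity,
# 4 peak sun hours per day, 75% end-to-end charging efficiency.
print(f"daily energy: {daily_energy_wh(15):.0f} Wh")
print(f"battery:      {battery_wh(15, 2, 0.8):.0f} Wh")
print(f"solar panel:  {panel_w(15, 4, 0.75):.0f} W")
```

All three figures scale linearly with the average draw, so lowering it, for example by running inference only when a sensor triggers, shrinks the battery and panel proportionally.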

Another intriguing use case is using the system as a large local repository for model distribution. While downloading hundreds of models from Hugging Face, we noticed that download speeds varied: more popular models downloaded faster than older or less popular ones. At the edge, however, all downloads tend to be very slow, even with Starlink. In such cases, a Nano Super equipped with an additional NIC and a large-capacity drive would work well as a cache or intermediate store for redistributing models efficiently at the edge.
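The caching role described above is essentially a read-through cache: serve a model from local storage if it is already there, and fetch it over the slow uplink only on the first request. This minimal sketch uses a hypothetical `fetch_upstream` callable and model name rather than any real Hugging Face API, and it reads whole files into memory for brevity; a real multi-gigabyte model would be streamed to disk in chunks.

```python
import os
import tempfile
from typing import Callable


def cached_fetch(name: str, cache_dir: str, fetch_upstream: Callable[[str], bytes]) -> bytes:
    """Return a model file from the local cache, downloading it only on the first request."""
    path = os.path.join(cache_dir, name.replace("/", "__"))  # flatten "org/model" into a file name
    if os.path.exists(path):
        with open(path, "rb") as f:
            return f.read()  # cache hit: served from local storage
    data = fetch_upstream(name)  # cache miss: one slow trip over the uplink
    tmp_path = path + ".partial"
    with open(tmp_path, "wb") as f:
        f.write(data)
    os.replace(tmp_path, path)  # rename into place so readers never see a half-written file
    return data


# Usage with a fake upstream (hypothetical) that records every call it receives.
calls = []


def fake_upstream(name: str) -> bytes:
    calls.append(name)
    return f"weights for {name}".encode()


with tempfile.TemporaryDirectory() as cache:
    first = cached_fetch("example-org/tiny-model", cache, fake_upstream)
    second = cached_fetch("example-org/tiny-model", cache, fake_upstream)

print(len(calls), first == second)  # 1 True
```

Writing to a temporary file and renaming it means an interrupted download never leaves a truncated model in the cache.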

Ample Use Cases

Here are compelling use cases for leveraging an NVIDIA Jetson device with substantial storage capacity:

- Autonomous Vehicles: Storing and processing vast amounts of sensor and camera data in real time for navigation and obstacle detection.

- Smart Surveillance: Managing high-resolution video feeds from multiple cameras for security and monitoring purposes, with the ability to store and analyze footage locally.

- Healthcare Diagnostics: Real-time processing and storage of medical imaging data for immediate diagnostics and treatment decisions in remote or resource-limited settings.

- Industrial Automation: Enhancing factory automation with AI-driven quality control and predictive maintenance, storing large datasets for analysis and model training.

- Retail Analytics: Analyzing customer behavior and inventory data in real time to optimize stock levels and enhance the shopping experience.

- Environmental Monitoring: Using AI to track and analyze ecological data, such as air and water quality, to support conservation efforts and public health initiatives.

- Smart Agriculture: Monitoring crop health and soil conditions using AI-powered sensors and cameras to optimize farming practices and increase yield.

- Telecommunications: Managing and processing data at cell towers to improve network performance and reduce latency.
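For storage-heavy cases like smart surveillance, it helps to estimate how long a drive can retain footage. This minimal sketch assumes continuous recording at a constant bitrate; the camera count and 8 Mbit/s per-stream bitrate are hypothetical, and real bitrates vary with resolution, codec, and scene activity.

```python
def retention_days(capacity_tb: float, cameras: int, bitrate_mbit_s: float) -> float:
    """Days of continuous recording that fit on a drive (1 TB = 1e12 bytes)."""
    bytes_per_day = cameras * bitrate_mbit_s * 1e6 / 8 * 86_400  # Mbit/s -> bytes per day
    return capacity_tb * 1e12 / bytes_per_day


# Hypothetical setup: 8 cameras, each recording an 8 Mbit/s stream.
print(f"{retention_days(122.88, 8, 8.0):.0f} days")
```

Under these assumptions a single 122.88TB drive holds close to six months of continuous footage from eight cameras.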

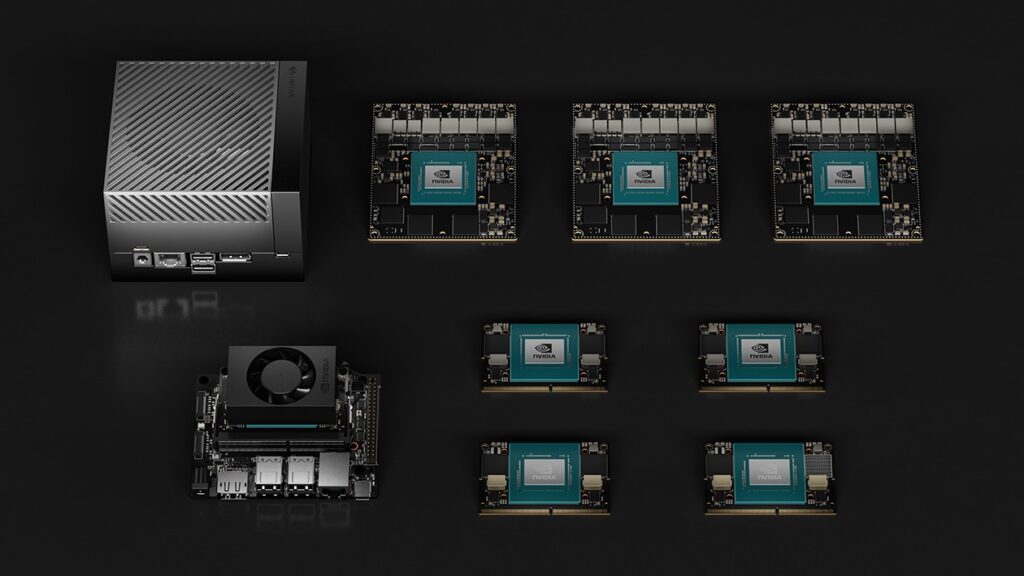

Conclusion: Finding Its Place in the Jetson Family

The Jetson Orin Nano Super sits in the sweet spot of NVIDIA’s Jetson lineup, offering a balance of high performance and energy efficiency for edge AI tasks. The Jetson family ranges from entry-level models like the Jetson Nano, designed for basic AI and robotics applications, to the powerful Jetson AGX Orin, which delivers up to 275 TOPS for demanding autonomous machine workloads. In between, the Jetson Orin Nano Super offers flexible performance and power profiles, catering to developers who need more horsepower without the bulk of a full AGX platform.

Solidigm’s QLC SSD lineup offers a range of high-capacity storage solutions designed for read-intensive workloads. The lineup includes models like the D5-P5336, with up to 122.88TB of storage and smaller drive capacities starting at 7.68TB. These SSDs are optimized for performance, density, and cost-efficiency, making them ideal for applications such as content delivery networks, AI, data pipelines, and object storage. With QLC technology, Solidigm SSDs deliver substantial storage capacity while maintaining strong read performance and proven reliability.

The Nano Super’s ability to bring serious AI capabilities to compact, power-constrained environments makes it stand out. While the original Jetson Nano was a favorite for hobbyists and lightweight AI tasks, the Nano Super raises the bar by delivering 67 TOPS, enough to handle complex LLM inference and other demanding AI applications. This makes it a compelling option for developers looking to deploy sophisticated AI models at the edge without the overhead of larger, more power-hungry systems. Paired with a high-capacity QLC drive such as the 122TB Solidigm D5-P5336 SSD, it lets edge locations work with a wide range of AI models without capacity constraints that would force storage to be swapped out after provisioning.

The Nano Super costs $249. Although it’s pricier than a Raspberry Pi, it delivers significantly better performance and includes everything needed to get started. The fan-equipped heatsink lets you run at maximum power even in a poorly ventilated 3D-printed enclosure, and the included power adapter makes it a ready-to-use option for anyone interested in AI.

StorageReview thanks the Solidigm team for the new 122TB D5-P5336 SSD. This drive’s capacity and speed enabled us to complete much of the testing.
