We revisit the Jetson AGX Orin and show how to get a ChatGPT-style LLM running on the low-power device.

Editor’s note: We got the opportunity to sit down and dig back into the NVIDIA Jetson platform with a new member of our team. Check out our article from last year, where we ran a vision model on a production Jetson-based system, the Lenovo SE70.

With NVIDIA’s Jetson platform, developers can explore AI options tailored specifically for edge AI development. These systems deliver GPU-accelerated, server-class performance in a package you can hold in one hand. A huge thank-you to NVIDIA for providing the Jetson AGX Orin Development Kit so we could see just how easy it can be to run your own local LLM.

The Jetson AGX Orin Development Kit comes in a small form factor: its square footprint measures only 11cm (about 4.3in) per side, and it stands 7.2cm (about 2.8in) tall. Inside sits a 2048-core NVIDIA Ampere architecture GPU with 64 Tensor Cores and a max frequency of 1.3GHz, alongside a 12-core Arm Cortex-A78AE v8.2 64-bit CPU with 3MB L2 cache, 6MB L3 cache, and a max frequency of 2.2GHz.

Those two processors, coupled with 64GB of LPDDR5 unified memory with 204.8GB/s of bandwidth, combine to create this small machine’s most impressive feat: up to 275 TOPS on the 64GB model, delivered by the GPU and the deep learning accelerators (DLAs). That’s roughly 8.6 times the 32 TOPS of its predecessor, the Jetson AGX Xavier.
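Both headline ratios can be sanity-checked with simple arithmetic. This is a minimal sketch, assuming 256-bit memory interfaces on both modules and transfer rates of 6400 MT/s for Orin’s LPDDR5 and 4266 MT/s for Xavier’s LPDDR4x (the bus widths and rates are not given in this article), and the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Peak DRAM bandwidth = (bus width in bytes) x (transfer rate).
# Assumed inputs: 256-bit buses, 6400 MT/s (Orin LPDDR5), 4266 MT/s (Xavier LPDDR4x).

# Hypothetical helper: $1 = bus width in bits, $2 = MT/s; prints GB/s
peak_bandwidth_gbs() {
  local bus_bits=$1 mts=$2
  # bytes per transfer x million transfers/s = MB/s; divide by 1000 for GB/s
  awk -v b="$bus_bits" -v r="$mts" 'BEGIN { printf "%.1f\n", (b / 8) * r / 1000 }'
}

# Hypothetical helper: ratio of two TOPS figures, one decimal place
tops_ratio() {
  awk -v new="$1" -v old="$2" 'BEGIN { printf "%.1f\n", new / old }'
}

echo "Orin:   $(peak_bandwidth_gbs 256 6400) GB/s"
echo "Xavier: $(peak_bandwidth_gbs 256 4266) GB/s"
echo "TOPS uplift: $(tops_ratio 275 32)x"
```

The bandwidth results match the 204.8GB/s and 136.5GB/s figures in the comparison table, and 275 / 32 rounds to the 8.6x quoted above.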

Also under the hood are two M.2 slots: a PCIe Gen4 x4 Key M slot for storage beyond the built-in 64GB eMMC, and a PCIe Gen4 x1 Key E slot for wireless modules. Wired connectivity isn’t an issue either, thanks to a 10GbE RJ45 port. Rounding out the I/O are a 40-pin header (UART, SPI, I2S, I2C, CAN, PWM, DMIC, and GPIO), a 12-pin automation header, a 10-pin audio panel header, a 10-pin JTAG header, a 4-pin fan header, a 2-pin RTC battery backup connector, and a 16-lane MIPI CSI-2 connector for CSI cameras.

Hidden underneath a magnetic cover lies the external PCIe slot: it is physically x16 but wired for up to a PCIe Gen4 x8 connection. With no way to supply power to a GPU, the slot is best suited to something like a high-speed NIC. For display output, the Orin has a DisplayPort 1.4a connector.
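To put the x8 link in perspective for the NIC use case, a back-of-the-envelope estimate helps. This minimal sketch uses the standard PCIe Gen4 figures (16 GT/s per lane, 128b/130b line encoding); it gives raw link bandwidth per direction, real-world throughput is lower once protocol overhead is included, and `pcie_gbps` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Raw PCIe link bandwidth per direction, before packet/protocol overhead:
#   lanes x transfer rate (GT/s) x 128/130 (Gen3+ line encoding)

# Hypothetical helper: $1 = GT/s per lane, $2 = lane count; prints Gb/s
pcie_gbps() {
  local gts=$1 lanes=$2
  awk -v gt="$gts" -v n="$lanes" 'BEGIN { printf "%.1f\n", gt * n * 128 / 130 }'
}

# Gen4 signals at 16 GT/s per lane
echo "External slot, Gen4 x8: $(pcie_gbps 16 8) Gb/s"
echo "M.2 Key M, Gen4 x4:     $(pcie_gbps 16 4) Gb/s"
echo "M.2 Key E, Gen4 x1:     $(pcie_gbps 16 1) Gb/s"
```

At roughly 126 Gb/s raw, the x8 link on paper leaves headroom for a single 100GbE port, which fits the NIC suggestion above.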

Jetson AGX Xavier vs. Jetson AGX Orin

| Feature | Jetson AGX Xavier 64GB | Jetson AGX Orin 64GB Dev Kit |
|---|---|---|
| AI Performance | 32 TOPS | 275 TOPS |
| GPU | 512-core NVIDIA Volta GPU with 64 Tensor Cores | 2048-core NVIDIA Ampere GPU with 64 Tensor Cores |
| GPU Max Frequency | Not specified | 1.3GHz |
| CPU | 8-core NVIDIA Carmel Arm v8.2 64-bit CPU, 8MB L2 + 4MB L3 | 12-core Arm Cortex-A78AE v8.2 64-bit CPU, 3MB L2 + 6MB L3 |
| CPU Max Frequency | 2.2GHz | 2.2GHz |
| DL Accelerator | 2x NVDLA v1 | 2x NVDLA v2 |
| DLA Max Frequency | 1.4GHz | Not specified |
| Vision Accelerator | 2x PVA | 1x PVA v2 |
| Memory | 64GB LPDDR4x, 136.5GB/s | 64GB LPDDR5, 204.8GB/s |
| Storage | 32GB eMMC 5.1, 64GB available in industrial version | 64GB eMMC |
| Video Encode | 4x 4K60 (H.265), 8x 4K30 (H.265), 16x 1080p60 (H.265), 32x 1080p30 (H.265) | Not specified |
| Video Decode | 2x 8K30 (H.265), 6x 4K60 (H.265), 12x 4K30 (H.265), 26x 1080p60 (H.265), 52x 1080p30 (H.265) | Not specified |
| CSI Camera | Up to 6 cameras (36 via virtual channels), 16 lanes MIPI CSI-2, 8 lanes SLVS-EC, D-PHY 1.2 (up to 40 Gbps), C-PHY 1.1 (up to 62 Gbps) | 16-lane MIPI CSI-2 connector |
| PCIe | 1x8, 1x4, 1x2, 2x1 (PCIe Gen4, Root Port & Endpoint) | x16 PCIe slot supporting x8 PCIe Gen4, M.2 Key M slot with x4 PCIe Gen4, M.2 Key E slot with x1 PCIe Gen4 |
| USB | 3x USB 3.2 Gen2 (10 Gbps), 4x USB 2.0 | USB-C for power supply (15-60W), single USB-C for flashing and programming, Micro-B for serial debug, 2x USB 3.2 Gen2 (USB Type-C), 2x USB 3.2 Gen2 (USB Type-A), 2x USB 3.2 Gen1 (USB Type-A), USB 2.0 (USB Micro-B) |
| Networking | 1x GbE | RJ45 connector with up to 10GbE |
| Display | 3 multi-mode DP 1.4/eDP 1.4/HDMI 2.0 | 1x DisplayPort 1.4a (+MST) connector |
| Other I/O | 5x UART, 3x SPI, 4x I2S, 8x I2C, 2x CAN, PWM, DMIC, GPIOs | 40-pin header (UART, SPI, I2S, I2C, CAN, PWM, DMIC, GPIO), 12-pin automation header, 10-pin audio panel header, 10-pin JTAG header, 4-pin fan header, 2-pin RTC battery backup connector, microSD slot, DC power jack, Power, Force Recovery, and Reset buttons |
| Power | 10-30W | 15-60W (via USB-C) |

AI Side/NVIDIA SDK Set-Up

Large Language Models (LLMs), such as the models behind ChatGPT or Meta’s Llama, are AI models trained on large quantities of data. Given the Orin’s small footprint, it’s hard to believe you could run a local, private AI model on it. Currently, we are seeing “AI PC” laptops with dedicated NPUs appear on the market from Intel, AMD, and Qualcomm (Snapdragon). Like the Jetson platform, these devices include dedicated silicon on the die with additional AI acceleration features. Conceptually, these components are inspired by the way our brains work (hence the “neural” in NPU) and allow large amounts of data to be processed in parallel. Offloading AI work to the NPU frees the CPU and GPU for other tasks, making the computer more efficient in both power and processing.

However, the 40-plus TOPS of the NPU in Intel’s Lunar Lake, or the 50 TOPS of AMD’s platform, still falls well short of the advertised 275 TOPS delivered by the Jetson AGX Orin Dev Kit’s GPU and DLAs combined (NVIDIA quotes that figure for sparse INT8 operations, so it is not a strictly like-for-like comparison). There is more than enough power to run an AI model locally in your office, or even in your house or homelab. Contributing to that total are two NVDLA v2 deep learning accelerators (DLAs), which speed up AI inference, and a single Programmable Vision Accelerator (PVA v2), which speeds up computer vision processing.

Setting up the system to run AI is streamlined by NVIDIA’s numerous guides. The process uses NVIDIA SDK Manager on an Ubuntu host PC to flash the Jetson with JetPack (NVIDIA’s Ubuntu-based software stack) and install the SDK components, in six steps:

Step 1: Install NVIDIA SDK Manager

Full instructions and downloads are available on NVIDIA’s SDK Manager download page. A free NVIDIA Developer account is required for this process.

Step 2: Open NVIDIA SDK Manager installed on Ubuntu

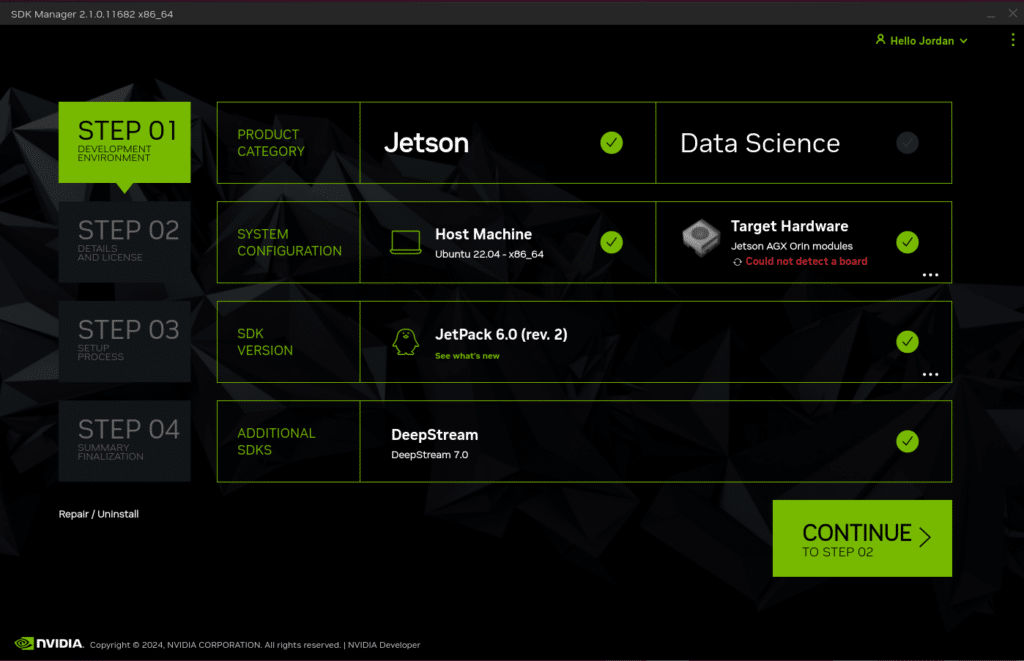

Step 3: Development Environment

This step confirms that you have all your ducks in a row: verify your product, system configuration, SDK version, and additional SDKs. For our setup, we used the Jetson AGX Orin Development Kit, Ubuntu 22.04, JetPack 6.0, and DeepStream 7.0.

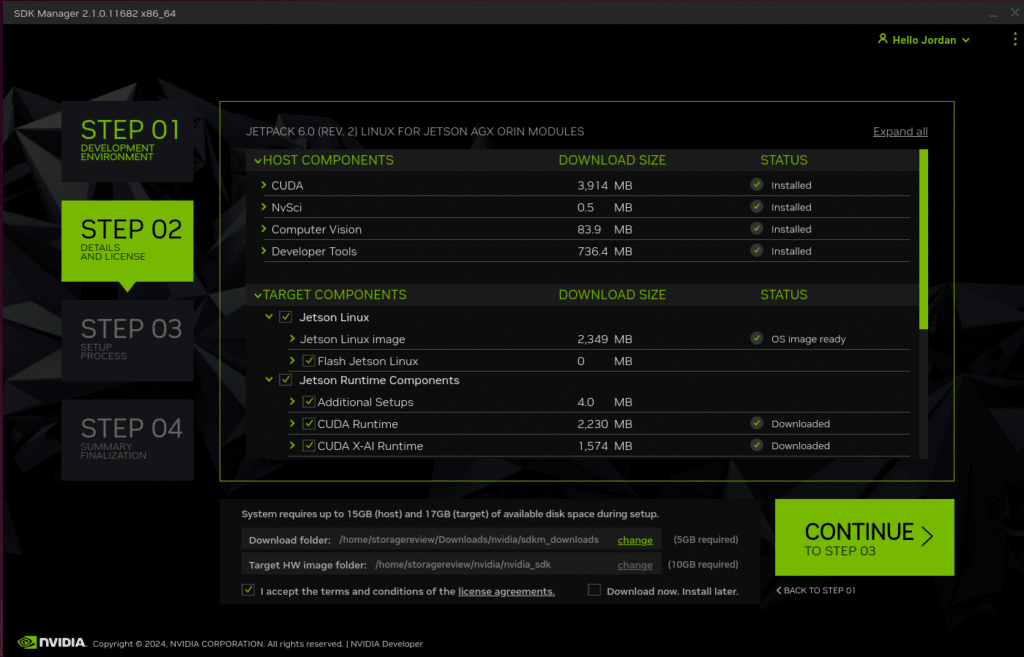

Step 4: Details and Licensing

This step serves as the installation screen, ensuring that all host and target components are downloaded and installed. It is also where you select the download location. The host system requires 15GB of storage and the target system requires 17GB.

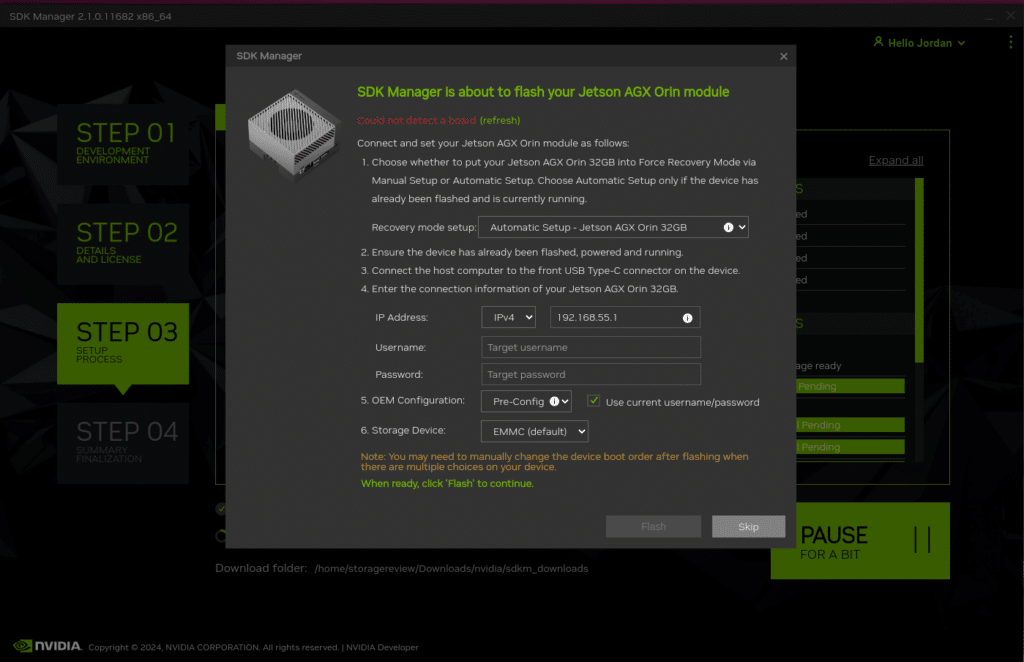
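Before committing to a download location, it’s worth checking that the 15GB (host) and 17GB (target) requirements actually fit. This is a minimal sketch using standard `df`; `has_free_gb` is a hypothetical helper, and checking the filesystem that holds `$HOME` is an assumption about where you point SDK Manager’s downloads.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the filesystem holding $1 has at least
# $2 GiB free. GiB (1024^3 bytes) is slightly stricter than the GB figures
# SDK Manager quotes, which errs on the safe side.
has_free_gb() {
  local path=$1 required_gb=$2 avail_kb
  # -P: one POSIX-format line per filesystem; -k: 1K blocks; column 4 = available
  avail_kb=$(df -Pk "$path" 2>/dev/null | awk 'NR == 2 { print $4 }')
  case $avail_kb in
    ''|*[!0-9]*) return 2 ;;  # df failed or printed something unexpected
  esac
  [ $((avail_kb / 1024 / 1024)) -ge "$required_gb" ]
}

# On the Ubuntu host: 15 GB for host components (assumed download folder under $HOME)
if has_free_gb "${HOME:-/}" 15; then
  echo "Host: at least 15 GiB free"
else
  echo "Host: less than 15 GiB free; choose another download location"
fi
```

On the Jetson, `has_free_gb / 17` performs the matching check for the target components.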

Step 5: Setup Process

This step is a confirmation window to finish the setup. Here you select the recovery mode: manual or automatic forced recovery, with automatic intended for a system that has already been flashed and is running. From here, you can set up or confirm the IP address, add a username and password, choose the OEM configuration, and select the target storage device. Once all of that is set, click Flash.
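Once the Jetson has been flashed and boots, it’s worth confirming on the device itself that the JetPack release chosen in Step 3 actually landed. This is a minimal sketch, assuming the `/etc/nv_tegra_release` file that Jetson Linux (L4T) images ship with, whose first line reads like `# R36 (release), REVISION: 3.0, ...`. JetPack 6.x is built on L4T R36, so the major number is the quick check; `l4t_version` is a hypothetical helper name. On the device, `apt show nvidia-jetpack` also reports the installed JetPack meta-package version.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the L4T version (e.g. "36.3.0") parsed from a
# release file whose first line looks like:
#   # R36 (release), REVISION: 3.0, GCID: ..., BOARD: ..., EABI: aarch64, DATE: ...
l4t_version() {
  local file=${1:-/etc/nv_tegra_release}
  sed -n 's/^# R\([0-9]*\) (release), REVISION: \([0-9.]*\),.*/\1.\2/p' "$file"
}

if [ -r /etc/nv_tegra_release ]; then
  version=$(l4t_version)
  case $version in
    36.*) echo "L4T $version: a JetPack 6.x (L4T R36) release" ;;
    "")   echo "Could not parse /etc/nv_tegra_release" ;;
    *)    echo "L4T $version: not a JetPack 6.x release" ;;
  esac
else
  echo "No /etc/nv_tegra_release found; run this on the Jetson itself"
fi
```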

Step 6: Summary Finalization

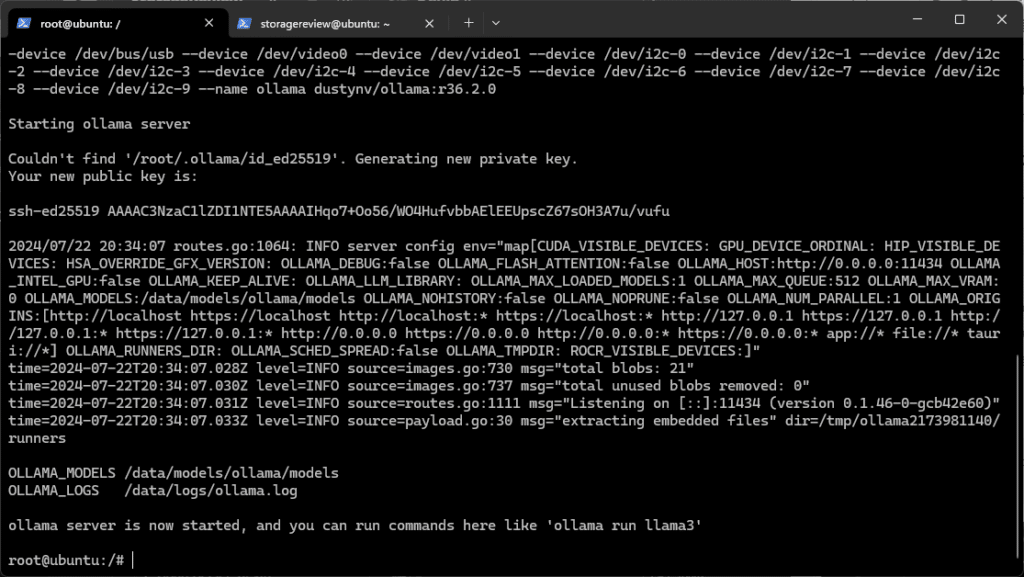

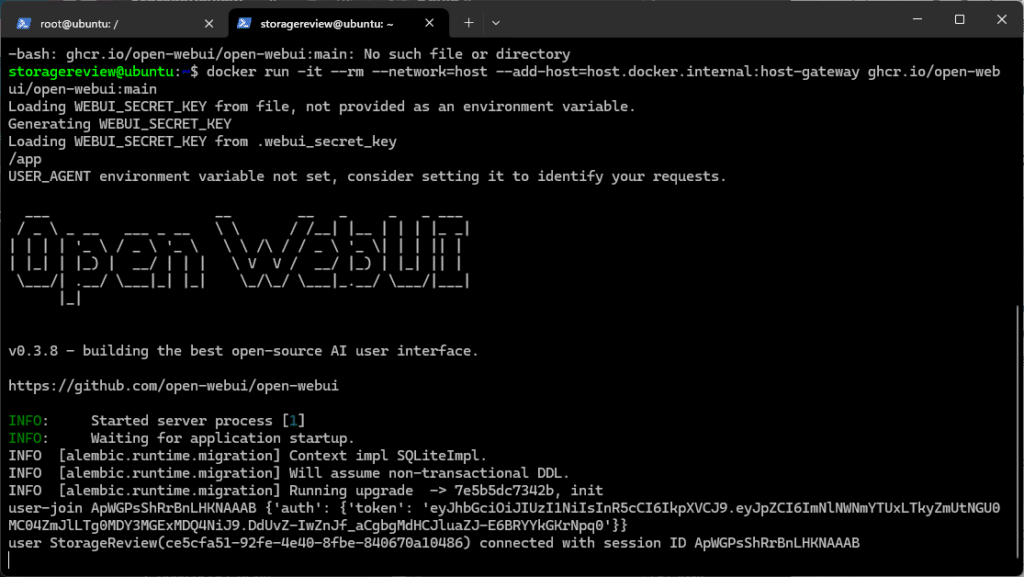

Finally, this step completes the installation. Once the Jetson is up and running, and with NVIDIA’s jetson-containers tooling installed on it, you can launch Ollama by running:

```shell
jetson-containers run --name ollama $(autotag ollama)
```

This launches the Ollama server in a container. Ollama is a popular platform that makes setting up and developing with LLMs locally simple, and it can run either inside or outside a container. It includes a built-in library of pre-quantized model weights, which are downloaded automatically and run with llama.cpp as the inference engine behind the scenes. The Ollama container is compiled with CUDA support, making it well suited to the Jetson AGX Orin. Then run the following command:

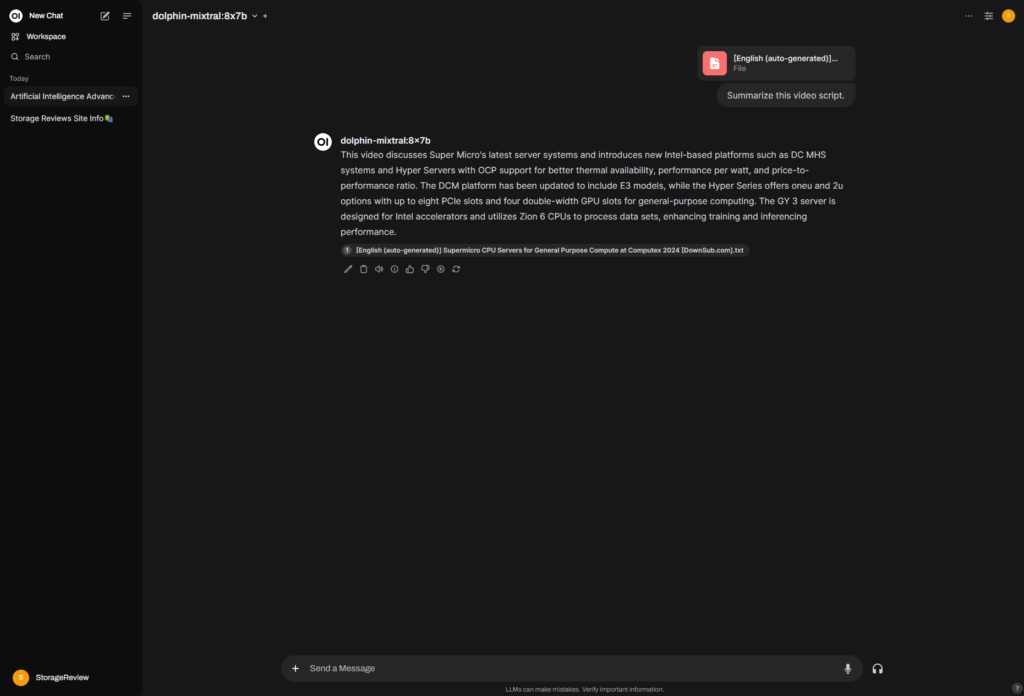

```shell
docker run -it --rm --network=host --add-host=host.docker.internal:host-gateway ghcr.io/open-webui/open-webui:main
```

You can then access Open WebUI (OWUI) in a browser at the device’s IP address or DNS name on port 8080, where it functions as a chatbot. OWUI connects to the Ollama server’s API, letting you chat with models such as Meta’s Llama 3 or Microsoft’s Phi-3 Mini, and it can also connect to OpenAI’s API to use ChatGPT models.
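Because OWUI is only a front end, the same Ollama server can also be scripted directly over its REST API. This is a minimal sketch, assuming the server is reachable on Ollama’s default port 11434 on the Jetson and that a model such as `llama3` has already been pulled (for example with `ollama pull llama3`); the helper names and the `OLLAMA_URL` variable are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for calling Ollama's REST API directly.

# Build a JSON body for POST /api/generate. Escapes only backslashes and
# double quotes, so keep prompts to a single line (or build JSON with jq).
build_generate_payload() {
  local model=$1 prompt=$2
  prompt=$(printf '%s' "$prompt" | sed 's/\\/\\\\/g; s/"/\\"/g')
  printf '{"model": "%s", "prompt": "%s", "stream": false}\n' "$model" "$prompt"
}

# Send one non-streaming request; the reply is JSON with a "response" field.
ollama_generate() {
  local url=${OLLAMA_URL:-http://localhost:11434}  # OLLAMA_URL: hypothetical override
  curl -s "$url/api/generate" -d "$(build_generate_payload "$1" "$2")"
}
```

Usage on the Jetson: `ollama_generate llama3 "Why is the sky blue?"`. Setting `"stream": false` returns a single JSON object instead of a stream of partial responses, which is easier to handle from a script.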

On such a low power budget, time to first token is notably long for larger models, but once a model is loaded the platform delivers acceptable performance.
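Why generation settles at a usable rate once a model is loaded can be estimated from memory bandwidth. When producing one token at a time, the model’s weights are read from memory roughly once per token, so bandwidth divided by model size gives a rough upper bound on tokens per second. This is a minimal sketch: the 4.7GB size is an assumption standing in for a roughly 8B-parameter model quantized to about 4 bits, and `decode_ceiling_tps` is a hypothetical helper. Time to first token is a separate cost (loading weights and processing the prompt) that this estimate does not capture.

```shell
#!/usr/bin/env bash
# Rough ceiling for memory-bound decode speed:
#   tokens/s <= memory bandwidth (GB/s) / model weight size (GB)
# The 4.7 GB model size is an assumed example; substitute the size Ollama
# reports for the model you pull.

# Hypothetical helper: $1 = bandwidth in GB/s, $2 = model size in GB
decode_ceiling_tps() {
  local bandwidth_gbs=$1 model_gb=$2
  awk -v bw="$bandwidth_gbs" -v sz="$model_gb" 'BEGIN {
    if (sz <= 0) { print "model size must be positive" > "/dev/stderr"; exit 1 }
    printf "%.1f\n", bw / sz
  }'
}

echo "Orin (204.8 GB/s), 4.7 GB model: <= $(decode_ceiling_tps 204.8 4.7) tokens/s"
```

Real throughput lands below this ceiling, but the estimate shows why smaller or more aggressively quantized models feel faster on the same hardware.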

Conclusion

The Jetson AGX Orin Development Kit offers significant performance in a compact form factor. As AI PC solutions become increasingly relevant, the Jetson platform stands out, especially when considering the TOPS limitations of NPUs integrated into new CPU releases. The Jetson AGX Orin provides a robust stepping stone for developers, particularly those building Arm-native applications, aiding in model validation and refinement.

While this is a development kit, its ease of use and ample power make it an excellent starting point for businesses embarking on their AI journey. The Jetson platform showcases the immense potential of small form factor AI solutions—elegantly designed, extremely power-efficient, and capable of delivering 275 TOPS of AI performance. This combination makes the Jetson platform comparable to much larger, rack-mounted AI servers.

NVIDIA’s comprehensive guides simplify the process of flashing and deploying a variety of AI models, with Generative AI being just one piece of the puzzle. For businesses ready to develop and deploy AI, the Jetson AGX Orin Development Kit offers a perfect blend of power efficiency, small footprint, and outstanding AI performance, making it an ideal choice for exploring and implementing AI technologies.


Engage with StorageReview

Newsletter | YouTube | Podcast iTunes/Spotify | Instagram | Twitter | TikTok | RSS Feed