StarWind Software is an industry leader in software-defined storage (SDS) thanks to flexible deployment modalities, overall system performance, and its eagerness to embrace emerging technologies. We saw this firsthand at the end of last year when we looked at its NVMe-oF Initiator for Windows. This time we’re looking at the StarWind SAN & NAS software, which is adding support for Fibre Channel, paired with the GRAID NVMe accelerator card, a combination that is quite ambitious for an SDS solution.

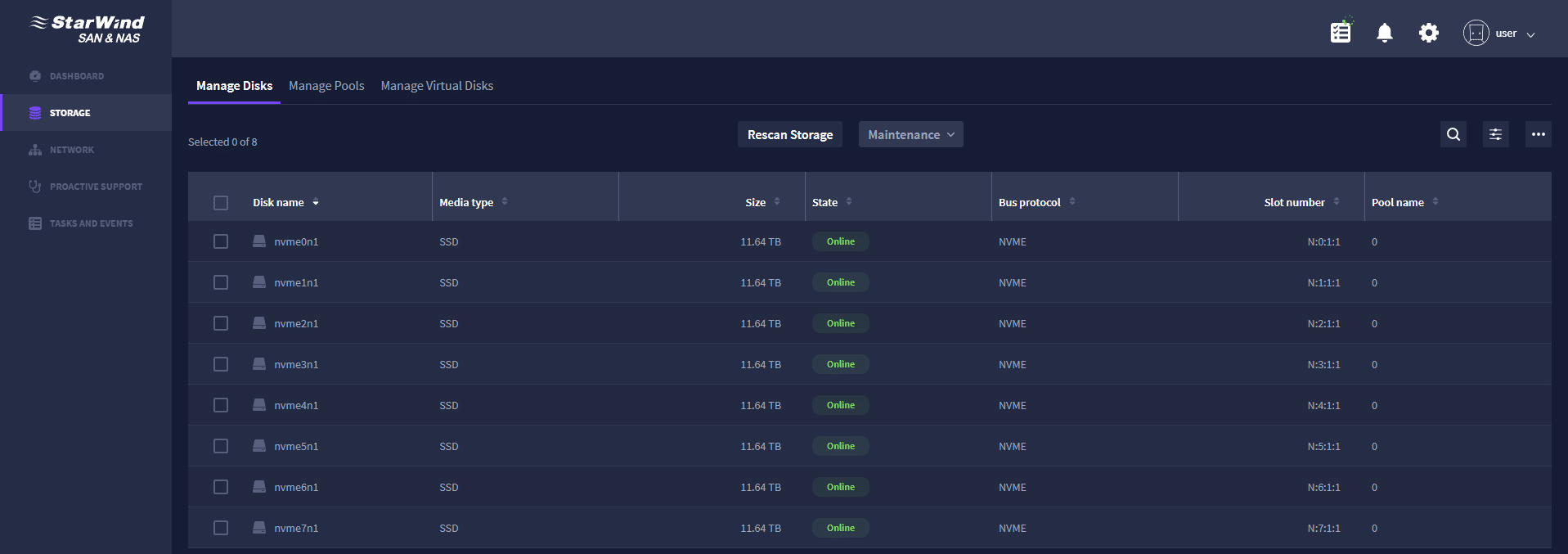

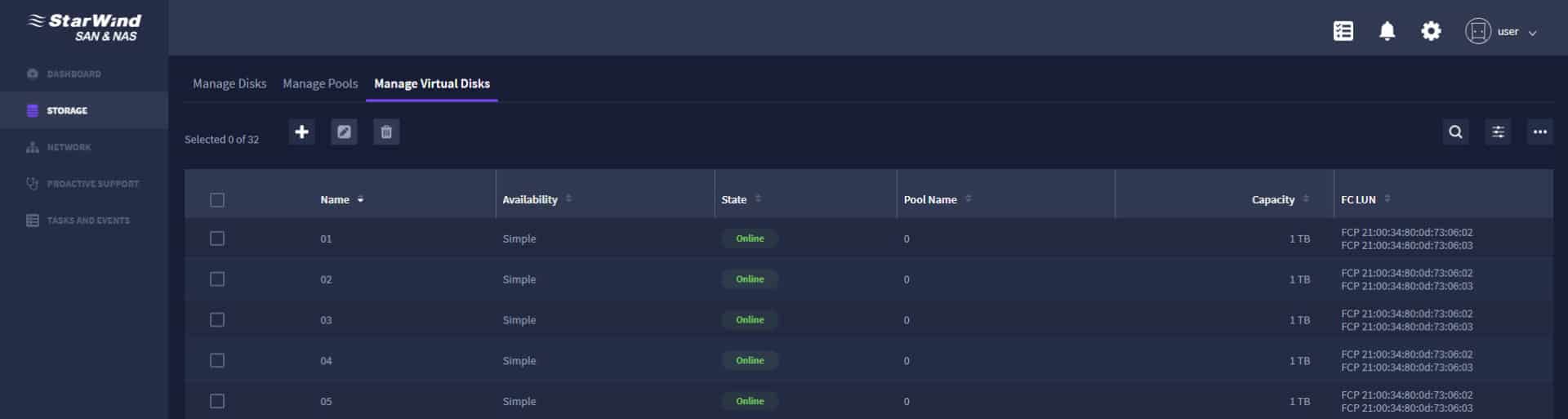

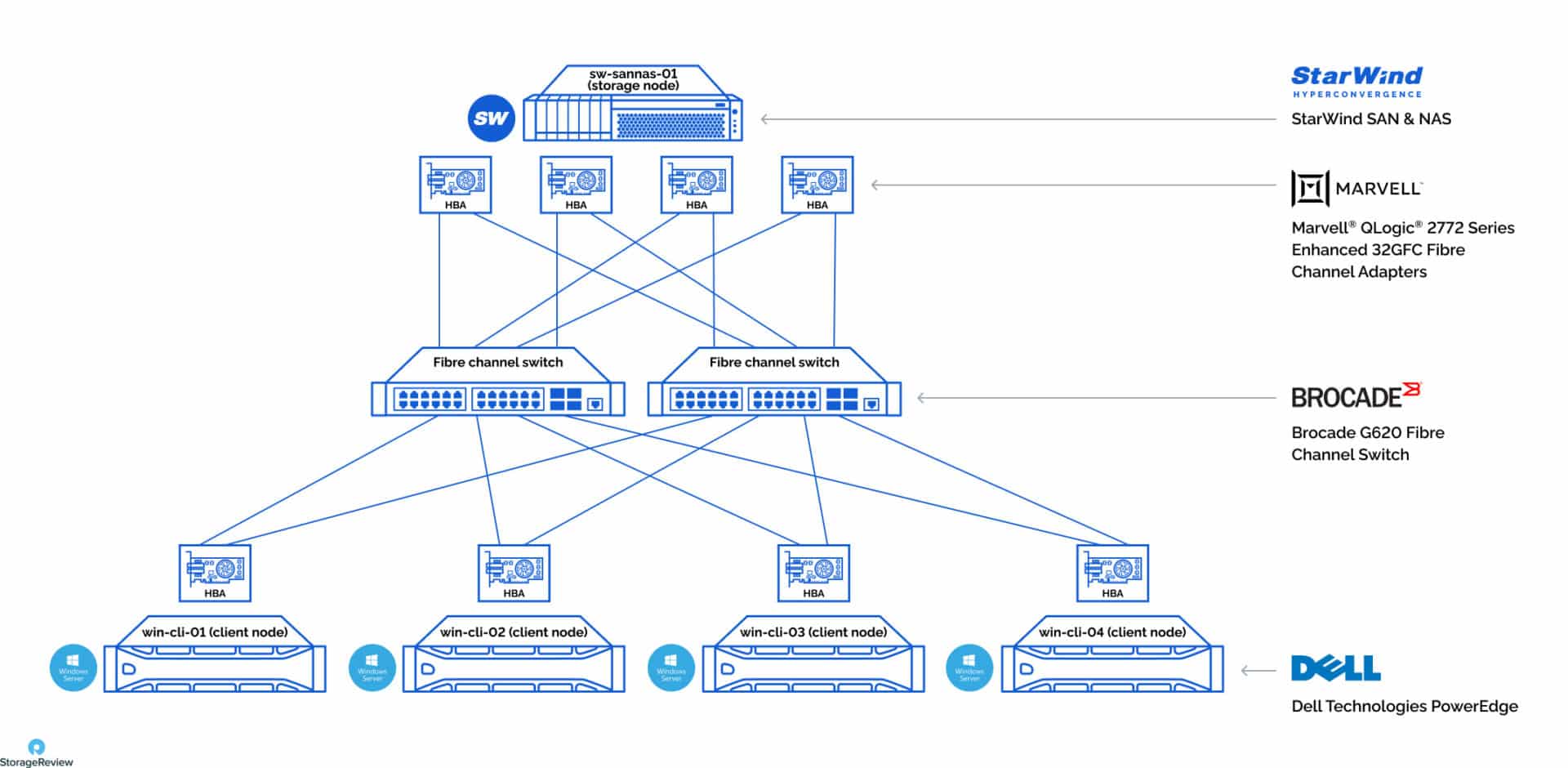

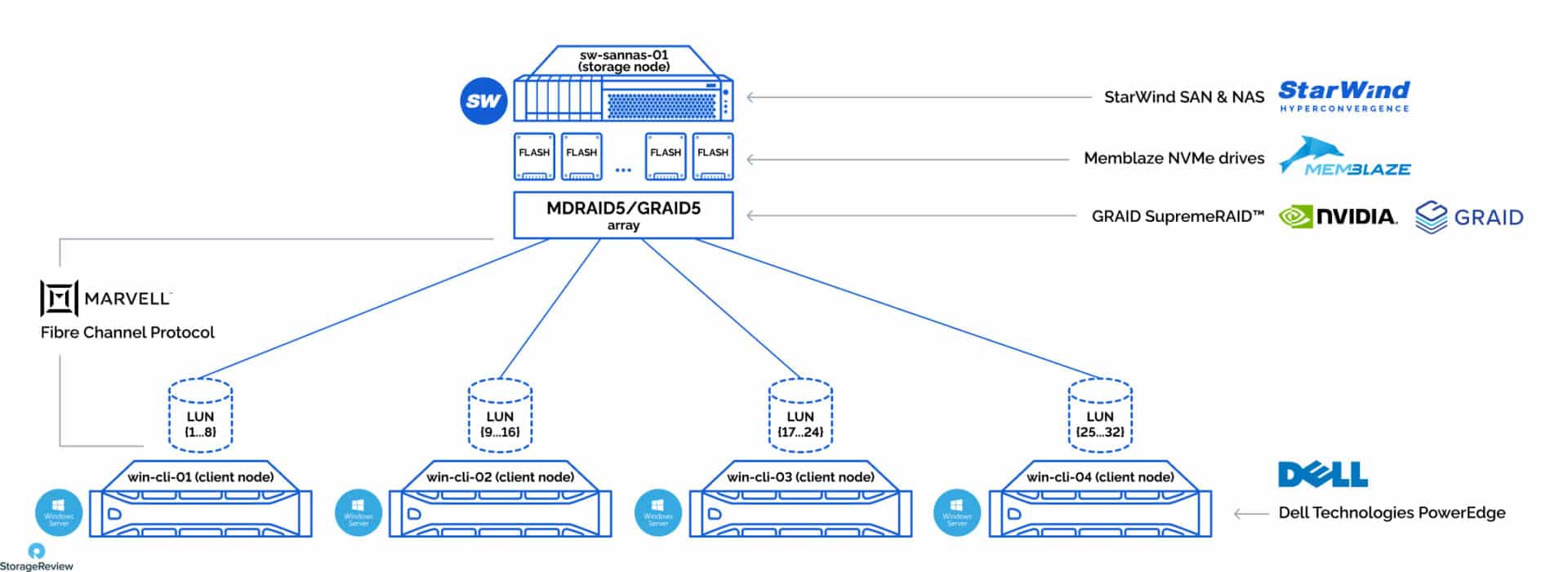

The entire testbed is detailed below, but the short story is that we took the best components available and combined them to create a robust storage platform with reliable networking and enough client nodes to generate load. StarWind ties the Memblaze NVMe SSDs together with the GRAID accelerator and shares the storage over the network via a Brocade switching fabric and Marvell QLogic 32G FC HBAs.

This is a remarkable feat for StarWind, since most SDS platforms aren’t capable of this level of engineering. Integrating Fibre Channel is no simple task, which is why most SDS solutions are based on Ethernet. That said, many organizations want the reliability and latency benefits that an FC infrastructure offers. StarWind SAN & NAS over Fibre Channel will be available soon to help these organizations take advantage of SDS architecture.

StarWind SAN & NAS

StarWind SAN & NAS is designed to turn existing hardware running an industry-standard hypervisor into high-performing storage. The solution is fully certified shared storage for VMware vSphere Hypervisor ESXi and Microsoft Hyper-V Server.

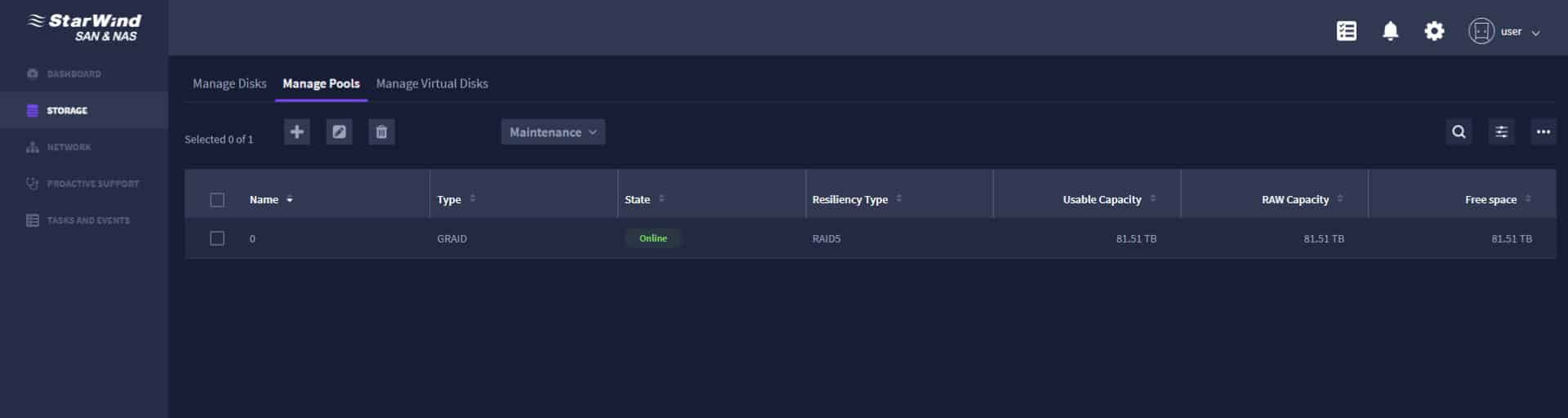

StarWind SAN & NAS supports hardware and software-based storage redundancy configurations. The solution can turn an existing server with internal storage into a redundant storage array presented as NAS or SAN, exposing standard protocols such as iSCSI, SMB, and NFS. Management and configuration options include a web-based UI, a text-based UI, a vCenter plugin, and a command-line interface for cluster-wide operations.

Shipped as a ready-to-go Linux-based virtual machine (VM) deployed onto your hypervisor of choice, Microsoft Hyper-V or VMware vSphere, the solution shares the same software-defined storage (SDS) features as StarWind VSAN and uses ZFS. StarWind SAN & NAS is easy to install thanks to its Installation Wizard and web-based storage management user interface (UI), and it increases return on investment (ROI) by repurposing aging servers.

StarWind SAN & NAS features include:

File and Block Storage: Supports all industry-standard block and file protocols, such as SMB3, NFSv3, NFSv4, NFSv4.1, iSCSI (including VVols on iSCSI), NVMe-over-Fabrics, and iSER.

Redundancy Options: Choose the preferred redundancy configuration for the local disks among ZFS, Hardware RAID, or Linux MD/RAID.

Architecture: StarWind’s NAS and SAN solution is built on Linux, ZFS, and StarWind Virtual SAN and is easily deployed as a VM on top of the hypervisor of choice, VMware ESXi or Microsoft Hyper-V.

Certified and Ready-to-Work: Easy to install and certified to work with vSphere or Hyper-V.

Partners

StarWind has an impressive list of partners, several of which were involved in this test. All StarWind products are tested with the published hardware and software, and partner vendors independently test products to ensure quality and compatibility so that the delivered solutions work. We have highlighted the vendors included in this specific set of tests.

Dell Technologies

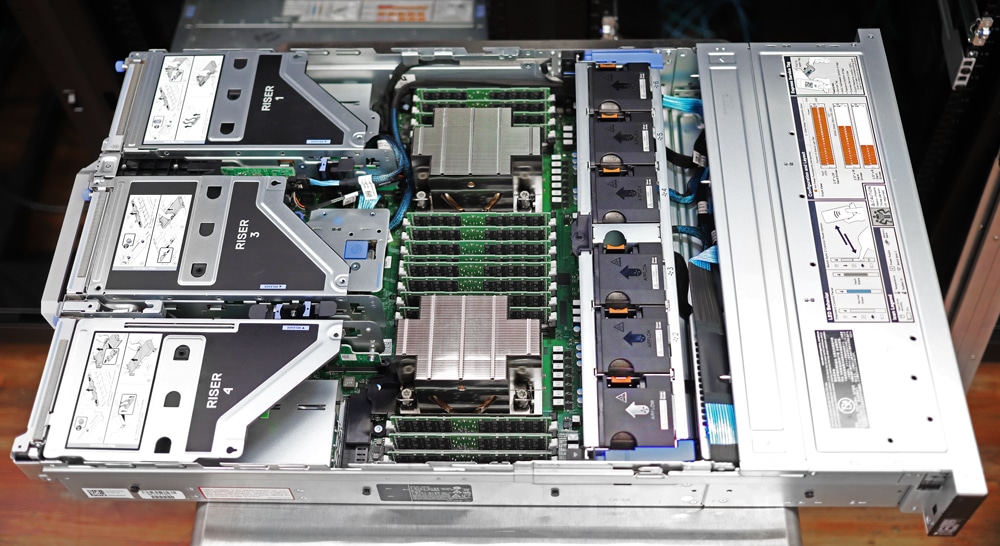

StarWind Software is a Dell Technologies Alliance Partner and is verified to deploy Dell servers as part of its turnkey virtualization solution, StarWind HyperConverged Appliance. In this test scenario, StarWind deployed a Dell PowerEdge R750 as the storage server and PowerEdge R740xd systems as the clients.

The Dell EMC PowerEdge R750 is powered by 3rd Generation Intel Xeon Scalable processors to address application performance and acceleration. The server is a dual-socket 2U rack server that supports eight memory channels per CPU and up to 32 DDR4 DIMMs at 3200 MT/s. For substantially higher throughput, the PowerEdge R750 supports PCIe Gen 4 and up to 24 NVMe drives, with improved air cooling and optional Direct Liquid Cooling to meet increasing power and thermal requirements.

The PowerEdge R740xd is a 2U, two-socket platform well suited for software-defined storage, service providers, or virtual desktop infrastructure. The R740xd supports up to 24 NVMe drives and can mix drive types to create the optimal combination of NVMe, SSD, and HDD for performance, capacity, or both. The R740xd is the platform of choice for software-defined storage and is the foundation for VSAN and the PowerEdge XC.

GRAID Technology

GRAID SupremeRAID is designed for modern software-composable environments. GRAID Technology is delivering a future-proof RAID card that protects not only direct-attached flash storage but also flash storage connected via NVMe over Fabrics.

The SupremeRAID SR-1010 is the first NVMe and NVMe-oF RAID card to unlock the full potential of SSD performance. The SupremeRAID card processes I/O directly, relieving the CPU of this duty. Because the card is GPU-based, it has tremendous computational power that standard RAID cards lack.

The SupremeRAID SR-1010 is feature-rich, offering compression, encryption, and thin provisioning. Installation is as easy as plug & play and does not require cabling or reworking the motherboard layout.

Memblaze

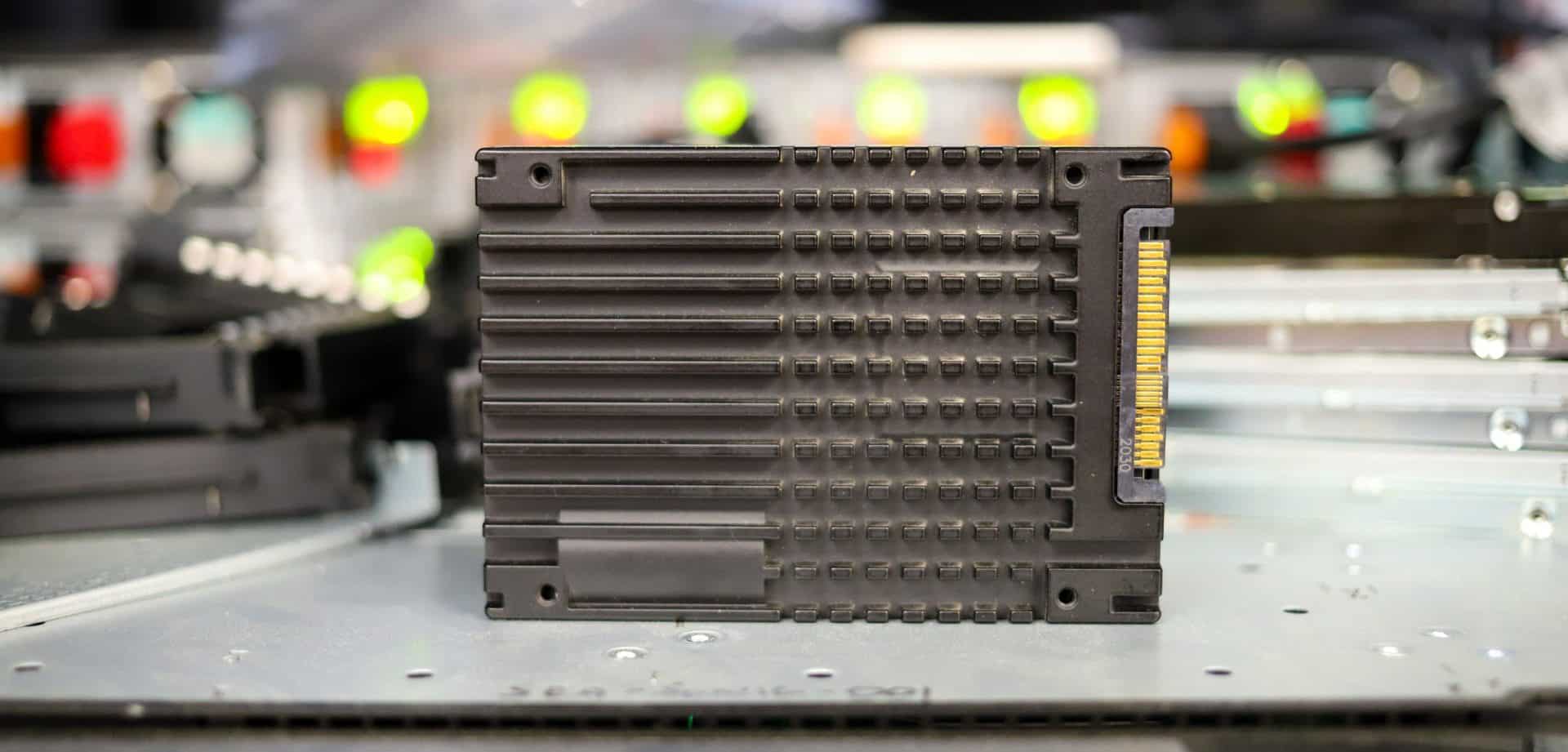

Memblaze is a leading provider of enterprise-class NVMe SSD products. Founded in 2011, Memblaze was one of the first companies worldwide to develop enterprise-class SSDs. Its PBlaze series of enterprise SSDs has been widely used in databases, virtualization, cloud computing, big data, artificial intelligence, and other fields, providing stable, reliable high-speed storage for customers in industries such as internet services, cloud services, finance, and telecommunications.

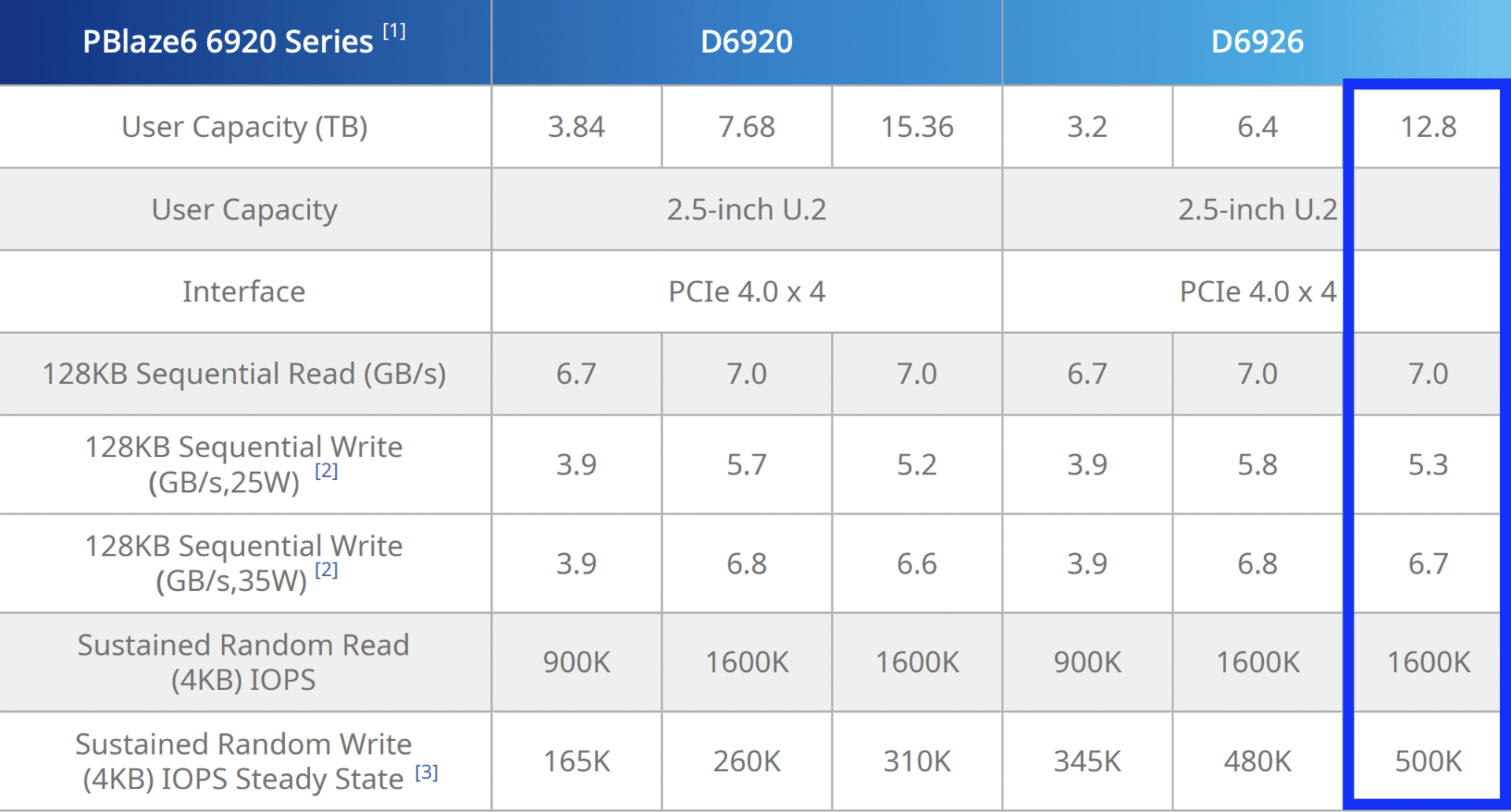

Memblaze PBlaze6 6920 Series SSDs offer consistent performance of up to 1.6M random read IOPS, up to 7GB/s sequential read bandwidth, up to 6.8GB/s sequential write bandwidth, and write latency as low as 11μs. The drives come in a wide range of capacities: the lower-endurance models are available in 3.84TB, 7.68TB, and 15.36TB, and the higher-endurance models in 3.2TB, 6.4TB, and 12.8TB.

Marvell QLogic

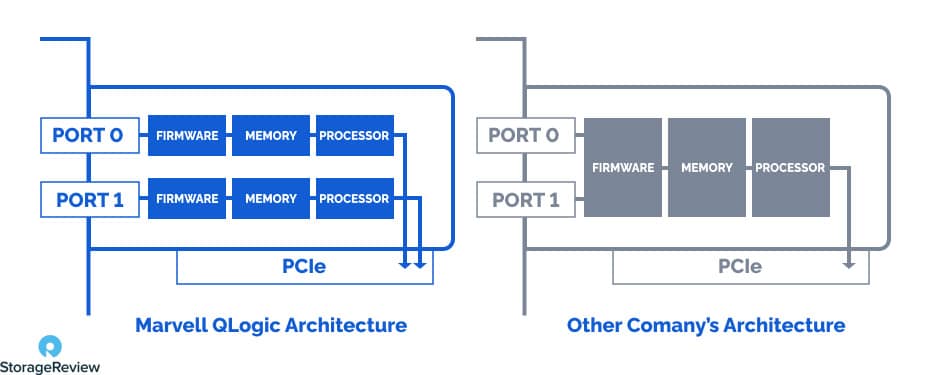

QLogic is a global provider of high-performance networking, delivering adapters, switches, and ASICs for data, storage and server networks. The company offers a diverse portfolio of networking products, including Converged Network Adapters for FCoE, Ethernet adapters, Fibre Channel adapters and switches, and iSCSI adapters.

The QLogic 2772 adapters support low-latency access to scale-out NVMe with full support for the FC-NVMe protocol. They can simultaneously carry FC-NVMe and FCP-SCSI storage traffic on the same physical port, enabling customers to migrate to NVMe at their own pace. The adapters bring the best of both worlds, offering up to 2 million IOPS and line-rate 32GFC performance while delivering low-latency access to NVMe and SCSI storage over a Fibre Channel network.

SAN & NAS Testbed Detail

The testbed for this work consists of the StarWind SAN & NAS storage node with eight Memblaze PBlaze6 6926 12.8TB SSDs and the GRAID SupremeRAID SR-1010 accelerator card. The fabric consists of Brocade G620 32G Fibre Channel switching and Marvell QLogic 2772 32G FC HBAs. Load generation took place on four client nodes. The storage node is based on a Dell PowerEdge R750 server, and the client nodes are Dell PowerEdge R740xd servers. The details are listed in the tables below.

| Storage Node | |
|---|---|
| Server | Dell PowerEdge R750 |
| CPU | Intel® Xeon® Platinum 8380 CPU @ 2.30GHz |
| Sockets | 2 |
| Cores/Threads | 80/160 |
| DRAM | 1,024GB |
| Storage | 8x Memblaze PBlaze6 6926 12.8TB |
| Accelerator Card | GRAID SupremeRAID SR-1010 |
| HBAs | 4x Marvell QLogic 2772 Series Enhanced 32GFC Fibre Channel Adapters |
| StarWind SAN & NAS Software | Version 1.0.2 (Build 2175 – FC) |

| Client Node | |
|---|---|
| Server | Dell PowerEdge R740xd |
| CPU | Intel® Xeon® Gold 6130 CPU @ 2.10GHz |
| Sockets | 2 |
| Cores/Threads | 32/64 |
| DRAM | 256GB |
| HBA | 1x Marvell® QLogic® 2772 Series Enhanced 32GFC Fibre Channel Adapter |
| OS | Windows Server 2019 Standard Edition |

StarWind SAN & NAS Performance Test Results

With so many moving parts, performance testing was divided into local tests and remote tests over Fibre Channel. The first goal was to show the capabilities and performance of the underlying Memblaze NVMe storage and the benefits of GRAID’s HW RAID versus SW RAID (Linux MD RAID).

The second step was to compare the performance of each over 32Gb FC using Marvell QLogic HBAs, again with GRAID HW RAID versus SW RAID. Benchmarks were run with the Flexible I/O tester (fio). Fio is a cross-platform tool for benchmarking, stress testing, and hardware verification, and it is considered an industry standard for testing local and shared storage.

Testing patterns:

- 4k Random 100% Read/100% Write

- 4k Random Mixed Read/Write 70/30

- 1MB Sequential 100% Read/100% Write

Test duration:

- Single test duration = 600 seconds

- Before each write benchmark, the storage was first warmed up for 2 hours
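The test plan above maps naturally onto fio job parameters. The sketch below is a minimal one, not the job files used for this review (those were not published): it assumes the `libaio` engine on the Linux storage node, a hypothetical target device `/dev/md0`, and a hypothetical thread/queue sweep, and it builds one fio command line per pattern and thread/queue combination. It does not model the 2-hour warm-up.

```python
import shlex

# Test patterns from the plan: (fio rw mode, block size, read percentage for mixed runs)
PATTERNS = [
    ("randread", "4k", None),
    ("randwrite", "4k", None),
    ("randrw", "4k", 70),   # 70/30 random read/write mix
    ("read", "1m", None),   # 1MB sequential read
    ("write", "1m", None),  # 1MB sequential write
]

def build_fio_commands(target, thread_queue_pairs, runtime_s=600, ioengine="libaio"):
    """Return one fio argument list per (pattern, threads, queue depth) combination."""
    commands = []
    for rw, bs, read_pct in PATTERNS:
        for threads, qd in thread_queue_pairs:
            args = [
                "fio",
                f"--name={rw}-{bs}-{threads}t{qd}q",
                f"--filename={target}",
                f"--rw={rw}",
                f"--bs={bs}",
                f"--ioengine={ioengine}",
                "--direct=1",          # bypass the page cache
                f"--numjobs={threads}",
                f"--iodepth={qd}",
                f"--runtime={runtime_s}",
                "--time_based",        # keep running for the full duration
                "--group_reporting",   # report the jobs as one aggregate
            ]
            if read_pct is not None:
                args.append(f"--rwmixread={read_pct}")
            commands.append(args)
    return commands

if __name__ == "__main__":
    # Hypothetical target device and thread/queue sweep
    for cmd in build_fio_commands("/dev/md0", [(4, 4), (4, 8), (8, 16)]):
        print(shlex.join(cmd))
```

For the Windows client nodes, fio’s `windowsaio` engine would take the place of `libaio`, and the target would be a Windows device path rather than `/dev/md0`.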

Testing stages:

- Confirming single NVMe drive performance to get reference numbers

- Testing MDRAID and GRAID RAID5 array performance locally

- Running benchmarks remotely from the client nodes
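Both arrays under test are RAID5 sets built from the eight 12.8TB drives. As a quick worked example, RAID5 gives up one drive’s worth of capacity to distributed parity. This assumes decimal TB as marketed and ignores filesystem, ZFS, or controller overhead, none of which the article quantifies:

```python
def raid5_usable_tb(drive_count: int, drive_tb: float) -> float:
    """Usable RAID5 capacity: distributed parity consumes one drive's worth of space."""
    if drive_count < 3:
        raise ValueError("RAID5 needs at least three drives")
    return (drive_count - 1) * drive_tb

DRIVES = 8
DRIVE_TB = 12.8  # Memblaze PBlaze6 6926, decimal TB as marketed

raw_tb = DRIVES * DRIVE_TB
usable_tb = raid5_usable_tb(DRIVES, DRIVE_TB)

if __name__ == "__main__":
    print(f"Raw: {raw_tb:.1f} TB, usable (RAID5): {usable_tb:.1f} TB "
          f"({usable_tb / raw_tb:.1%} of raw)")
```

With eight drives, parity costs one eighth of the raw space, so roughly 89.6TB of the 102.4TB remains usable before any software overhead.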

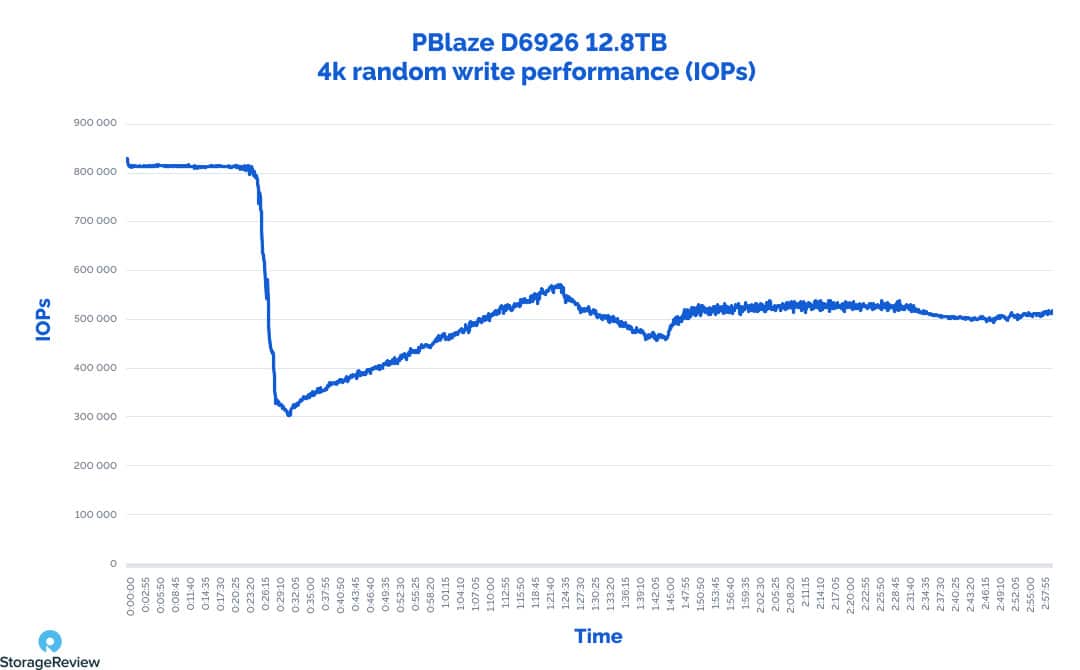

During the initial preparation for the array testing, the individual Memblaze PBlaze6 D6926 12.8TB SSDs were checked for baseline performance figures, both to compare against spec sheet values and to verify how long each SSD took to reach steady-state performance. In this testing stage, we measured 4K random performance of 1.5M IOPS read and 537k IOPS write, with each drive requiring about 2 hours to reach steady state. Increasing the block size to 64K with a random workload, each SSD measured 6.5GB/s read and 2.6GB/s write. Finally, with a 1MB transfer size and a sequential workload, each SSD measured 6.6GB/s read and 5.4GB/s write.

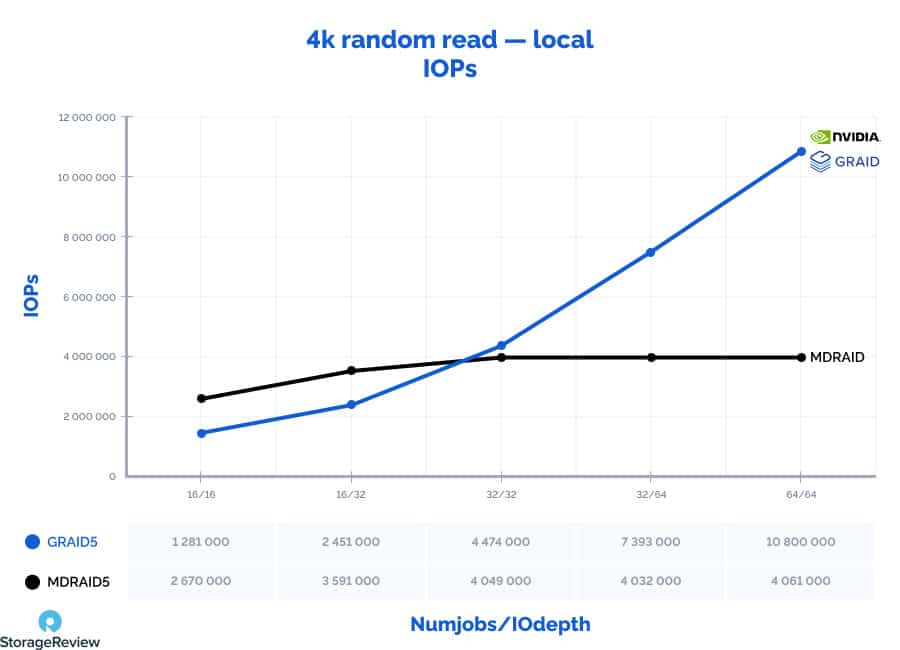

Local performance was measured across eight Memblaze D6926 12.8TB SSDs. In 4K random read, GRAID’s HW RAID showed a massive advantage: while SW RAID had a slight lead at lower queue and thread counts, it capped out at 4M IOPS, compared to 10.8M IOPS for GRAID. GRAID also put dramatically less load on the host CPU than SW RAID. At low queue/thread counts, CPU utilization measured 3% to 7%, and at its peak GRAID used 25% versus 40% for SW RAID.
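One way to read these numbers is as scaling efficiency against the single-drive baseline. Because RAID5 distributes parity across all members, random reads on a healthy array can be served by all eight drives, so an idealized ceiling is simply drives × per-drive IOPS (8 × 1.5M = 12M). The sketch below assumes that linear ceiling and uses only the figures quoted above:

```python
SINGLE_DRIVE_4K_READ_IOPS = 1_500_000  # measured single-drive 4K random read baseline
DRIVES = 8

def scaling_efficiency(array_iops: float, per_drive_iops: float, drives: int) -> float:
    """Fraction of the idealized linear ceiling (drives x per-drive IOPS) an array delivered."""
    return array_iops / (per_drive_iops * drives)

# Peak local 4K random read results quoted in the article
PEAKS = {"SW RAID (MD)": 4_000_000, "GRAID": 10_800_000}

if __name__ == "__main__":
    ceiling = SINGLE_DRIVE_4K_READ_IOPS * DRIVES
    print(f"Idealized ceiling: {ceiling / 1e6:.1f}M IOPS")
    for label, iops in PEAKS.items():
        eff = scaling_efficiency(iops, SINGLE_DRIVE_4K_READ_IOPS, DRIVES)
        print(f"{label}: {eff:.0%} of the ceiling")
```

With these inputs, GRAID reaches about 90% of the idealized ceiling, while SW RAID reaches about 33%.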

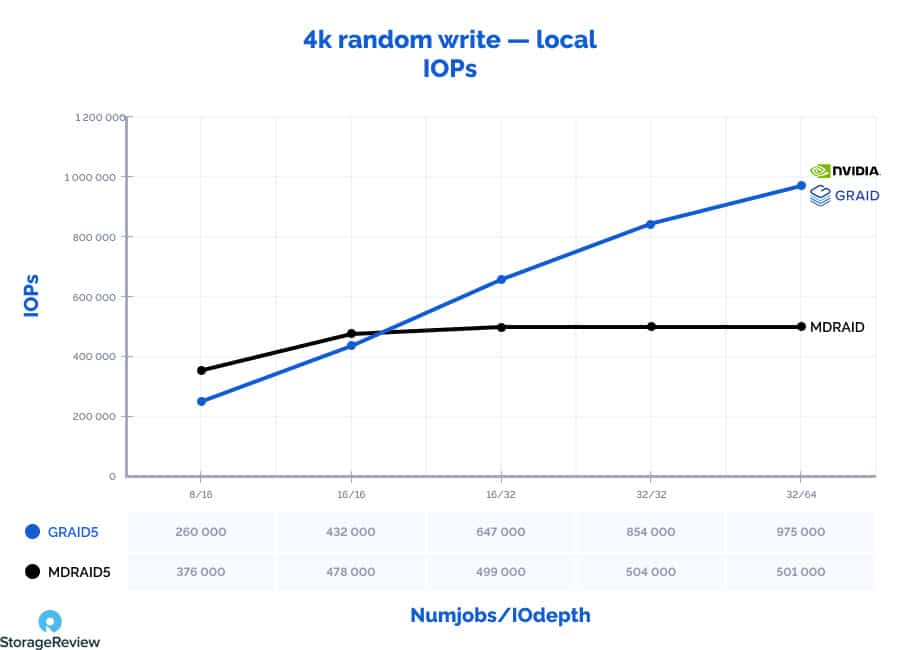

The 4K random write test gave SW RAID a slight edge at low queue and thread counts, but GRAID HW RAID quickly surpassed it as the workload scaled up. SW RAID scaled from 376k IOPS to 501k IOPS, while GRAID HW RAID scaled from 260k IOPS to 975k IOPS. Note that GRAID’s performance can increase to 1.5M IOPS write with a full Gen4 x16 slot for the GPU; in our Dell PowerEdge R750 configuration, the GPU sat in a Gen4 x8 slot, which held it back slightly. During this test, CPU utilization for SW RAID scaled from 8% to 21%, whereas HW RAID increased only from 1% to 3%.
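Random writes are where RAID5 pays a price: in the textbook read-modify-write path, each small host write costs two drive reads (old data, old parity) and two drive writes (new data, new parity). The sketch below turns the single-drive baseline into two rough ceilings under that assumption: the classic rule of thumb that divides aggregate write IOPS by a penalty of 4, and a slightly finer variant that charges the two reads at read speed and treats drive busy time as additive. Both are idealized models of our own, not a description of how GRAID or MD RAID actually schedule I/O, and they ignore caching, full-stripe writes, and SSD mixed-workload interference.

```python
PER_DRIVE_READ_IOPS = 1_500_000  # single-drive 4K random read baseline
PER_DRIVE_WRITE_IOPS = 537_000   # single-drive 4K random write baseline (steady state)
DRIVES = 8

def raid5_small_write_ceiling(drives: int, read_iops: float, write_iops: float,
                              reads_per_write: int = 2, writes_per_write: int = 2) -> float:
    """Idealized host 4K random write IOPS for RAID5 read-modify-write.

    Assumes each host write costs `reads_per_write` drive reads plus
    `writes_per_write` drive writes, spread evenly over all drives, and that
    drive busy time for reads and writes simply adds up (an assumption;
    real SSDs under mixed load usually do worse).
    """
    drive_seconds_per_host_write = reads_per_write / read_iops + writes_per_write / write_iops
    return drives / drive_seconds_per_host_write

# Classic rule of thumb: all four back-end I/Os costed at write speed (penalty of 4)
rule_of_thumb = DRIVES * PER_DRIVE_WRITE_IOPS / 4
# Finer variant: the two back-end reads costed at read speed
finer = raid5_small_write_ceiling(DRIVES, PER_DRIVE_READ_IOPS, PER_DRIVE_WRITE_IOPS)

if __name__ == "__main__":
    print(f"Rule-of-thumb ceiling:     {rule_of_thumb / 1e6:.2f}M IOPS")
    print(f"Read/write-costed ceiling: {finer / 1e6:.2f}M IOPS")
```

Under these assumptions the two ceilings land at roughly 1.07M and 1.58M IOPS. They are back-of-envelope bounds, not an explanation of the measured results.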

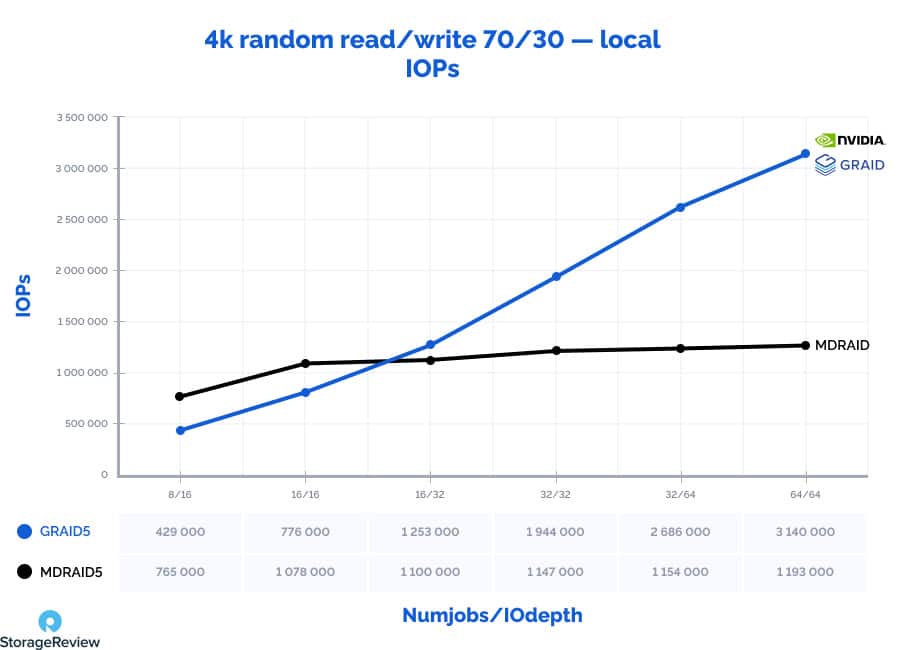

In a 70/30 random read/write mix with a 4K transfer size, the GRAID configuration pulled ahead as the workload scaled up. SW RAID scaled from 765k IOPS to 1.2M IOPS, compared to HW RAID, which measured from 429k IOPS up to 3.14M IOPS. CPU utilization was dramatically lower for HW RAID: SW RAID measured between 5% and 49%, while GRAID measured from 1% to 8%.

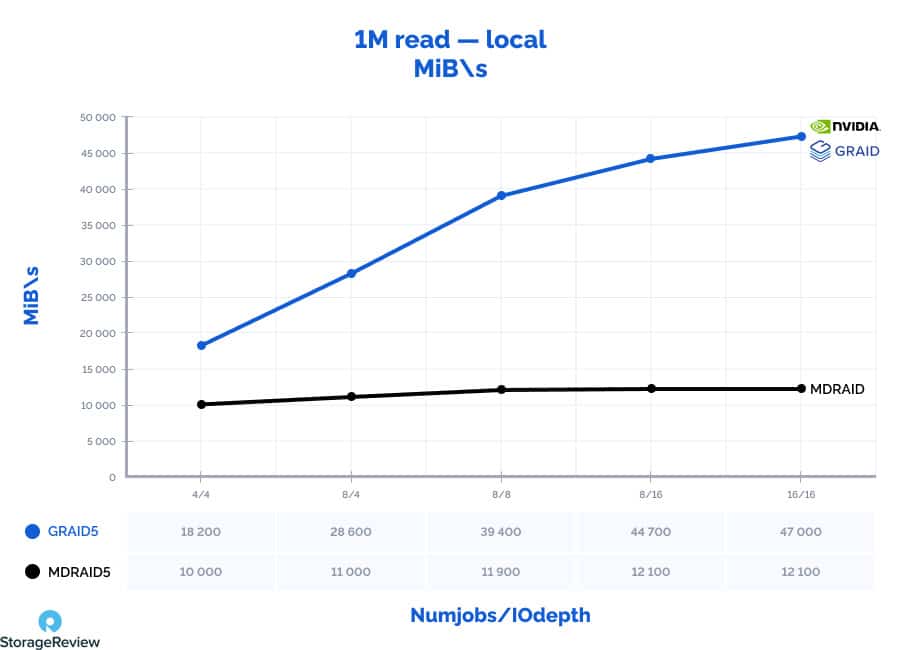

To measure large-block sequential read bandwidth, the block size was increased to 1MB. GRAID was the clear standout across the workload, ranging from 18.2GB/s to 47GB/s, compared to SW RAID, which started at 10GB/s and scaled up to 12.1GB/s. CPU utilization throughout this test ranged between 3% and 10% with SW RAID and 0% to 1% with HW RAID.

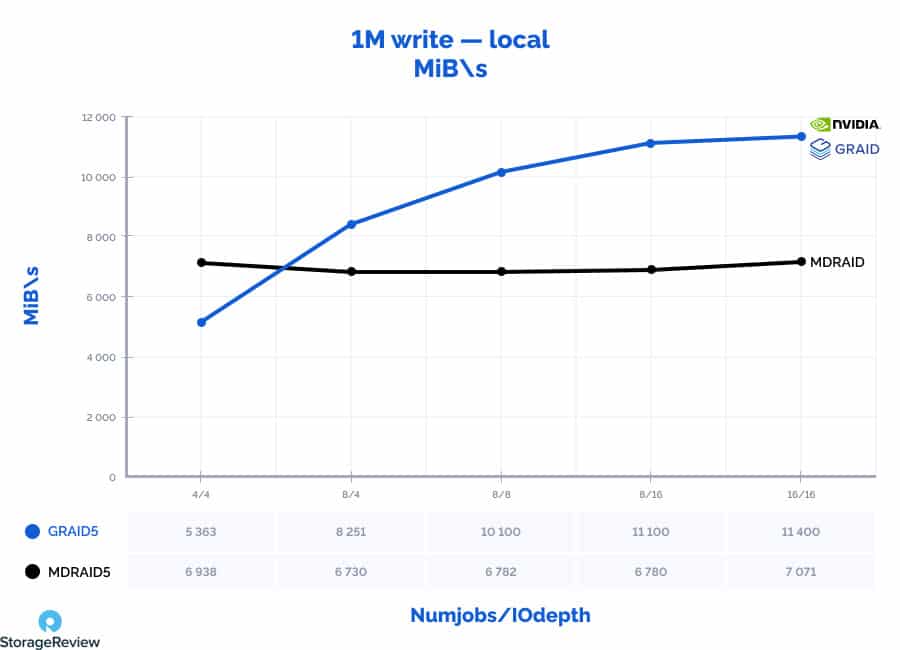

The last local benchmark focused on large-block sequential write performance, where SW RAID had a slight edge at 4T/4Q before being quickly surpassed by GRAID. Here SW RAID measured 6.9GB/s to 7.1GB/s, whereas GRAID ramped from 6.4GB/s to 11.4GB/s. CPU utilization with SW RAID scaled from 9% to 17%, while HW RAID measured 1% to 3%.

With the local performance baseline captured from both a single SSD and eight SSDs in SW RAID5 and GRAID HW RAID5, the next step was FCP testing over 32Gb FC. The essential takeaways from the local tests were how much GRAID HW RAID improved overall performance as the workload increased, and how well it kept CPU utilization down.

The FCP tests used four Dell PowerEdge R740xd client nodes running Windows, each connected to two 32Gb FC switches. Each client used the same 32Gb Marvell QLogic HBA as the storage node, whose eight 32Gb FC ports give a total theoretical bandwidth of 25.6GB/s.

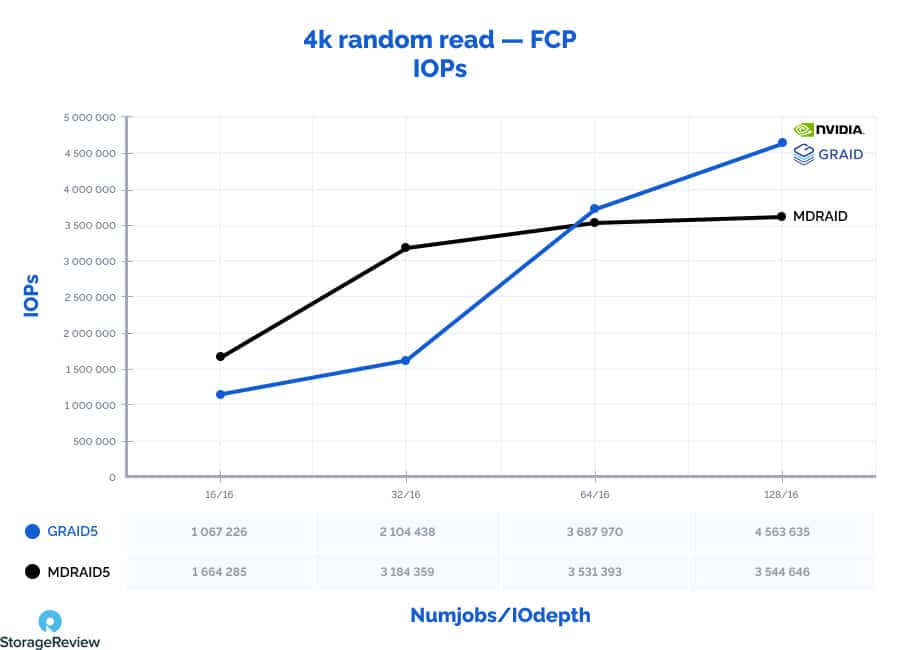

With the four Dell PowerEdge R740xd load generators attached to the StarWind SAN & NAS server, we start by looking at aggregate 4K random read performance over the wire: SW RAID scaled from 1.66M IOPS to 3.5M IOPS, and GRAID from 1.1M IOPS to 4.6M IOPS.

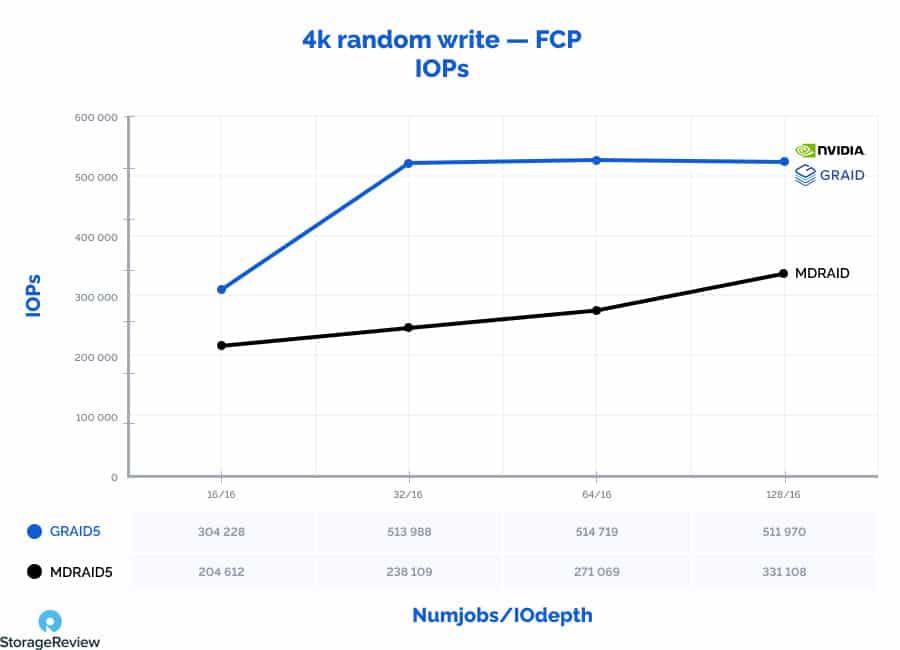

Moving to 4K random write, SW RAID scaled from 204k IOPS to 385k IOPS. HW RAID in the backend offered significant gains, with GRAID scaling from 304k IOPS to 498k IOPS at its peak.

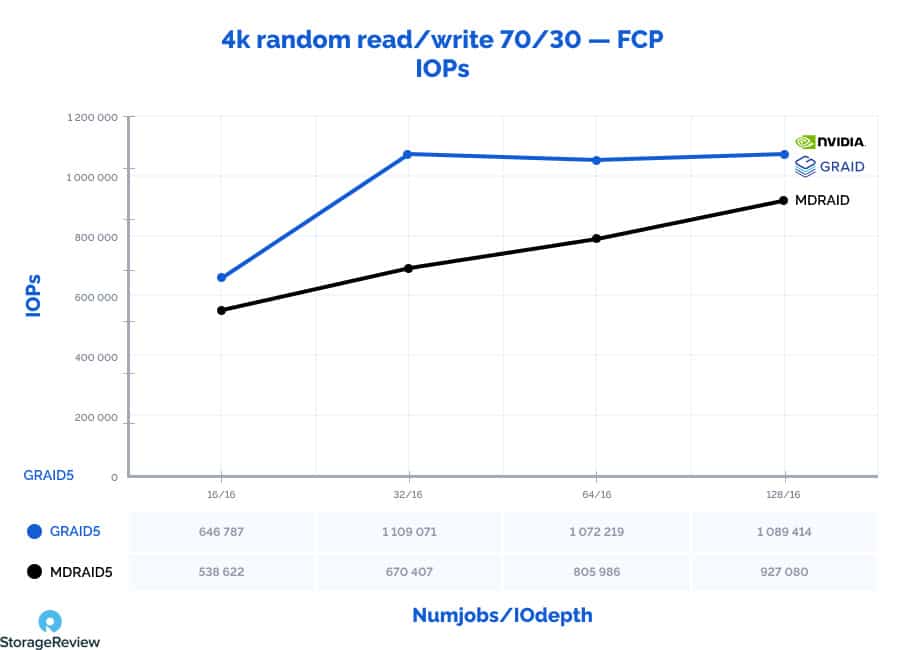

With a random 4K 70/30 mixed workload, the HW RAID configuration served more I/O than SW RAID alone. SW RAID scaled from 538k IOPS to 998k IOPS, while HW RAID scaled from 647k IOPS to 1.1M IOPS.

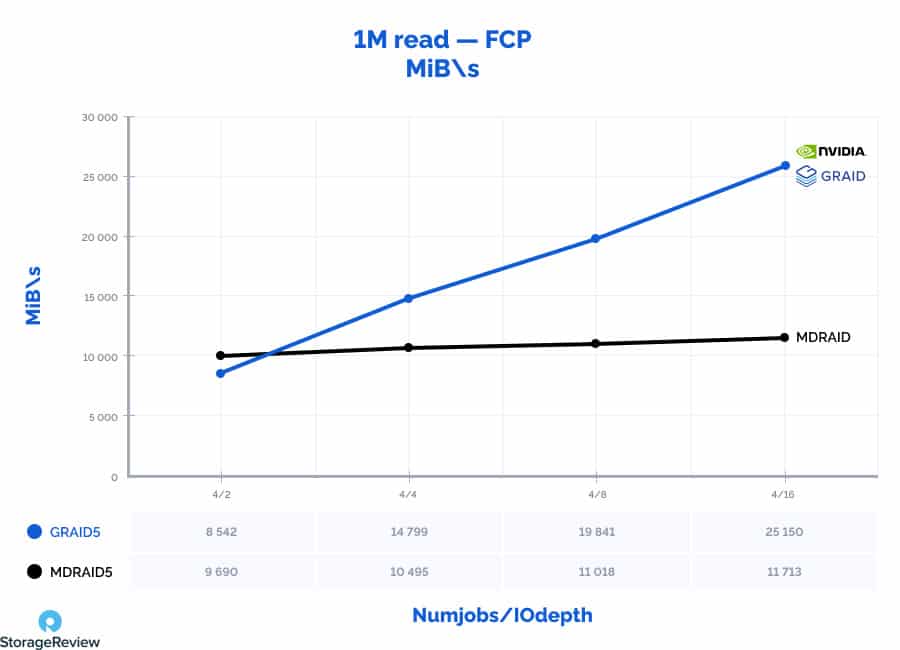

Returning to large-block transfers to measure peak bandwidth from the StarWind SAN & NAS array to the four clients, GRAID effectively saturated the eight 32Gb FC ports. SW RAID scaled from 9.7GB/s to 11.7GB/s, whereas HW RAID pushed out 8.5GB/s at the low end and 25.2GB/s at its peak, close to the theoretical maximum of 25.6GB/s across eight 32Gb ports.
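The 25.6GB/s ceiling corresponds to eight ports at the nominal 32GFC throughput of 3.2GB/s per port, per direction. The sketch below is a minimal calculation assuming that nominal figure, decimal GB/s, and 4,096-byte 4K blocks. It expresses the remote peaks as a fraction of the ceiling, and converting the 4K random read peak into bytes shows it sits well below the link limit:

```python
FC32_NOMINAL_GBPS = 3.2  # nominal 32GFC throughput per port, per direction (GB/s)
PORTS = 8                # storage-node FC ports used in the test

def iops_to_gbps(iops: float, block_bytes: int) -> float:
    """Convert an IOPS figure at a fixed block size into decimal GB/s."""
    return iops * block_bytes / 1e9

def link_utilization(gbps: float, ports: int = PORTS,
                     per_port_gbps: float = FC32_NOMINAL_GBPS) -> float:
    """Fraction of the aggregate nominal FC bandwidth that a result represents."""
    return gbps / (ports * per_port_gbps)

# Remote peaks quoted in the article (GB/s); the 4K read figure is derived from IOPS
results = {
    "GRAID 4K random read": iops_to_gbps(4_600_000, 4096),
    "SW RAID 1M sequential read": 11.7,
    "GRAID 1M sequential read": 25.2,
}

if __name__ == "__main__":
    print(f"Aggregate ceiling: {PORTS * FC32_NOMINAL_GBPS:.1f} GB/s")
    for name, gbps in results.items():
        print(f"{name}: {gbps:.2f} GB/s ({link_utilization(gbps):.0%} of ceiling)")
```

By this arithmetic, the GRAID 1M read peak uses about 98% of the nominal link bandwidth, SW RAID about 46%, and the 4K random read peak (about 18.8GB/s) about 74%.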

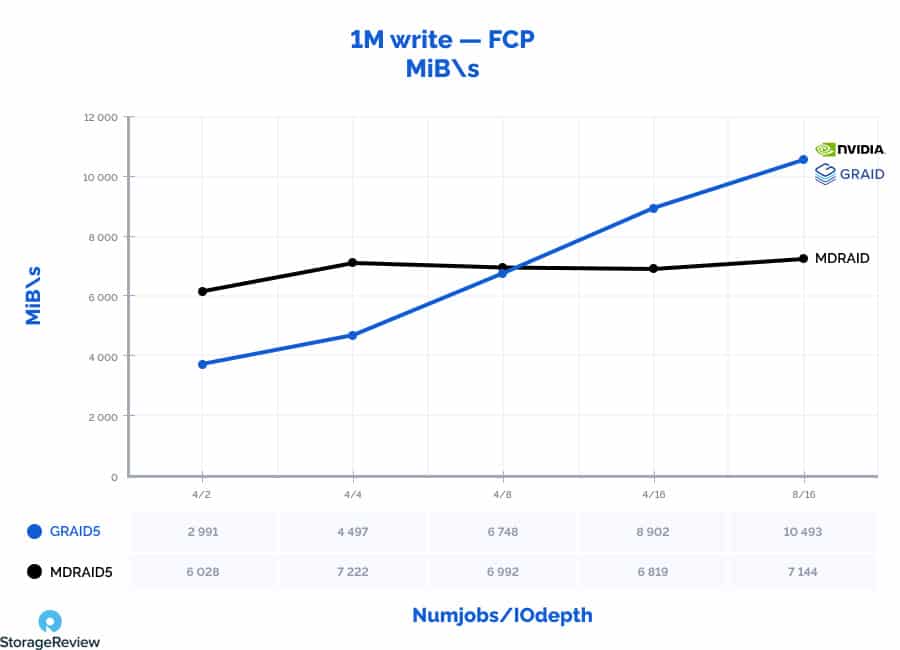

In the final test, measuring 1M sequential write bandwidth, SW RAID had a slight edge at lower thread and queue counts, reaching parity at 4T/8Q before HW RAID quickly surpassed it. SW RAID scaled from 6GB/s to 7.1GB/s, while HW RAID measured between 2.99GB/s and 10.5GB/s.

Final Thoughts

A hardware solution would be expected to outperform a software solution in a typical RAID scenario. However, when implementing a software-defined storage solution, the potential for mixed results increases. The numbers don’t lie in this case: StarWind SAN & NAS outperformed our expectations.

As mentioned above, the StarWind solution is ambitious. It incorporates FCP, NVMe SSDs, GRAID hardware, and software to pull it all together. Taking full advantage of the compute power in the GRAID SupremeRAID card, the performance of the NVMe SSDs, and the low latency and reliability of Fibre Channel, this configuration ticks all the boxes. Getting these performance numbers from a traditional hardware RAID card would be impossible without installing multiple cards in the server.

StarWind SAN & NAS takes full advantage of the GPU processing power in the GRAID card. In each test scenario, the StarWind solution met expectations. With GRAID offloading I/O processing to the GPU, CPU utilization on the storage node was 2 to 10 times lower than with SW RAID, freeing CPU resources for other tasks. With StarWind, the SW RAID configuration still delivered practically the full performance a typical RAID array would provide, but at a higher latency cost.
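To check the CPU savings against the local-test figures, the sketch below divides the peak SW RAID utilization by the peak GRAID utilization for each workload, using only the peaks quoted earlier. By this measure, the 4K random read case comes out at about 1.6x, slightly below a 2x lower bound, while the other workloads land between roughly 5.7x and 10x. Peak-to-peak comparison is crude: GRAID reached much higher throughput in these tests, so its savings per I/O are likely larger than these ratios suggest.

```python
# Peak storage-node CPU utilization (%) from the local tests: (SW RAID, GRAID)
PEAK_CPU = {
    "4K random read": (40, 25),
    "4K random write": (21, 3),
    "4K 70/30 mix": (49, 8),
    "1M sequential read": (10, 1),
    "1M sequential write": (17, 3),
}

def cpu_reduction(sw_pct: float, hw_pct: float) -> float:
    """How many times lower the GRAID peak CPU utilization is than the SW RAID peak."""
    return sw_pct / hw_pct

ratios = {name: cpu_reduction(sw, hw) for name, (sw, hw) in PEAK_CPU.items()}

if __name__ == "__main__":
    for name, (sw, hw) in PEAK_CPU.items():
        print(f"{name:20s} SW {sw:>3}%  GRAID {hw:>3}%  -> {ratios[name]:.1f}x lower")
```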

Overall, the most impressive shared storage performance came from a redundant GRAID storage array full of PBlaze6 6920 Series NVMe SSDs, with StarWind SAN & NAS on top, running over Fibre Channel to the client nodes through Marvell QLogic 2772 Fibre Channel adapters. GRAID is probably the only technology available today that can deliver this level of software-defined shared storage performance. Over the network, the GRAID build delivered around 50% of the local RAID array’s performance, with approximately the same latency as local storage.
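The "around 50%" figure can be checked against the peak numbers reported in this article. The sketch below divides each remote (FC) GRAID peak by the corresponding local GRAID peak. It is a rough comparison: the peaks may come from different thread/queue points, and the remote sequential read is capped by the FC links.

```python
from statistics import median

# GRAID peaks quoted in this article: (local, remote over 32Gb FC), same units per row
GRAID_PEAKS = {
    "4K random read (IOPS)": (10_800_000, 4_600_000),
    "4K random write (IOPS)": (975_000, 498_000),
    "4K 70/30 mix (IOPS)": (3_140_000, 1_100_000),
    "1M sequential read (GB/s)": (47.0, 25.2),
    "1M sequential write (GB/s)": (11.4, 10.5),
}

def remote_to_local(peaks):
    """Ratio of remote peak to local peak for each workload."""
    return {name: remote / local for name, (local, remote) in peaks.items()}

ratios = remote_to_local(GRAID_PEAKS)

if __name__ == "__main__":
    for name, ratio in ratios.items():
        print(f"{name:28s} {ratio:.0%}")
    print(f"Median: {median(ratios.values()):.0%}")
```

With these inputs, the ratios range from about 35% (4K mix) to about 92% (sequential write), with a median of about 51%.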

StarWind SAN & NAS makes it possible to realize GRAID’s full performance potential. NVMe-oF and RDMA support will be included in subsequent builds.
