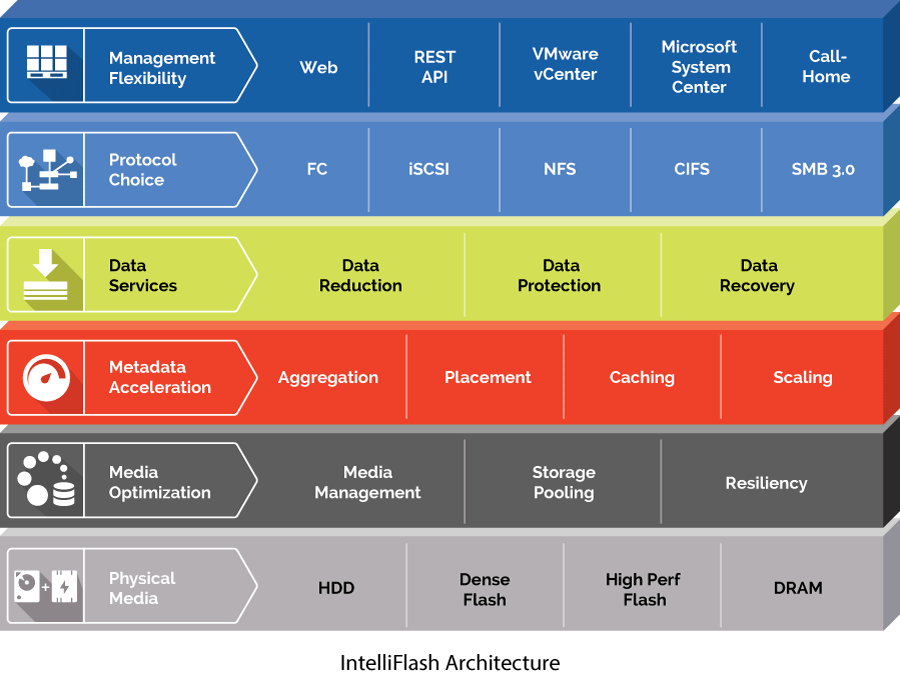

Tegile’s IntelliFlash arrays are built on a hybrid architecture that gives customers the flexibility to configure their array with a mix of HDD and SSD storage, with latencies and storage density appropriate for individual use cases. A key differentiator for Tegile in the crowded storage market, and one that many of its competitors don't offer, is inline deduplication and compression, which can greatly extend the capacity available to users. By extending data reduction to disk, where most in the market cannot, Tegile can tout very impressive cost/TB metrics while retaining the benefits of flash for hot data. Our review of the Tegile HA2300 array deploys IntelliFlash in conjunction with one expansion shelf (scale-up), putting both controllers to work through a wide range of workloads.

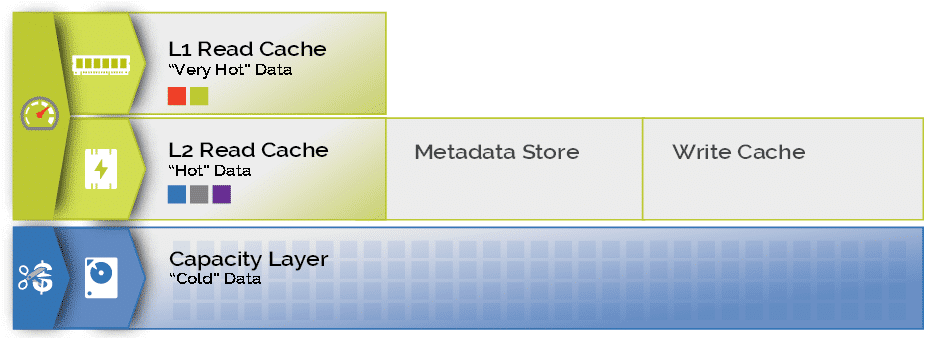

Like other members of Tegile’s Intelligent Flash Array portfolio, the HA2300 features redundant active/active controllers and can be deployed with the amount of eMLC SSD storage chosen by the customer. That flash storage and the array’s DRAM, along with any HDD storage that has been incorporated, are leveraged by Tegile’s adaptive Caching and Scaling Engine, which caches frequently-accessed data to DRAM and high-performance flash storage while less frequently-accessed data remains on high-density flash or hard drive tiers.

The headlining features that make Tegile’s arrays distinctive in the marketplace are the company’s deduplication and compression engines. Deduplicating and compressing all data inherently has some overhead impact on performance, but IntelliFlash makes use of a proprietary approach to metadata management that is designed in part to mitigate this performance overhead. Rather than interleaving data and metadata, Tegile arrays avoid metadata fragmentation by storing metadata in dedicated DRAM and flash media, which also allows the array to access metadata using dedicated high-speed retrieval paths.

Tegile’s Caching and Scaling engine is the means by which hybrid configurations can incorporate media with differing latencies. This caching solution uses mirrored DRAM and SSDs to establish two "read cache" tiers for frequently-accessed data and also tracks the wear level of flash storage media in order to make eMLC wear more uniform throughout the array.

The Intelligent Flash Array family supports access via the FC, iSCSI, NFS, and CIFS protocols, with SMB 3.0 functionality expected via a future software update. All protocols can be used simultaneously across all compatible ports due to IntelliFlash’s redundant media fabric design, which consolidates connectivity across both controllers. All writes are synchronous, with new data beginning its lifecycle in a persistent flash write cache.

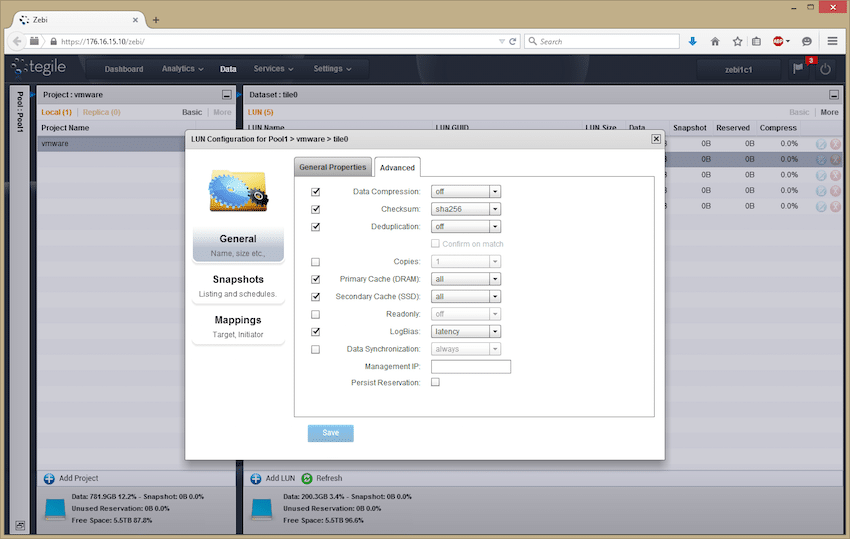

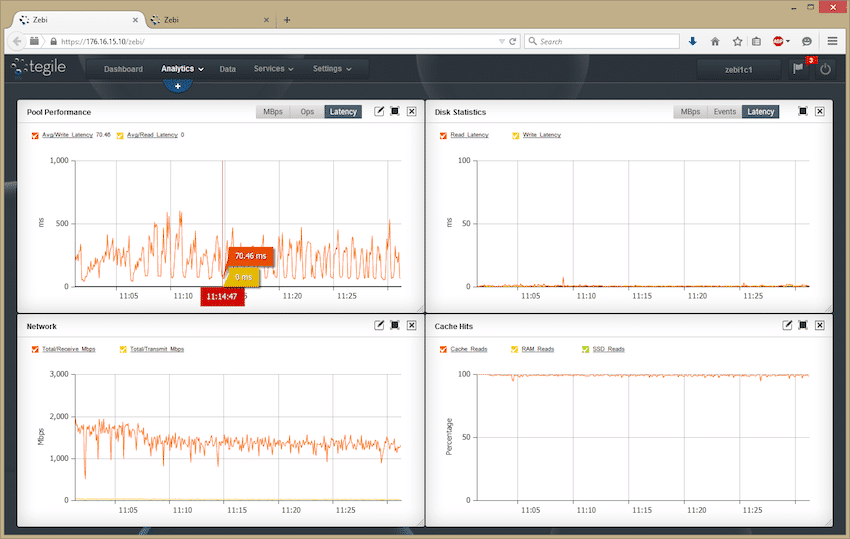

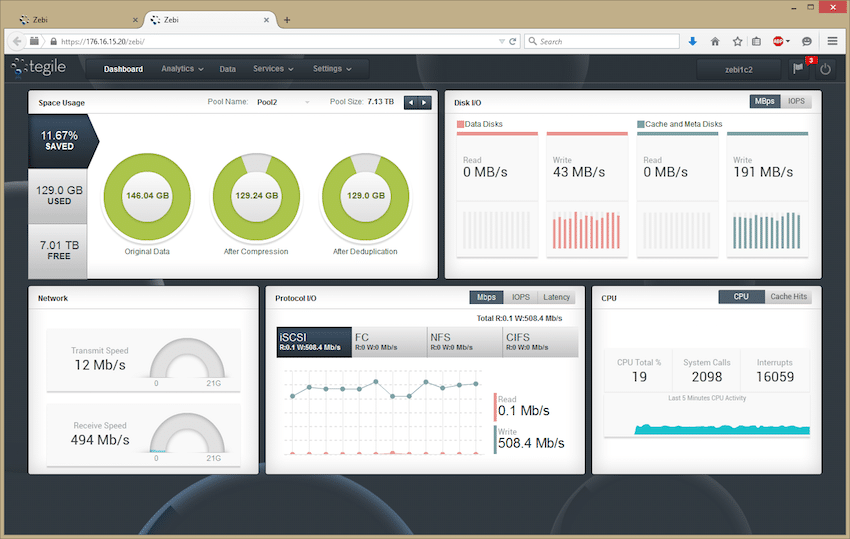

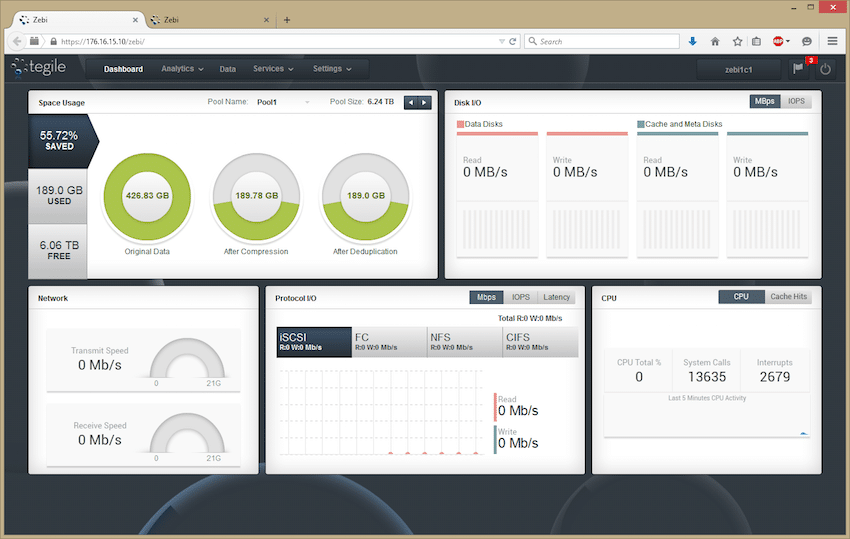

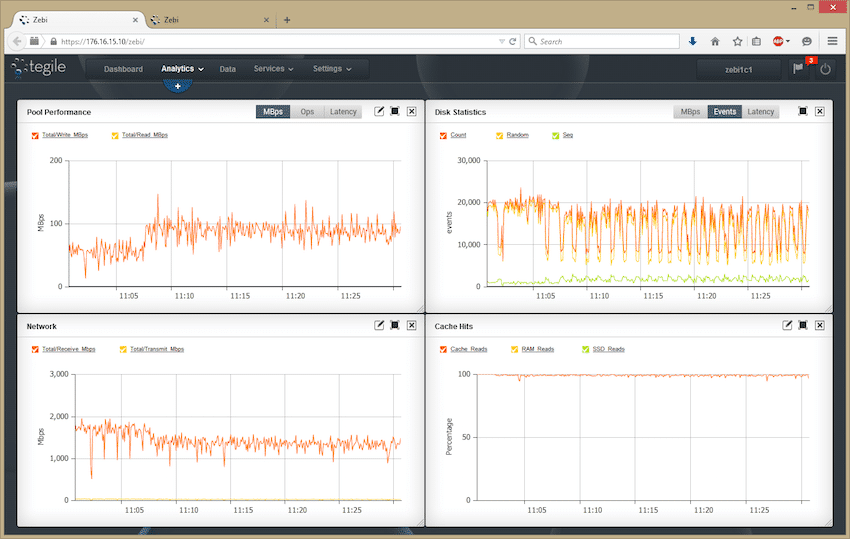

While much of Tegile's benefit messaging is around their caching technologies and data reduction, the units also benefit from a strong ease of use argument. Their administration GUI is clean and easy to use, with most tasks like LUN provisioning taking just a few clicks. The Tegile administrator is also greeted with high-level metrics and Tegile's iconic "doughnuts" image on the dashboard to illustrate the space savings the array offers.

While our review is on the HA2300 with shelf, Tegile offers a wide variety of configurations based on customer need. Their latest family of T3000 hybrid arrays, for instance, comes in a number of pre-configured suggestions based on use case, including capacity optimized, performance optimized, business-critical workloads, and so on. Tegile also offers several all-flash models that take advantage of their core technologies to deliver configurations ranging from the ESH-20 at 18TB raw to the ESF-145 at 144TB raw. Tegile expects customers to see additional capacity benefits of 3-5x from data reduction in those models, depending on workload.

Tegile HA2300 Specifications

- Platform Configuration:

- Processor: 4x Xeon E5620

- DRAM Memory: 192GB

- Flash Memory: 1200GB

- Storage Capacity:

- Min: Raw Capacity: 16TB

- Max: Raw Capacity: 144TB

- Physical:

- Form Factor (Rack Units): 2U

- Weight (Lbs): 80

- Power (W): 500

- Expansion Shelves: Up to 3

- Network Connections:

- 1 Gbps Ethernet Ports: 12

- 1 Gbps IP-KVM Lights-out Management Port: 2

- Optional Connectivity: Dual-port 4/8 Fibre Channel, Dual-port 10GbE Copper/Fiber, Quad-port 1 Gbps Ethernet

- Software Services Included:

- Protocols: SAN Protocol Support (iSCSI, Fibre Channel), NAS Protocol Support (NFS, CIFS, SMB 3.0)

- Data Services: De-duplication, Compression, Thin Provisioning, Snapshots, Remote Replication, Application Profiles

- Management: Web browser, SSH, IP-KVM

- Redundancy: No single point of failure, Active-Active High Availability Architecture

- Standard Warranty: 90 Days: 24×7 support via phone and email. Next business day hardware replacement parts. Free software updates.

- Optional Warranty:

- 1, 3 or 5 years: 24×7 support via phone and email. Next business day hardware replacement parts. Free software updates.

- Onsite Gold Level Support: 4 hour onsite support with optional onsite hardware kit

- Onsite Silver Level Support: Next business day onsite technical support

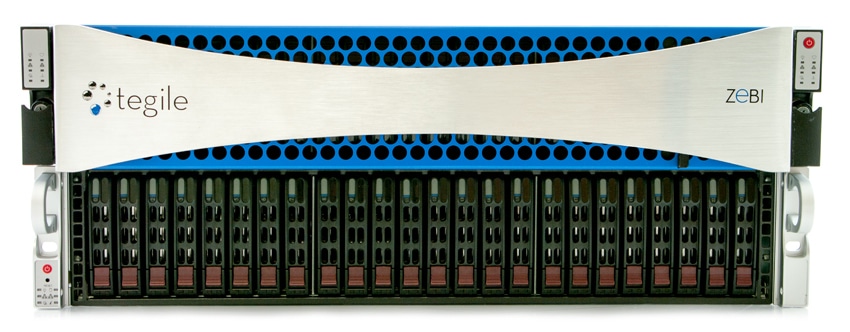

Build and Design

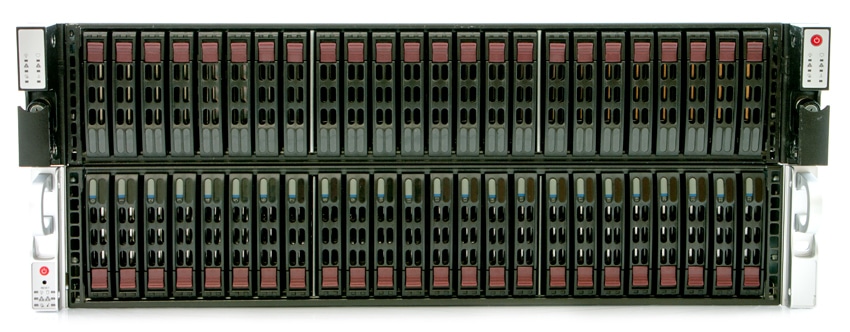

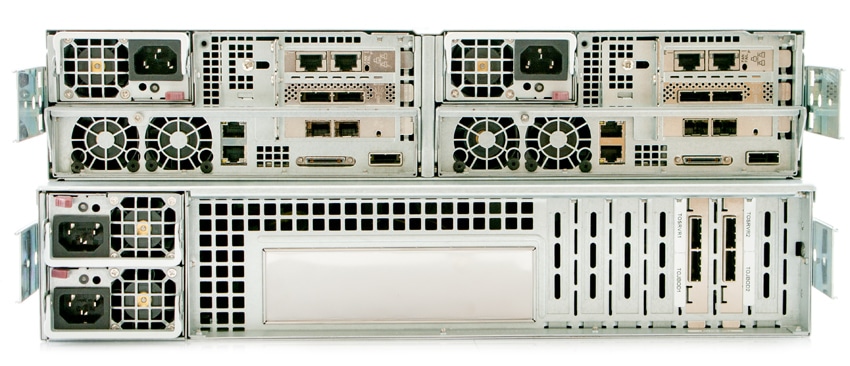

IntelliFlash arrays use a pair of redundant active/active controllers inside a chassis supporting up to 24 2.5" HDDs and SSDs. Each controller offers two PCIe slots for connectivity expansion, as well as supporting multiple interconnect types such as Ethernet and FC. All IntelliFlash storage media options incorporate dual ports for connectivity to both controllers, offering redundant links in the event one single connection goes down.

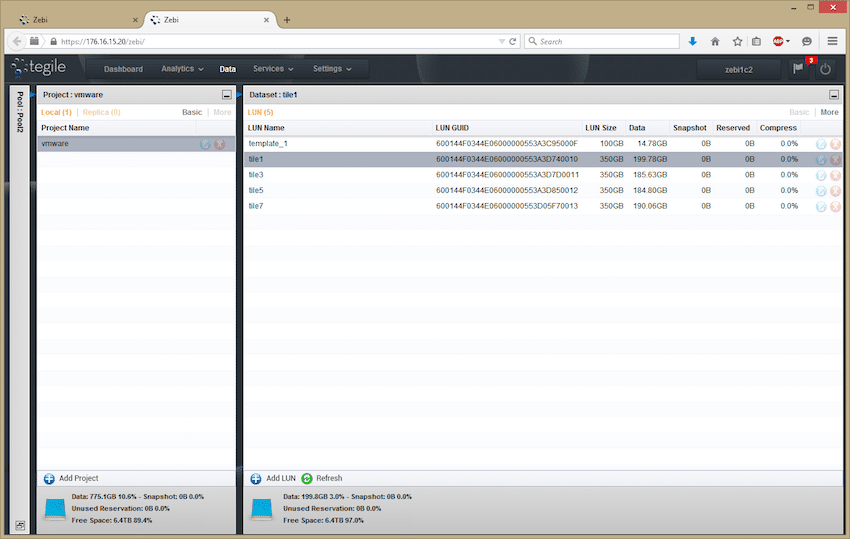

Our review configuration of the Tegile HA2300 includes the primary system with two controllers and 24 disks, as well as one ES2400 expansion shelf adding an additional 24 disks. Our storage configuration consisted of each controller handling its own 24-disk storage pool, made up of (6) 200GB eMLC SAS2 SSDs as well as (18) 1TB 7200RPM SAS2 HDDs.

Each controller is powered via two 2.4GHz Intel E5620 processors with 96GB of DRAM. This gives the HA2300 a combined 4 processors and 192GB across both active/active controllers. The additional ES2400 shelf included in our configuration is a JBOD unit only, adding only storage capacity. With the hardware supplied our platform offered 6.2TB raw usable through one controller and 7.14TB raw usable on the second controller.

Management

The Tegile IntelliFlash architecture virtualizes the underlying storage media and creates a pool of capacity that can be allocated as LUNs or file shares. This pool capacity can be expanded online. The Tegile architecture uses dynamic stripe widths in order to avoid performance overhead from read-modify-write operations and in order to decrease the time required to reconstruct failed drives. IntelliFlash arrays support dual parity, two-way mirroring, and three-way mirroring RAID levels. Data security is available in the form of 256-bit AES encryption of data at rest with native key management.

Individual volumes can be tuned based on use cases including database, server virtualization, and virtual desktop. This tuning process affects settings such as block size, compression, and deduplication. The management interface is designed to support virtualized environments and provides management tools that can be configured with virtual machine granularity rather than LUNs, file systems, and RAID groups for virtualized situations.

IntelliFlash data reduction services include inline deduplication, inline compression, and thin provisioning. Deduplication and compression can be enabled for the entire storage pool or for individual LUNs and file shares. Each LUN can be configured with block sizes from 4KB to 128KB and a choice of compression algorithms based on workloads. We dive further into the data reduction benefits in the next section.

Tegile IntelliFlash arrays can take point-in-time snapshots that are VM-aware and application-consistent. Snapshots can be replicated off-site, with only incremental changes since the prior snapshot transmitted via WAN. Writeable point-in-time images are created using the cloning feature and are also VM-aware and application-consistent. As with snapshots, clones are “thin” and only allocate capacity as needed for new data.

A vCenter web client and desktop client plugin allows VMware data stores to be managed through vCenter. Tegile also offers VAAI support to reduce I/O overhead in VMware environments. IntelliFlash arrays are also tested and verified as part of the Citrix Ready VDI Capacity Program Verified for Citrix XenDesktop.

In Microsoft environments, Tegile arrays integrate with CSV for Hyper-V failover clustering and with VSS for application-consistent snapshots and clones, and will have SMB 3.0 support in the future. Microsoft Hyper-V virtual machines can be managed through Microsoft System Center Virtual Machine Manager (SCVMM). Tegile offers pre-tested and validated Oracle architectures; its arrays are also tested and certified with Oracle VM and validated with Oracle Linux with UEK in single instance and Oracle RAC deployments.

The IntelliCare portal presents an access point for system information, configuration details, historical data and trending analyses, and data reduction rates as well as serving as the interface for managing support cases. IntelliCare can be configured to send capacity alerts to customers and to Tegile support based on linear progression analysis of space usage, threshold alerts for disks, and high availability errors. When an alert is triggered, IntelliCare can be configured so that a Tegile account manager who has access to array status and configuration data is automatically assigned to the case.
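The review does not describe how IntelliCare implements its "linear progression analysis," so the following is only a generic sketch of the idea: fit a least-squares line to periodic space-usage samples and estimate when the pool would fill. The function names and the sample readings are hypothetical; only the 6.2TB pool size comes from this review.

```python
from typing import Optional, Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired samples")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all samples share the same timestamp")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_full(days: Sequence[float], used_tb: Sequence[float],
                    capacity_tb: float) -> Optional[float]:
    """Days after the last sample until the fitted trend reaches capacity.

    Returns None when usage is flat or shrinking (no projected fill date).
    """
    slope, intercept = fit_line(days, used_tb)
    if slope <= 0:
        return None
    day_full = (capacity_tb - intercept) / slope
    return max(0.0, day_full - days[-1])


if __name__ == "__main__":
    # Hypothetical weekly readings (TB used) against a 6.2TB pool.
    sample_days = [0, 7, 14, 21]
    sample_used = [4.0, 4.5, 5.0, 5.5]
    remaining = days_until_full(sample_days, sample_used, 6.2)
    print(f"Projected days until full: {remaining:.1f}")
```

An alerting system built on this idea would compare the projected figure against a lead-time threshold (for example, the time needed to order and install a shelf) rather than waiting for a fixed percentage-full trigger.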

Data Reduction

In the high-end hybrid storage array space, Tegile is a front-runner when it comes to integrating the benefits of data reduction in a primary storage array. Some of the most recognizable screencaps from users are the "doughnut" shots showing the amount of data stored, how much space is consumed after compression, and finally how much space is consumed after deduplication. With many production environments running virtualized servers and an increased desire for Test/Dev work not to be siloed, repetitive data is an industry-wide problem that can be addressed in different ways. You can either size an array with no data-reduction benefits to fit current or estimated future demands, or leverage data reduction to minimize that footprint by removing duplicate or easily compressible data. Of all the traditional hybrid storage platforms we've tested, the Tegile HA2300 has been the only one to offer this level of data reduction features.

To test out the data-reduction capabilities of the Tegile HA2300, we used the stock virtual machine LUN profile, with compression and deduplication enabled, and presented it to one of our ESXi hosts. This test simply looked at the footprint of that single VM after it had been migrated onto the array. The first one we tried was our CentOS 6.3 VM used for testing MySQL performance on shared storage. This VM has a pre-built database on one of its vDisks that is then copied onto a blank vDisk that is then put under load. In this particular scenario we saw roughly a 12% savings, coming mostly from compression.

Our next test looked at a CentOS 7 VM that we use for our upcoming OpenLDAP benchmarks. We saw a much better increase in data savings, measuring over 55%, coming mostly from compression. In an environment with many similar VMs (multiple Linux or Windows distributions), you'd see the benefits of both compression and deduplication. The same holds true for scenarios like VDI or Test/Dev on primary storage, where the deduplication benefits escalate largely linearly with every new VM created.
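To put those savings percentages in the same terms as the "Nx" capacity multipliers vendors usually quote, a percentage saved can be converted into a reduction ratio. The short sketch below does that conversion for the two VMs measured above; the function names are illustrative, and the 6.2TB figure is the single-pool capacity from our configuration.

```python
def reduction_ratio(savings_pct: float) -> float:
    """Convert a space-savings percentage into an N:1 data reduction ratio."""
    if not 0 <= savings_pct < 100:
        raise ValueError("savings must be in the range [0, 100)")
    return 1.0 / (1.0 - savings_pct / 100.0)


def effective_capacity_tb(usable_tb: float, savings_pct: float) -> float:
    """Logical capacity a pool could hold if all data reduced at this rate."""
    return usable_tb * reduction_ratio(savings_pct)


if __name__ == "__main__":
    # Savings measured in this review for the two test VMs.
    for label, pct in (("CentOS 6.3 MySQL VM", 12.0),
                       ("CentOS 7 OpenLDAP VM", 55.0)):
        ratio = reduction_ratio(pct)
        pool = effective_capacity_tb(6.2, pct)
        print(f"{label}: {pct:.0f}% saved, {ratio:.2f}:1, "
              f"6.2TB pool holds about {pool:.1f}TB")
```

The 12% and 55% results work out to roughly 1.14:1 and 2.22:1. A 3-5x multiplier would therefore require savings of about 67% to 80%, which is more plausible in dedupe-friendly scenarios such as VDI than for a single VM.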

With all data reduction services, there is a performance tradeoff due to the system overhead required for the related tasks and metadata management. We saw slow single-threaded transfer speeds (copy/paste actions inside VMs, or SvMotion activities between datastores), topping out at roughly 100MB/s. This included both read and write operations, with near 100% cache hits. Interestingly, we saw very little difference in performance across all of our workloads with data reduction services enabled or completely disabled. By comparison, many arrays in this segment without data reduction services can come close to saturating a single 10Gb Ethernet or 16Gb FC interface through similar data movement activities.

Testing Background and Comparables

We publish an inventory of our lab environment, an overview of the lab's networking capabilities, and other details about our testing protocols so that administrators and those responsible for equipment acquisition can fairly gauge the conditions under which we have achieved the published results. To maintain our independence, none of our reviews are paid for or managed by the manufacturer of equipment we are testing.

We will be comparing the Tegile HA2300 to the Dot Hill AssuredSAN Ultra48, AMI StorTrends 3500i, X-IO ISE 710, HP StoreVirtual 4335, and the Dell EqualLogic PS6210XS. For all synthetic and application tests, compression and deduplication were disabled during performance runs. The array was deployed by a Tegile field rep in our lab, while the LUNs for synthetic benchmarks and our SQL Server test were configured remotely by a Tegile technical marketing representative. LUNs for VMmark were configured using the "virtual server" profile with snapshots disabled and attached through each ESXi host using the VMware iSCSI software adapter.

With each platform tested, it is very important to understand how each vendor configures the unit for different workloads as well as the networking interface used for testing. The amount of flash used is just as important as the underlying caching or tiering process when it comes to how well it will perform in a given workload. The following list shows the amount of flash and HDD, how much is usable in our specific configuration and what networking interconnects were leveraged:

- Tegile HA2300 w/ Expansion Shelf
    - List price: $100,443 base configuration, $185,000 as tested with additional storage shelf
    - Raw Usable Capacity before data reduction: 13.4TB (6.2TB first shelf + 7.14TB second shelf)
    - Flash: 12 x 200GB HGST eMLC SAS2 SSDs
    - HDD: 36 x 1TB Seagate SAS2 7200RPM HDDs
    - Network Interconnect: 10Gb, 2 x 10Gb per controller
- Dot Hill AssuredSAN Ultra48 (Hybrid)
    - List price: $113,158
    - Flash: 800GB (4 x 400GB HGST SAS3 SSDs, 2 RAID1 pools)
    - HDD: 9.6TB (32 x 600GB 10K 6G SAS HDDs, 2 RAID10 pools)
    - Network Interconnect: 16Gb FC, 4 x 16Gb FC per controller
- AMI StorTrends 3500i
    - List price: $87,999
    - Flash Cache: 200GB (200GB SSDs x 2 RAID1)
    - Flash Tier: 1.6TB usable (800GB SSDs x 4 RAID10)
    - HDD: 10TB usable (2TB HDDs x 10 RAID10)
    - Network Interconnect: 10GbE iSCSI, 2 x 10GbE Twinax per controller
- HP StoreVirtual 4335 – 3 Nodes
    - List price: $41,000 per node, $123,000
    - Flash: 1.2TB usable (400GB SSDs x 3 RAID5 per node, network RAID10 across cluster)
    - HDD: 10.8TB usable (900GB 10K HDDs x 7 RAID5 per node, network RAID10 across cluster)
    - Network Interconnect: 10GbE iSCSI, 1 x 10GbE Twinax per controller
- Dell EqualLogic PS6210XS
    - List price: $134,000
    - Flash: 4TB usable (800GB SSDs x 7 RAID6)
    - HDD: 18TB usable (1.2TB 10K HDDs x 17 RAID6)
    - Network Interconnect: 10GbE iSCSI, 2 x 10GbE Twinax per controller
- X-IO ISE 710
    - List price: $115,000
    - 800GB Flash (200GB SSDs x 10 RAID10)
    - 3.6TB HDD (300GB 10K HDD x 30 RAID10)
    - Network Interconnect: 8Gb FC, 2 x 8Gb FC per controller
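Since cost per TB is central to Tegile's pitch, the list above can be turned into a rough comparison. The sketch below divides each list price by the capacity shown; summing the flash and HDD figures into one capacity number is our simplification (cache-only flash is excluded), and the savings percentages applied to the Tegile are the two single-VM results from our data reduction test, not a guaranteed rate.

```python
# (list price in USD, capacity in TB) taken from the comparison list above.
# Capacity is flash tier + HDD added together, which is a simplification.
ARRAYS = {
    "Tegile HA2300 w/ shelf": (185_000, 13.4),
    "Dot Hill AssuredSAN Ultra48 (Hybrid)": (113_158, 0.8 + 9.6),
    "AMI StorTrends 3500i": (87_999, 1.6 + 10.0),
    "HP StoreVirtual 4335 (3 nodes)": (123_000, 1.2 + 10.8),
    "Dell EqualLogic PS6210XS": (134_000, 4.0 + 18.0),
    "X-IO ISE 710": (115_000, 0.8 + 3.6),
}


def cost_per_tb(price_usd: float, capacity_tb: float,
                savings_pct: float = 0.0) -> float:
    """List price divided by capacity, optionally scaled by data reduction."""
    if capacity_tb <= 0 or not 0 <= savings_pct < 100:
        raise ValueError("capacity must be positive, savings in [0, 100)")
    effective_tb = capacity_tb / (1.0 - savings_pct / 100.0)
    return price_usd / effective_tb


if __name__ == "__main__":
    for name, (price, capacity) in ARRAYS.items():
        print(f"{name}: ${cost_per_tb(price, capacity):,.0f}/TB")

    # Tegile with the savings measured on our two test VMs.
    price, capacity = ARRAYS["Tegile HA2300 w/ shelf"]
    for pct in (12.0, 55.0):
        print(f"Tegile at {pct:.0f}% savings: "
              f"${cost_per_tb(price, capacity, pct):,.0f}/TB")
```

Without data reduction the as-tested Tegile sits near $13,800/TB; at the 55% savings we saw on one VM it drops to roughly $6,200/TB, which illustrates how strongly the cost argument depends on how reducible the data actually is.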

This benchmark makes use of our ThinkServer RD630 benchmark environment:

- Lenovo ThinkServer RD630 Testbed
    - 2 x Intel Xeon E5-2690 (2.9GHz, 20MB Cache, 8-cores)
    - Intel C602 Chipset
    - Memory – 16GB (2 x 8GB) 1333MHz DDR3 Registered RDIMMs
    - Windows Server 2008 R2 SP1 64-bit, Windows Server 2012 Standard, CentOS 6.3 64-Bit
    - Boot SSD: 100GB Micron RealSSD P400e
    - LSI 9211-4i SAS/SATA 6.0Gb/s HBA (For boot SSDs)
    - LSI 9207-8i SAS/SATA 6.0Gb/s HBA (For benchmarking SSDs or HDDs)
    - Emulex LightPulse LPe16202 Gen 5 Fibre Channel (8GFC, 16GFC or 10GbE FCoE) PCIe 3.0 Dual-Port CFA
- Mellanox SX1036 10/40Gb Ethernet Switch and Hardware
    - 36 40GbE Ports (Up to 64 10GbE Ports)
    - QSFP splitter cables 40GbE to 4x10GbE

Application Performance Analysis

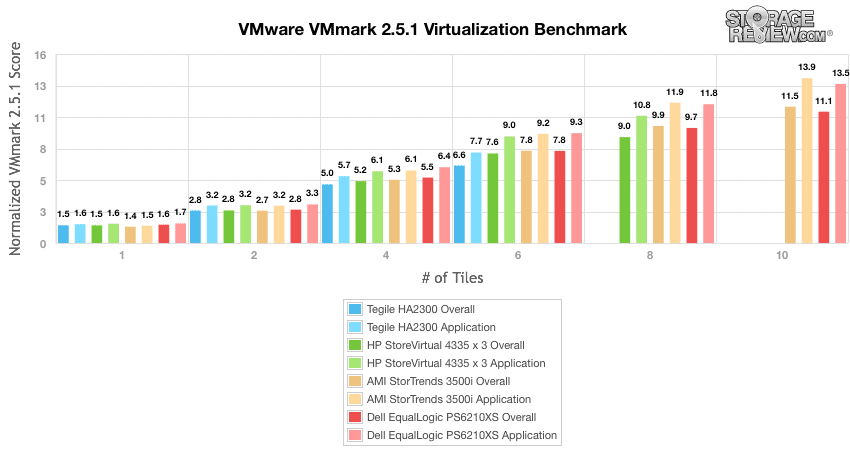

Our first two benchmarks of the Tegile IntelliFlash HA2300 are the VMware VMmark Virtualization Benchmark and the Microsoft SQL Server OLTP Benchmark, which both simulate application workloads similar to those that the HA2300 and its comparables are designed to serve.

The StorageReview VMmark protocol utilizes an array of sub-tests based on common virtualization workloads and administrative tasks, with results measured using a tile-based unit. As an industry-recognized and established benchmark, VMmark puts compute and storage onto a level playing field with little or no benchmark modification allowed. Tiles measure the ability of the system to perform a variety of virtual workloads such as cloning and deploying of VMs, automatic VM load balancing across a datacenter, VM live migration (vMotion) and dynamic datastore relocation (storage vMotion).

The normalized VMmark application scores and overall scores demonstrate the Tegile HA2300's performance against the comparable arrays at lower numbers of tiles. The HA2300 does begin to fall behind at 6 tiles with an overall score of 6.6, compared to the next-lowest overall score of 7.6 from the HP StoreVirtual 4335. The dual storage pools of our HA2300 were not powerful enough to go beyond 6 tiles, with an equal number of tiles assigned to each controller. In this test the HP StoreVirtual 4335 topped out at 8 tiles, while the Dell EqualLogic PS6210XS and AMI StorTrends 3500i reached 10 tiles.

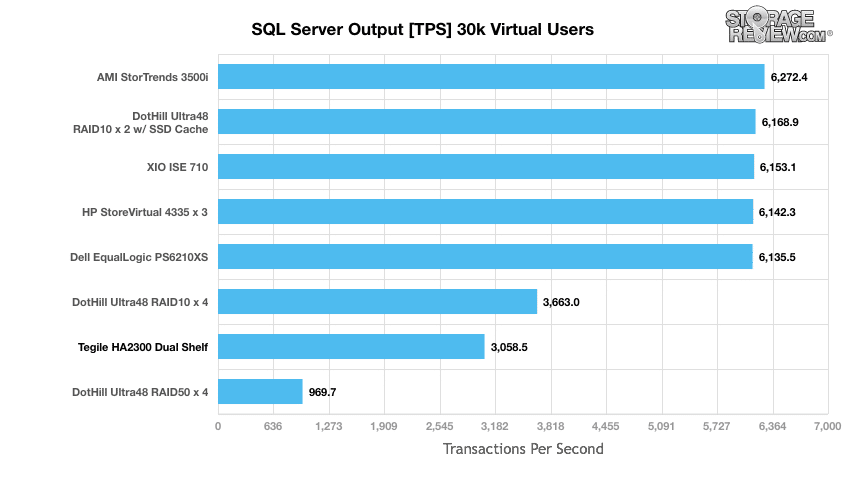

StorageReview’s Microsoft SQL Server OLTP testing protocol employs the current draft of the Transaction Processing Performance Council’s Benchmark C (TPC-C), an online transaction processing benchmark that simulates the activities found in complex application environments. The TPC-C benchmark comes closer than synthetic performance benchmarks to gauging the performance strengths and bottlenecks of storage infrastructure in database environments. Our SQL Server protocol for this review uses a 685GB (3,000 scale) SQL Server database and measures the transactional performance and latency under a load of 30,000 virtual users.

Our SQL Server benchmark leverages a large single database and log file, which we present on a single 1TB LUN to our Windows Server 2012 host with MPIO enabled. On the Tegile HA2300, this puts the load onto one controller, backed by one disk pool, leaving the other controller and disk pool idle in its active/active configuration. All devices in this category are put in the same position, with one LUN and controller driving the activity behind this benchmark.

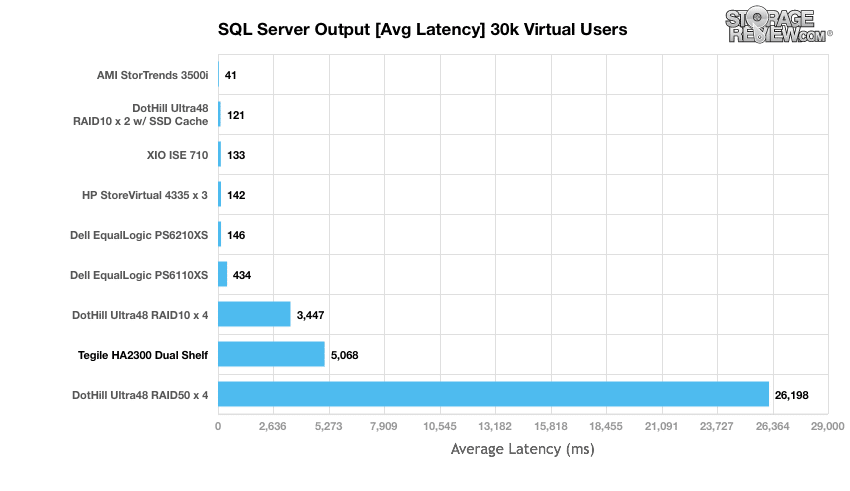

The Tegile HA2300 measured 3,058.5 transactions per second in this benchmark, placing it ahead of the all-HDD RAID50 DotHill Ultra48 configuration. This performance falls at about half the level of the highest performing hybrid arrays tested with this protocol.

The HA2300 recorded an average latency of 5,068ms during the SQL Server benchmark, the second-highest latency among the comparables.

It should be noted that Microsoft SQL Server during the TPC-C workload is very write intensive, committing its log buffer to disk at regular intervals. As write performance slows and a storage array can no longer keep up, outstanding queues pile up and substantially increase latency. This can be seen with the Tegile HA2300, as well as the DotHill Ultra48 in a RAID50 configuration. During the initial setup of our SQL Server test with our Windows Server 2012 platform, we noted single-threaded write speeds topping out at roughly 100MB/s through basic copy/paste actions moving our pre-built database onto the LUN presented from the HA2300. This slow write speed was also visible in our VMware environment during storage vMotion activities before and during our tests. Multi-threaded synthetic workloads, where we were able to drive up the outstanding queue depths, saw upwards of 1.1GB/s read and 755MB/s write, but these speeds weren't visible in any of our application use cases.
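To give a sense of what that gap means in practice, the arithmetic below estimates how long it takes to move the 685GB test database at the single-threaded rate versus the peak multi-threaded write rate we measured. It assumes decimal units (1GB = 1,000MB) and a constant transfer rate, both simplifications.

```python
def transfer_time_seconds(size_gb: float, rate_mb_s: float) -> float:
    """Time to move size_gb at a constant rate, using 1GB = 1,000MB."""
    if rate_mb_s <= 0:
        raise ValueError("rate must be positive")
    return size_gb * 1000.0 / rate_mb_s


if __name__ == "__main__":
    database_gb = 685.0  # SQL Server database size used in this review
    for label, rate in (("single-threaded copy", 100.0),
                        ("multi-threaded synthetic write", 755.0)):
        seconds = transfer_time_seconds(database_gb, rate)
        print(f"{label} at {rate:.0f}MB/s: {seconds / 60:.0f} minutes")
```

At about 100MB/s the copy takes close to two hours, while the same data at 755MB/s would move in roughly 15 minutes.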

Enterprise Synthetic Workload Analysis

Prior to initiating each of the fio synthetic benchmarks, our lab preconditions the device into steady-state under a heavy load of 16 threads with an outstanding queue of 16 per thread. Then the storage is tested in set intervals with multiple thread/queue depth profiles to show performance under light and heavy usage.

- Preconditioning and Primary Steady-State Tests:
    - Throughput (Read+Write IOPS Aggregated)
    - Average Latency (Read+Write Latency Averaged Together)
    - Max Latency (Peak Read or Write Latency)
    - Latency Standard Deviation (Read+Write Standard Deviation Averaged Together)

This synthetic analysis incorporates two profiles which are widely used in manufacturer specifications and benchmarks:

- 4k – 100% Read and 100% Write

- 8k – 70% Read/30% Write

While synthetic workloads can be helpful to drive a strong and repeatable load against storage devices, they offer diminishing value for customers trying to correlate IOPS and latency with database, virtualization, or other application performance. Unlike application workloads, synthetic workload generators can also be massaged significantly, including the type of data being applied, the size of the workload, the number of threads, the number of outstanding I/Os, how random the random workload is, or even how the load is applied to the underlying storage. That list only scratches the surface of the scope and capabilities of FIO, IOMeter, or vdBench. To keep our benchmarks relevant as we compare different storage arrays, we apply identical scripts and configuration to all platforms that come into our lab. While that means some platforms would likely post different or higher numbers with different configurations, showing like-for-like results puts all platforms on a level playing field. Currently we test in a Windows Server 2012 environment, using eight 25GB LUNs assigned to the server, evenly distributed across the array. This confines the workload into the storage or DRAM tier, while a long preconditioning period forces it to migrate up into the highest performance tier or cache.

At the time this review was started, as well as for the reviews of other same-generation hybrid storage platforms, we used the following FIO script to address our 8 defined LUNs as part of the same FIO thread.

fio.exe --filename=\\.\PhysicalDrive1:\\.\PhysicalDrive2:\\.\PhysicalDrive3:\\.\PhysicalDrive4:\\.\PhysicalDrive5:\\.\PhysicalDrive6:\\.\PhysicalDrive7:\\.\PhysicalDrive8 --thread --direct=1 --rw=randrw --refill_buffers --norandommap --randrepeat=0 --ioengine=windowsaio --bs=4k --rwmixread=100 --iodepth=16 --numjobs=16 --time_based --runtime=60 --group_reporting --name=FIO_group_test --output=FIO_group_test.out

This workload can also be applied where each LUN gets its own dedicated FIO thread. Using a modified script the FIO measured performance of the Tegile HA2300 can increase upwards of 50%, although those results are no longer like for like comparable to other arrays we've tested. It goes without saying that other arrays would see improvements or changes as well. An example of this change would be similar to the one below:

fio.exe --thread --direct=1 --rw=randrw --refill_buffers --norandommap --randrepeat=0 --ioengine=windowsaio --bs=4k --rwmixread=100 --iodepth=16 --numjobs=16 --time_based --runtime=60 --group_reporting --name=thread1 --filename=\\.\PhysicalDrive1 --name=thread2 --filename=\\.\PhysicalDrive2 --name=thread3 --filename=\\.\PhysicalDrive3 --name=thread4 --filename=\\.\PhysicalDrive4 --name=thread5 --filename=\\.\PhysicalDrive5 --name=thread6 --filename=\\.\PhysicalDrive6 --name=thread7 --filename=\\.\PhysicalDrive7 --name=thread8 --filename=\\.\PhysicalDrive8 --output=FIO_test.out

It's important to note that both benchmarks reach the same kind of conclusion. Each shows a repeatable result that, within the confines of its parameters, offers a certain number of IOPS, latency, and bandwidth. The numbers from one script cannot be compared against those from the other. Neither test shows how a platform will perform in a real-world production environment, nor can it, since it doesn't introduce application demands.
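The difference between the two invocations is easier to see when they are built programmatically. The sketch below assembles both argument lists from the same set of options; the option values are copied from the commands above, while the helper names are hypothetical and the script only prints the commands rather than running fio.

```python
# Options shared by both invocations, copied from the commands in this review.
COMMON_OPTIONS = [
    "--thread", "--direct=1", "--rw=randrw", "--refill_buffers",
    "--norandommap", "--randrepeat=0", "--ioengine=windowsaio", "--bs=4k",
    "--rwmixread=100", "--iodepth=16", "--numjobs=16", "--time_based",
    "--runtime=60", "--group_reporting",
]


def lun_paths(count: int = 8) -> list:
    """Windows raw-device paths for PhysicalDrive1..PhysicalDrive<count>."""
    return [rf"\\.\PhysicalDrive{i}" for i in range(1, count + 1)]


def grouped_command(luns: list) -> list:
    """One fio job addressing every LUN through a colon-separated filename."""
    return ["fio.exe", "--filename=" + ":".join(luns), *COMMON_OPTIONS,
            "--name=FIO_group_test", "--output=FIO_group_test.out"]


def per_lun_command(luns: list) -> list:
    """One named fio job per LUN, each with its own filename."""
    command = ["fio.exe", *COMMON_OPTIONS]
    for index, lun in enumerate(luns, start=1):
        command += [f"--name=thread{index}", f"--filename={lun}"]
    command.append("--output=FIO_test.out")
    return command


if __name__ == "__main__":
    luns = lun_paths()
    print(" ".join(grouped_command(luns)))
    print()
    print(" ".join(per_lun_command(luns)))
```

In the grouped form there is a single job definition spanning all eight devices, whereas the per-LUN form defines eight jobs that inherit the shared options, so the total number of workers and outstanding I/Os applied to the array differs between the two.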

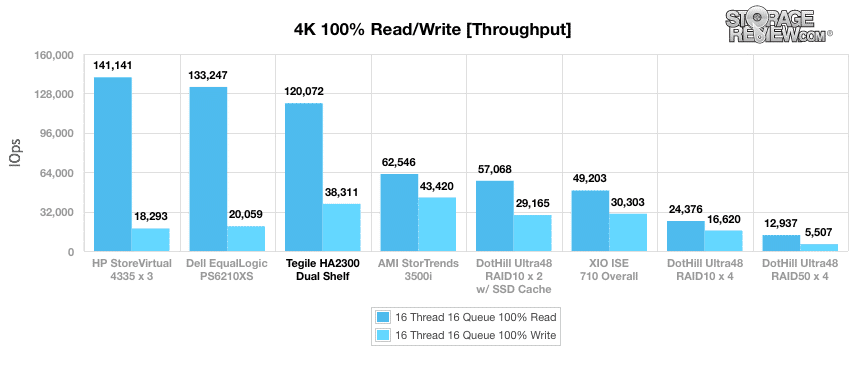

Our first benchmark measures the performance of random 4k transfers comprised of 100% write and 100% read activity. The Tegile HA2300 measured 120,072 IOPS, the third-highest read performance among the comparables in this benchmark, and 38,311 IOPS, the 4k category's second-highest write performance.

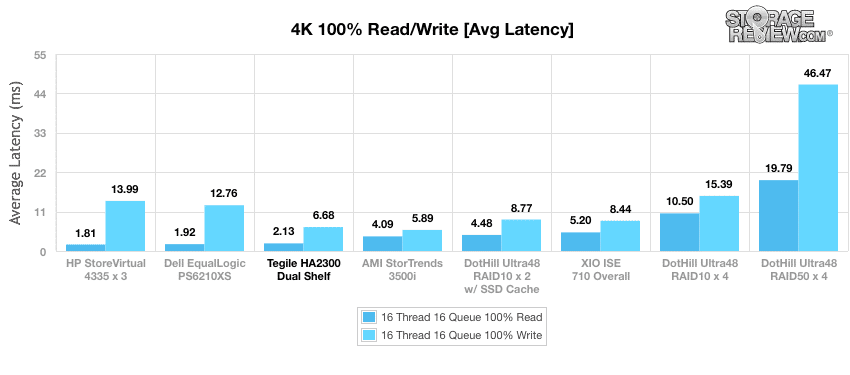

The Tegile HA2300 experienced an average read latency of 2.13ms and a write latency of 6.68ms, also third-best and second-best, respectively, among the comparable arrays.

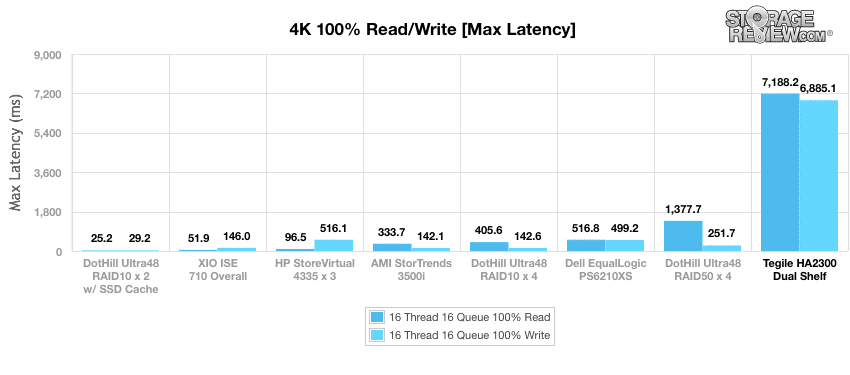

We measured maximum latencies for the Tegile HA2300 that were much greater than those of its comparables. The maximum read latency was measured at approximately 7,188ms and the maximum write latency reached 6,885ms.

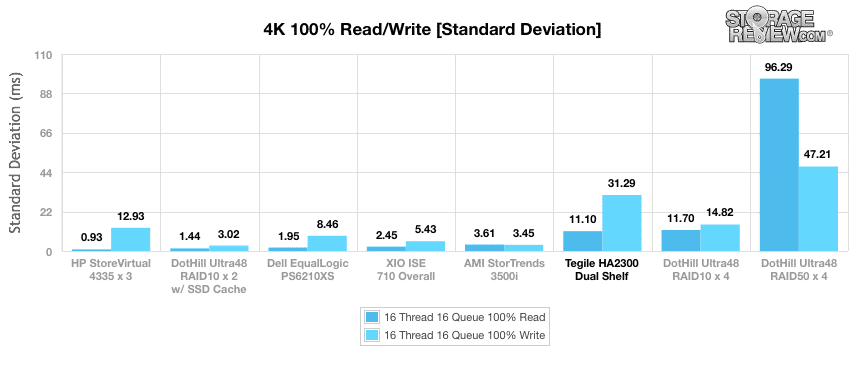

Standard deviation calculations show the Tegile HA2300 as much less consistent than its hybrid peers in terms of latency, at 11.10ms for read operations and 31.29ms for write operations. By comparison the DotHill Ultra48 configured with all HDDs ranked towards the bottom of this category, although once SSDs were introduced it came in second in read consistency and first in write consistency.

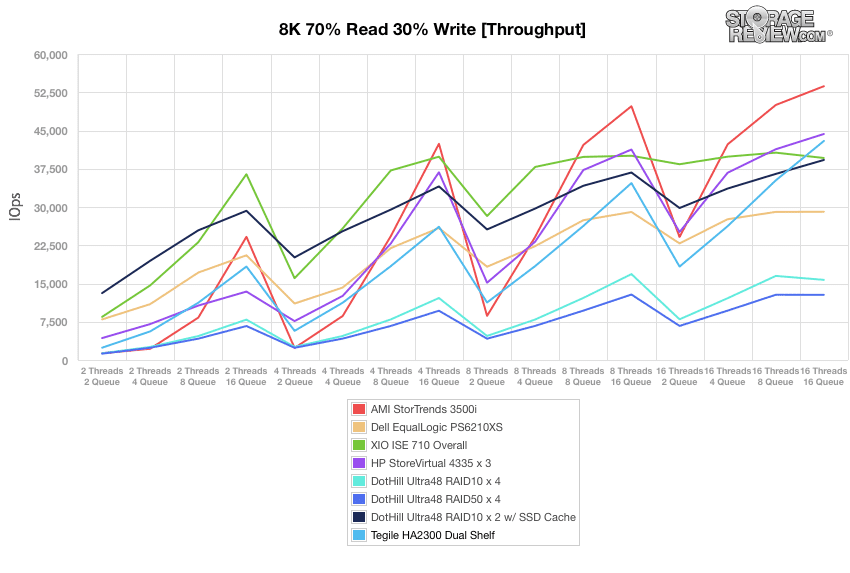

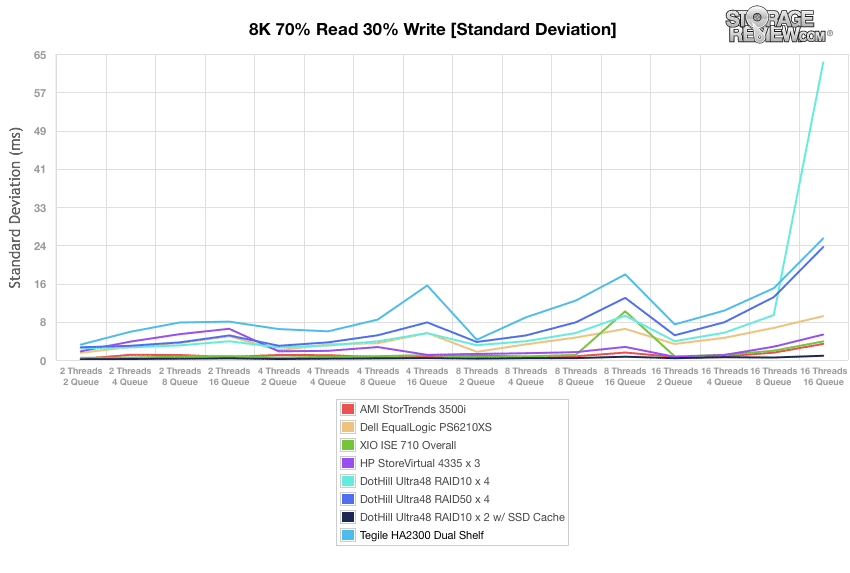

Moving to the 8k 70% read 30% write random workload, the Tegile HA2300 scaled in performance from 2,405 IOPS at 2T/2Q up to 42,957 IOPS at 16T/16Q. At lower thread and queue levels this measured roughly middle of the pack, while under full load it came in 3rd place behind the HP StoreVirtual 4335 cluster and AMI StorTrends 3500i.

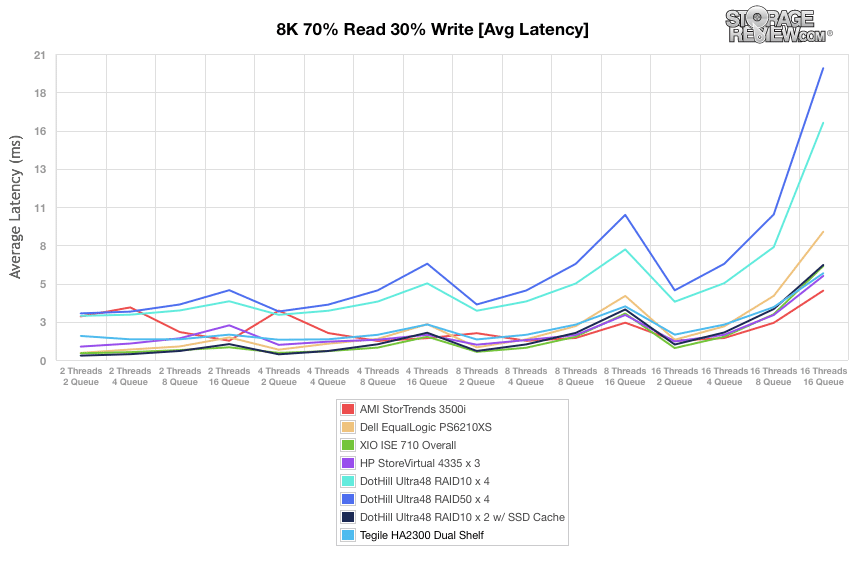

Average latency results for the 8k 70/30 benchmark measured 1.65ms at a load of 2T/2Q and increased to 5.95ms under the peak load of 16T/16Q.
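The average latency figures in these synthetic tests follow almost directly from the throughput numbers via Little's Law: with a fixed number of outstanding I/Os, average latency is that number divided by IOPS. The sketch below applies it to the results reported in this review, assuming the outstanding I/O count is simply threads multiplied by queue depth (256 at 16T/16Q, 4 at 2T/2Q).

```python
def littles_law_latency_ms(threads: int, queue_depth: int, iops: float) -> float:
    """Average latency implied by Little's Law for a closed-loop workload."""
    if iops <= 0:
        raise ValueError("IOPS must be positive")
    outstanding_ios = threads * queue_depth
    return outstanding_ios / iops * 1000.0


# (label, threads, queue depth, measured IOPS, measured average latency in ms)
RESULTS = [
    ("4k read, 16T/16Q", 16, 16, 120_072, 2.13),
    ("4k write, 16T/16Q", 16, 16, 38_311, 6.68),
    ("8k 70/30, 2T/2Q", 2, 2, 2_405, 1.65),
    ("8k 70/30, 16T/16Q", 16, 16, 42_957, 5.95),
]

if __name__ == "__main__":
    for label, threads, depth, iops, measured_ms in RESULTS:
        predicted_ms = littles_law_latency_ms(threads, depth, iops)
        print(f"{label}: predicted {predicted_ms:.2f}ms, "
              f"measured {measured_ms:.2f}ms")
```

The predicted values land within about 0.02ms of the measured averages, which is why average latency in a fixed-queue test carries little information beyond the IOPS figure; the maximum latency and standard deviation charts are where the arrays actually differentiate.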

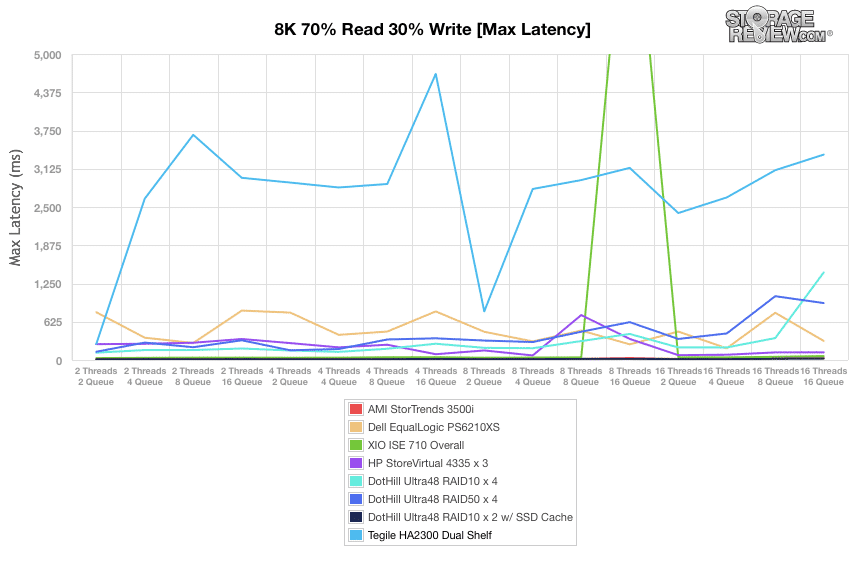

With a few exceptions, the Tegile HA2300 experienced the highest maximum latencies among the arrays compared in the 8k 70/30 benchmark. The highest latency we measured for the HA2300 was 4,674.84ms, which occurred at 4T/16Q.

The Tegile HA2300's standard deviation calculations for the 8k 70/30 benchmark are not unlike its standard deviation results for the 4k benchmark: they put the HA2300 in a more positive light than the maximum latency chart, but still portray the HA2300 as less consistent with latency than its comparables.

Conclusion

The HA2300 combined with its ES2400 expansion shelf is highly flexible, allowing customers to use all HDD, all flash, or a combination of the two, all of which can be replaced (along with other hardware) with zero downtime. An intelligent caching algorithm moves hot data to the faster flash tier, and application-aware provisioning tunes volumes for specific applications. Inline compression and deduplication capabilities minimize data footprint, which is a growing problem for all data centers. The HA2300 supports multiple host platforms: it is Citrix Ready and integrates with VMware and Microsoft virtualization software. Tegile’s HA2300 is also backed by its IntelliCare customer care program.

As far as performance goes, the results were somewhat mixed. In our application performance analysis the HA2300 came in behind other competing solutions in the VMmark benchmark, topping out at 6 tiles where others reached 8 or 10 tiles, or much higher in the case of the DotHill Ultra48 Hybrid array. In the SQL Server TPC-C benchmark it came in near the bottom, with lower TPS and higher latency than other hybrid systems. The array fared quite a bit better in synthetic tests, coming in third for 4K reads and second for 4K writes in both throughput and average latency. In max latency and standard deviation tests, though, the HA2300 had the highest latencies of the hybrid arrays tested.

The Tegile array is a bit of an enigma as a result. On one hand it's easy to deploy and manage, has a deep set of data services and features, and is one of the few primary storage arrays with hard drives that allows for compression and deduplication. The resulting smaller data footprint on disk gives Tegile a huge competitive advantage when it comes to discussing cost per TB, both in this array and in their all-flash hybrids as well. On the other hand, data reduction services come with a performance hit, and as we saw, that overhead is present whether data reduction is enabled or not. Therein lies the rub with Tegile.

For many organizations, though, array performance may not be the primary decision criterion. In a lot of ROBO and midmarket/SMB use cases, all-HDD arrays are just fine for the needs of the applications. The truth is not every organization needs flash, at least from a performance perspective (save the TCO argument for another time). The HA2300 for its part addresses these needs just fine, where cost per TB, ease of management, and data services outweigh performance demands. Overall though, it's just not a high-performance player in the hybrid space, which at this point in time appears to be the domain of traditional storage arrays that are not leveraging data reduction services, or perhaps one of Tegile's all-flash hybrid offerings.

Pros

- Offers data reduction services

- Strong 4K throughput and average latency

- Feature rich WebGUI

Cons

- Relatively weak performance in SQL Server and VMmark

- Inconsistent latency and high peak response times

The Bottom Line

The Tegile HA2300 is a hybrid array platform with a versatile feature set crowned by inline compression and deduplication engines. Its strengths lie in configuration flexibility, data services, protocol support and compelling overall cost profile per TB, although it doesn't necessarily meet performance parity with many of its peers.
