with Dell EMC Solutions for Microsoft Azure Stack HCI

The deployment and ongoing management of computing resources in small offices and remote/branch office (ROBO) environments have always been problematic, with many different and competing factors at play. Many enterprises, as well as small and mid-size businesses (SMBs), depend on ROBO HCI systems to handle the day-to-day business-critical transactions that are the lifeblood of these organizations. These systems need to be low-cost yet performant, offer redundancy yet have as few components as possible, and be well maintained yet not have expensive IT resources and personnel dedicated to each site.

Fortunately, IT suppliers have recognized the unique challenges of ROBO systems and have developed solutions to address them. In this article, we look at how Dell Technologies hardware running Microsoft software tackles these challenges. Our approach differs a little from our regular articles, which usually focus on system performance. Although we will run performance tests on the system, we will also examine its entire lifecycle, beginning with its initial sizing.

ROBO HCI Introduction

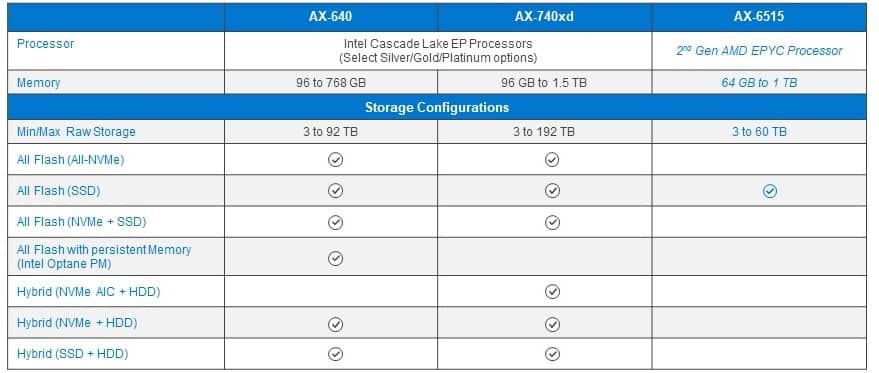

The system that we will be looking at in this article is a Dell EMC Solutions for Microsoft Azure Stack HCI two-node cluster (2NC) built from AX nodes running Windows Server 2019. Earlier this year, Dell Technologies released AX nodes specifically designed, validated, and certified to run Azure Stack HCI. Dell Technologies currently offers three node types in its solution catalog: AX-640, AX-740xd, and AX-6515. Customers can configure each model with different components to design the ideal platform for their ROBO HCI deployments.

The AX-640 and AX-740xd are dual-socket nodes that use second-generation Intel Xeon Scalable processors, while the AX-6515 is a single-socket node that runs a 64-core second-generation AMD EPYC processor. Dell EMC's AX models let customers choose the nodes that best suit their use case: the AX-640 is geared toward compute-dense workloads, the AX-740xd toward storage-capacity-heavy workloads, and the AX-6515 toward users who need a value-optimized system and processor diversity in their enterprise data centers.

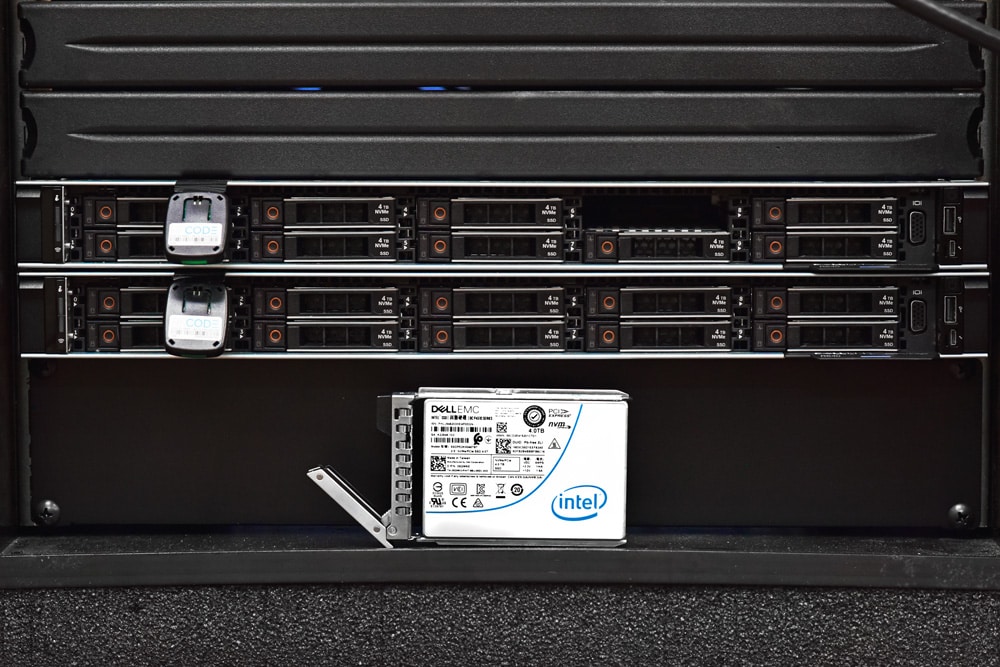

The system that we will be taking a closer look at in this article is the AX-640, a dual-socket 1U node that supports 96 to 768GB of RAM. It can be configured with 3 to 92TB of NVMe, SSD, and/or HDD storage for hybrid or all-flash configurations. It is currently the only node in the AX portfolio that supports Intel's ultra-high-performance Optane Persistent Memory and Optane SSD devices. When properly configured, the AX-640 is a strong contender for the title of fastest commercially available HCI node. Dell Technologies publishes a helpful chart outlining the Azure Stack HCI configuration options for its AX nodes.

Each AX-640 node in our cluster came equipped with dual Intel Xeon Gold 6230 CPUs, 384GB of DDR4 memory, and ten 4TB NVMe SSDs.

Reliable, performant hardware is only half the story when deploying a ROBO HCI solution; the other half is the software. In this case, we are running an Azure Stack HCI validated system. Azure Stack HCI lets customers run a Windows Server OS while connecting seamlessly to the Azure cloud for additional services, such as backup and disaster recovery, through Microsoft Windows Admin Center (WAC). These Azure services are integrated as WAC extensions, so they are managed from the same management plane.

Azure Stack HCI uses Hyper-V as its hypervisor and Storage Spaces Direct for its local storage. Using a 2NC for ROBO HCI deployments can considerably lower the cost of implementation. For extremely cost-conscious implementations, a 2NC can run switchless, with the two nodes directly connected over a single or dual link for the storage fabric. For switched implementations, a 10GbE network will work, but Dell Technologies recommends a 25GbE storage network because it costs little more than 10GbE.

Obviously, lowering a company's equipment investment is a non-starter if the system is not resilient. On a per-system basis, Storage Spaces Direct supports two-way and three-way mirroring as well as single- and dual-parity erasure coding. Microsoft has done a good job documenting the storage efficiency, general advantages, and tradeoffs of these protection schemes, and we recommend reading that documentation to decide which scheme best suits your environment. Mirroring is usually the most performant, and it is what we used in our testing.
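To make the efficiency tradeoffs concrete, here is a minimal Python sketch that converts raw capacity into idealized usable capacity for the mirror-based schemes, using our test cluster's drive count (2 nodes with ten 4TB NVMe SSDs each, 80TB raw). The `SCHEMES` table and `usable_tb` helper are hypothetical illustrations, not a Microsoft or Dell tool. The sketch ignores reserve capacity and metadata overhead, and it leaves out parity because parity efficiency depends on the number of drives and servers.

```python
# Idealized usable/raw ratios for mirror-based Storage Spaces Direct schemes.
# Hypothetical helper for illustration; ignores reserve capacity and metadata.
SCHEMES = {
    # scheme: (efficiency, minimum number of servers)
    "two-way mirror": (1 / 2, 2),         # 2 copies of the data
    "three-way mirror": (1 / 3, 3),       # 3 copies; needs at least 3 servers
    "nested two-way mirror": (1 / 4, 2),  # 4 copies across a 2-node cluster
}


def usable_tb(nodes: int, drives_per_node: int, drive_tb: float, scheme: str) -> float:
    """Raw capacity multiplied by the scheme's efficiency."""
    efficiency, min_servers = SCHEMES[scheme]
    if nodes < min_servers:
        raise ValueError(f"needs at least {min_servers} servers")
    return nodes * drives_per_node * drive_tb * efficiency


if __name__ == "__main__":
    # Our test cluster: 2 nodes x 10 drives x 4TB = 80TB raw.
    for scheme in SCHEMES:
        try:
            print(f"{scheme}: {usable_tb(2, 10, 4.0, scheme):.1f} TB usable")
        except ValueError as err:
            print(f"{scheme}: {err}")
```

For our cluster, this reports 40.0TB for a two-way mirror and 20.0TB for a nested two-way mirror, and flags three-way mirroring as unavailable on two nodes.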

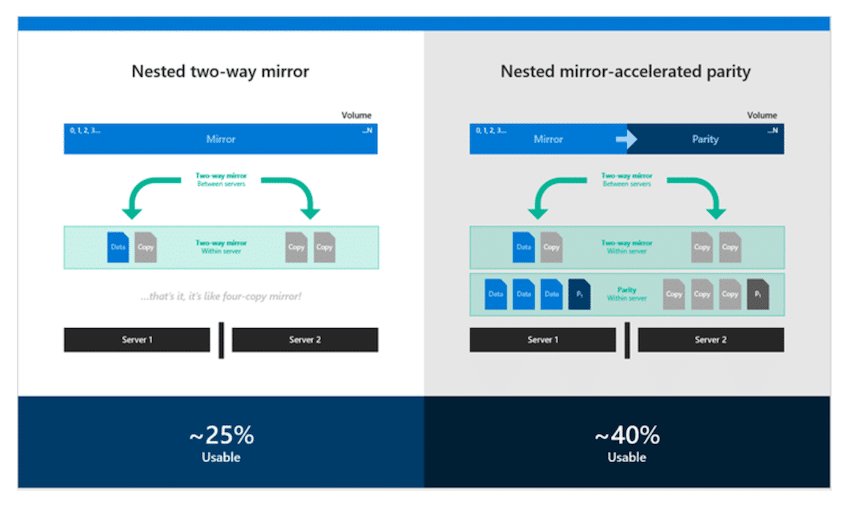

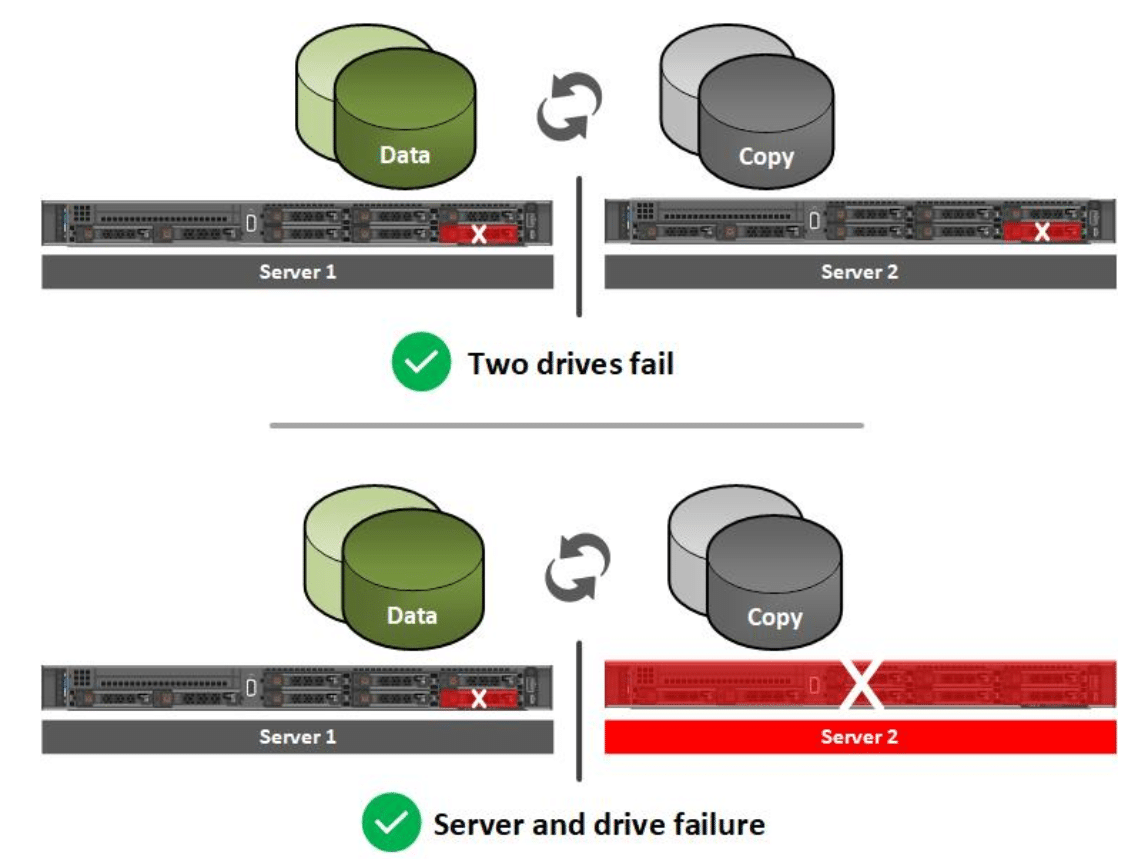

For 2NCs, Azure Stack HCI can also use nested resiliency: either nested two-way mirroring or nested mirror-accelerated parity. The first offers better performance, while the latter offers greater storage efficiency. Nested two-way mirroring keeps two copies of the data within each server and mirrors them across both nodes. Nested mirror-accelerated parity also mirrors data across the two servers, but within each server it uses parity (erasure coding) rather than mirroring for most data; recent writes go to a two-way mirror to ensure reliability. Because nested two-way mirroring writes four copies of the data to disk, its storage efficiency is 25%; nested mirror-accelerated parity, in comparison, achieves 33% to 40%.
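Because nested mirror-accelerated parity splits a volume between a nested mirror tier and a nested parity tier, its overall efficiency lands between the two tiers' values. The minimal sketch below computes that blended ratio under a simplified model: the mirror tier keeps four copies (25%), and the parity tier applies an in-server parity layout, whose efficiency is a hypothetical input, and then mirrors it to the second server, halving it. The function name and example values are our own assumptions, not Microsoft's sizing method; Microsoft's nested resiliency documentation is the place to look for real figures.

```python
def nested_map_efficiency(mirror_fraction: float, in_server_parity_eff: float) -> float:
    """Blended usable/raw ratio of a nested mirror-accelerated parity volume.

    mirror_fraction: share of usable capacity held in the nested mirror tier.
    in_server_parity_eff: hypothetical efficiency of the parity layout inside
        one server (e.g. 4 data + 1 parity = 0.8).
    """
    if not 0.0 <= mirror_fraction <= 1.0:
        raise ValueError("mirror_fraction must be between 0 and 1")
    if not 0.0 < in_server_parity_eff < 1.0:
        raise ValueError("in_server_parity_eff must be between 0 and 1")
    mirror_eff = 1 / 4                     # 2 copies per server x 2 servers
    parity_eff = in_server_parity_eff / 2  # parity layout mirrored to the other server
    # Raw capacity consumed per unit of usable capacity, weighted by tier share.
    raw_per_usable = mirror_fraction / mirror_eff + (1 - mirror_fraction) / parity_eff
    return 1 / raw_per_usable


if __name__ == "__main__":
    # Hypothetical mix: 20% of the volume's capacity in the mirror tier.
    for parity in (0.75, 0.8):
        eff = nested_map_efficiency(0.2, parity)
        print(f"in-server parity {parity:.0%}: blended efficiency {eff:.1%}")
```

With these hypothetical inputs, the blended efficiency comes out at 34.1% and 35.7%; shifting more capacity into the mirror tier pulls the result toward 25%.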

Both schemes are capable of simultaneously supporting a drive failure and server failure.

Neither nested resiliency scheme requires special RAID hardware.

A Microsoft 2NC requires a witness, which acts as a neutral third party that adds a vote to the surviving node to prevent a “split-brain” scenario. You can use either a file share (as we did in our testing) or the Azure cloud as the witness; Microsoft recommends the latter if both nodes in the cluster have a reliable internet connection. A cloud witness is an Azure blob storage object, while a file share witness is an SMB file share. In either case, the witness contains only the witness log file.
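The witness works because a cluster partition keeps running only while it can see a strict majority of the votes. The minimal sketch below models that rule for two nodes plus a witness. The voter names are hypothetical, and the sketch deliberately ignores Windows Server features such as dynamic quorum that adjust votes at run time, so treat it as an illustration of the majority rule, not of the full quorum algorithm.

```python
VOTERS = ("node-a", "node-b", "witness")  # hypothetical names for a 2NC plus witness


def has_quorum(visible: set[str], voters: tuple[str, ...] = VOTERS) -> bool:
    """True if the voters this partition can see form a strict majority."""
    votes = sum(1 for voter in voters if voter in visible)
    return 2 * votes > len(voters)


if __name__ == "__main__":
    # node-b fails: node-a still sees itself and the witness (2 of 3 votes).
    print("node-b down, node-a keeps running:", has_quorum({"node-a", "witness"}))
    # The link between nodes breaks and only node-a reaches the witness:
    # exactly one side keeps quorum, so there is no split brain.
    print("isolated node-b keeps running:", has_quorum({"node-b"}))
    # Without a witness, a partition leaves each node with 1 of 2 votes.
    print("no witness, node-a alone:", has_quorum({"node-a"}, ("node-a", "node-b")))
```

The last case shows why a 2NC needs the third vote: with only two voters, neither side of a partition can form a majority on its own.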

ROBO HCI Procurement and Deployment

As promised, we wanted to take a holistic look at what it takes to procure, deploy, and manage an AX node cluster in a ROBO situation.

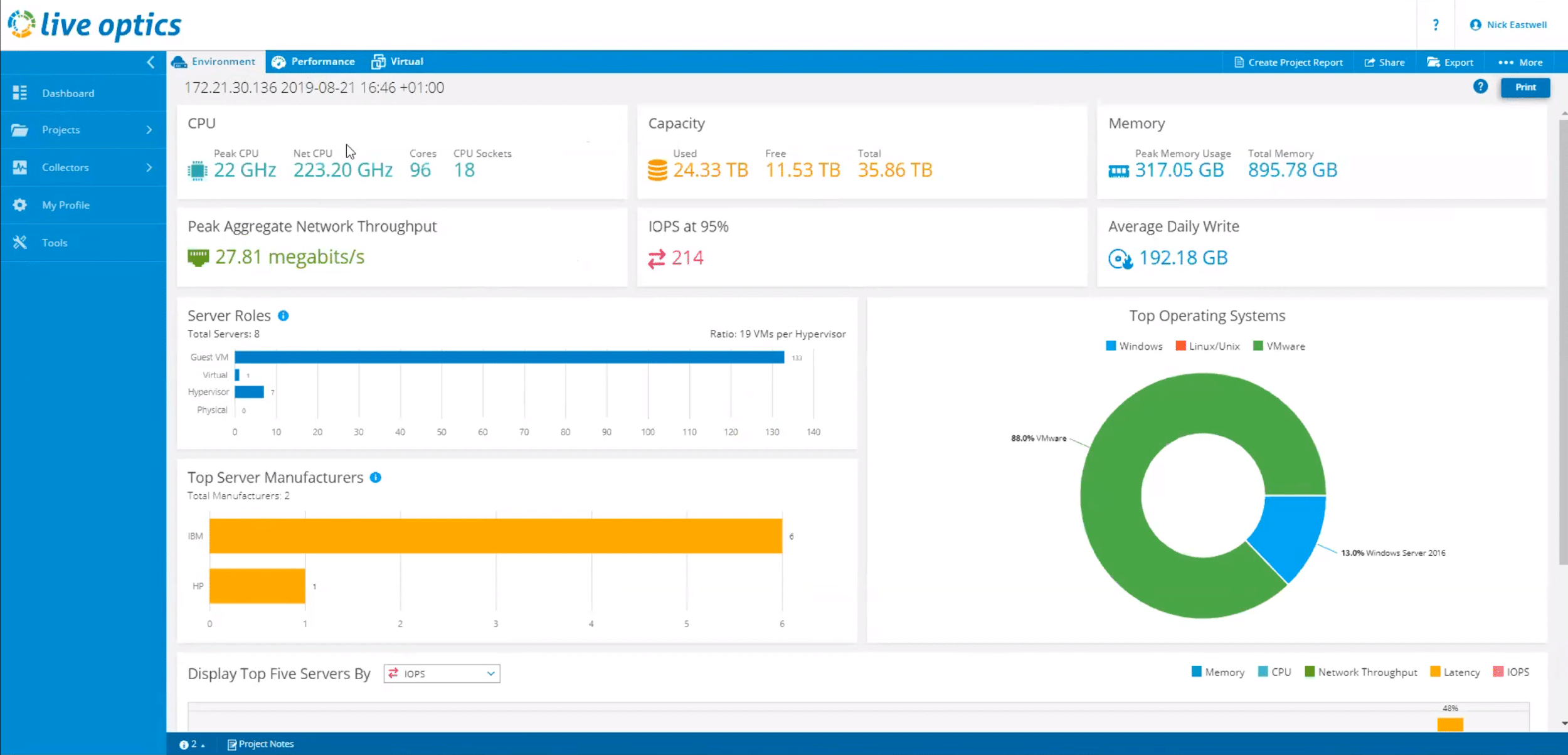

The first step when deploying a new system is sizing it. Dell Technologies makes it easy to calculate the equipment a deployment requires with Live Optics, a free online tool that collects data about an environment's storage, data protection, servers, and file systems. Although Live Optics can produce insights as little as 24 hours after deployment, the longer it runs, the better it understands the characteristics of your workloads. Live Optics can collect data from Microsoft Windows, VMware vCenter, and Linux/Unix servers.

The Live Optics dashboard compiles the collected CPU, memory, and storage usage data for your entire environment, giving you an accurate picture of the type of system you will need. You can also share this data with other users (e.g., coworkers or VARs) if you want them to make sizing recommendations.

Data collected by Live Optics feeds the Azure Stack HCI sizer tool, available through your Dell Technologies account team. The sizer tool has Dell engineering's best practices built in and produces configuration options that account not only for your current needs but also for your future growth.
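We don't know how the sizer tool works internally, so the following is only a generic illustration of growth-aware sizing under our own assumptions: size to the 95th percentile of observed usage (nearest-rank method), then compound an assumed annual growth rate. The function names, the percentile choice, and the sample numbers are all hypothetical.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    if not samples or not 0 < pct <= 100:
        raise ValueError("need samples and 0 < pct <= 100")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def projected_need(samples: list[float], years: int, annual_growth: float,
                   pct: float = 95.0) -> float:
    """Observed pct-th percentile usage, compounded by annual growth."""
    return percentile(samples, pct) * (1 + annual_growth) ** years


if __name__ == "__main__":
    # Hypothetical daily memory-usage samples (GB) from a collection period.
    memory_gb = [210, 220, 230, 250, 300]
    need = projected_need(memory_gb, years=3, annual_growth=0.20)
    print(f"size for about {need:.0f} GB of memory in three years")
```

Sizing to a high percentile instead of the average keeps routine peaks from being undersized, while the growth term is where a real sizing exercise would plug in the business's own forecast.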

One of the issues with ROBO environments is finding local IT talent to set them up and configure them. One way to address this is Dell EMC ProDeploy Services, which helps organizations speed up deployment to remote sites so they are online and adding value right away. Alternatively, if you have local resources and want to deploy the system yourself, Dell provides documentation and scripts that walk you through the process.

Supporting a system is one of the biggest headaches for any organization, and much of the hassle of supporting complex systems comes from the multiple hardware and software vendors involved. For instance, one vendor may supply the servers and storage, another the network switches, and a third the operating system. Dell EMC ProSupport streamlines this process with dedicated support staff for its HCI solutions. These support engineers are trained on both the hardware and software of a Dell Azure Stack HCI system, and if needed, they know the right people to escalate issues to.

We had the opportunity to use the Dell Technologies HCI dedicated support staff when we inadvertently misconfigured our system while installing it. The support engineer we worked with was very knowledgeable and helped us unravel the mess we had gotten ourselves into.

AX Node Daily Management

In a perfect world, ROBO HCI deployments would require no management at all. That's not reality, though, and Dell Technologies and Microsoft offer the next best thing. When systems sit in a remote location with little or no local IT support, it is important to have the right tools for system maintenance. Dell Technologies provides them through Windows Admin Center (WAC) plus its own extension, Dell EMC OpenManage Integration with Microsoft Windows Admin Center.

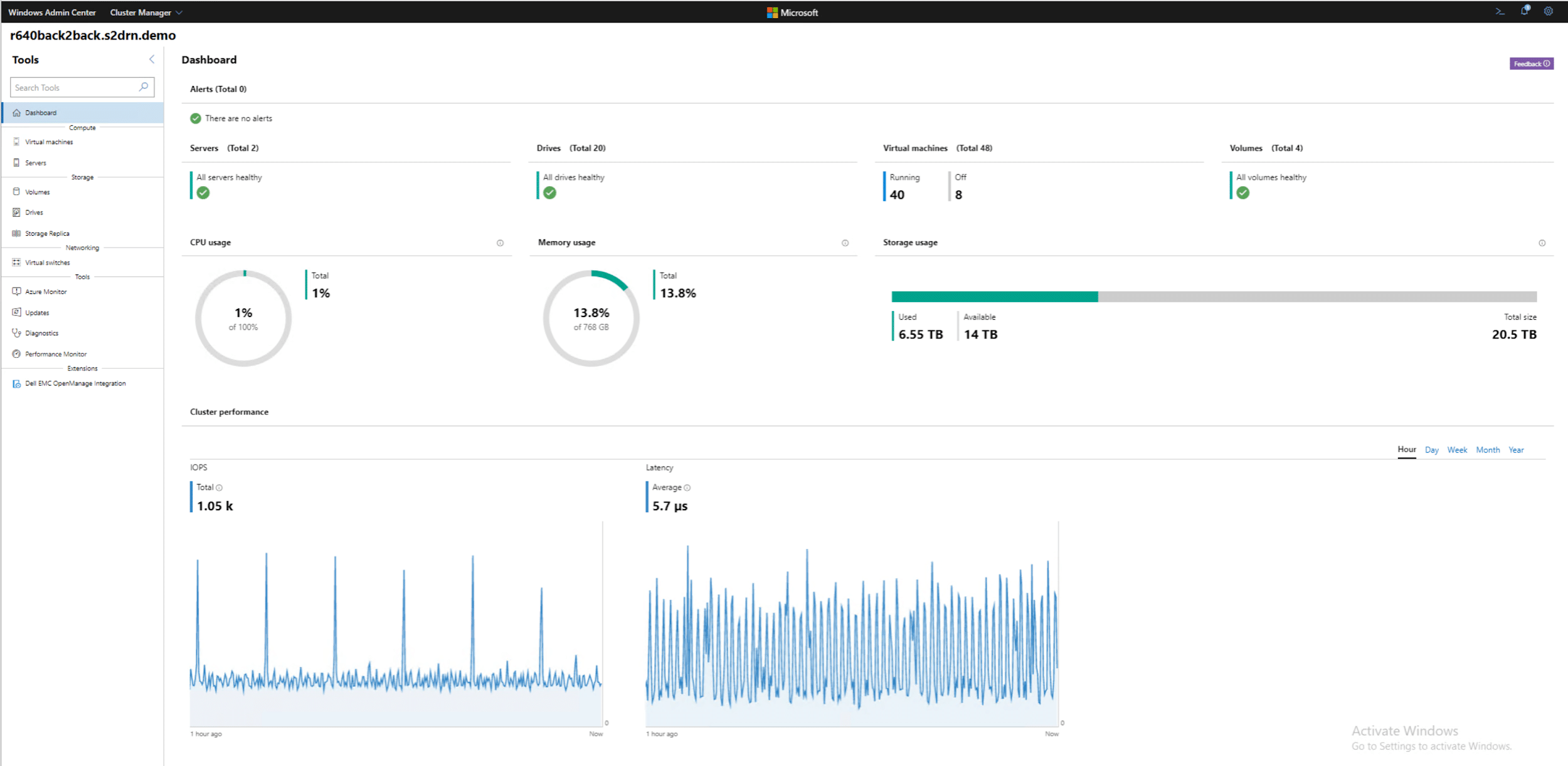

WAC is a browser-based tool for managing Windows 10 and Windows Server. It is installed on a client system and uses remote PowerShell and Windows Management Instrumentation (WMI) over Windows Remote Management (WinRM) to monitor and manage Windows systems as well as Azure Stack HCI clusters.

WAC’s overview pane gives a summary of a system’s resource utilization and tools for managing a system’s certificates and devices. WAC also allows you to view events and processes, install roles and features, and manage local users and groups, firewalls, services, and storage.

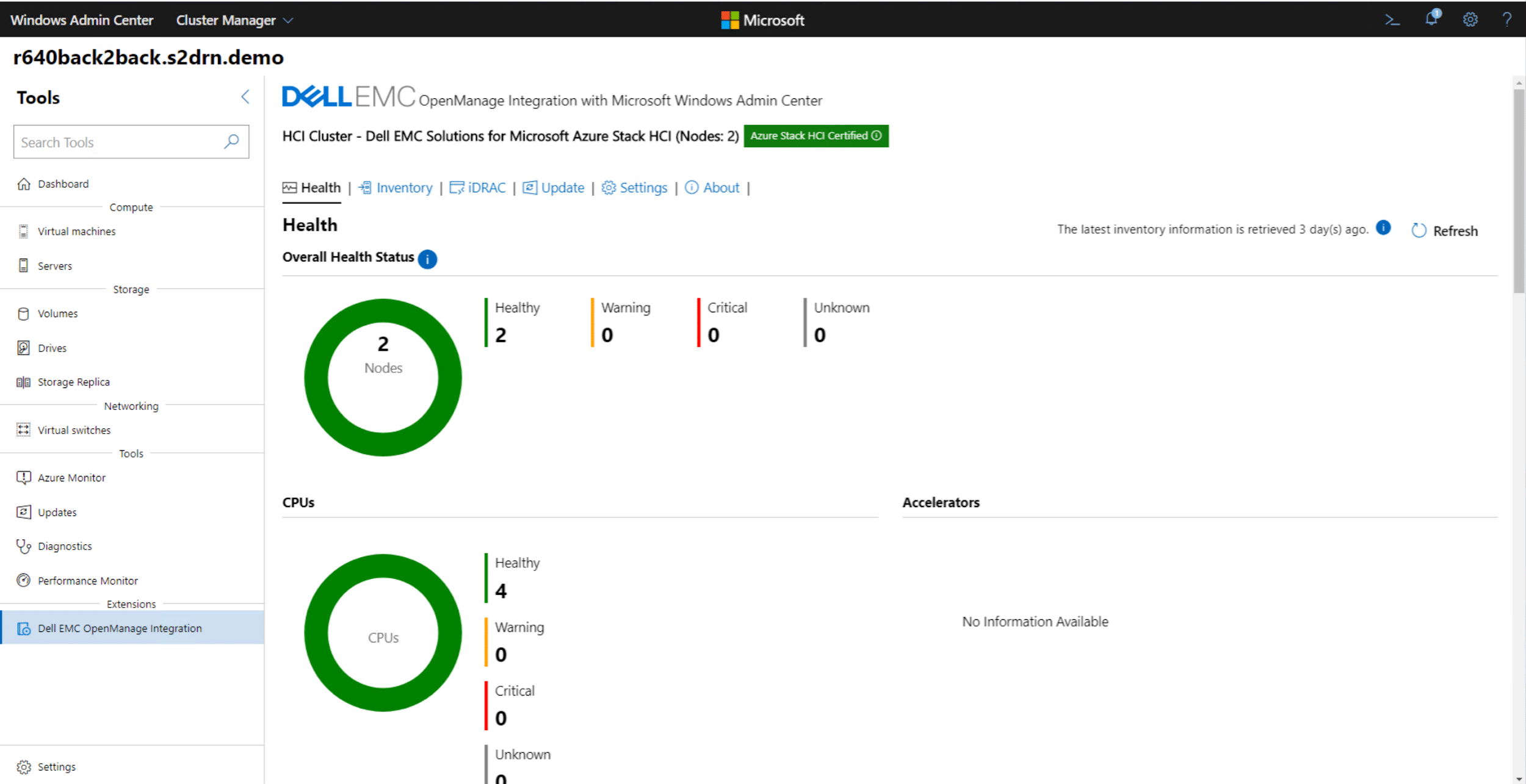

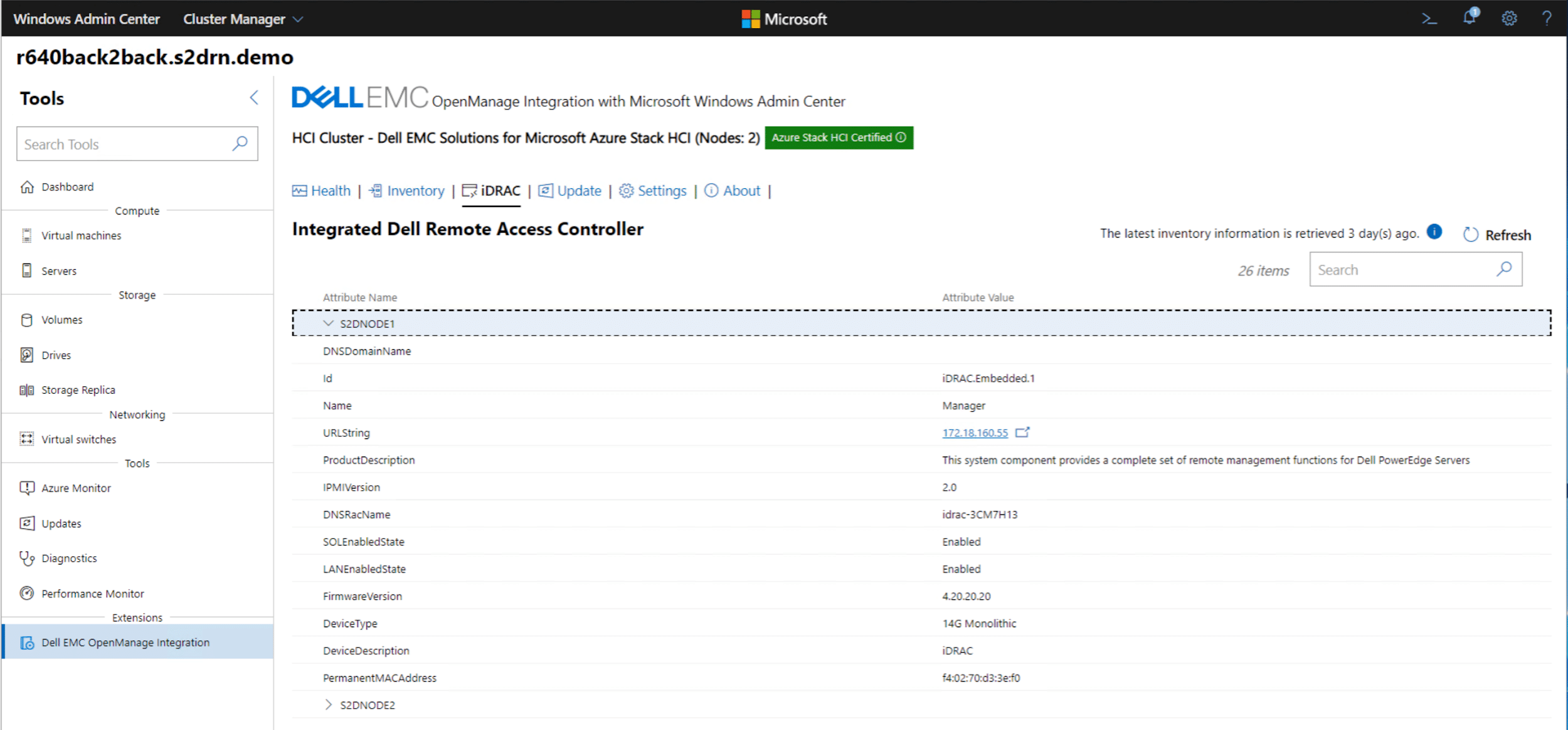

Dell Technologies took advantage of WAC's extensibility to create Dell EMC OpenManage Integration with Microsoft Windows Admin Center (OMIMSWAC). It is designed to simplify deep hardware monitoring and inventory, as well as the orchestration of BIOS, firmware, and driver updates. OMIMSWAC uses the Cluster-Aware Updating feature of Windows Server 2019 to update the AX nodes and the Azure Stack HCI cluster. To launch OMIMSWAC, click Dell EMC OpenManage Integration on the WAC ribbon bar.

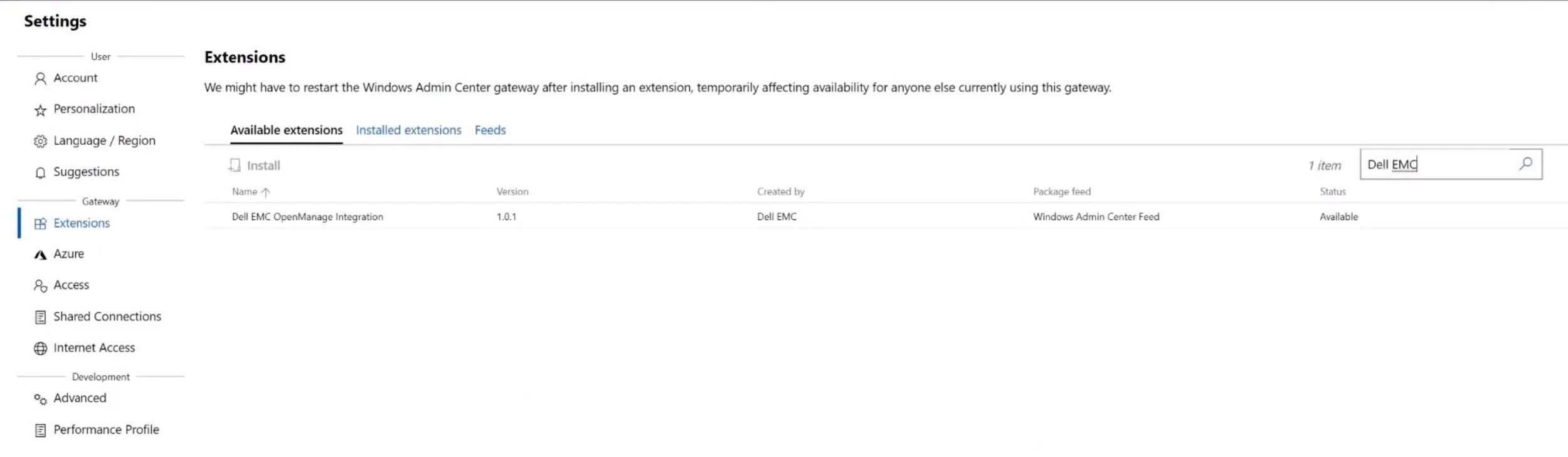

We added OMIMSWAC to our system by launching WAC, clicking Settings and then Extensions, entering Dell EMC in the search box, selecting Dell EMC OpenManage Integration, and clicking Install.

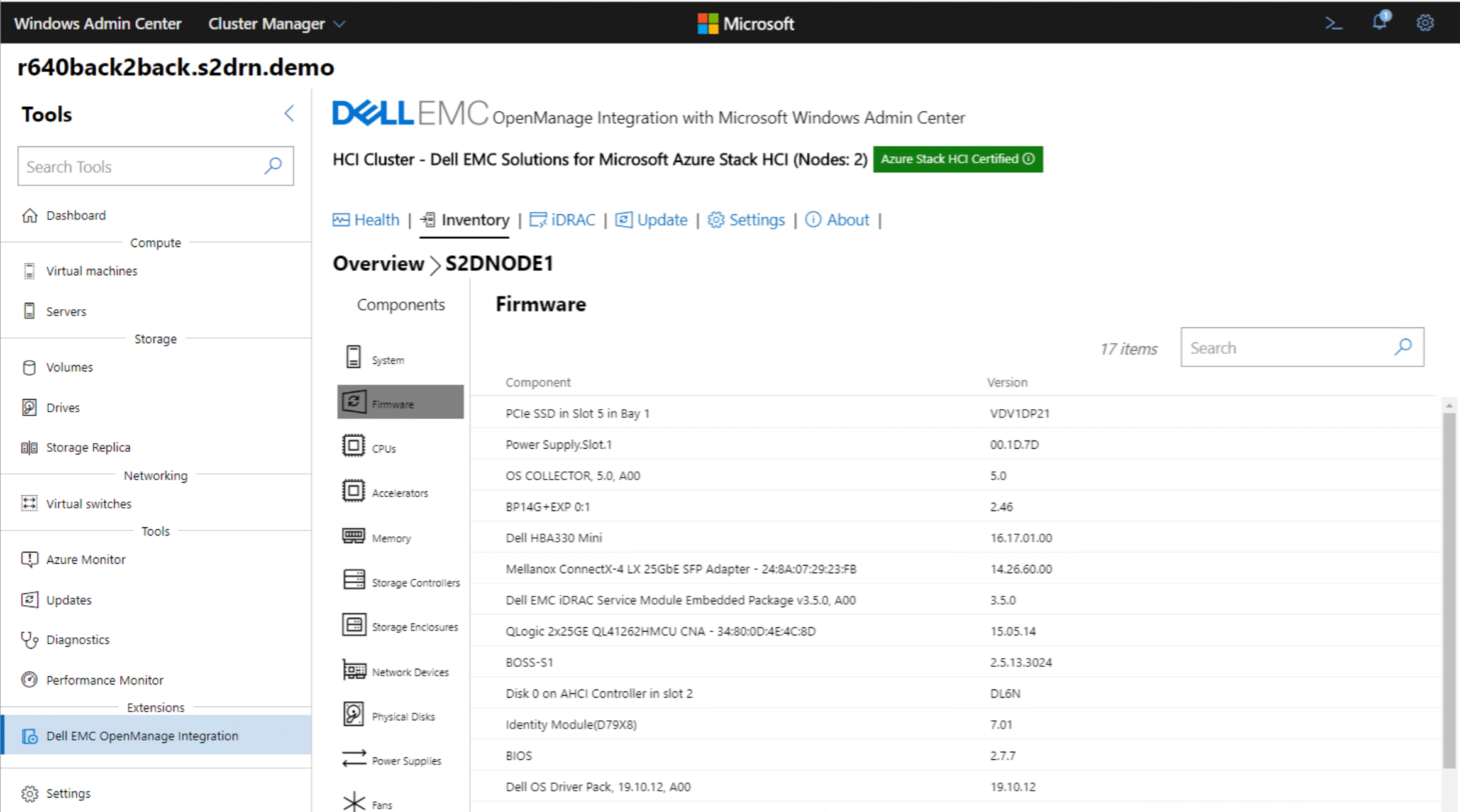

Using OMIMSWAC to look at our cluster, we could see the health of the system and drill down into the hardware far enough to see an inventory of its components and the firmware versions they were running.

You can even use OMIMSWAC to launch the iDRAC console for out-of-band management of the AX nodes.

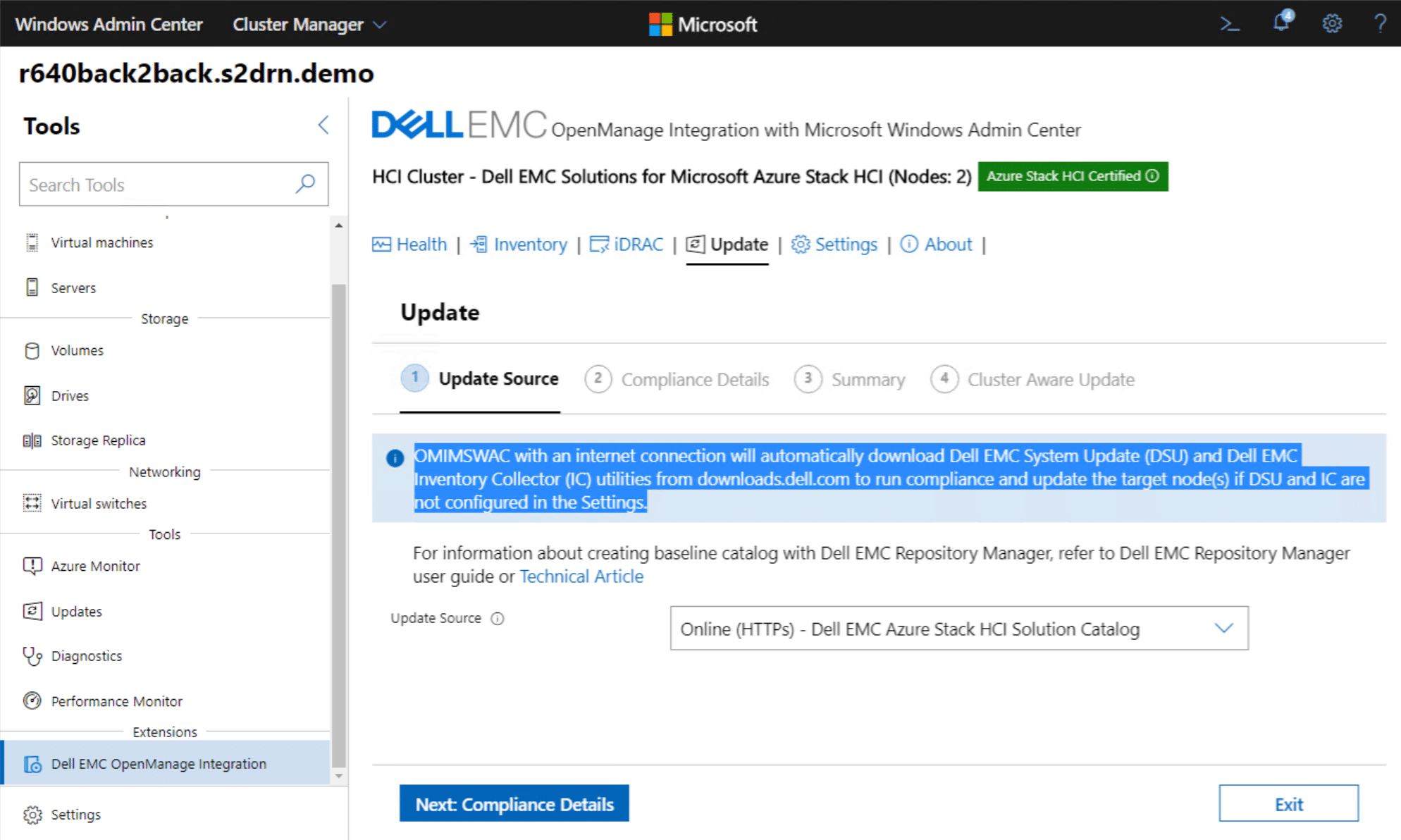

Once you have discovered the cluster, you can use OMIMSWAC to view a cluster-level compliance report for its nodes. If Dell EMC System Update (DSU) and Dell EMC Inventory Collector (IC) are not configured in Settings, and the system running OMIMSWAC has an internet connection, OMIMSWAC automatically downloads these utilities from downloads.dell.com and uses them to run the compliance check and update the target node(s).
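Conceptually, a compliance report compares each component's installed version with the version in a baseline catalog. The sketch below shows that comparison under our own assumptions. It is not how DSU or IC work internally, the component names and version strings are hypothetical, and it assumes purely numeric dotted versions (real Dell version strings can take other forms). Parsing versions into integer tuples matters: as plain strings, "1.10.0" would sort before "1.2.0".

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Turn a numeric dotted version such as "1.10.0" into (1, 10, 0)."""
    return tuple(int(part) for part in version.split("."))


def compliance_report(installed: dict[str, str], baseline: dict[str, str]) -> dict[str, str]:
    """Classify each baseline component as compliant, outdated, or missing."""
    report: dict[str, str] = {}
    for component, target in baseline.items():
        current = installed.get(component)
        if current is None:
            report[component] = "missing"
        elif parse_version(current) < parse_version(target):
            report[component] = f"upgrade {current} -> {target}"
        else:
            report[component] = "compliant"
    return report


if __name__ == "__main__":
    # Hypothetical inventory for one node and a hypothetical baseline catalog.
    node_inventory = {"BIOS": "1.2.0", "NIC firmware": "3.1.0"}
    catalog = {"BIOS": "1.10.0", "NIC firmware": "3.1.0", "HBA firmware": "2.0.0"}
    for component, status in compliance_report(node_inventory, catalog).items():
        print(f"{component}: {status}")
```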

OMIMSWAC really shines at routine tasks such as updates. Not only does it automatically download the needed Dell Update Packages (DUPs), it also performs a rolling update of the cluster to eliminate downtime.
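A rolling update keeps workloads online by servicing one node at a time: drain its VMs to a peer, update and restart it, then move the VMs back before touching the next node. The simplified simulation below illustrates that ordering. In practice, OMIMSWAC drives this through Windows Server Cluster-Aware Updating, with VMs live-migrated during the drain; the `Node` class and `rolling_update` function are hypothetical stand-ins, not part of any Dell or Microsoft API, and the model ignores memory headroom, health checks, and storage resync.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical stand-in for a cluster node; not a real OMIMSWAC/CAU object."""
    name: str
    firmware: str
    running_vms: list[str] = field(default_factory=list)


def rolling_update(nodes: list[Node], target: str) -> list[str]:
    """Update nodes one at a time so every VM stays online on some node."""
    log: list[str] = []
    for node in nodes:
        if node.firmware == target:
            log.append(f"{node.name}: already at {target}")
            continue
        peers = [n for n in nodes if n is not node]
        if not peers:
            raise RuntimeError("a rolling update needs at least two nodes")
        peer = peers[0]
        # 1. Drain: move this node's VMs to a peer (live migration in practice).
        moved = list(node.running_vms)
        peer.running_vms.extend(moved)
        node.running_vms.clear()
        log.append(f"{node.name}: drained {len(moved)} VM(s) to {peer.name}")
        # 2. Update and restart the now-idle node.
        node.firmware = target
        log.append(f"{node.name}: updated to {target} and restarted")
        # 3. Fail back: return the moved VMs before touching the next node.
        for vm in moved:
            peer.running_vms.remove(vm)
        node.running_vms.extend(moved)
        log.append(f"{node.name}: failed back {len(moved)} VM(s)")
    return log


if __name__ == "__main__":
    a = Node("ax-1", "1.0", ["sql1", "sql2"])
    b = Node("ax-2", "1.0", ["sql3", "sql4"])
    for line in rolling_update([a, b], "1.1"):
        print(line)
```

In a 2NC, step 1 means the surviving node briefly hosts every VM, which is why single-node memory headroom matters for updates as well as for failures.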

AX Node Testing

As we evaluated the Dell EMC 2-node HCI cluster, we wanted to examine both its performance and its application availability across different failure scenarios. To that end, we configured a SQL Server performance test of up to 8 SQL Server 2019 VMs running Windows Server 2019, balanced across our 2-node cluster. Each SQL Server instance was given a 1,500 scale TPC-C database, with database and log files totaling 350GB per instance. This gave us a database storage footprint ranging from 1.4TB with 4VMs up to 2.8TB with 8VMs. We used Quest's Benchmark Factory as the workload generator, with 15,000 virtual users interacting with each VM.

Each VM was allocated 8 virtual CPUs and 60GB of RAM along with its storage footprint. Because each host in our cluster has 384GB of RAM, we lowered the VM RAM allocation to 40GB in our failed-node scenario so that all 8VMs would fit on a single host.
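The arithmetic behind that RAM change is easy to check. The short sketch below uses the figures from our setup (384GB per host, 60GB or 40GB per VM, 350GB of database and log files per VM). The helper names are hypothetical, and the check ignores the memory the host OS and Hyper-V need for themselves, so real headroom is somewhat smaller.

```python
HOST_RAM_GB = 384   # RAM per host in our cluster
DB_PER_VM_GB = 350  # database + log files per 1,500 scale TPC-C instance


def vms_fit_on_host(vm_count: int, ram_per_vm_gb: int, host_ram_gb: int = HOST_RAM_GB) -> bool:
    """True if the VMs' combined RAM allocation fits in one host's memory.

    Ignores host OS and hypervisor overhead.
    """
    return vm_count * ram_per_vm_gb <= host_ram_gb


def db_footprint_tb(vm_count: int) -> float:
    """Total database footprint in TB (decimal units)."""
    return vm_count * DB_PER_VM_GB / 1000


if __name__ == "__main__":
    print("4 VMs x 60GB on one host:", vms_fit_on_host(4, 60))  # 240GB
    print("8 VMs x 60GB on one host:", vms_fit_on_host(8, 60))  # 480GB
    print("8 VMs x 40GB on one host:", vms_fit_on_host(8, 40))  # 320GB
    print(f"footprint: {db_footprint_tb(4):.1f}TB (4 VMs), {db_footprint_tb(8):.1f}TB (8 VMs)")
```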

Our four database testing scenarios were:

- Working Cluster: 4VMs total, 2VMs per node

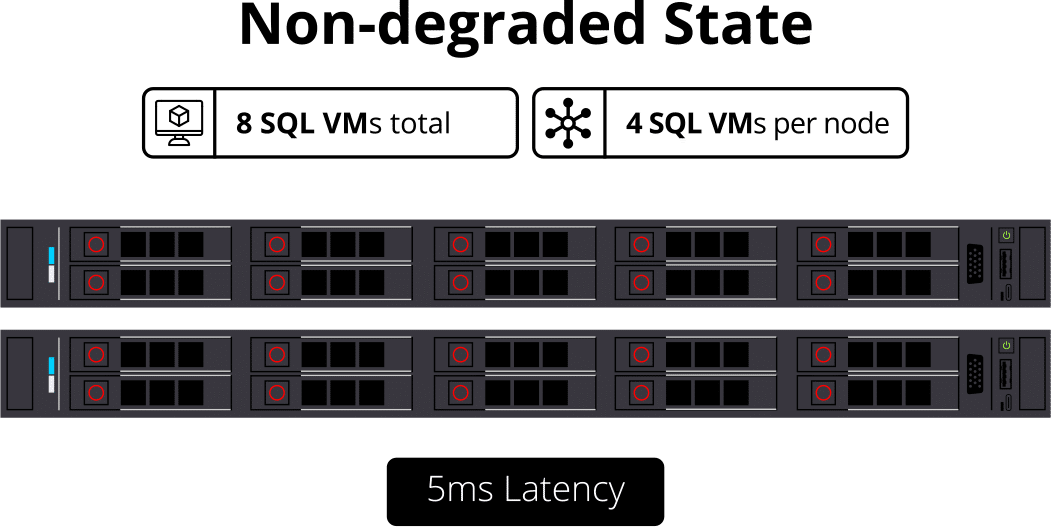

- Working Cluster: 8VMs total, 4VMs per node

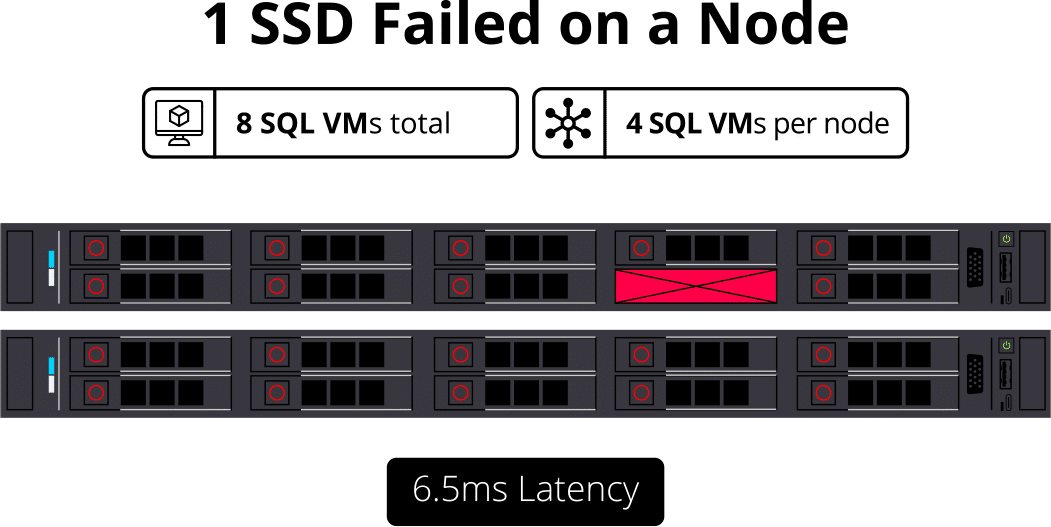

- 1 SSD Failed on a Node: 8VMs total, 4VMs per node

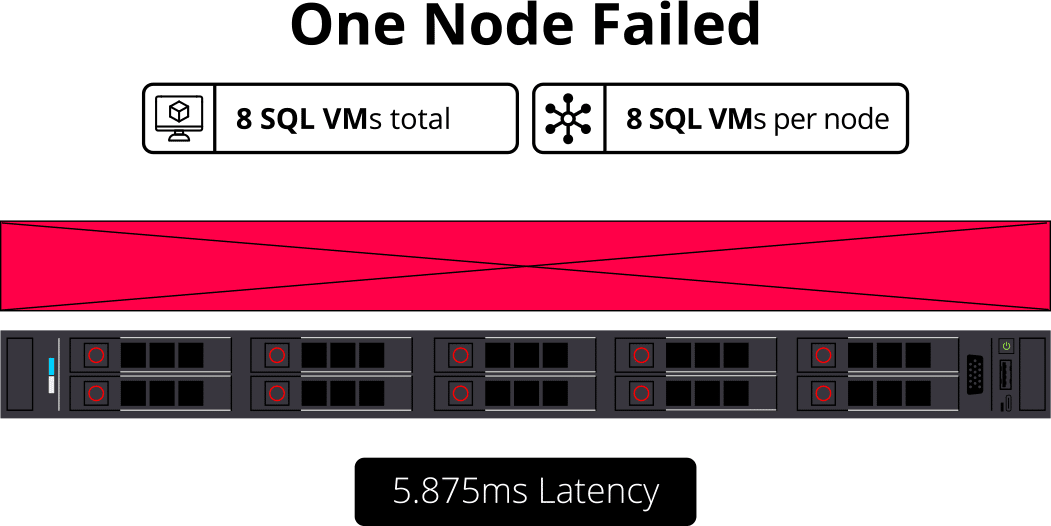

- One Node Failed: 8VMs total, all 8VMs on the surviving node

In our healthy-cluster test with 8VMs, 4 on each node, average latency measured 5ms.

While strong performance and low database latency are great, knowing how a platform performs under less-than-optimal conditions is just as important. Our first degraded scenario covered how the platform would respond to a failed SSD. We kicked off the workload and, right after it stabilized, pulled a single SSD from one node. In that situation, performance slowed slightly, with average latency rising from 5ms under normal conditions to 6.5ms.

Our second degraded scenario covered how the cluster would operate if a node were taken offline for maintenance or failed. In either case, everything falls back to a single node, although there is one subtle advantage: no traffic passes over the backend network. In this situation, we measured an average latency of 5.875ms.
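To put the degraded-state results in proportion, the sketch below converts each average latency into a percentage increase over the 5ms healthy 8VM baseline. The numbers are our measured averages; the helper function is only an illustration.

```python
BASELINE_MS = 5.0  # healthy cluster, 8 VMs, 4 per node

DEGRADED_MS = {
    "one SSD failed": 6.5,
    "one node offline": 5.875,
}


def pct_increase(baseline_ms: float, degraded_ms: float) -> float:
    """Relative latency increase, in percent."""
    return (degraded_ms - baseline_ms) / baseline_ms * 100


if __name__ == "__main__":
    for scenario, latency in DEGRADED_MS.items():
        print(f"{scenario}: {latency}ms, +{pct_increase(BASELINE_MS, latency):.1f}% vs baseline")
```

A failed SSD raised average latency by 30%, and running the entire workload on one node raised it by 17.5%.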

Final Thoughts

We are seeing more and more interest in 2NCs for ROBO applications. Companies are looking for systems that are reasonably priced, rock-solid, and require only minimal interaction with IT staff, since IT access to these systems can be problematic. Dell EMC Solutions for Azure Stack HCI checks off all these requirements.

We looked at what it takes to correctly size, acquire, and set up a 2NC ROBO HCI system, and we were impressed with how easy Dell Technologies made it. After the initial setup, we looked at what is required to maintain the system and were again impressed with how easy WAC made the process. What really blew us away, however, was Dell Technologies' OMIMSWAC integration, which performed a rolling update of our system, covering everything from the firmware up, with little operator interaction. This is a fundamental differentiator for Dell Technologies, as this depth of integration is unique among Azure Stack HCI providers.

When we ran our benchmarks, we found strong application workload performance under optimal conditions. Our SQL Server TPC-C workloads measured an average latency of 2.25ms with four 1,500 scale VMs evenly placed on the cluster and 5ms when the workload was increased to eight VMs. Even more impressive was how well the cluster performed with a failed SSD or only one node operational. With a failed SSD, latency for our 8VM workload increased from 5ms to 6.5ms; with a node completely offline, latency rose only to 5.875ms.

To summarize our testing, we found that this system could easily handle the load ROBO deployments would put on it. This is important: organizations planning these deployments should worry much less about the performance capabilities of a system like this and more about long-term operations. On the first point, Dell Technologies has engineered these AX nodes to the level where performance is largely irrelevant; our testing showed that even aggressive SQL Server workloads were absorbed without issue.

If performance is effectively solved for ROBO HCI use cases, organizations then need to turn to day 2 operations. Here the Dell EMC AX nodes really start to pull away: their integration with WAC for cluster updates is critical from an ongoing management perspective, and Dell Technologies is a clear leader on this front when it comes to Azure Stack HCI. Lastly, organizations should look at system resilience. With only two nodes and, in many cases, no immediate on-site support, uptime is business-critical. In our testing of several degraded states, the AX nodes soldiered on without interruption, meaning the office would remain online with only a modest hit to application performance. There are a number of ways to deploy Azure Stack HCI, but there is no more comprehensive solution than what Dell Technologies brings to the table with AX nodes.

This report is sponsored by Dell Technologies. All views and opinions expressed in this report are based on our unbiased view of the product(s) under consideration.