The rapid progression of Artificial Intelligence in 2023 is unparalleled, and at the center of all this fanfare (drumroll please) are Generative AI models, with a prime example being the Large Language Model (LLM), like ChatGPT. These LLMs have garnered significant attention for their capability to generate human-like text by providing responses, generating content, and aiding in a wide range of tasks. However, like every technological marvel, LLMs are not devoid of imperfections. Occasionally, these models display behavior that appears nonsensical or unrelated to the context. In the lab, we call this phenomenon the ‘Quinn Effect.’

The rapid progression of Artificial Intelligence in 2023 is unparalleled, and at the center of all this fanfare (drumroll please) are Generative AI models, with a prime example being the Large Language Model (LLM), like ChatGPT. These LLMs have garnered significant attention for their capability to generate human-like text by providing responses, generating content, and aiding in a wide range of tasks. However, like every technological marvel, LLMs are not devoid of imperfections. Occasionally, these models display behavior that appears nonsensical or unrelated to the context. In the lab, we call this phenomenon the ‘Quinn Effect.’

The Quinn Effect personafied by generative AI

Defining the Quinn Effect

The Quinn Effect can be understood as the apparent derailment of a generative AI from its intended trajectory, resulting in an output that is either irrelevant, confusing, or even downright bizarre. It might manifest as a simple error in response or as a stream of inappropriate thoughts.

Causes Behind the Quinn Effect

To fully grasp why the Quinn Effect occurs, we must venture into the world of generative AI architectures and training data. The Quinn Effect can be caused by several missteps, including:

- Ambiguity in Input: LLMs aim to predict the next word in a sequence based on patterns from vast amounts of data. If a query is ambiguous or unclear, the model might produce a nonsensical answer.

- Overfitting: Occurs when any AI model is too closely tailored to its training data. In such cases, a model might produce results consistent with minute details from its training set but not generally logical or applicable.

- Lack of Context: Unlike humans, LLMs don’t have a continual memory or understanding of broader contexts. If a conversation takes a sudden turn, the model might lose the thread, leading to outputs that appear off the rails.

- Data Biases: LLMs, including GPT architectures, learn from vast datasets. AI could replicate these patterns if these datasets contain biases or nonsensical data.

- Language Complexity: Natural language is intricate and laden with nuances. Sometimes, the model might grapple with homonyms, idioms, or phrases with multiple interpretations, leading to unexpected results.

Mitigating the Quinn Effect

While complete elimination of the Quinn Effect is a tall order, steps can be taken to mitigate its occurrence, including:

- Regular Updates and Refinement: Continual training and refining the model on diverse and updated datasets can reduce inaccuracies.

- Feedback Loops: Implementing a system where user feedback helps identify and rectify instances where the model goes off the rails can be beneficial.

- Improved Contextual Awareness: Future iterations of models might benefit from mechanisms allowing better context retention over conversation turns.

- Diverse Dataset Sources: Ensuring the training data is comprehensive, covering a wide range of topics, tones, and nuances, can assist in enhancing the robustness of the model.

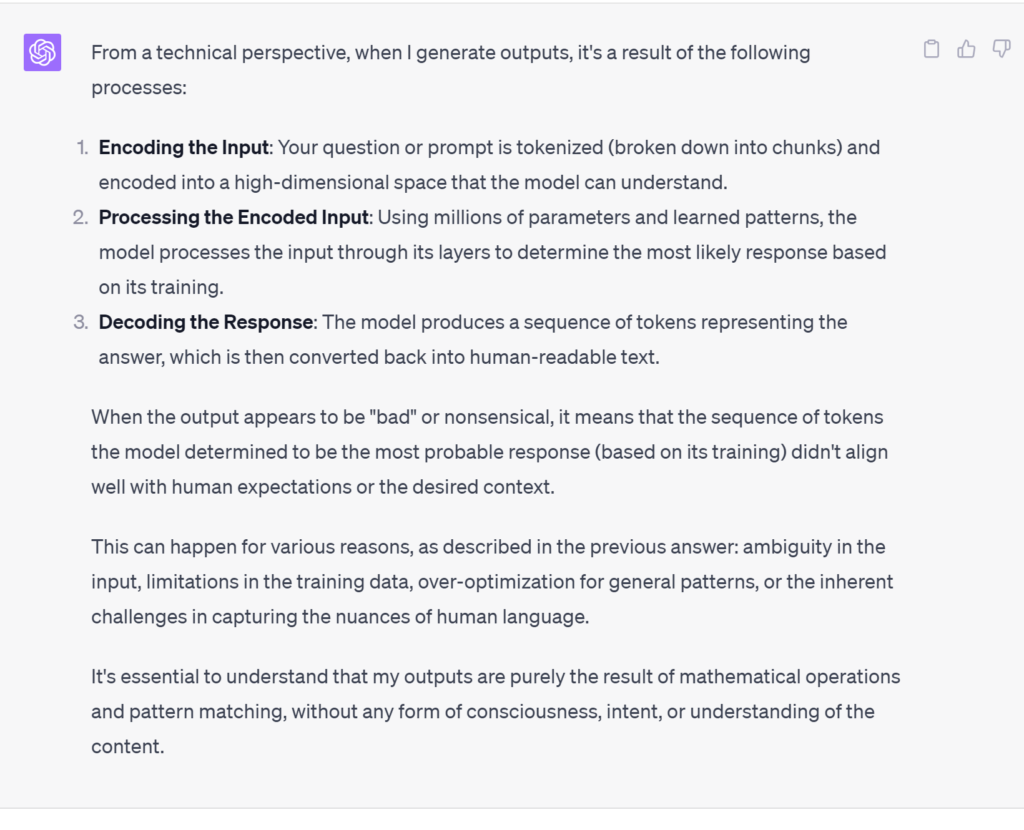

Enough Speculation, What Does The Model “Think”

We decided to ask ChatGPT-4 for its perspective on what happens.

What’s Next

The Quinn Effect sheds light on the imperfections inherent in even the most advanced AI models. Recognizing these limitations is the first step toward understanding, mitigating, and potentially harnessing those flaws. As the field of AI continues its meteoric growth, awareness of such phenomena becomes essential for users and developers alike, helping to bridge the gap between expectation and reality in the world of generative AI.

As we continue to evolve the complexity and number of parameters of the transformers, undoubtedly, there will be additional challenges such as this to overcome. However, it is also important to note that NVIDIA has the SDKs to deal with this. In the next piece, we will look at finetuning a model and then applying the guardrails to it in a pseudo-production environment.

Engage with StorageReview

Newsletter | YouTube | Podcast iTunes/Spotify | Instagram | Twitter | TikTok | RSS Feed