To evaluate enterprise hardware, having the right testing environment is vital to producing industry-accepted performance and usability metrics. We’ve collaborated with many industry-leading vendors across multiple market segments, including compute, storage, networking and infrastructure, to offer informative evaluations of newly released and next-generation hardware. Working with StorageReview, these companies provide input and feedback on testing methodologies and donate gear to our lab to be incorporated into our diverse testing environment. To that end, StorageReview has built up one of the largest independent test labs, putting hardware through rigorous real-world testing scenarios to give buyers the information they need to make an informed buying decision.

As we’ve grown over the years, so have our lab and the hardware needed to keep our day-to-day operations running smoothly. Recently we completed an overhaul of our existing test lab, moving it into a larger location with increased power capabilities. This new lab offers many of the same hardware elements we’ve leveraged in the past, although in much greater quantities. Our electrical capabilities have increased substantially, as have our power conditioning and rack space. Working closely with Eaton Electrical, we’ve expanded our primary lab from three to five racks, migrated to a new 12kW Eaton BladeUPS battery backup and streamlined the power distribution with Eaton metered and managed ePDUs. Our new lab design also offers a friendlier “urban” atmosphere geared towards on-site visits, as well as efficient hardware installation access to reduce the time from box to rack in our evaluation process.

To complement our new lab, Dell EMC has been a fantastic partner in helping StorageReview expand with new Purley-class servers to leverage in performance evaluations. With the addition of 12 top-spec Dell EMC PowerEdge R740xd servers, our lab is able to keep the newest storage platforms the bottleneck in testing, instead of our compute hardware. As with previous server refreshes, we’ve worked closely with component vendors as well, including Intel, Mellanox, Emulex, Netgear, Brocade, Avago, Micron, Toshiba and others, to customize these servers for their respective testing needs. Additionally, Lenovo, HP and Supermicro have supplied Haswell, Ivy Bridge and Sandy Bridge-class servers to supplement other areas of our lab, offering a diverse makeup of platforms to choose from.

While the list of hardware in the StorageReview Enterprise Test Lab is constantly growing and adapting to our day-to-day needs, we do our best to offer a list of the devices that are currently racked or on hand. This hardware can also be leveraged for special projects or added to, depending on the demands of a product evaluation.

- Enterprise Storage Performance Benchmarks

- Virtual Workstation Testlab

- Rack Hardware

- Power / Temperature Monitoring

- Eaton BladeUPS UPS w/ Rack Power Module (Primary – 12,000W)

- Eaton 9PX6K UPS (Secondary – 5400W)

- Eaton Advanced Monitored ePDU

- Eaton G3 Metered Input ePDU x 3

- Eaton G3 Managed ePDU

- Eaton Environmental Rack Monitor (ERM)

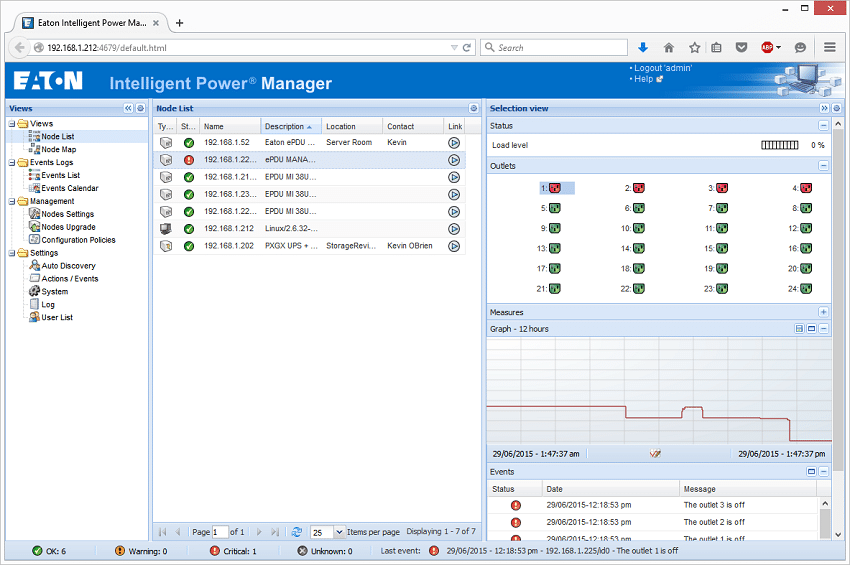

- Eaton Intelligent Power Manager

- Cooling

- TrippLite SRCOOL12K (12,000 BTU) x 2

- TrippLite SRCOOL24K (24,000 BTU)

- Custom Fresh Air Cooling (Primary Lab Cooling)

- Networking

- Dell Networking Z9100 x 2

- Dell Networking S4048 x 2

- Netgear ProSafe GSM7352S 48-Port 1GbE Switch

- Netgear ProSafe M7100 24-Port 10GbE Switch

- Netgear ProSafe XS712T 12-port 10GbE Switch

- Netgear ProSafe GS752TS 52-Port 1GbE Switch

- Netgear ProSafe M4100 POE+ 24-Port 1GbE Switch

- Netgear ProSafe GS752TXS 52-Port 1GbE Switch x 2

- Brocade 6510 16Gb Fibre Channel Switch x 2

- Broadcom G620 32Gb Fibre Channel Switch x 2

- Compute

- Dell EMC PowerEdge R740xd (x12)

- Dell PowerEdge R730 G13 (x12)

- HP Z620 WorkStation

- HP Z640 WorkStation

- Lenovo ThnkSystem SR850

- Lenovo ThinkSystem SR635

- Enterprise NAS/SAN

- NetApp AFF A200

- DotHill AssuredSAN Ultra48

- DotHill AssuredSAN 4824

- ExaGrid EX21000E

- Protective Storage

Rack Hardware

The largest components of the main StorageReview Test Lab are our five Eaton 42U S-Series racks. They allow us to put storage hardware in its native environment and have it located in close proximity to power, networking, and compute hardware. When looking at environmental needs, installing hardware in a rack-mount environment also helps with proper airflow and in keeping system and component temperatures down.

In our current layout, we assign our permanent compute infrastructure to the central racks and reserve the outer racks for hardware being tested, which minimizes disruption during the installation and removal of hardware through the review process. One rack is dedicated to the main power conditioning gear as well as more transient NAS platforms that don’t need to be formally racked for testing. This layout allows us to keep the network cabling for our multiple interconnect types, which takes up most of the space in the rear of all racks, neatly organized.

From the rear of each rack, you can see ePDUs mounted in their respective 0U locations, although we hide the primary power cabling in the raceways built into the top of each Eaton S-Series rack. The bulk of our hardware is connected through traditional input-metered ePDUs, while certain devices get outlet-level monitoring through our Eaton Advanced Monitored ePDU.

Lastly, we have one managed ePDU that feeds larger storage arrays and certain switches, so we can disconnect power remotely after testing or toggle components that need the occasional power cycle. With a strong focus on efficient power usage, to minimize both electrical costs and cooling requirements, power monitoring and management are a key part of our lab’s design.

For reliable day-to-day compute and storage operation, we regulate temperature in the lab with supplemental exhaust fans that move hot air out of the room, and we keep two portable chillers on hand for much higher thermal loads. As with any testing or development environment, as opposed to a traditional production environment, cooling demands are a fast-moving target, with electrical usage and its associated thermal output varying dramatically between tests.
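As a rough illustration of why thermal output tracks electrical usage, the sketch below converts a power draw in watts to heat in BTU/hr using the standard factor of about 3.412 BTU/hr per watt, then compares it with the nameplate ratings of the portable cooling units in the equipment list. It assumes that essentially all power drawn by the equipment ends up as heat in the room. The function names and the sample loads are hypothetical, not measurements from the lab.

```python
BTU_PER_HOUR_PER_WATT = 3.412142  # standard conversion: 1 W is about 3.412 BTU/hr


def watts_to_btu_per_hour(watts: float) -> float:
    """Heat output in BTU/hr for a given electrical load in watts."""
    return watts * BTU_PER_HOUR_PER_WATT


def cooling_headroom(load_watts: float, cooling_units_btu: list[float]) -> float:
    """Nameplate cooling capacity minus heat load, in BTU/hr (negative means a shortfall)."""
    return sum(cooling_units_btu) - watts_to_btu_per_hour(load_watts)


if __name__ == "__main__":
    # Nameplate ratings from the equipment list: two 12,000 BTU units and one 24,000 BTU unit.
    portable_units = [12_000, 12_000, 24_000]
    # Hypothetical electrical loads, up to the 12,000W rating of the primary UPS.
    for load in (3_000, 6_000, 12_000):
        heat = watts_to_btu_per_hour(load)
        spare = cooling_headroom(load, portable_units)
        print(f"{load:>6} W -> {heat:>9.0f} BTU/hr heat, {spare:>9.0f} BTU/hr headroom")
```

Nameplate figures overstate what a portable unit removes in practice, so this is only a first-pass sizing check.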

Power Infrastructure

At the component level, power consumption is continuously dropping as each new generation of product comes out, but at the datacenter level the total power required to run multiple switches, servers, SANs, and other required gear is constantly growing. The StorageReview Lab offers a wide range of utility options backed with three-phase power coming into our building.

To handle this massive power distribution on an outlet-by-outlet basis, we use Eaton ePDUs and battery backups. The bulk of our power is fed in through an Eaton BladeUPS battery backup, with an Eaton 9PX6K UPS on cold standby for maintenance and larger power loads. This setup ensures that no matter what outside conditions are present, short-term power disruptions don’t interfere with our day-to-day operations. We’ve also planned around future growth by leveraging the BladeUPS platform, which can easily scale to support additional modules.

With constant 24/7 power monitoring leveraging Eaton’s Intelligent Power Manager or IPM, we make sure tests are running smoothly, power conditions are clean, and that abnormal power conditions don’t affect our tests. With evaluations that take weeks or months and tests that span days individually, making sure everything is running without a blip is an important consideration when we review enterprise hardware.

At the rack level, we use a wide range of Eaton ePDUs in our lab. This list includes an Advanced Monitored ePDU, three G3 Metered Input ePDUs and one G3 Managed ePDU. Power is supplied through an Eaton Rack Power Module (RPM), which shares the output from our BladeUPS battery backup.

Each ePDU is connected through its own 24A L6-30R connection on the rear of the RPM, with multiple levels of power monitoring to appropriately balance the power load across each power phase.
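The 24A figure is consistent with the common practice of loading a 30A circuit to no more than 80% continuously. Below is a minimal sketch of the kind of headroom and balance check that this monitoring makes possible. The ePDU names, feed labels and current readings are hypothetical, and summing amps per feed is a simplification that ignores how line-to-line loads combine in a real three-phase system.

```python
from collections import defaultdict


def continuous_limit_amps(breaker_amps: float) -> float:
    """Continuous load limit using the common 80% derating rule."""
    return breaker_amps * 80 / 100


def per_feed_totals(readings):
    """Sum current per feed label from (name, feed, amps) tuples."""
    totals = defaultdict(float)
    for _name, feed, amps in readings:
        totals[feed] += amps
    return dict(totals)


def imbalance_ratio(totals):
    """Spread between the heaviest and lightest feed, relative to the mean."""
    values = list(totals.values())
    mean = sum(values) / len(values)
    return (max(values) - min(values)) / mean if mean else 0.0


if __name__ == "__main__":
    # Hypothetical readings for five ePDUs: (name, feed label, measured amps).
    readings = [
        ("epdu-1", "A", 11.5),
        ("epdu-2", "B", 9.0),
        ("epdu-3", "C", 12.5),
        ("epdu-4", "A", 4.0),
        ("epdu-5", "B", 6.0),
    ]
    limit = continuous_limit_amps(30)  # 30A circuit behind each L6-30R receptacle
    over_limit = [name for name, _feed, amps in readings if amps > limit]
    totals = per_feed_totals(readings)
    print(f"continuous limit per ePDU: {limit} A, over limit: {over_limit}")
    print(f"per-feed totals: {totals}, imbalance: {imbalance_ratio(totals):.2f}")
```

A lower imbalance ratio means the load is spread more evenly; moving a lightly loaded ePDU to the least-loaded feed is the usual first adjustment.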

Networking Infrastructure

The StorageReview Enterprise Test Lab supports all major interconnect standards including 1Gb, 10Gb and 40/56Gb Ethernet, 8Gb and 16Gb Fibre Channel, and 56Gb InfiniBand. These are supplied by partners that include Brocade, Emulex, Intel, Mellanox, Netgear and QLogic. This extensive networking infrastructure allows us to perform tests on every major storage platform while ensuring it has the fastest interface supported.

To interface with equipment over SSH, RDP, or iKVM, we use a 1GbE infrastructure split into multiple VLANs to separate normal office and lab communications. This includes a Class C network for our office, as well as a Class B network for lab tests, which gives us over 65,000 addressable hosts. Our 1GbE infrastructure is supplied by Netgear, with a broad range scaling from a fully managed L3 core switch to Smart switches for branch segments of our network. At the heart of this network is a Netgear UTM150 Firewall, which manages incoming and outgoing traffic, load balances across multiple Internet connections and provides VPN access to the lab. For Internet access, we have a fiber-optic connection through Cincinnati Bell’s Fioptics service.
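The host counts follow directly from the prefix lengths: a Class C network corresponds to a /24 and a Class B network to a /16. The short sketch below uses Python’s standard `ipaddress` module to work out the usable hosts for each. The specific address ranges are assumptions chosen from private address space for illustration, not the lab’s actual subnets.

```python
import ipaddress


def usable_hosts(cidr: str) -> int:
    """Usable IPv4 host addresses in a subnet, excluding the network and broadcast addresses."""
    network = ipaddress.ip_network(cidr)
    return max(network.num_addresses - 2, 0)


if __name__ == "__main__":
    # Hypothetical subnets: a /24 for the office and a /16 for lab tests.
    office = "192.168.1.0/24"
    lab = "172.16.0.0/16"
    print(f"office {office}: {usable_hosts(office)} usable hosts")
    print(f"lab    {lab}: {usable_hosts(lab)} usable hosts")
```

The /16 leaves plenty of room to give each test platform, management interface and virtual machine its own address without renumbering between reviews.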

The next step up in interconnect hardware includes both 10Gb and 40Gb Ethernet supplied by Mellanox, routed through SX1036 and SX1024 switches. This fabric is used to test SMB to large-enterprise NAS and SAN appliances that require bandwidth that can easily saturate standard 1GbE interfaces. We’ve also added support for 10GBase-T devices using Netgear’s latest switches.

For Fibre Channel SANs and storage appliances we have two Brocade 6510 16Gb FC switches, with host servers leveraging Emulex and QLogic 16Gb HBAs.

For high-throughput and latency-sensitive applications, we also have a 56Gb Mellanox InfiniBand fabric used for testing flash appliances and acting as the backbone of new application tests such as our MarkLogic NoSQL Database Benchmark.

Compute Infrastructure

The compute infrastructure in the StorageReview Enterprise Test Lab is split into two primary groups – individual servers used for single-system benchmarks and clustered servers for large-scale applications. We have many physical hosts leveraging Windows and Linux operating systems, including Windows Server 2012 and 2012 R2, as well as CentOS 6.2, 6.3, and 7.0. When it comes to virtualization, we use VMware extensively in our lab, with ESXi 5.5 and ESXi 6.0 used in our testing environments. Currently, every platform leveraged across our benchmarks is Intel Sandy Bridge-class or newer, offering full PCIe 3.0 support.

While we have a number of unmatched servers used for specialized applications, we have large groups of matched-configuration servers. Our newest cluster includes 12 Dell PowerEdge R730 servers equipped with dual E5-2690 v3 CPUs and 256GB of DDR4. We also have a group of 8 Lenovo RD630 servers equipped with dual E5-2690 CPUs and 128GB of DDR3. Finally we have a four-node cluster built around the EchoStreams GridStreams chassis, with each node configured with dual E5-2640 CPUs and 64GB of DDR3. These are used for clustered database applications, large virtualized environments, as well as VDI testing.

As the lab grows and evolves we’ll be sure to update this post with the latest gear and more detail on how we’re using it.