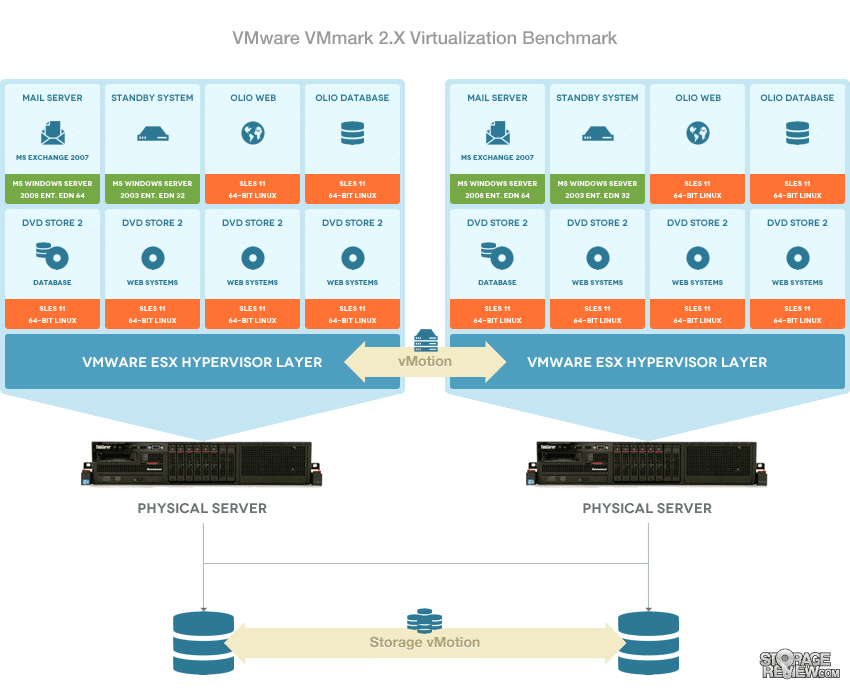

The VMmark Virtualization Benchmark is a comprehensive multi-host datacenter virtualization benchmark designed to mimic the behavior of complex consolidation environments. Legacy benchmarking methodologies designed for single-workload performance and scalability are insufficient for server consolidation, which gathers a collection of workloads onto a virtualization platform consisting of a set of physical servers with access to shared storage and network infrastructure.

The ability to virtualize irregular workloads, load-balance them, and automate workload provisioning alongside a wider range of administrative tasks has changed how servers are used. VMmark therefore focuses on user-centric application performance and accounts for the effect of this infrastructure activity (which can impact CPU, network, storage, and other resources) on overall platform performance.

VMmark 2.x's benchmarking approach uses a series of sub-tests derived from commonly used load-generation tools and commonly initiated virtualization administration tasks. The benchmark implements a tile-based scheme for measuring application performance. The unit of work, known as a tile, is best defined as a collection of VMs running a diverse set of workloads.

VMmark 2.x also executes ubiquitous platform infrastructure workloads such as cloning and deploying VMs, automatic VM load balancing across a datacenter, VM live migration (vMotion), and dynamic datastore relocation (Storage vMotion). These operations complement the conventional application-level workloads. A datacenter's consolidation capacity, which reflects both scalability and individual application performance, is thus measured as the number of tiles that the platform can handle while also supporting the required administrative operations. The overall benchmark score is determined by the performance of each workload within every tile that a multi-host platform can accommodate, combined with the performance of the infrastructure operations.

Fully compliant VMmark benchmark tests are designed to run for a minimum of 3 hours, with workload metrics reported every minute. After a benchmark run, each tile's metrics are computed and aggregated into a score for that tile. To aggregate, the test first normalizes the metrics against a reference system so that dissimilar units such as MB/s and database commits/second can be combined. A geometric mean of the normalized metrics then gives the score for the tile, and all of the per-tile scores are added to create the application workload portion of the final metric. Infrastructure workloads go through a similar process for their portion of the metric. The difference is that the infrastructure workloads are scaled by the size of the underlying server cluster rather than explicitly by the user. As a result, the infrastructure workloads are compiled as a single group and no multi-tile sums are required. The final benchmark score is then computed as a weighted average in which application workloads account for 80% and infrastructure workloads for 20%. These weights reflect the relative contribution of infrastructure and application workloads to overall resource demands.
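The scoring steps described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic as the text describes it (normalize, geometric mean per tile, sum of tiles, 80/20 weighting), not VMware's actual harness code. The function names, metric names, and all numbers in the usage example are hypothetical.

```python
from math import prod

APP_WEIGHT = 0.8    # application workloads, per the description above
INFRA_WEIGHT = 0.2  # infrastructure workloads, per the description above


def geometric_mean(values):
    """Geometric mean of a non-empty sequence of positive numbers."""
    values = list(values)
    return prod(values) ** (1.0 / len(values))


def normalize(raw, reference):
    """Divide each raw metric by the reference system's value for that metric."""
    return {name: raw[name] / reference[name] for name in raw}


def tile_score(raw, reference):
    """Score for one tile: geometric mean of its normalized workload metrics."""
    return geometric_mean(normalize(raw, reference).values())


def benchmark_score(tiles, app_reference, infra_raw, infra_reference):
    """Weighted combination of summed tile scores and one infrastructure score."""
    app = sum(tile_score(tile, app_reference) for tile in tiles)
    # Infrastructure workloads form a single group, so there is no per-tile sum.
    infra = geometric_mean(normalize(infra_raw, infra_reference).values())
    return APP_WEIGHT * app + INFRA_WEIGHT * infra


if __name__ == "__main__":
    # Hypothetical metric names and values, for illustration only.
    app_ref = {"mail_actions": 300.0, "olio_ops": 4000.0, "ds2_orders": 2000.0}
    tile = {"mail_actions": 330.0, "olio_ops": 4400.0, "ds2_orders": 2100.0}
    infra_ref = {"vmotion": 10.0, "svmotion": 5.0, "deploy": 4.0}
    infra = {"vmotion": 11.0, "svmotion": 5.5, "deploy": 4.2}
    print(benchmark_score([tile, tile], app_ref, infra, infra_ref))
```

Because the per-tile scores are summed, a platform that sustains more tiles at the same per-tile performance earns a proportionally higher application score, which is how the metric captures consolidation capacity.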

Running the VMmark Virtualization Benchmark comes with serious hardware requirements, which only increase as the number of tiles under test goes up.

VMmark Virtualization Benchmark Minimum Specifications

- ESXi Virtual Server Hosts (vMotion compatible)
  - 2-host cluster (homogeneous systems not required) with the following:
    - 4 logical CPUs per server
    - 27GB of memory
    - 320GB of shared storage
- vCenter Server installed on a separate, dedicated server
- Client System Per Tile
  - Recommended minimum: two CPU cores
  - 4GB of RAM
  - 15GB of available local disk space
- vMotion networking (10 Gb/s network recommended)
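Since the client requirement is stated per tile, the client-side footprint grows with the tile count. The sketch below assumes one client system per tile and simple linear scaling of the listed minimums. The class and function names are hypothetical, and real deployments may need headroom beyond these figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClientRequirement:
    cpu_cores: int
    ram_gb: int
    disk_gb: int


# Per-tile client minimums taken from the list above.
PER_TILE_CLIENT = ClientRequirement(cpu_cores=2, ram_gb=4, disk_gb=15)


def client_resources(tiles: int) -> ClientRequirement:
    """Total client-side minimums for a run, assuming one client per tile."""
    if tiles < 1:
        raise ValueError("tiles must be at least 1")
    return ClientRequirement(
        cpu_cores=PER_TILE_CLIENT.cpu_cores * tiles,
        ram_gb=PER_TILE_CLIENT.ram_gb * tiles,
        disk_gb=PER_TILE_CLIENT.disk_gb * tiles,
    )


if __name__ == "__main__":
    print(client_resources(4))
```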

VMware's VMmark 2.5 utilizes a wide range of software and operating systems to reflect a real-world virtualized environment. Below is an overview of the VMs included in each VMmark tile and the applications and operating systems they use.

VMmark 2.5 Tile Configuration

- Client
  - Microsoft Windows Server 2008 Enterprise Edition R2, 64-bit
  - VMmark 2.x harness
  - STAF framework and STAX execution engine
  - LoadGen
  - Microsoft Outlook 2007 (standalone or included in Microsoft Office 2007)
  - Microsoft Exchange 2007 management tools
  - Cygwin
  - A Java JDK
  - Rain Workload Toolkit
- Mailserver
  - Microsoft Windows Server 2008 Enterprise Edition R2, 64-bit
  - Microsoft Exchange 2007
  - 1000 heavy profile users
- Standby
  - Microsoft Windows Server 2003 SP2 Enterprise Edition, 32-bit
- OlioDB
  - SUSE Linux Enterprise Server 11, 64-bit
  - Olio database w/ MySQL database
- OlioWeb
  - SUSE Linux Enterprise Server 11, 64-bit
  - Olio workload
- DS2DB
  - SUSE Linux Enterprise Server 11, 64-bit
  - MySQL database
- DS2WebA
  - SUSE Linux Enterprise Server 11, 64-bit
  - Apache 2.2 Web server
  - DS2 Web tier A
- DS2WebB
  - SUSE Linux Enterprise Server 11, 64-bit
  - Apache 2.2 Web server
  - DS2 Web tier B
- DS2WebC
  - SUSE Linux Enterprise Server 11, 64-bit
  - Apache 2.2 Web server
  - DS2 Web tier C

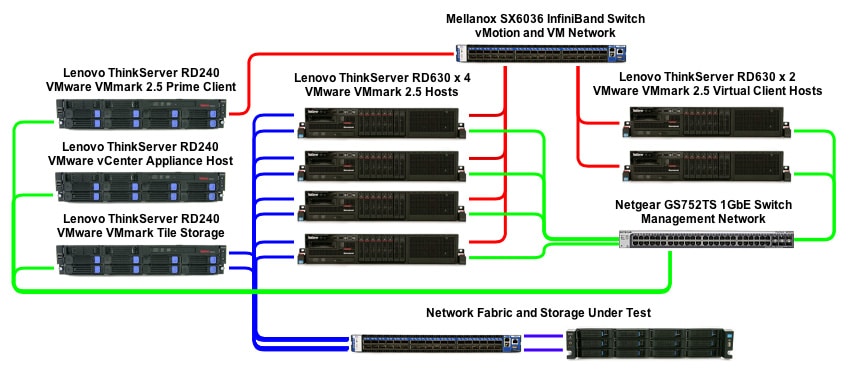

VMware VMmark Testing Environment

Storage solutions are tested with the VMware VMmark benchmark in the StorageReview Enterprise Test Lab utilizing multiple servers connected over a high-speed network. We utilize Dell PowerEdge R730s for different segments of the VMware VMmark Environment, including four for the VMmark 2.5.1 hosts, two for hosting multiple virtual clients, one operating as a physical prime client, one running a VMware vCenter Appliance, and one as a temporary staging ground for each tile leveraged in our VMmark test. The PowerEdge line also offers excellent hardware compatibility, which is an absolute must as we incorporate different forms of storage and networking technology into our testing platform. As with our other testing platforms, our goal is to show the realistic performance customers can expect from mid-range server platforms, rather than the top-spec servers generally leveraged in most competitive benchmarks. Another advantage of this 4-host VMmark platform is that we can leverage more host-side resources in aggregate than a top-spec 2-host setup, putting the stress on the storage product under test without becoming CPU-bound.

For local storage in this VMmark environment, we went with a cost- and power-efficient SD boot card layout. These SD cards are used as hypervisor boot drives on both the VM server and virtual client sides of our VMmark testing layout. This removes the cost of an SSD or HDD from each server in this environment and cuts down on power consumption. Our Windows Server 2008 R2-based virtual client VMs reside on storage presented by a DotHill Ultra48 SAN, running off a pool of 10K HDDs with SSD tiering. This helps keep the client hosts from becoming I/O bound during the benchmark.

Mellanox 56Gb InfiniBand interconnects were used to provide the highest performance and greatest network efficiency on each ESXi vSphere host to ensure that the VMs connected are not network-limited. We use one single-port Mellanox ConnectX-3 NIC operating in IPoIB mode, with multiple VM networks running on a single vSwitch. This alleviates any network constraints and reduces the complexity of the environment in our multi-use testing infrastructure.

We are constantly upgrading our lab's networking infrastructure and enterprise testing equipment so that our reviews use the best and fastest hardware available and keep pace with changing technology.

First Generation VMmark Platform

First Generation VMware VMmark Virtualization Benchmark Equipment

- Lenovo ThinkServer RD630 VMware ESXi vSphere 4-node Cluster
  - Eight Intel E5-2650 CPUs for 127GHz in cluster (Two per node, 2.0GHz, 8-cores, 20MB Cache)
  - 512GB RAM (128GB per node, 8GB x 16 DDR3, 64GB per CPU)
  - 400GB OCZ Talos 2 SAS SSD x 4 (via LSI 9207-8i)
  - 4 x Mellanox ConnectX-3 InfiniBand Adapter (vSwitch for vMotion and VM network)
  - 4 x QLogic QLE2672 16Gb FC Adapter
  - VMware ESXi vSphere 5.1 / Enterprise Plus 8-CPU
- Lenovo ThinkServer RD630 VMware ESXi vSphere Virtual Client Hosts (2)
  - Four Intel E5-2650 CPUs (Two per node, 2.0GHz, 8-cores, 20MB Cache)
  - 256GB RAM (128GB per node, 8GB x 16 DDR3, 64GB per CPU)
  - 400GB OCZ Talos 2 SAS SSD x 2 (via LSI 9207-8i)
  - 2 x Mellanox ConnectX-3 InfiniBand Adapter
  - VMware ESXi vSphere 5.1 / Enterprise Plus 4-CPU
- Lenovo ThinkServer RD240 (Prime Client)
  - Two Intel Xeon X5650 CPUs (2.66GHz, 6-cores, 12MB Cache)
  - 16GB RAM (8GB x 4 DDR3, 8GB per CPU)
  - 600GB 10K SAS HDD in RAID1 (via LSI 9260-8i)
  - Mellanox ConnectX-3 InfiniBand Adapter
  - Windows Server 2008 R2 64-bit
- Lenovo ThinkServer RD240 (vCenter Appliance Host)
  - Two Intel Xeon X5650 CPUs (2.66GHz, 6-cores, 12MB Cache)
  - 24GB RAM (8GB x 2 DDR3, 4GB x 2, 12GB per CPU)
  - Hosted on shared SSD iSCSI LUN (125GB Thin Provisioned)
  - VMware ESXi 5.1 vSphere / Enterprise Plus 2-CPU
- Lenovo ThinkServer RD240 (VMmark Tile Storage)
  - Two Intel Xeon X5650 CPUs (2.66GHz, 6-cores, 12MB Cache)
  - 32GB RAM (8GB x 4 DDR3, 16GB per CPU)
  - 8TB x 8 WD RE4 SAS RAID6 + Hot Spare (20TB usable via LSI 9260-8i)
  - VMware ESXi 5.1 vSphere / Enterprise Plus 2-CPU

Second Generation VMware VMmark Virtualization Benchmark Equipment

- Dell PowerEdge R730 VMware ESXi vSphere 4-node Cluster
  - Eight Intel E5-2690 v3 CPUs for 249GHz in cluster (Two per node, 2.6GHz, 12-cores, 30MB Cache)
  - 1TB RAM (256GB per node, 16GB x 16 DDR4, 128GB per CPU)
  - SD Card Boot (Lexar 16GB)
  - 4 x Mellanox ConnectX-3 InfiniBand Adapter (vSwitch for vMotion and VM network)
  - 4 x Emulex 16Gb dual-port FC HBA
  - 4 x Emulex 10GbE dual-port NIC
  - VMware ESXi vSphere 6.0 / Enterprise Plus 8-CPU
- Dell PowerEdge R730 VMware ESXi vSphere Virtual Client Hosts (2)
  - Four Intel E5-2690 v3 CPUs for 124GHz in cluster (Two per node, 2.6GHz, 12-cores, 30MB Cache)
  - 512GB RAM (256GB per node, 16GB x 16 DDR4, 128GB per CPU)
  - SD Card Boot (Lexar 16GB)
  - 2 x Mellanox ConnectX-3 InfiniBand Adapter (vSwitch for vMotion and VM network)
  - 2 x Emulex 16Gb dual-port FC HBA
  - 2 x Emulex 10GbE dual-port NIC
  - VMware ESXi vSphere 6.0 / Enterprise Plus 4-CPU

- Dell PowerEdge R730 (Prime Client)
  - Two Intel E5-2603 v3 CPUs (1.6GHz, 6-cores, 15MB Cache)
  - 32GB RAM (4GB x 8 DDR3, 16GB per CPU)
  - 960GB SSD Boot Drive
  - Mellanox ConnectX-3 InfiniBand Adapter
  - Windows Server 2008 R2 SP1 64-bit

- Dell PowerEdge R720 (vCenter 6.0 Appliance Host)
  - Two Intel E5-2690 v2 CPUs (3.0GHz, 10-cores, 25MB Cache)
  - 64GB RAM (8GB x 8 DDR3, 32GB per CPU)
  - Hosted on shared iSCSI LUN
  - VMware ESXi 6.0 vSphere / Enterprise Plus 2-CPU
- Mellanox SX6036 InfiniBand Switch
  - 36 FDR (56Gb/s) ports
  - 4Tb/s aggregate switching capacity
- Mellanox SX1036 10/40GbE Switch
  - 36 40GbE ports
- Netgear ProSafe M7100 10Gbase-t Switch
  - 24 10Gbase-t RJ45 ports
  - 480Gb/s aggregate switching capacity
- Brocade 6510 16Gb FC Switch
  - 48 16Gb FC ports
  - 768Gb/s aggregate switching capacity
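The aggregate clock figures quoted for the second-generation hosts appear to be the product of CPU count, cores per CPU, and base clock, truncated to whole GHz. That reading is an assumption based on the listed numbers, not a documented formula, and the helper name below is hypothetical.

```python
def aggregate_ghz(cpus: int, cores_per_cpu: int, base_ghz: float) -> float:
    """Nominal aggregate clock: sockets x cores per socket x base frequency."""
    return cpus * cores_per_cpu * base_ghz


if __name__ == "__main__":
    # Second-generation 4-node cluster: eight 12-core CPUs at 2.6GHz.
    print(int(aggregate_ghz(8, 12, 2.6)))
    # Second-generation virtual client hosts: four 12-core CPUs at 2.6GHz.
    print(int(aggregate_ghz(4, 12, 2.6)))
```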

All VMmark result folders are available for download upon request. We normalize results against a baseline of 1, defined by the 1-tile raw scores of the Synology RackStation RS10613xs+ with ten 15K SAS HDDs in RAID0: 1.10 Application and 1.11 VMmark2.
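As a minimal sketch of that normalization, assuming it is a simple division of each raw score by the corresponding baseline raw score (the dictionary keys and function name here are hypothetical):

```python
# Baseline raw scores from the Synology RackStation RS10613xs+ 1-tile run.
BASELINE_RAW = {"application": 1.10, "vmmark2": 1.11}


def normalize_to_baseline(raw_score: float, metric: str) -> float:
    """Express a raw score relative to the baseline, which maps to 1.0."""
    return raw_score / BASELINE_RAW[metric]


if __name__ == "__main__":
    # The baseline itself normalizes to 1.0 by construction.
    print(normalize_to_baseline(1.10, "application"))
```

Under this assumption, a normalized value above 1 means the device under test outscored the baseline array on that metric.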